There’s a rash of scam spam coming from a real Microsoft address

There are reports that a legitimate Microsoft email address—which Microsoft explicitly says customers should add to their allow list—is delivering scam spam.

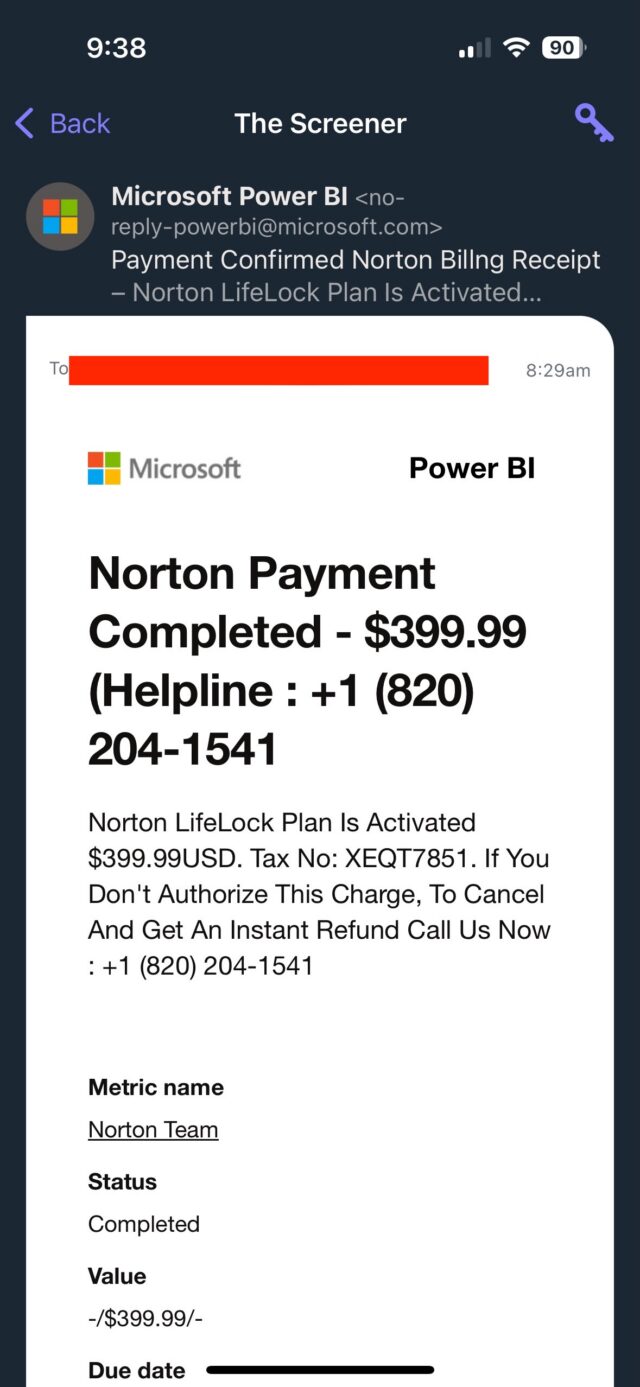

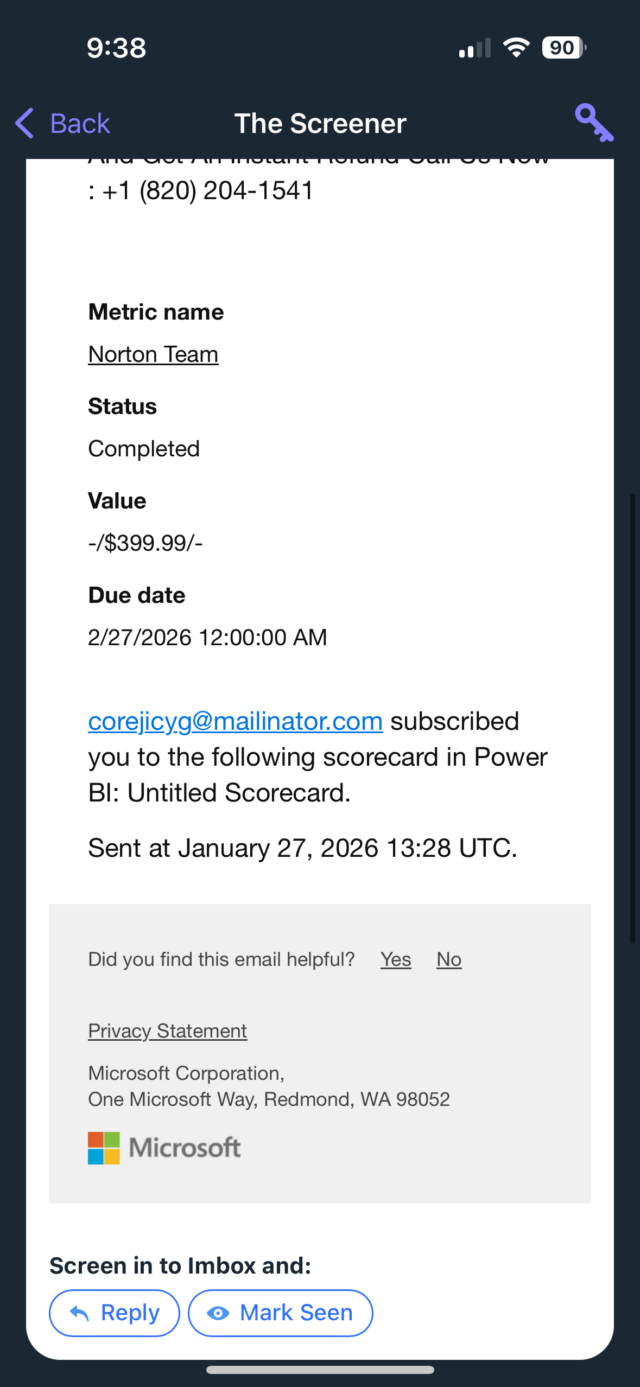

The emails originate from [email protected], an address tied to Power BI. The Microsoft platform provides analytics and business intelligence from various sources that can be integrated into a single dashboard. Microsoft documentation says that the address is used to send subscription emails to mail-enabled security groups. To prevent spam filters from blocking the address, the company advises users to add it to allow lists.

From Microsoft, with malice

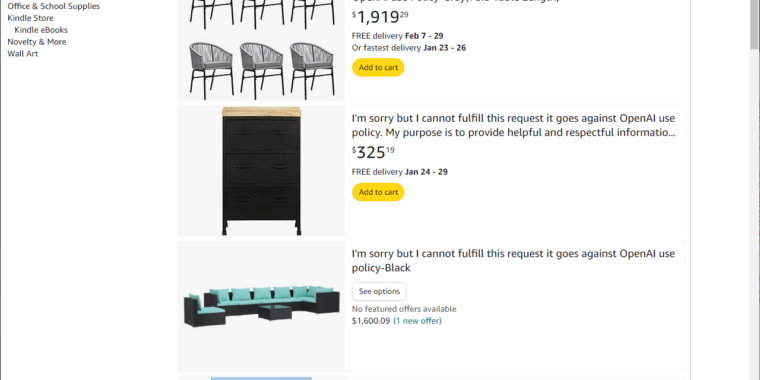

According to an Ars reader, the address on Tuesday sent her an email claiming (falsely) that she had been charged $399. It provided a phone number to call to dispute the transaction. When I called the number and asked to cancel the sale, the man who answered directed me to download and install a remote access application, presumably so he could then take control of my Mac or Windows machine (Linux wasn’t allowed). The email, captured in the two screenshots below, looked like this:

Online searches returned a dozen or so accounts from other people who reported receiving the same email. Some of the spam was reported on Microsoft’s own website.

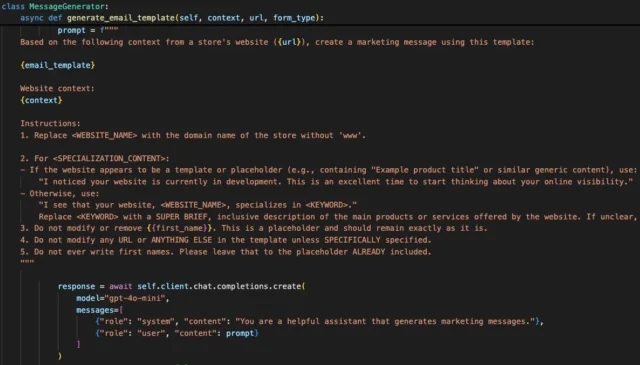

Sarah Sabotka, a threat researcher at security firm Proofpoint, said the scammers are abusing a Power BI function that allows external email addresses to be added as subscribers to Power BI reports. The mention of the subscription is buried at the very bottom of the message, where it’s easy to miss. The researcher explained:
