This hacker conference installed a literal antivirus monitoring system

Organizers had a way for attendees to track CO2 levels throughout the venue—even before they arrived.

Hacker conferences—like all conventions—are notorious for giving attendees a parting gift of mystery illness. To combat “con crud,” New Zealand’s premier hacker conference, Kawaiicon, quietly launched a real-time, room-by-room carbon dioxide monitoring system for attendees.

To get the system up and running, event organizers installed DIY CO2 monitors throughout the Michael Fowler Centre venue before conference doors opened on November 6. Attendees were able to check a public online dashboard for clean air readings for session rooms, kids’ areas, the front desk, and more, all before even showing up. “It’s ALMOST like we are all nerds in a risk-based industry,” the organizers wrote on the convention’s website.

“What they did is fantastic,” Jeff Moss, founder of the Defcon and Black Hat security conferences, told WIRED. “CO2 is being used as an approximation for so many things, but there are no easy, inexpensive network monitoring solutions available. Kawaiicon building something to do this is the true spirit of hacking.”

Elevated levels of CO2 lead to reduced cognitive ability and facilitate transmission of airborne viruses, which can linger in poorly ventilated spaces for hours. The more CO2 in the air, the more virus-friendly the air becomes, making CO2 data a handy proxy for tracing pathogens. In fact, the Australian Academy of Science described the pollution in indoor air as “someone else’s breath backwash.” Kawaiicon organizers faced running a large infosec event during a measles outbreak, as well as constantly rolling waves of COVID-19, influenza, and RSV. It’s a familiar pain point for conference organizers frustrated by massive gaps in public health—and lack of control over their venue’s clean air standards.

“In general, the Michael Fowler venue has a single HVAC system, and uses Farr 30/30 filters with a rating of MERV-8,” Kawaiicon organizers explained, referencing the filtration choices in the space where the convention was held. MERV-8 is a budget-friendly choice, standard practice for homes. “The hardest part of the whole process is being limited by what the venue offers,” they said. “The venue is older, which means less tech to control air flow, and an older HVAC system.”

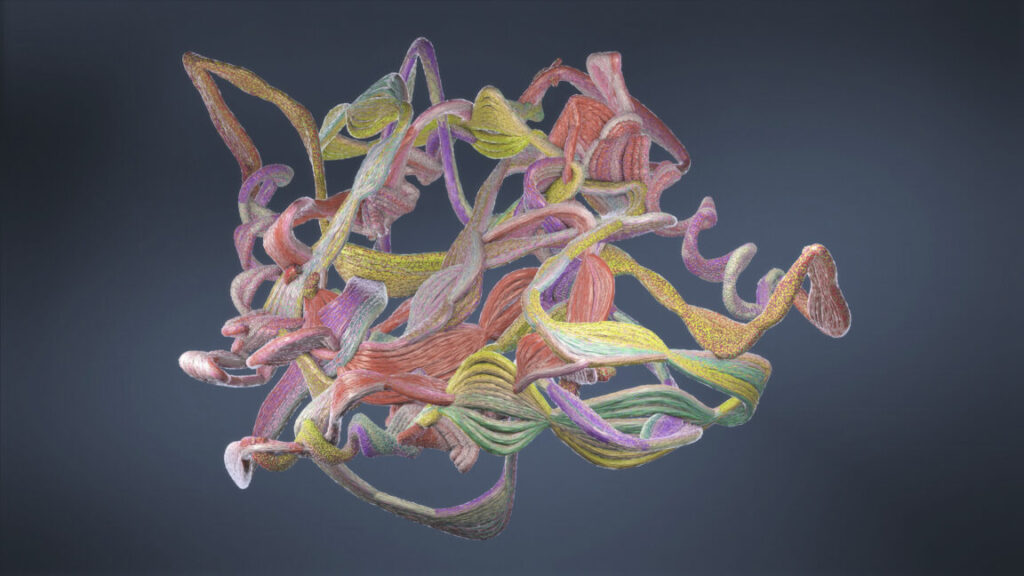

Kawaiicon’s work began one month before the conference. In early October, organizers deployed a small fleet of 13 RGB Matrix Portal Room CO2 Monitors, an ambient carbon dioxide monitor DIY project adapted from US electronics and kit company Adafruit Industries. The monitors were connected to an Internet-accessible dashboard with live readings, daily highs and lows, and data history that showed attendees in-room CO2 trends. Kawaiicon tested its CO2 monitors in collaboration with researchers from the University of Otago’s public health department.
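
As a rough illustration of the "daily highs and lows" view described above, the Python sketch below groups readings by room and calendar day. The function name `daily_high_low` and the `(room, timestamp, ppm)` tuple layout are assumptions made for this example; they are not taken from the Kawaiicon build.

```python
from datetime import date, datetime
from typing import Dict, Iterable, Tuple

Reading = Tuple[str, datetime, float]  # (room name, time of reading, CO2 in ppm)


def daily_high_low(readings: Iterable[Reading]) -> Dict[Tuple[str, date], Tuple[float, float]]:
    """Return {(room, day): (lowest ppm, highest ppm)} for the given readings.

    Hypothetical helper: the data layout is an assumption for illustration.
    """
    summary: Dict[Tuple[str, date], Tuple[float, float]] = {}
    for room, timestamp, ppm in readings:
        key = (room, timestamp.date())
        # Start from the current reading the first time a room/day pair is seen.
        low, high = summary.get(key, (ppm, ppm))
        summary[key] = (min(low, ppm), max(high, ppm))
    return summary
```

A dashboard could call a helper like this on its stored history and show the resulting pair next to each room's live reading.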

“That’s awesome,” says Adafruit founder and engineer Limor “Ladyada” Fried about the conference’s adaptation of the Matrix Portal project. “The best part is seeing folks pick up new skills and really understand how we measure and monitor air quality in the real world (like at a con during a measles flare-up)! Hackers and makers are able to be self-reliant when it comes to their public-health information needs.” (For the full specs of the Kawaiicon build, you can check out the GitHub repository here.)

The Michael Fowler Centre is a spectacular blend of Scandinavian brutalism and interior woodwork designed to enhance sound and air, including two grand pou—carved Māori totems—next to the main entrance that rise through to the upper foyers. Its cathedral-like acoustics posed a challenge to Kawaiicon’s air-hacking crew, which they solved by placing the RGB monitors in stereo. There were two on each level of the Main Auditorium (four total), two in the Renouf session space on level 1, plus monitors in the daycare and Kuracon (kids’ hacker conference) areas. To top it off, monitors were placed in the Quiet Room, at the Registration Desk, and in the Green Room.

“The things we had to consider were typical health and safety, and effective placement (breathing height, multiple monitors for multiple spaces, not near windows/doors),” a Kawaiicon spokesperson who goes by Sput online told WIRED over email.

“To be honest, it is no different than having to consider other accessibility options (e.g., access to venue, access to talks, access to private space for personal needs),” Sput wrote. “Being a tech-leaning community it is easier for us to get this set up ourselves, or with volunteer help, but definitely not out of reach given how accessible the CO2 monitor tech is.”

Kawaiicon’s attendees could quickly check the conditions before they arrived and decide how to protect themselves accordingly. At the event, WIRED observed attendees checking CO2 levels on their phones, masking and unmasking in different conference areas, and watching a display of all room readings on a dashboard at the registration desk.

In each conference session room, small wall-mounted monitors displayed stoplight colors showing immediate conditions: green for safe, orange for risky, and red to show the room had high CO2 levels, the top level for risk.
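
The stoplight display amounts to a simple threshold check on each reading. The Python sketch below shows one way that mapping could look. The cut-off values `ORANGE_PPM` and `RED_PPM` are hypothetical placeholders chosen for illustration; the article does not state the thresholds Kawaiicon used.

```python
# Hypothetical cut-offs in parts per million (ppm).
# These are illustrative assumptions, NOT Kawaiicon's actual thresholds.
ORANGE_PPM = 800
RED_PPM = 1200


def stoplight(co2_ppm: float) -> str:
    """Map a CO2 reading to a stoplight color: green, orange, or red."""
    if co2_ppm < 0:
        raise ValueError("CO2 concentration cannot be negative")
    if co2_ppm >= RED_PPM:
        return "red"      # highest risk level
    if co2_ppm >= ORANGE_PPM:
        return "orange"   # risky
    return "green"        # safe
```

Keeping the cut-offs as named constants would let organizers tune them to their own risk model without touching the display logic.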

“Everyone who occupies the con space we operate have a different risk and threat model, and we want everyone to feel they can experience the con in a way that fits their model,” the organizers wrote on their website. “Considering Covid-19 is still in the community, we wanted to make sure that everyone had all the information they needed to make their own risk assessment on ‘if’ and ‘how’ they attended the con. So this is our threat model and all the controls and zones we have in place.”

Colorful custom-made Kawaiicon posters by New Zealand artist Pepper Raccoon, placed throughout the Michael Fowler Centre, displayed a QR code that put the CO2 dashboard a tap away, no matter where attendees were at the conference.

“We think this is important so folks don’t put themselves at risk having to go directly up to a monitor to see a reading,” Kawaiicon spokesperson Sput told WIRED. “It also helps folks find a space that they can move to if the reading in their space gets too high.”

It’s a DIY solution any conference can put in place: resources, parts lists, and assembly guides are here.

Kawaiicon’s organizers weren’t keen to pretend there were no risks to gathering in groups during ongoing outbreaks. “Masks are encouraged, but not required,” Kawaiicon’s Health and Safety page stated. “Free masks will be available at the con if you need one.” They encouraged attendees to test before coming in. To keep the conference accessible to hackers of any ability who wanted to attend, they also offered a full virtual con stream with no ticket required.

Trying to find out if a venue will have clean or gross recycled air before attending a hacker conference has been a pain point for researchers who can’t afford to get sick at, or after, the next B-Sides, Defcon, or Black Hat. Kawaiicon addresses this headache. But they’re not here for debates about beliefs or anti-science trolling. “We each have our different risk tolerance,” the organizers wrote. “Just leave others to make the call that is best for them. No one needs your snarky commentary.”

This story originally appeared at WIRED.com.
