The Starliner spacecraft has started to emit strange noises

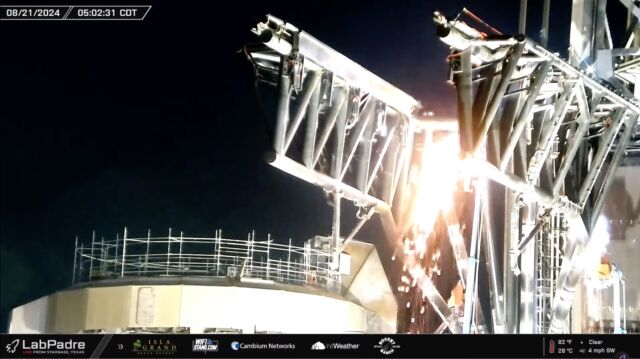

Boeing’s Starliner spacecraft is seen docked at the International Space Station on June 13.

On Saturday NASA astronaut Butch Wilmore noticed some strange noises emanating from a speaker inside the Starliner spacecraft.

“I’ve got a question about Starliner,” Wilmore radioed down to Mission Control, at Johnson Space Center in Houston. “There’s a strange noise coming through the speaker … I don’t know what’s making it.”

Wilmore said he was not sure if there was some oddity in the connection between the station and the spacecraft causing the noise, or something else. He asked the flight controllers in Houston to see if they could listen to the audio inside the spacecraft. A few minutes later, Mission Control radioed back that they were linked via “hardline” to listen to audio inside Starliner, which has now been docked to the International Space Station for nearly three months.

Wilmore, apparently floating in Starliner, then put his microphone up to the speaker inside the spacecraft. Shortly thereafter, there was an audible pinging that was quite distinctive. “Alright Butch, that one came through,” Mission Control radioed up to Wilmore. “It was kind of like a pulsing noise, almost like a sonar ping.”

“I’ll do it one more time, and I’ll let y’all scratch your heads and see if you can figure out what’s going on,” Wilmore replied. The odd, sonar-like audio then repeated itself. “Alright, over to you. Call us if you figure it out.”

A space oddity

A recording of this audio, and Wilmore’s conversation with Mission Control, was captured and shared by a Michigan-based meteorologist named Rob Dale.

It was not immediately clear what was causing the odd and somewhat eerie noise. As Starliner flies to the space station, it maintains communications with the station via a radio frequency system. Once docked, however, there is a hardline umbilical that carries audio.

Astronauts notice such oddities in space from time to time. For example, during China’s first human spaceflight in 2003, astronaut Yang Liwei said he heard what sounded like an iron bucket being knocked by a wooden hammer while in orbit. Later, scientists realized the noise was caused by small deformations in the spacecraft resulting from a difference in pressure between its inner and outer walls.

This weekend’s sonar-like noises most likely have a benign cause, and Wilmore certainly did not sound frazzled. But the odd noises are worth noting given the challenges that Boeing and NASA have had with the debut crewed flight of Starliner, including substantial helium leaks in flight and failing thrusters. NASA announced a week ago that, due to uncertainty about the flyability of Starliner, it would come home without its original crew of Wilmore and Suni Williams.

Starliner is now due to fly back autonomously to Earth on Friday, September 6. Wilmore and Williams will return to Earth next February, flying aboard a Crew Dragon spacecraft scheduled to launch with just two astronauts later this month.
