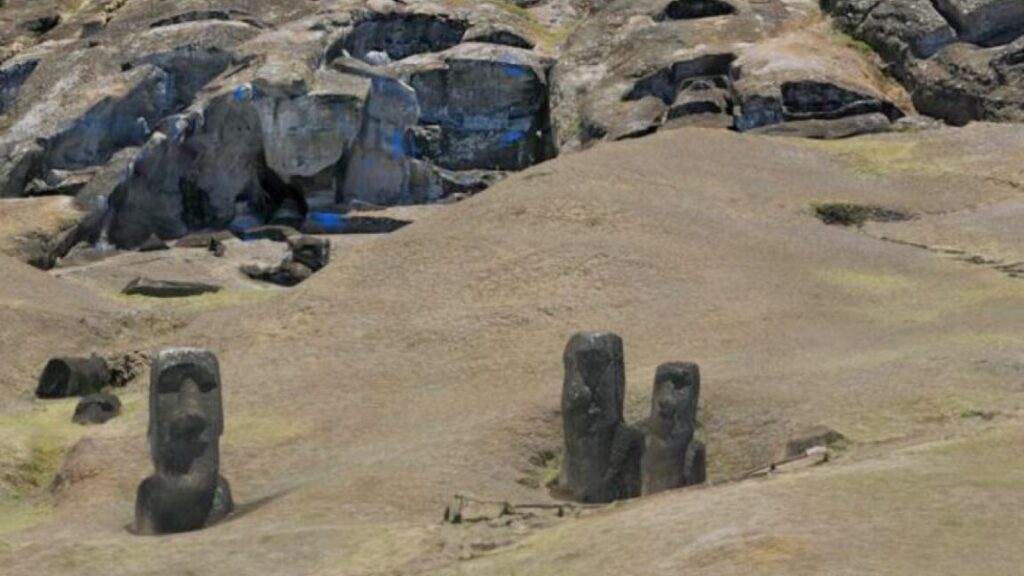

3D model shows small clans created Easter Island statues

Credit: ArcGIS

Easter Island is famous for its giant monumental statues, called moai, built some 800 years ago. The volcanic rock used for the moai came from a quarry site called Rano Raraku. Archaeologists have created a high-resolution interactive 3D model of the quarry site to learn more about the processes used to create the moai. (The full interactive model is available to explore online.) According to a paper published in the journal PLoS ONE, the model shows that there were numerous independent groups, probably family clans, that created the moai, rather than a centralized management system.

“You can see things that you couldn’t actually see on the ground. You can see tops and sides and all kinds of areas that just would never be able to walk to,” said co-author Carl Lipo of Binghamton University. “We can say, ‘Here, go look at it.’ If you want to see the different kinds of carving, fly around and see stuff there. We’re documenting something that really has needed to be documented, but in a way that’s really comprehensive and shareable.”

Lipo is one of the foremost experts on the Easter Island moai. In October, we reported on Lipo’s experimental confirmation—based on 3D modeling of the physics and new field tests to re-create that motion—that Easter Island’s people transported the statues in a vertical position, with workers using ropes to essentially “walk” the moai onto their platforms. To explain the presence of so many moai, the assumption has been that the island was once home to tens of thousands of people.

Lipo’s latest field trials showed that the “walking” method can be accomplished with far fewer workers: 18 people, four on each lateral rope and 10 on a rear rope, to achieve the side-to-side walking motion. They were efficient enough in coordinating their efforts to move the statue forward 100 meters in just 40 minutes. That’s because the method operates on basic pendulum dynamics, which minimizes friction between the base and the ground. It’s also a technique that exploits the gradual build-up of amplitude, suggesting a sophisticated understanding of resonance principles.
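For readers who want to check the numbers, the figures quoted above can be worked through in a few lines of Python. This is only a back-of-the-envelope sketch: the constant and function names are our own, the count of two lateral ropes is inferred from the stated total of 18 people, and the average speed assumes steady progress over the full 40 minutes, which the trial may not have had.

```python
# Figures quoted in the article for the "walking" field trial.
PEOPLE_PER_LATERAL_ROPE = 4
LATERAL_ROPES = 2          # assumption: inferred from the stated total of 18
PEOPLE_ON_REAR_ROPE = 10
DISTANCE_M = 100           # meters covered in the trial
DURATION_MIN = 40          # minutes taken


def crew_size() -> int:
    """Total number of rope handlers implied by the quoted breakdown."""
    return LATERAL_ROPES * PEOPLE_PER_LATERAL_ROPE + PEOPLE_ON_REAR_ROPE


def average_speed_m_per_min() -> float:
    """Average forward speed, assuming steady progress for the whole trial."""
    return DISTANCE_M / DURATION_MIN


def average_speed_km_per_h() -> float:
    """The same average speed converted to kilometers per hour."""
    return average_speed_m_per_min() * 60 / 1000


if __name__ == "__main__":
    print(f"Crew size: {crew_size()} people")
    print(f"Average speed: {average_speed_m_per_min()} m/min")
    print(f"Average speed: {average_speed_km_per_h():.2f} km/h")
```

The breakdown does add up to 18 people, and the pace works out to 2.5 meters per minute, or roughly 0.15 kilometers per hour.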
