Rocket Report: Channeling the future at Wallops; SpaceX recovers rocket wreckage

China’s Space Pioneer seems to be back on track a year after an accidental launch.

A SpaceX Falcon 9 rocket carrying a payload of 24 Starlink Internet satellites soars into space after launching from Vandenberg Space Force Base, California, shortly after sunset on July 18, 2025. This image was taken in Santee, California, approximately 250 miles (400 kilometers) away from the launch site. Credit: Kevin Carter/Getty Images

Welcome to Edition 8.04 of the Rocket Report! The Pentagon’s Golden Dome missile defense shield will be a lot of things. Along with new sensors, command and control systems, and satellites, Golden Dome will require a lot of rockets. The pieces of the Golden Dome architecture operating in orbit will ride to space on commercial launch vehicles. And Golden Dome’s space-based interceptors will essentially be designed as flying fuel tanks with rocket engines. This shouldn’t be overlooked, and that’s why we include a couple of entries discussing Golden Dome in this week’s Rocket Report.

As always, we welcome reader submissions. Each report will include information on small-, medium-, and heavy-lift rockets, as well as a quick look ahead at the next three launches on the calendar.

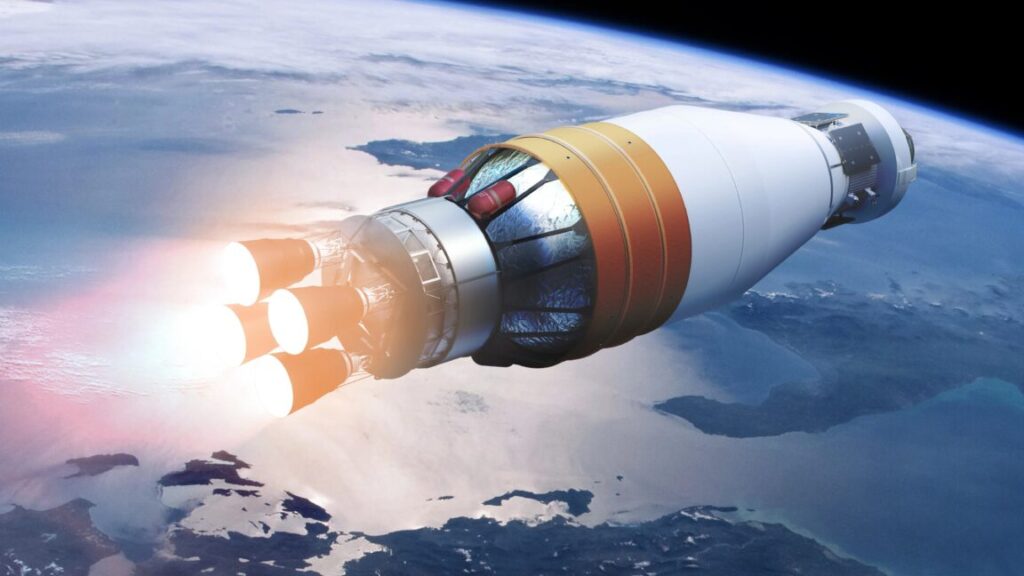

Space-based interceptors are a real challenge. The newly installed head of the Pentagon’s Golden Dome missile defense shield knows the clock is ticking to show President Donald Trump some results before the end of his term in the White House, Ars reports. Gen. Michael Guetlein identified command-and-control and the development of space-based interceptors as two of the most pressing technical challenges for Golden Dome. He believes the command-and-control problem can be “overcome in pretty short order.” The space-based interceptor piece of the architecture is a different story.

Proven physics, unproven economics … “I think the real technical challenge will be building the space-based interceptor,” Guetlein said. “That technology exists. I believe we have proven every element of the physics that we can make it work. What we have not proven is, first, can I do it economically, and then second, can I do it at scale? Can I build enough satellites to get after the threat? Can I expand the industrial base fast enough to build those satellites? Do I have enough raw materials, etc.?” Military officials haven’t said how many space-based interceptors will be required for Golden Dome, but outside estimates put the number in the thousands.

One big defense prime is posturing for Golden Dome. Northrop Grumman is conducting ground-based testing related to space-based interceptors as part of a competition for that segment of the Trump administration’s Golden Dome missile-defense initiative, The War Zone reports. Kathy Warden, Northrop Grumman’s CEO, highlighted the company’s work on space-based interceptors, as well as broader business opportunities stemming from Golden Dome, during a quarterly earnings call this week. Warden identified Northrop’s work in radars, drones, and command-and-control systems as potentially applicable to Golden Dome.

But here’s the real news … “It will also include new innovation, like space-based interceptors, which we’re testing now,” Warden continued. “These are ground-based tests today, and we are in competition, obviously, so not a lot of detail that I can provide here.” Warden declined to respond directly to a question about how the space-based interceptors Northrop Grumman is developing now will actually defeat their targets. (submitted by Biokleen)

Trump may slash environmental rules for rocket launches. The Trump administration is considering slashing rules meant to protect the environment and the public during commercial rocket launches, changes that companies like Elon Musk’s SpaceX have long sought, ProPublica reports. A draft executive order being circulated among federal agencies, and viewed by ProPublica, directs Secretary of Transportation Sean Duffy to “use all available authorities to eliminate or expedite” environmental reviews for launch licenses. It could also, in time, require states to allow more launches or even more launch sites along their coastlines.

Getting political at the FAA … The order is a step toward the rollback of federal oversight that Musk and others have pushed for; Musk has fought bitterly with the Federal Aviation Administration over his space operations. Commercial rocket launches have grown exponentially more frequent in recent years. In addition to slashing environmental rules, the draft executive order would make the head of the FAA’s Office of Commercial Space Transportation a political appointee. This is currently a civil servant position, but the last head of the office took a voluntary separation offer earlier this year.

There’s a SPAC for that. An unproven small launch startup is partnering with a severely depleted SPAC trust to do the impossible: go public in a deal they say will be valued at $400 million, TechCrunch reports. Innovative Rocket Technologies Inc., or iRocket, is set to merge with a Special Purpose Acquisition Company, or SPAC, founded by former Commerce Secretary Wilbur Ross. But the most recent regulatory filings by this SPAC showed it was in a tenuous financial position last year, with just $1.6 million held in trust. Likewise, iRocket isn’t flooded with cash. The company has raised only a few million dollars in venture funding, a fraction of what would be needed to develop and test the company’s small orbital-class rocket, named Shockwave.

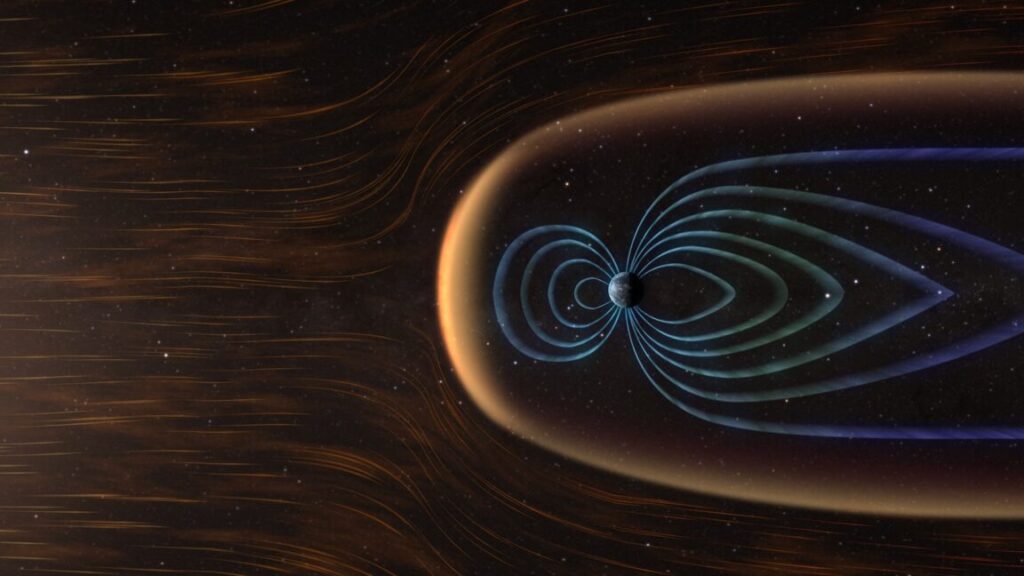

SpaceX traces a path to orbit for NASA. Two NASA satellites soared into orbit from California aboard a SpaceX Falcon 9 rocket Wednesday, commencing a $170 million mission to study a phenomenon of space physics that has eluded researchers since the dawn of the Space Age, Ars reports. The twin spacecraft are part of the NASA-funded TRACERS mission, which will spend at least a year measuring plasma conditions in narrow regions of Earth’s magnetic field known as polar cusps. As the name suggests, these regions are located over the poles. They play an important but poorly understood role in creating colorful auroras as plasma streaming out from the Sun interacts with the magnetic field surrounding Earth. The same process drives geomagnetic storms capable of disrupting GPS navigation, radio communications, electrical grids, and satellite operations.

Plenty of room for more … The TRACERS satellites are relatively small, each about the size of a washing machine, so they filled only a fraction of the capacity of SpaceX’s Falcon 9 rocket. Three other small NASA tech demo payloads hitched a ride to orbit with TRACERS, kicking off missions to test an experimental communications terminal, demonstrate an innovative scalable satellite platform made of individual building blocks, and study the link between Earth’s atmosphere and the Van Allen radiation belts. In addition to those missions, the European Space Agency launched its own CubeSat to test 5G communications from orbit. Five smallsats from an Australian company rounded out the group. Still, all of those payloads added up to less than a quarter of the mass the Falcon 9 rocket could have delivered to the TRACERS mission’s targeted Sun-synchronous orbit.

Tianlong launch pad ready for action. Chinese startup Space Pioneer has completed a launch pad at Jiuquan spaceport in northwestern China for its Tianlong 3 liquid-propellant rocket ahead of a first orbital launch, Space News reports. Space Pioneer said the launch pad passed an acceptance test, and ground crews raised a full-scale model of the Tianlong 3 rocket on the launch pad. “The rehearsal test was successfully completed,” said Space Pioneer, one of China’s leading private launch companies. The launch pad’s activation came a couple of weeks after Space Pioneer announced the completion of static loads testing on Tianlong 3.

More to come … While this is an important step forward for Space Pioneer, construction of the launch pad is just one element the company needs to finish before Tianlong 3 can lift off for the first time. In June 2024, the company ignited Tianlong 3’s nine-engine first stage on a test stand in China. But the rocket broke free of its moorings on the test stand and unexpectedly climbed into the sky before crashing in a fireball nearby. Space Pioneer says the “weak design of the rocket’s tail structure was the direct cause of the failure” last year. The company hasn’t identified next steps for Tianlong 3, or when it might be ready to fly. Tianlong 3 is a kerosene-fueled rocket with nine main engines, similar in architecture and payload capacity to SpaceX’s Falcon 9. Also, like Falcon 9, Tianlong 3 is supposed to have a recoverable and reusable first-stage booster.

Dredging up an issue at Wallops. Rocket Lab has asked regulators for permission to transport oversized Neutron rocket structures through shallow waters to a spaceport off the coast of Virginia as it races to meet a September delivery deadline, TechCrunch reports. The request, which was made in July, is a temporary stopgap while the company awaits federal clearance to dredge a permanent channel to the Wallops Island site. Rocket Lab plans to launch its Neutron medium-lift rocket from the Mid-Atlantic Regional Spaceport (MARS) on Wallops Island, Virginia, a lower-traffic spaceport that’s surrounded by shallow channels and waterways. Rocket Lab has a sizable checklist to tick off before Neutron can make its orbital debut, like mating the rocket stages, performing a “wet dress” rehearsal, and getting its launch license from the Federal Aviation Administration. Before any of that can happen, the rocket hardware needs to make it onto the island from Rocket Lab’s factory on the nearby mainland.

Kedging bets … Access to the channel leading to Wallops Island is currently available only at low tides. So, Rocket Lab submitted an application earlier this year to dredge the channel. The dredging project was approved by the Virginia Marine Resources Commission in May, but the company has yet to start digging because it’s still awaiting federal sign-off from the Army Corps of Engineers. As the company waits for federal approval, Rocket Lab is seeking permission to use a temporary method called “kedging” to ensure the first five hardware deliveries can arrive on schedule starting in September. We don’t cover maritime issues in the Rocket Report, but in short, kedging moves a vessel by setting an anchor ahead of it and then hauling in the anchor line to pull the vessel forward.

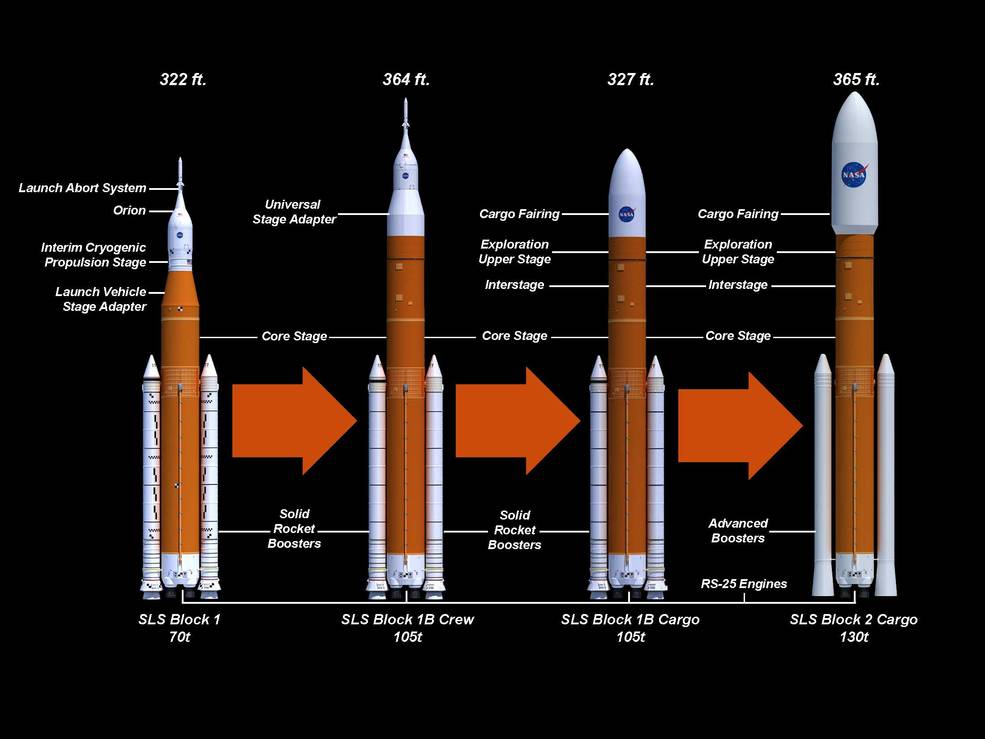

Any better ideas for an Exploration Upper Stage? Not surprisingly, Congress is pushing back against the Trump administration’s proposal to cancel the Space Launch System, the behemoth rocket NASA has developed to propel astronauts back to the Moon. But legislation making its way through the House of Representatives includes an interesting provision that would direct NASA to evaluate alternatives for the Boeing-built Exploration Upper Stage, an upgrade for the SLS rocket set to debut on its fourth flight, Ars reports. Essentially, the House Appropriations Committee is telling NASA to look for cheaper, faster options for a new SLS upper stage.

CYA EUS? … The four-engine Exploration Upper Stage, or EUS, is an expensive undertaking. Last year, NASA’s inspector general reported that the new upper stage’s development costs had ballooned from $962 million to $2.8 billion, and the project had been delayed more than six years. That’s almost a year-for-year delay since NASA and Boeing started development of the EUS. So, what are the options if NASA were to go with a new upper stage for the SLS rocket? One possibility is a modified version of United Launch Alliance’s dual-engine Centaur V upper stage that flies on the Vulcan rocket. It’s no longer possible to keep flying the SLS rocket’s existing single-engine upper stage because ULA has shut down the production line for it.

Raising Super Heavy from the deep. For the second time, SpaceX has pulled the engine section of a Super Heavy booster out of the Gulf of Mexico, NASASpaceflight.com reports. Images posted on social media showed the tail end of a Super Heavy booster being raised from the sea off the coast of northern Mexico. Most of the rocket’s 33 Raptor engines appear to still be attached to the lower section of the stainless steel booster. Online sleuths who closely track SpaceX’s activities at Starbase, Texas, have concluded the rocket recovered from the Gulf is Booster 13, which flew on the sixth test flight of the Starship mega-rocket last November. The booster ditched in the ocean after aborting an attempted catch back at the launch pad in South Texas.

But why? … SpaceX recovered the engine section of a different Super Heavy booster from the Gulf last year. The company’s motivation for salvaging the wreckage is unclear. “Speculated reasons include engineering research, environmental mitigation, or even historical preservation,” NASASpaceflight reports.

Next three launches

July 26: Vega C | CO3D & MicroCarb | Guiana Space Center, French Guiana | 02:03 UTC

July 26: Falcon 9 | Starlink 10-26 | Cape Canaveral Space Force Station, Florida | 08:34 UTC

July 27: Falcon 9 | Starlink 17-2 | Vandenberg Space Force Base, California | 03:55 UTC