Trump can save TikTok without forcing a sale, ByteDance board member claims

TikTok owner ByteDance is reportedly still searching for non-sale options to stay in the US after the Supreme Court upheld a national security law requiring that TikTok’s US operations either be shut down or sold to an owner that is not controlled by a foreign adversary.

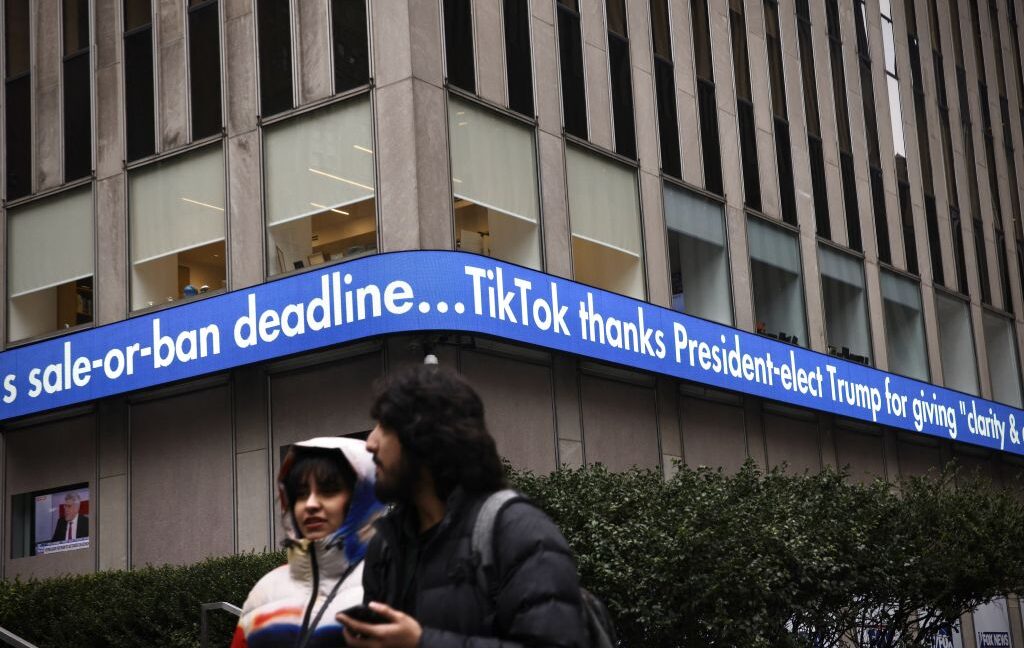

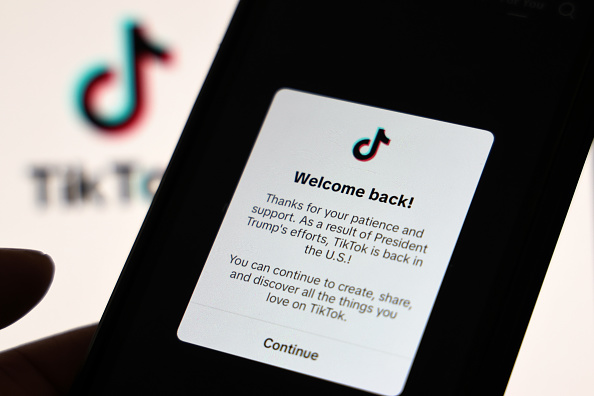

Last weekend, TikTok briefly went dark in the US, only to come back online hours later after Donald Trump reassured ByteDance that the US law would not be enforced. Then, shortly after Trump took office, he signed an executive order delaying enforcement for 75 days while he consulted with advisers to “pursue a resolution that protects national security while saving a platform used by 170 million Americans.”

Trump’s executive order did not suggest that he intended to attempt to override the national security law’s ban-or-sale requirements. But that hasn’t stopped ByteDance, board member Bill Ford told World Economic Forum (WEF) attendees, from searching for a potential non-sale option that “could involve a change of control locally to ensure it complies with US legislation,” Bloomberg reported.

It’s currently unclear how ByteDance could negotiate a non-sale option without facing a ban. Under Joe Biden, extended efforts through Project Texas to keep US TikTok data out of China-controlled ByteDance’s hands without forcing a sale dead-ended, prompting Congress to pass the national security law requiring a ban or sale.

At the WEF, Ford said that the ByteDance board is “optimistic we will find a solution” that avoids ByteDance giving up a significant chunk of TikTok’s operations.

“There are a number of alternatives we can talk to President Trump and his team about that are short of selling the company that allow the company to continue to operate, maybe with a change of control of some kind, but short of having to sell,” Ford said.
