Google’s new hurricane model was breathtakingly good this season

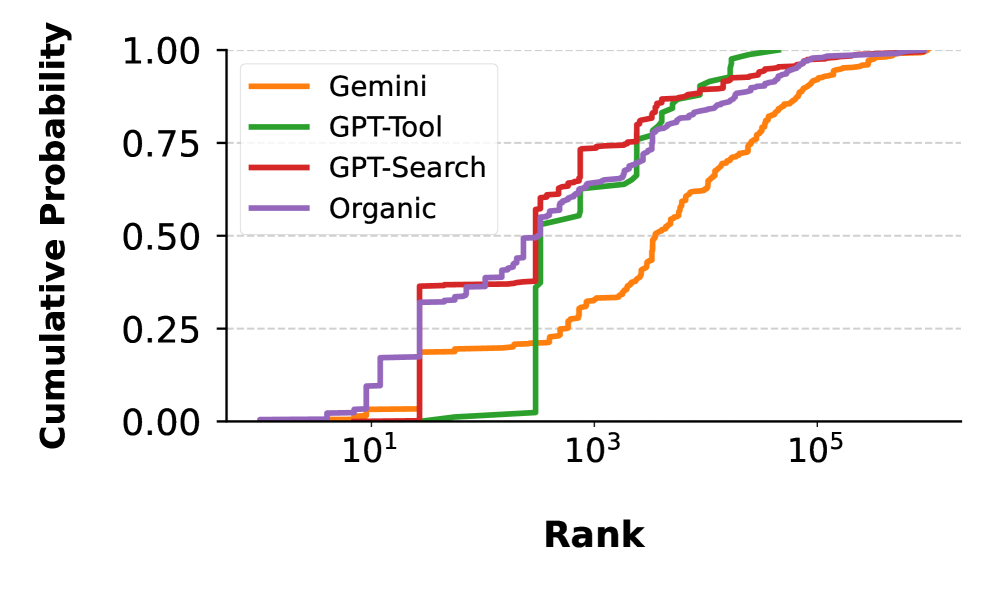

This early model comparison does not include the “gold standard” traditional, physics-based model produced by the European Centre for Medium-Range Weather Forecasts. However, the ECMWF model typically does not do better on hurricane track forecasts than the hurricane center or consensus models, which weigh several different model outputs. So it is unlikely to be superior to Google’s DeepMind.

This will change forecasting forever

It’s worth noting that DeepMind also did exceptionally well at intensity forecasting, which predicts fluctuations in the strength of a hurricane. So in its first season, it nailed both hurricane tracks and intensity.

As a forecaster who has relied on traditional physics-based models for a quarter of a century, I find it difficult to say how gobsmacking these results are. Going forward, it is safe to say that we will rely heavily on Google and other AI weather models, which are relatively new and likely to improve in the coming years.

“The beauty of DeepMind and other similar data-driven, AI-based weather models is how much more quickly they produce a forecast compared to their traditional physics-based counterparts that require some of the most expensive and advanced supercomputers in the world,” noted Michael Lowry, a hurricane specialist and author of the Eye on the Tropics newsletter, about the model performance. “Beyond that, these ‘smart’ models with their neural network architectures have the ability to learn from their mistakes and correct on-the-fly.”

What about the North American model?

As for the GFS model, it is difficult to explain why it performed so poorly this season. In the past, it has been, at worst, worthy of consideration in making a forecast. But this year, other forecasters and I often disregarded it.

“It’s not immediately clear why the GFS performed so poorly this hurricane season,” Lowry wrote. “Some have speculated the lapse in data collection from DOGE-related government cuts this year could have been a contributing factor, but presumably such a factor would have affected other global physics-based models as well, not just the American GFS.”

With the US government in shutdown mode, we probably cannot expect many answers soon. But it seems clear that the massive upgrade of the model’s dynamic core, which began in 2019, has largely been a failure. If the GFS was a little bit behind some competitors a decade ago, it is now fading further and faster.
