Google’s nightmare: How a search spinoff could remake the web

Google has shaped the Internet as we know it, and unleashing its index could change everything.

Google may be forced to license its search technology when the final antitrust ruling comes down. Credit: Aurich Lawson

Google wasn’t around for the advent of the World Wide Web, but it successfully remade the web on its own terms. Today, any website that wants to be findable has to play by Google’s rules, and after years of search dominance, the company has lost a major antitrust case that could reshape both it and the web.

The closing arguments in the case wrapped up last week, and Google could face serious consequences when the ruling comes down in August. Losing Chrome would certainly change things for Google, but the Department of Justice is pursuing other remedies that could have even more lasting impacts. During his testimony, Google CEO Sundar Pichai seemed genuinely alarmed at the prospect of being forced to license Google’s search index and algorithm, the so-called data remedies in the case. He claimed this would be no better than a spinoff of Google Search. In its statements, the company has sometimes derisively referred to this process as “white labeling” Google Search.

But does a white-label Google Search sound so bad? Google has built an unrivaled index of the web, but the way it shows results has become increasingly frustrating. A handful of smaller players in search have tried to offer alternatives to Google’s search tools. They all have different approaches to retrieving information for you, but they agree that spinning off Google Search could change the web again. Whether or not those changes are positive depends on who you ask.

The Internet is big and noisy

As Google’s search results have changed over the years, more people have been open to other options. Some have simply moved to AI chatbots to answer their questions, hallucinations be damned. But for most people, it’s still about the 10 blue links (for now).

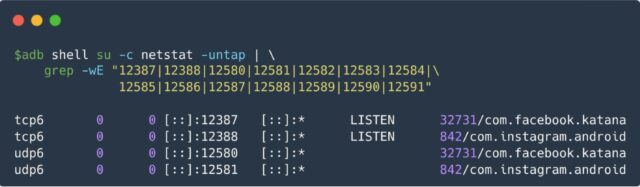

Because of the scale of the Internet, there are only three general web search indexes: Google, Bing, and Brave. Every search product (including AI tools) relies on one or more of these indexes to probe the web. But what does that mean?

“Generally, a search index is a service that, when given a query, is able to find relevant documents published on the Internet,” said Brave’s search head Josep Pujol.

A search index is essentially a big database, and that’s not the same as search results. According to JP Schmetz, Brave’s chief of ads, it’s entirely possible to have the best and most complete search index in the world and still show poor results for a given query. Sound like anyone you know?
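To make the index-versus-results distinction concrete, here is a minimal Python sketch over a toy, made-up corpus. It is not how Google, Bing, or Brave actually work; the document names and both ranking functions are hypothetical. The same index returns the same candidate pages either way, but a naive ranker that counts keyword repetitions puts the keyword-stuffed page first, while one that rewards covering more of the query pushes it to the bottom.

```python
from collections import defaultdict

# Hypothetical toy corpus: document IDs mapped to page text, for illustration only.
DOCS = {
    "blog-post": "how to repair a mechanical keyboard switch",
    "seo-copy": "keyboard keyboard keyboard best keyboard deals buy now",
    "forum-thread": "mechanical keyboard switch chatter fix",
}


def build_index(docs):
    """The 'index': a map from each term to the set of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.split():
            index[term].add(doc_id)
    return index


def retrieve(index, query):
    """Retrieval: every document that contains at least one query term."""
    candidates = set()
    for term in query.split():
        candidates |= index.get(term, set())
    return candidates


def rank_by_term_count(docs, query, candidates):
    """Naive ranker: total occurrences of query terms (easy to game with repetition)."""
    terms = query.split()

    def score(doc_id):
        words = docs[doc_id].split()
        return sum(words.count(t) for t in terms)

    # Highest score first; ties broken alphabetically so the order is deterministic.
    return sorted(candidates, key=lambda d: (-score(d), d))


def rank_by_coverage(docs, query, candidates):
    """Different ranker: number of distinct query terms each document covers."""
    terms = set(query.split())

    def score(doc_id):
        return len(terms & set(docs[doc_id].split()))

    return sorted(candidates, key=lambda d: (-score(d), d))


QUERY = "mechanical keyboard switch"
INDEX = build_index(DOCS)
CANDIDATES = retrieve(INDEX, QUERY)  # all three documents match at least one term
print(rank_by_term_count(DOCS, QUERY, CANDIDATES))  # ['seo-copy', 'blog-post', 'forum-thread']
print(rank_by_coverage(DOCS, QUERY, CANDIDATES))    # ['blog-post', 'forum-thread', 'seo-copy']
```

Nothing about the index changes between the two calls; only the ranking step does, which is the gap between having a complete index and showing good results.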

Google’s technological lead has allowed it to crawl more websites than anyone else. It has all the important parts of the web, plus niche sites, abandoned blogs, sketchy copies of legitimate websites, copies of those copies, and AI-rephrased copies of the copied copies—basically everything. And the result of this Herculean digital inventory is a search experience that feels increasingly discombobulated.

“Google is running large-scale experiments in ways that no rival can because we’re effectively blinded,” said Kamyl Bazbaz, head of public affairs at DuckDuckGo, which uses the Bing index. “Google’s scale advantage fuels a powerful feedback loop of different network effects that ensure a perpetual scale and quality deficit for rivals that locks in Google’s advantage.”

The size of the index may not be the only factor that matters, though. Brave, which is perhaps best known for its browser, also has a search engine. Brave Search is the default in its browser, but you can also use it from any other browser by visiting the Brave Search website. Unlike most other search engines, Brave doesn’t need to go to anyone else for results. Pujol suggested that Brave doesn’t need the scale of Google’s index to find what you need. And admittedly, Brave’s search results don’t feel meaningfully worse than Google’s; they may even be better when you consider the way that Google tries to keep you from clicking away.

Brave’s index spans around 25 billion pages, but it leaves plenty of the web uncrawled. “We could be indexing five to 10 times more pages, but we choose not to because not all the web has signal. Most web pages are basically noise,” said Pujol.

The freemium search engine Kagi isn’t worried about having the most comprehensive index, either. Kagi is a metasearch engine: It pulls in data from multiple indexes, like Bing and Brave, but it also has a custom index of what founder and CEO Vladimir Prelovac calls the “non-commercial web.”

When you search with Kagi, some of the results (it tells you the proportion) come from its custom index of personal blogs, hobbyist sites, and other content that is poorly represented on other search engines. It’s reminiscent of the days when huge brands weren’t always clustered at the top of Google—but even these results are being pushed out of reach in favor of AI, ads, Knowledge Graph content, and other Google widgets. That’s a big part of why Kagi exists, according to Prelovac.
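Kagi hasn’t said exactly how it blends results from its sources, so the following Python sketch swaps in reciprocal rank fusion, a widely used textbook method for merging ranked lists, purely as an illustration. The source names, URLs, and the constant `k=60` are assumptions. The sketch also computes what share of the top merged results came from one source, the kind of proportion Kagi reports to users.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge several ranked URL lists with reciprocal rank fusion (RRF).

    Each source adds 1 / (k + rank) for every URL it returns. k=60 is a common
    default in the RRF literature, not a value any search engine has disclosed.
    """
    scores = defaultdict(float)
    for results in ranked_lists.values():
        for rank, url in enumerate(results, start=1):
            scores[url] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda url: (-scores[url], url))


def share_from_source(merged, ranked_lists, source, top_n=10):
    """Fraction of the top_n merged results that the given source returned."""
    top = merged[:top_n]
    if not top:
        return 0.0
    from_source = set(ranked_lists[source])
    return sum(url in from_source for url in top) / len(top)


# Hypothetical sources: two general indexes plus a small "non-commercial web" index.
SOURCES = {
    "index_a": ["big-brand.example", "news.example", "hobby-blog.example"],
    "index_b": ["news.example"],
    "small_web": ["hobby-blog.example", "personal-site.example"],
}

MERGED = reciprocal_rank_fusion(SOURCES)
print(MERGED)  # ['news.example', 'hobby-blog.example', 'big-brand.example', 'personal-site.example']
print(share_from_source(MERGED, SOURCES, "small_web"))  # 0.5
```

Fusion of this kind rewards pages that several sources agree on, which is why the hobby blog, found by both a general index and the small-web index, lands above the big brand that only one source returned.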

A Google spinoff could change everything

We’ve all noticed the changes in Google’s approach to search, and most would agree that they have made finding reliable and accurate information harder. Regardless, Google’s incredibly deep and broad index of the Internet is in demand.

Even with Bing and Brave available, companies are going to extremes to syndicate Google Search results. A cottage industry has emerged to scrape Google searches as a stand-in for an official index. These companies are violating Google’s terms, yet they appear in Google Search results themselves. Google could surely do something about this if it wanted to.

The DOJ calls Google’s mountain of data the “essential raw material” for building a general search engine, and it believes forcing the firm to license that material is key to breaking its monopoly. The sketchy syndication firms will evaporate if the DOJ’s data remedies are implemented, which would give competitors an official way to utilize Google’s index. And utilize it they will.

Google CEO Sundar Pichai decried the court’s efforts to force a “de facto divestiture” of Google’s search tech. Credit: Ryan Whitwam

According to Prelovac, this could lead to an explosion in search choices. “The whole purpose of the Sherman Act is to proliferate a healthy, competitive marketplace. Once you have access to a search index, then you can have thousands of search startups,” said Prelovac.

The Kagi founder suggested that licensing Google Search could allow entities of all sizes to have genuinely useful custom search tools. Cities could use the data to create deep, hyper-local search, and people who love cats could make a cat-specific search engine, in both cases pulling what they want from the most complete database of online content. And, of course, general search products like Kagi would be able to license Google’s tech for a “nominal fee,” as the DOJ puts it.

Prelovac didn’t hesitate when asked if Kagi, which offers a limited number of free searches before asking users to subscribe, would integrate Google’s index. “Yes, that is something we would do,” he said. “And that’s what I believe should happen.”

There may be some drawbacks to unleashing Google’s search services. Judge Amit Mehta has expressed concern that blocking Google’s search placement deals could reduce browser choice, and there is a similar issue with the data remedies. If Google is forced to license search as an API, its few competitors in web indexing could struggle to remain afloat. In a roundabout way, giving away Google’s search tech could actually increase its influence.

The Brave team worries about how open access to Google’s search technology could impact diversity on the web. “If implemented naively, it’s a big problem,” said Brave’s ad chief JP Schmetz. “If the court forces Google to provide search at a marginal cost, it will not be possible for Bing or Brave to survive until the remedy ends.”

The landscape of AI-based search could also change. We know from testimony given during the remedy trial by OpenAI’s Nick Turley that the ChatGPT maker tried and failed to get access to Google Search to ground its AI models—it currently uses Bing. If Google were suddenly an option, you can be sure OpenAI and others would rush to connect Google’s web data to their large language models (LLMs).

The attempt to reduce Google’s power could actually grant it new monopolies in AI, according to Brave Chief Business Officer Brian Brown. “All of a sudden, you would have a single monolithic voice of truth across all the LLMs, across all the web,” Brown said.

What if you weren’t the product?

If white labeling Google does expand choice, even at the expense of other indexes, it will give more kinds of search products a chance in the market—maybe even some that shun Google’s focus on advertising. You don’t see much of that right now.

For most people, web search is and always has been a free service supported by ads. Google, Brave, DuckDuckGo, and Bing offer all the search queries you want for free because they want eyeballs. It’s been said often, but it’s true: If you’re not paying for it, you’re the product. This is an arrangement that bothers Kagi’s founder.

“For something as important as information consumption, there should not be an intermediary between me and the information, especially one that is trying to sell me something,” said Prelovac.

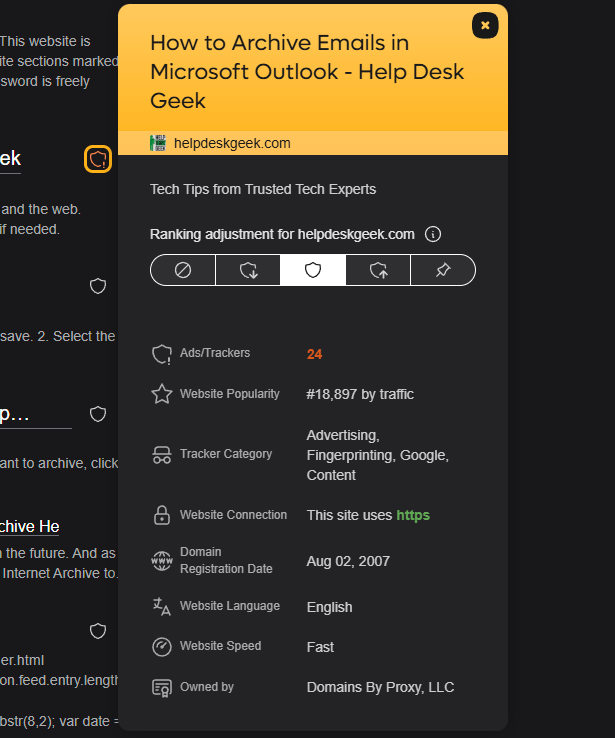

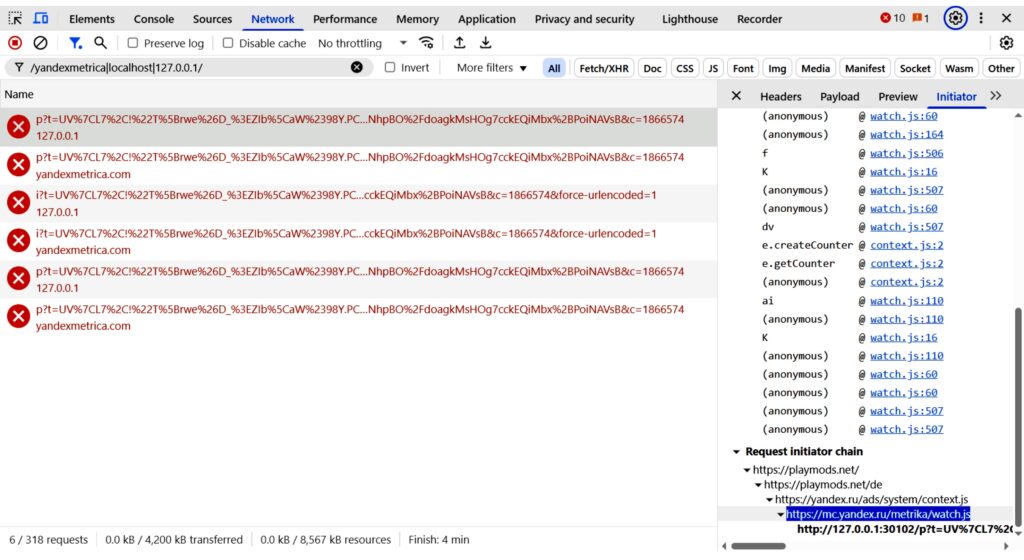

Kagi search results acknowledge the negative impact of today’s advertising regime. Kagi users see a warning next to results with a high number of ads and trackers. According to Prelovac, that is by far the strongest indication that a result is of low quality. That icon also lets you adjust the prevalence of such sites in your personal results. You can demote a site or completely hide it, which is a valuable option in the age of clickbait.

Kagi search gives you a lot of control. Credit: Ryan Whitwam
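The per-user controls Kagi describes (a warning on ad- and tracker-heavy results, plus the option to demote or hide a site) can be approximated in a few lines. This Python sketch is hypothetical: the `Result` fields, the tracker count, and the warning threshold are assumptions for illustration, not Kagi’s published implementation.

```python
from dataclasses import dataclass


@dataclass
class Result:
    url: str
    domain: str
    tracker_count: int  # hypothetical: ad/tracker scripts detected on the page


# Hypothetical cutoff; Kagi has not published the threshold behind its warning.
TRACKER_WARNING_THRESHOLD = 10


def apply_user_preferences(results, hidden, demoted):
    """Drop hidden domains, then move demoted domains below all other results.

    Python's sort is stable, so results keep their original relative order
    within the non-demoted group and within the demoted group.
    """
    visible = [r for r in results if r.domain not in hidden]
    return sorted(visible, key=lambda r: r.domain in demoted)  # False sorts before True


def needs_tracker_warning(result):
    """Flag results whose tracker count meets the (hypothetical) threshold."""
    return result.tracker_count >= TRACKER_WARNING_THRESHOLD


RESULTS = [
    Result("https://a.example/1", "a.example", 25),
    Result("https://b.example/2", "b.example", 0),
    Result("https://c.example/3", "c.example", 3),
    Result("https://d.example/4", "d.example", 12),
]

ADJUSTED = apply_user_preferences(RESULTS, hidden={"d.example"}, demoted={"a.example"})
print([r.domain for r in ADJUSTED])  # ['b.example', 'c.example', 'a.example']
print([needs_tracker_warning(r) for r in RESULTS])  # [True, False, False, True]
```

Because the adjustments run after ranking, they work the same no matter which index supplied the results.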

Kagi’s paid approach to search changes its relationship with your data. “We literally don’t need user data,” Prelovac said. “But it’s not only that we don’t need it. It’s a liability.”

Prelovac admitted that getting people to pay for search is “really hard.” Nevertheless, he believes ad-supported search is a dead end. So Kagi is planning for a future in five or 10 years when more people have realized they’re still “paying” for ad-based search with lost productivity and personal data, he said.

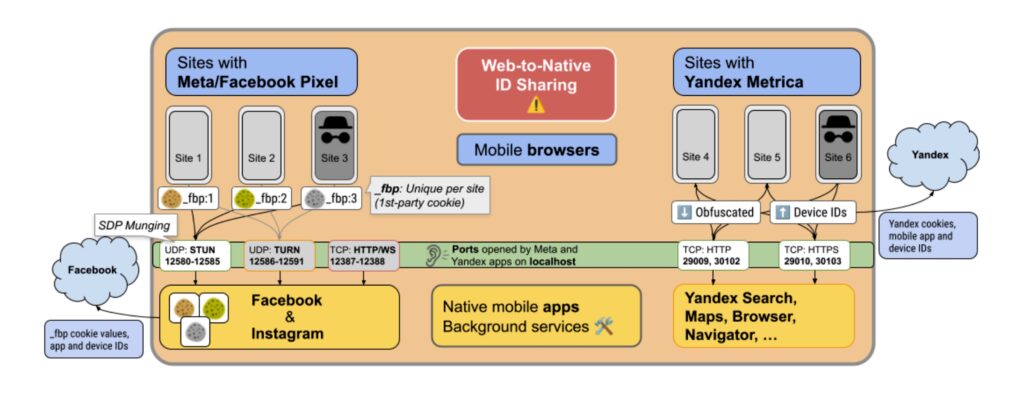

We know how Google handles user data (it collects a lot of it), but what does that mean for smaller search engines like Brave and DuckDuckGo that rely on ads?

“I’m sure they mean well,” said Prelovac.

Brave said that it shields user data from advertisers, relying on first-party tracking to attribute clicks to Brave without touching the user. “They cannot retarget people later; none of that is happening,” said Brave’s JP Schmetz.

DuckDuckGo is a bit of an odd duck—it relies on Bing’s general search index, but it adds a layer of privacy tools on top. It’s free and ad-supported like Google and Brave, but the company says it takes user privacy seriously.

“Viewing ads is privacy protected by DuckDuckGo, and most ad clicks are managed by Microsoft’s ad network,” DuckDuckGo’s Kamyl Bazbaz said. He explained that DuckDuckGo has worked with Microsoft to ensure its network does not track users or create any profiles based on clicks. He added that the company has a similar privacy arrangement with TripAdvisor for travel-related ads.

It’s AI all the way down

We can’t talk about the future of search without acknowledging the artificially intelligent elephant in the room. As Google continues its shift to AI-based search, it’s tempting to think of the potential search spinoff as a way to escape that trend. However, you may find few refuges in the coming years. There’s a real possibility that search is evolving beyond the 10 blue links and toward an AI assistant model.

All non-Google search engines have AI integrations, with the most prominent being Microsoft Bing, which has a partnership with OpenAI. But smaller players have AI search features, too. The folks working on these products agree with Microsoft and Google on one important point: They see AI as inevitable.

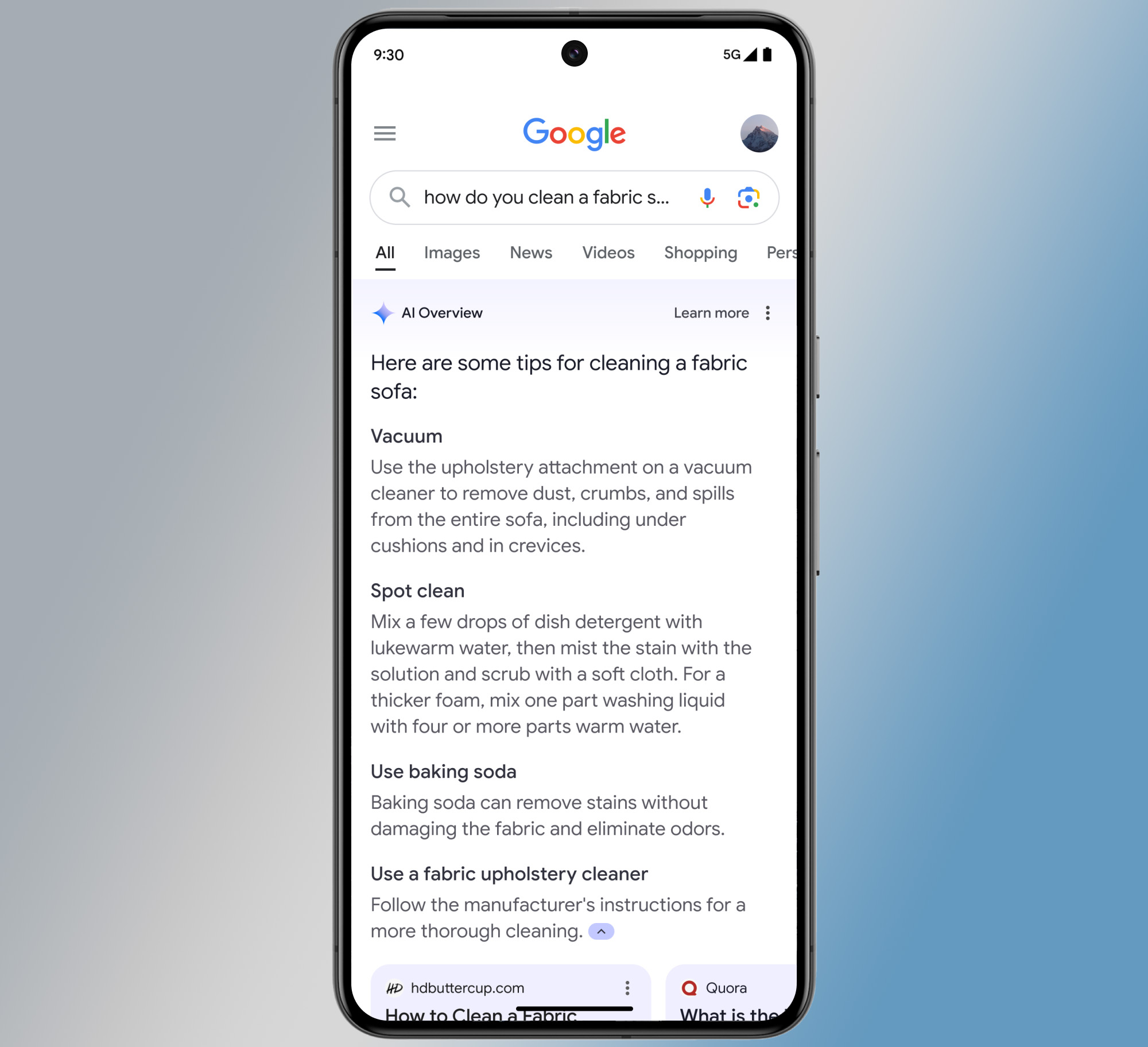

Today’s Google alternatives all have their own take on AI Overviews, Google’s feature that generates responses to queries based on search results. They’re generally not as in-your-face as Google’s AI, though. While Google and Microsoft are intensely focused on increasing the usage of AI search, other search operators aren’t pushing for that future, even if they are along for the ride.

AI Overviews are integrated with Google’s search results, and most other players have their own version. Credit: Google

“We’re finding that some people prefer to start in chat mode and then jump into more traditional search results when needed, while others prefer the opposite,” Bazbaz said. “So we thought the best thing to do was offer both. We made it easy to move between them, and we included an off switch for those who’d like to avoid AI altogether.”

The team at Brave views AI as a core means of accessing search and one that will continue to grow. Brave generates AI answers for many searches and prominently cites sources. You can also disable Brave’s AI if you prefer. But according to search chief Josep Pujol, the move to AI search is inevitable for a pretty simple reason: It’s convenient, and people will always choose convenience. So AI is changing the web as we know it, for better or worse, because LLMs can save a smidge of time, especially for more detailed “long-tail” queries. These AI features may give you false information while they do it, but that’s not always apparent.

This is very similar to the language Google uses when discussing agentic search, although Google frames it in more nuanced terms. By understanding the task behind a query, Google hopes to provide AI answers that save people time, even if the model needs a few ticks to fan out and run multiple searches to generate a more comprehensive report on a topic. That’s probably still faster than running multiple searches and manually reviewing the results, and it could leave traditional search as an increasingly niche service, even in a world with more choices.

“Will the 10 blue links continue to exist in 10 years?” Pujol asked. “Actually, one question would be, does it even exist now? In 10 years, [search] will have evolved into more of an AI conversation behavior or even agentic. That is probably the case. What, for sure, will continue to exist is the need to search. Search is a verb, an action that you do, and whether you will do it directly or whether it will be done through an agent, it’s a search engine.”

Kagi’s Vladimir Prelovac sees AI becoming the default way we access information in the long term, but his search engine doesn’t force you to use it. On Kagi, you can expand the AI box for your searches and ask follow-ups, and the AI will open automatically if you use a question mark in your search. But that’s just the start.

“You watch Star Trek, nobody’s clicking on links there—I do believe in that vision in science fiction movies,” Prelovac said. “I don’t think my daughter will be clicking links in 10 years. The only question is if the current technology will be the one that gets us there. LLMs have inherent flaws. I would even tend to say it’s likely not going to get us to Star Trek.”

If we think of AI mainly as a way to search for information, the future becomes murky. With generative AI in the driver’s seat, questions of authority and accuracy may be left to language models that often behave in unpredictable and difficult-to-understand ways. Whether we’re headed for an AI boom or bust—for continued Google dominance or a new era of choice—we’re facing fundamental changes to how we access information.

Maybe if we get those thousands of search startups, there will be a few that specialize in 10 blue links. We can only hope.

Ryan Whitwam is a senior technology reporter at Ars Technica, covering the ways Google, AI, and mobile technology continue to change the world. Over his 20-year career, he’s written for Android Police, ExtremeTech, Wirecutter, NY Times, and more. He has reviewed more phones than most people will ever own. You can follow him on Bluesky, where you will see photos of his dozens of mechanical keyboards.
