“What the hell are you doing?” How I learned to interview astronauts, scientists, and billionaires

The best part about journalism is not collecting information. It’s sharing it.

Sometimes the best place to do an interview is in a clean room. Credit: Lee Hutchinson

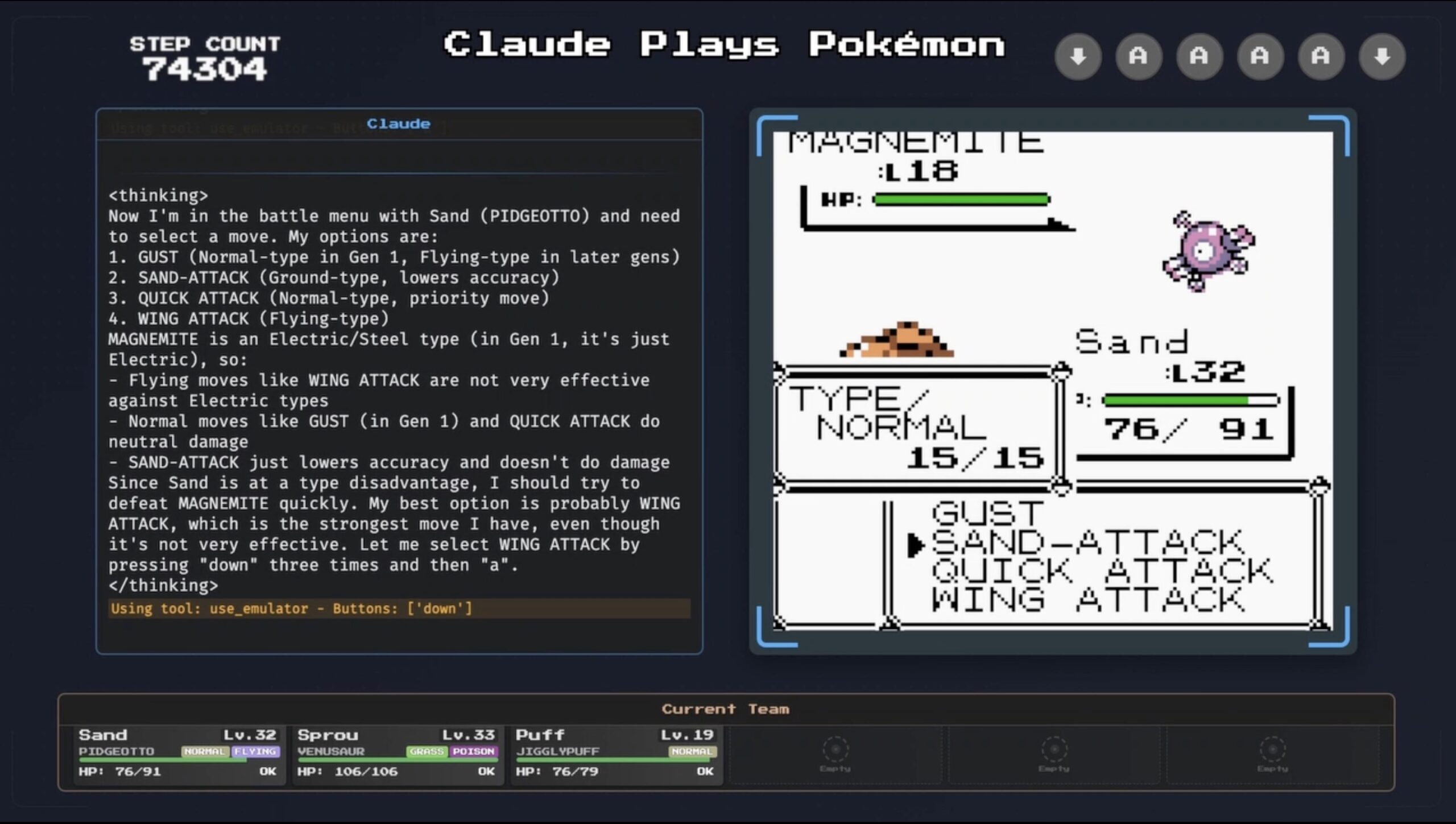

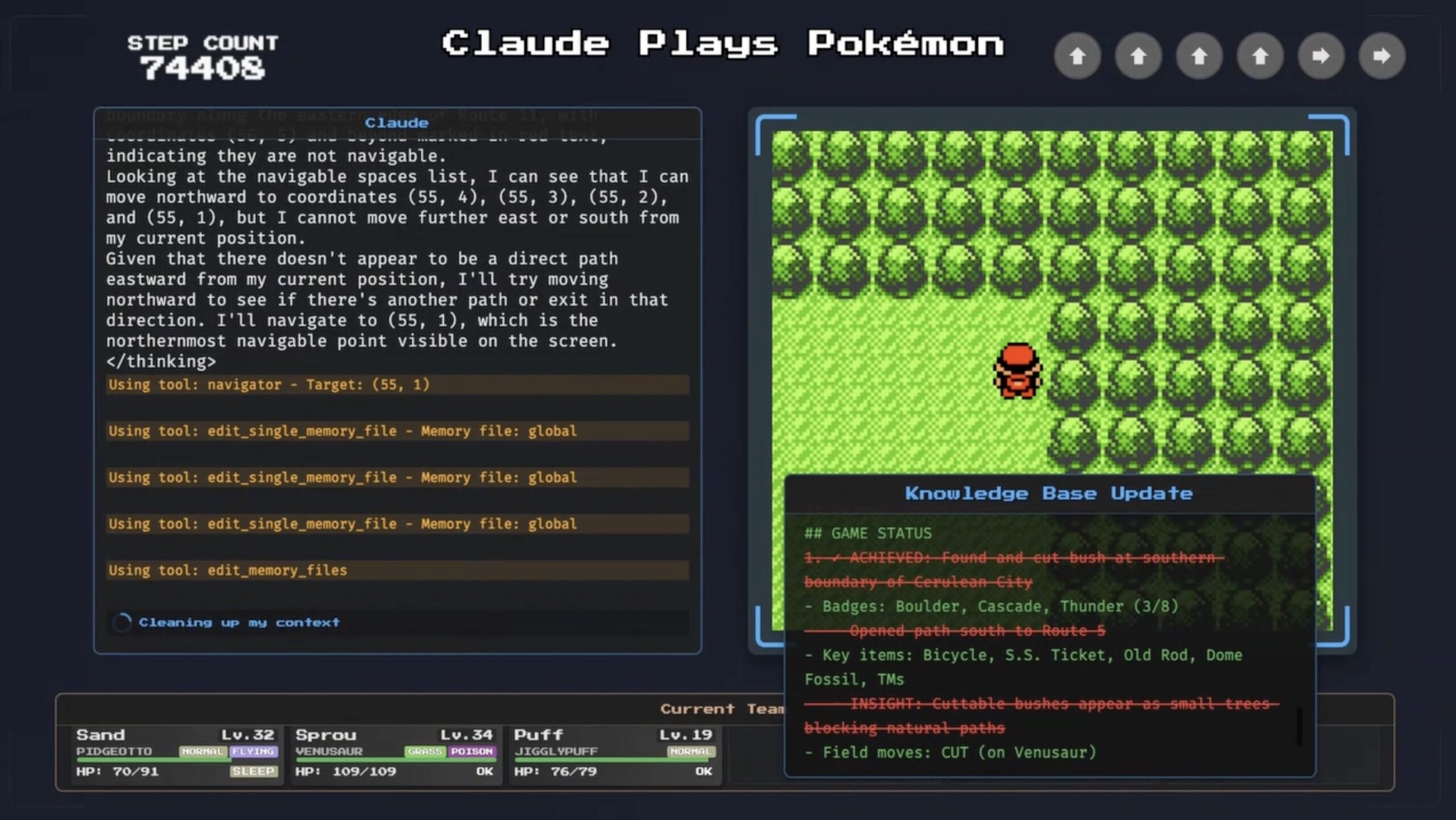

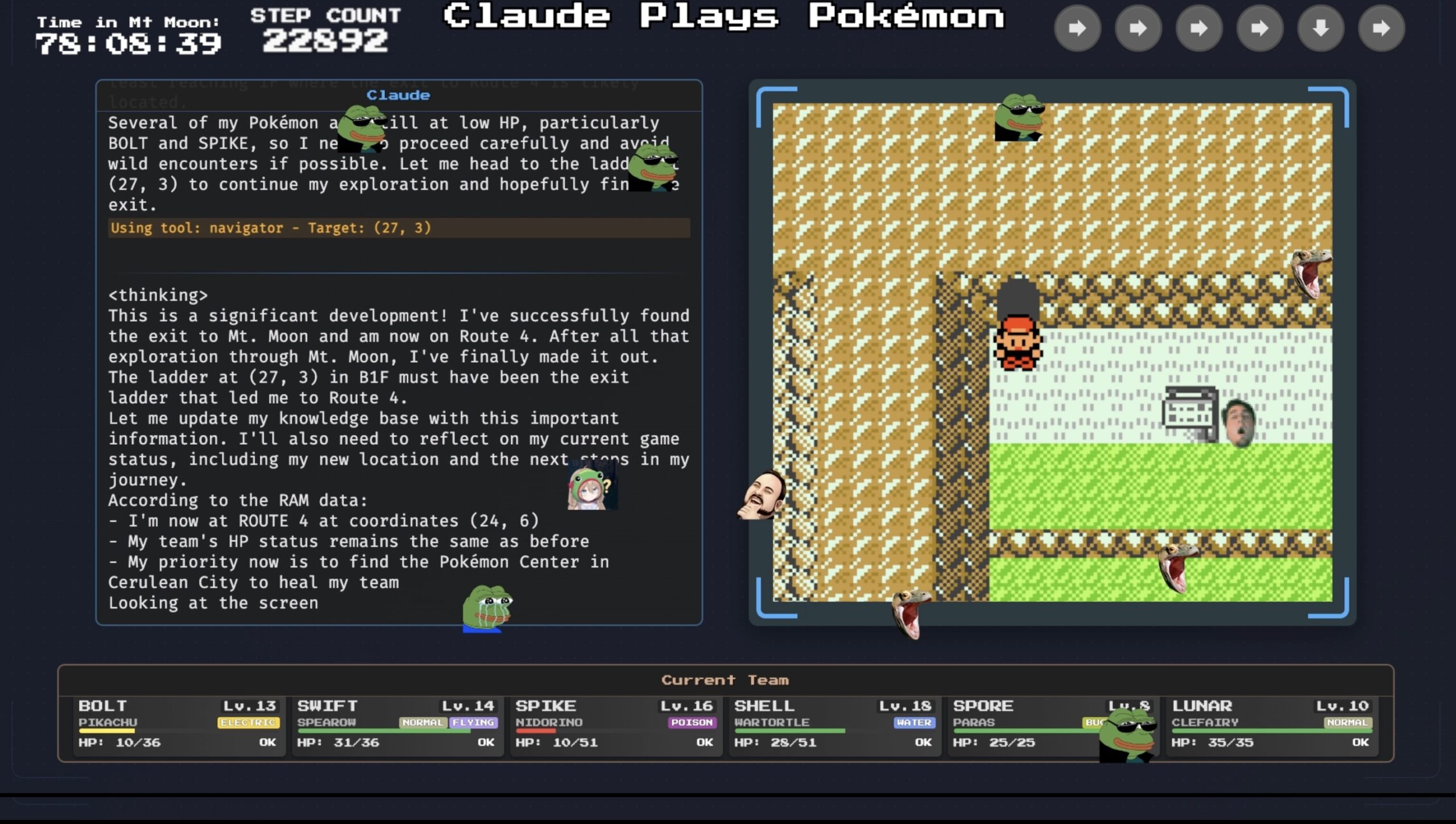

I recently wrote a story about the wild ride of the Starliner spacecraft to the International Space Station last summer. It was based largely on an interview with the commander of the mission, NASA astronaut Butch Wilmore.

His account of Starliner’s thruster failures—and his desperate efforts to keep the vehicle flying on course—was riveting. In the aftermath of the story, many readers, people on social media, and real-life friends congratulated me on conducting a great interview. But truth be told, it was pretty much all Wilmore.

Essentially, when I came into the room, he was primed to talk. I’m not sure if Wilmore was waiting for me specifically to talk to, but he pretty clearly wanted to speak with someone about his experiences aboard the Starliner spacecraft. And he chose me.

So was it luck? I’ve been thinking about that. As an interviewer, I certainly don’t have the emotive power of some of the great television interviewers, who are masters of confrontation and drama. It’s my nature to avoid confrontation where possible. But what I do have on my side is experience, more than 25 years now, as well as preparation. I am also genuinely and completely interested in space. And as it happens, these qualities are important, too.

Interviewing is a craft one does not pick up overnight. During my career, I have had some funny, instructive, and embarrassing moments. Without wanting to seem pretentious or self-indulgent, I thought it might be fun to share some of those stories so you can really understand what it’s like on a reporter’s side of the cassette tape.

March 2003: Stephen Hawking

I had only been working professionally as a reporter at the Houston Chronicle for a few years (and as the newspaper’s science writer for less time still) when the opportunity to interview Stephen Hawking fell into my lap.

What a coup! He was only the world’s most famous living scientist, and he was visiting Texas at the invitation of a local billionaire named George Mitchell. A wildcatter and oilman, Mitchell had grown up in Galveston along the upper Texas coast, marveling at the stars as a kid. He studied petroleum engineering and later developed the controversial practice of fracking. In his later years, Mitchell spent some of his largesse on the pursuits of his youth, including astronomy and astrophysics. This included bringing Hawking to Texas more than half a dozen times in the 1990s and early 2000s.

For an interview with Hawking, one submitted questions in advance. That’s because Hawking was afflicted with Lou Gehrig’s disease and lost the ability to speak in 1985. A computer attached to his wheelchair cycled through letters and sounds, and Hawking clicked a button to make a selection, forming words and then sentences, which were sent to a voice synthesizer. For unprepared responses, it took a few minutes to form a single sentence.

George Mitchell and Stephen Hawking during a Texas visit. Credit: Texas A&M University

What to ask him? I had a decent understanding of astronomy, having majored in it as an undergraduate. But the readership of a metro newspaper was not interested in the Hubble constant or the Schwarzschild radius. I asked him about recent discoveries of the cosmic microwave background radiation anyway. Perhaps the most enduring response was about the war in Iraq, a prominent topic of the day. “It will be far more difficult to get out of Iraq than to get in,” he said. He was right.

When I met him at Texas A&M University, Hawking was gracious and polite. He answered a couple of questions in person. But truly, it was awkward. Hawking’s time on Earth was limited and his health failing, so it required an age to tap out even short answers. I can only imagine his frustration at the task of communication, which the vast majority of humans take for granted, especially because he had such a brilliant mind and so many deep ideas to share. And here I was, with my banal questions, stealing his time. As I stood there, I wondered whether I should stare at him while he composed a response. Should I look away? I felt truly unworthy.

In the end, it was fine. I even met Hawking a few more times, including at a memorable dinner at Mitchell’s ranch north of Houston, which spans tens of thousands of acres. A handful of the world’s most brilliant theoretical physicists were there. We would all be sitting around chatting, and Hawking would periodically chime in with a response to something brought up earlier. Later on that evening, Mitchell and Hawking took a chariot ride around the grounds. I wonder what they talked about?

Spring 2011: Jane Goodall and Sylvia Earle

By this point, I had written about science for nearly a decade at the Chronicle. In the early part of the year, I had the opportunity to interview noted chimpanzee scientist Jane Goodall and one of the world’s leading oceanographers, Sylvia Earle. Both were coming to Houston to talk about their research and their passion for conservation.

I spoke with Goodall by phone in advance of her visit, and she was so pleasant, so regal. By then, Goodall was 76 years old and had been studying chimpanzees in Gombe Stream National Park in Tanzania for five decades. Looking back over the questions I asked, they’re not bad. They’re just pretty basic. She gave great answers regardless. But there is only so much chemistry you can build with a person over the telephone (or Zoom, for that matter, these days). Being in person really matters in interviewing because you can read cues, and it’s easier to know when to let a pause go. The comfort level is higher. When you’re speaking with someone you don’t know that well, establishing a basic level of comfort is essential to making an all-important connection.

A couple of months later, I spoke with Earle in person at the Houston Museum of Natural Science. I took my older daughter, then nine years old, because I wanted her to hear Earle speak later in the evening. This turned out to be a lucky move for a couple of different reasons. First, my kid was inspired by Earle to pursue studies in marine biology. And more immediately, the presence of a curious 9-year-old quickly warmed Earle to the interview. We had a great discussion about many things beyond just oceanography.

President Barack Obama talks with Dr. Sylvia Earle during a visit to Midway Atoll on September 1, 2016. Credit: Barack Obama Presidential Library

The bottom line is that I remained a fairly pedestrian interviewer back in 2011. That was partly because I did not have deep expertise in chimpanzees or oceanography. And that leads me to another key for a good interview and establishing a rapport. It’s great if a person already knows you, but even if they don’t, you can overcome that by showing genuine interest or demonstrating your deep knowledge about a subject. I would come to learn this as I started to cover space more exclusively and got to know the industry and its key players better.

September 2014: Scott Kelly

To be clear, this was not much of an interview. But it is a fun story.

I spent much of 2014 focused on space for the Houston Chronicle. I pitched the idea of an in-depth series on the sorry state of NASA’s human spaceflight program, which was eventually titled “Adrift.” By immersing myself in spaceflight for months on end, I discovered a passion for the topic and knew that writing about space was what I wanted to do for the rest of my life. I was 40 years old, so it was high time I found my calling.

As part of the series, I traveled to Kazakhstan with a photographer from the Chronicle, Smiley Pool. He is a wonderful guy who had strengths in chatting up sources that I, an introvert, lacked. During the 13-day trip to Russia and Kazakhstan, we traveled with a reporter from Esquire named Chris Jones, who was working on a long project about NASA astronaut Scott Kelly. Kelly was then training for a yearlong mission to the International Space Station, and he was a big deal.

Jones was a tremendous raconteur and an even better writer. His words, my goodness. We had so much fun over those two weeks, sharing beer, vodka, and Kazakh food. The capstone of the trip was seeing the Soyuz TMA-14M mission launch from the Baikonur Cosmodrome. Kelly was NASA’s backup astronaut for the flight, so he was in quarantine alongside the mission’s primary astronaut. (This was Butch Wilmore, as it turns out.) The launch, from a little more than a kilometer away, is still the most spectacular moment of spaceflight I’ve ever observed in person. Like, holy hell, the rocket was right on top of you.

Expedition 43 NASA Astronaut Scott Kelly walks from the Zvjozdnyj Hotel to the Cosmonaut Hotel for additional training, Thursday, March 19, 2015, in Baikonur, Kazakhstan. Credit: NASA/Bill Ingalls

Immediately after the launch, which took place at 1:25 am local time, Kelly was freed from quarantine. This must have been liberating because he headed straight to the bar at the Hotel Baikonur, the nicest watering hole in the small, Soviet-era town. Jones, Pool, and I were staying at a different hotel. Jones got a text from Kelly inviting us to meet him at the bar. Our NASA minders were uncomfortable with this, as the last thing they want is to have astronauts presented to the world as anything but sharp, sober-minded people who represent the best of the best. But this was too good to resist.

By the time we got to the bar, Kelly and his companion, the commander of his forthcoming Soyuz flight, Gennady Padalka, were several whiskeys deep. The three of us sat across from Kelly and Padalka, and as one does at 3 am in Baikonur, we started taking shots. The astronauts were swapping stories and talking out of school. At one point, Jones took out his notebook and said that he had a couple of questions. To this, Kelly responded heatedly, “What the hell are you doing?”

Not conducting an interview, apparently. We were off the record. Well, until today at least.

We drank and talked for another hour or so, and it was incredibly memorable. At the time, Kelly was probably the most famous active US astronaut, and here I was throwing down whiskey with him shortly after watching a rocket lift off from the very spot where the Soviets launched the Space Age six decades earlier. In retrospect, this offered a good lesson that the best interviews are often not, in fact, interviews. To get the good information, you need to develop relationships with people, and you do that by talking with them person to person, without a microphone, often with alcohol.

Scott Kelly is a real one for that night.

September 2019: Elon Musk

I have spoken with Elon Musk a number of times over the years, but none was nearly so memorable as a long interview we did for my first book on SpaceX, called Liftoff. That summer, I made a couple of visits to SpaceX’s headquarters in Hawthorne, California, interviewing the company’s early employees and sitting in on meetings in Musk’s conference room with various teams. Because SpaceX is such a closed-up company, it was fascinating to get an inside look at how the sausage was made.

It’s worth noting that this all went down a few months before the onset of the COVID-19 pandemic. In some ways, Musk is the same person he was before the outbreak. But in other ways, he is profoundly different, his actions and words far more political and polemical.

Anyway, I was supposed to interview Musk on a Friday evening at the factory at the end of one of these trips. As usual, Musk was late. Eventually, his assistant texted, saying something had come up. She was desperately sorry, but we would have to do the interview later. I returned to my hotel, downbeat. I had an early flight the next morning back to Houston. But after about an hour, the assistant messaged me again. Musk had to travel to South Texas to get the Starship program moving. Did I want to travel with him and do the interview on the plane?

As I sat on his private jet the next day, late morning, my mind swirled. The only people on the plane would be Musk, his three sons (triplets, then 13 years old), two bodyguards, and me. When Musk is in a good mood, an interview can be a delight. He is funny, sharp, and a good storyteller. When Musk is in a bad mood, well, an interview is usually counterproductive. So I fretted. What if Musk was in a bad mood? It would be a super-awkward three and a half hours on the small jet.

Two Teslas drove up to the plane, the first with Musk driving his boys and the second with two security guys. Musk strode onto the jet, saw me, and said he didn’t realize I was going to be on the plane. (A great start to things!) Musk then took out his phone and started a heated conversation about digging tunnels. By this point, I was willing myself to disappear. I just wanted to melt into the leather seat I was sitting in about three feet from Musk.

So much for a good mood for the interview.

As the jet climbed, the phone conversation got worse, but then Musk lost his connection. He put away his phone and turned to me, saying he was free to talk. His mood, almost as if by magic, changed. Since we were discussing the early days of SpaceX at Kwajalein, he gathered the boys around so they could hear about their dad’s earlier days. The interview went shockingly well, and at least part of the reason has to be that I knew the subject matter deeply, had prepared, and was passionate about it. We spoke for nearly two hours before Musk asked if he might have some time with his kids. They spent the rest of the flight playing video games, yucking it up.

April 2025: Butch Wilmore

When they’re on the record, astronauts mostly stick to a script. As a reporter, you’re just not going to get too much from them. (Off the record is a completely different story, of course, as astronauts are generally delightful, hilarious, and earnest people.)

Last week, dozens of journalists were allotted 10-minute interviews with Wilmore and, separately, Suni Williams. It was the first time they had spoken in depth with the media since their launch on Starliner and return to Earth aboard a Crew Dragon vehicle. As I waited outside Studio A at Johnson Space Center, I overheard Wilmore completing an interview with an outlet based in Tennessee, his home state. As they wrapped up, the public affairs officer said he had just one more interview left and said my name. Wilmore said something like, “Oh good, I’ve been waiting to talk with him.”

That was a good sign. Out of all the interviews that day, it was good to know he wanted to speak with me. The easy thing for him to do would have been to use “astronaut speak” for 10 minutes and then go home. I was the last interview of the day.

As I prepared to speak with Wilmore and Williams, I didn’t want to ask the obvious questions they’d answered many times earlier. If you ask, “What was it like to spend nine months in space when you were expecting only a short trip?” you’re going to get a boring answer. Similarly, although the end of the mission was highly politicized by the Trump White House, two veteran NASA astronauts were not going to step on that landmine.

I wanted to go back to the root cause of all this, the problems with Starliner’s propulsion system. My strategy was simply to ask what it was like to fly inside the spacecraft. Williams gave me some solid answers. But Wilmore had actually been at the controls. And he apparently had been holding in one heck of a story for nine months. Because when I asked about the launch, and then what it was like to fly Starliner, he took off without much prompting.

Butch Wilmore has flown on four spacecraft: the Space Shuttle, Soyuz, Starliner, and Crew Dragon. Credit: NASA/Emmett Given

I don’t know exactly why Wilmore shared so much with me. We are not particularly close and have never interacted outside of an official NASA setting. But he knows of my work and interest in spaceflight. Not everyone at the space agency appreciates my journalism, but they know I’m deeply interested in what they’re doing. They know I care about NASA and Johnson Space Center. So I asked Wilmore a few smart questions, and he must have trusted that I would tell his story honestly and accurately, and with appropriate context. I certainly tried my best. After a quarter of a century, I have learned well that the most sensational stories are best told without sensationalism.

Even as we spoke, I knew the interview with Wilmore was one of the best I had ever done. A great scientist once told me that the best feeling in the world is making some little discovery in a lab and for a short time knowing something about the natural world that no one else knows. The equivalent, for me, is doing an interview and knowing I’ve got gold. And for a little while, before sharing it with the world, I’ve got that little piece of gold all to myself.

But I’ll tell you what. It’s even more fun to let the cat out of the bag. The best part about journalism is not collecting information. It’s sharing that information with the world.

Eric Berger is the senior space editor at Ars Technica, covering everything from astronomy to private space to NASA policy, and author of two books: Liftoff, about the rise of SpaceX; and Reentry, on the development of the Falcon 9 rocket and Dragon. A certified meteorologist, Eric lives in Houston.