“Go generate a bridge and jump off it”: How video pros are navigating AI

In 2016, the legendary Japanese filmmaker Hayao Miyazaki was shown a bizarre AI-generated video of a misshapen human body crawling across a floor.

Miyazaki declared himself “utterly disgusted” by the technology demo, which he considered an “insult to life itself.”

“If you really want to make creepy stuff, you can go ahead and do it,” Miyazaki said. “I would never wish to incorporate this technology into my work at all.”

Many fans interpreted Miyazaki’s remarks as rejecting AI-generated video in general. So they didn’t like it when, in October 2024, filmmaker PJ Accetturo used AI tools to create a fake trailer for a live-action version of Miyazaki’s animated classic Princess Mononoke. The trailer earned him 22 million views on X. It also earned him hundreds of insults and death threats.

“Go generate a bridge and jump off of it,” said one of the funnier retorts. Another urged Accetturo to “throw your computer in a river and beg God’s forgiveness.”

Someone tweeted that Miyazaki “should be allowed to legally hunt and kill this man for sport.”

PJ Accetturo is a director and founder of Genre AI, an AI ad agency. Credit: PJ Accetturo

The development of AI image and video generation models has been controversial, to say the least. Artists have accused AI companies of stealing their work to build tools that put people out of a job. Using AI tools openly is stigmatized in many circles, as Accetturo learned the hard way.

But as these models have improved, they have sped up workflows and afforded new opportunities for artistic expression. Artists without AI expertise might soon find themselves losing work.

Over the last few weeks, I’ve spoken to nine actors, directors, and creators about how they are navigating these tricky waters. Here’s what they told me.

Actors have emerged as a powerful force against AI. In 2023, SAG-AFTRA, the Hollywood actors’ union, had its longest-ever strike, partly to establish more protections for actors against AI replicas.

Actors have lobbied to regulate AI in their industry and beyond. One actor I talked with, Erik Passoja, has testified before the California Legislature in favor of several bills, including one that would strengthen protections against pornographic deepfakes. SAG-AFTRA endorsed SB 1047, an AI safety bill regulating frontier models. The union also organized against the proposed moratorium on state AI bills.

A recent flashpoint came in September, when Deadline Hollywood reported that talent agencies were interested in signing “AI actress” Tilly Norwood.

Actors weren’t happy. Emily Blunt told Variety, “This is really, really scary. Come on agencies, don’t do that.”

Natasha Lyonne, star of Russian Doll, posted on an Instagram Story: “Any talent agency that engages in this should be boycotted by all guilds. Deeply misguided & totally disturbed.”

The backlash was partly specific to Tilly Norwood—Lyonne is no AI skeptic, having cofounded an AI studio—but it also reflects a set of concerns around AI common to many in Hollywood and beyond.

Here’s how SAG-AFTRA explained its position:

Tilly Norwood is not an actor, it’s a character generated by a computer program that was trained on the work of countless professional performers — without permission or compensation. It has no life experience to draw from, no emotion and, from what we’ve seen, audiences aren’t interested in watching computer-generated content untethered from the human experience. It doesn’t solve any “problem” — it creates the problem of using stolen performances to put actors out of work, jeopardizing performer livelihoods and devaluing human artistry.

This statement reflects three broad criticisms that come up over and over in discussions of AI art:

Content theft: Most leading AI video models have been trained on broad swathes of the Internet, including images and films made by artists. In many cases, companies have not asked artists for permission to use this content, nor compensated them. Courts are still working out whether this is fair use under copyright law. But many people I talked to consider AI companies’ training efforts to be theft of artists’ work.

Job loss: If AI tools can make passable video quickly or drastically speed up editing tasks, that potentially takes jobs away from actors or film editors. While past technological advancements have also eliminated jobs—the adoption of digital cameras drastically reduced the number of people cutting physical film—AI could have an even broader impact.

Artistic quality: A lot of people told me they just didn’t think AI-generated content could ever be good art. Tess Dinerstein stars in vertical dramas—episodic programs optimized for viewing on smartphones. She told me that AI is “missing that sort of human connection that you have when you go to a movie theater and you’re sobbing your eyes out because your favorite actor is talking about their dead mom.”

The concern about theft is potentially solvable by changing how models are trained. Around the time Accetturo released the “Princess Mononoke” trailer, he called for generative AI tools to be “ethically trained on licensed datasets.”

Some companies have moved in this direction. For instance, independent filmmaker Gille Klabin told me he “feels pretty good” using Adobe products because the company trains its AI models on stock images that it pays royalties for.

But the other two issues, job loss and artistic quality, will be harder to finesse. Many creators (and fans) believe that AI-generated content misses the fundamental point of art, which is to create an emotional connection between creators and viewers.

That point is compelling in theory, but the details can be tricky.

Dinerstein, the vertical drama actress, told me that she’s “not fundamentally against AI”—she admits “it provides a lot of resources to filmmakers” in specialized editing tasks—but she takes a hard stance against it on social media.

“It’s hard to ever explain gray areas on social media,” she said, and she doesn’t want to “come off as hypocritical.”

Even though she doesn’t think that AI poses a risk to her job (“people want to see what I’m up to,” she said), she does fear that people, both fans and vertical drama studios, will make an AI representation of her without her permission. So she has found it easiest to just say, “You know what? Don’t involve me in AI.”

Others see it as a much broader issue. Actress Susan Spano told me it was “an issue for humans, not just actors.”

“This is a world of humans and animals,” she said. “Interaction with humans is what makes it fun. I mean, do we want a world of robots?”

It’s relatively easy for actors to take a firm stance against AI because they inherently do their work in the physical world. But things are more complicated for other Hollywood creatives, such as directors, writers, and film editors. AI tools can genuinely make them more productive, and they’re at risk of losing work if they don’t stay on the cutting edge.

So the non-actors I talked to took a range of approaches to AI. Some still reject it. Others have used the tools reluctantly and tried to keep their heads down. Still others have openly embraced the technology.

Kavan Cardoza is a director and AI filmmaker. Credit: Phantom X

Take Kavan Cardoza, for example. He worked as a music video director and photographer for close to a decade before getting his break into filmmaking with AI.

After the image model Midjourney was first released in 2022, Cardoza started playing around with image generation and later video generation. Eventually, he “started making a bunch of fake movie trailers” for existing movies and franchises. In December 2024, he made a fan film in the Batman universe that “exploded on the Internet,” before Warner Bros. took it down for copyright infringement.

Cardoza acknowledges that he re-created actors in former Batman movies “without their permission.” But he insists he wasn’t “trying to be malicious or whatever. It was truly just a fan film.”

Whereas Accetturo received death threats, the response to Cardoza’s fan film was quite positive.

“Every other major studio started contacting me,” Cardoza said. He set up an AI studio, Phantom X, with several of his close friends. Phantom X started by making ads (where AI video is catching on quickest), but Cardoza wanted to refocus on films.

In June, Cardoza made a short film called Echo Hunter, a blend of Blade Runner and The Matrix. Some shots look clearly AI-generated, but Cardoza used motion-capture technology from Runway to put the faces of real actors into his AI-generated world. Overall, the piece pretty much hangs together.

Cardoza wanted to work with real actors because their artistic choices can help elevate the script he’s written: “There’s a lot more levels of creativity to it.” But he needed SAG-AFTRA’s approval to make a film that blends AI techniques with the likenesses of SAG-AFTRA actors. To get it, he had to promise not to reuse the actors’ likenesses in other films.

In Cardoza’s view, AI is “giving voices to creators that otherwise never would have had the voice.”

But Cardoza isn’t wedded to AI. When an interviewer asked him whether he’d make a non-AI film if required to, he responded, “Oh, 100 percent.” Cardoza added that if he had the budget to do it now, “I’d probably still shoot it all live action.”

He acknowledged to me that there will be losers in the transition (“there’s always going to be changes,” he said), but he compares the rise of AI to past technological developments in filmmaking, like the rise of visual effects. That shift created new jobs making visual effects digitally but reduced the number of jobs building elaborate physical sets.

Cardoza expressed interest in limiting job losses. In another interview, he said that for his film project, “we want to make sure we include as many people as possible,” not just actors, but sound designers, script editors, and other specialized roles.

But he believes that eventually, AI will get good enough to do everyone’s job. “Like I say with tech, it’s never about if, it’s just when.”

Accetturo’s entry into AI was similar. He told me that he worked for 15 years as a filmmaker, “mostly as a commercial director and former documentary director.” During the pandemic, he “raised millions” for an animated TV series, but it got caught up in development hell.

AI gave him a new chance at success. Over the summer of 2024, he started playing around with AI video tools. He realized that he was in the sweet spot to take advantage of AI: experienced enough to make something good, but not so established that he was risking his reputation. After Google released Veo 3 in May 2025, Accetturo released a fake medicine ad that went viral. His studio now produces ads for prominent companies like Oracle and Popeyes.

Accetturo says the backlash against him has subsided: “It truly is nothing compared to what it was.” And he says he’s committed to working on AI: “Everyone understands that it’s the future.”

Between the anti- and pro-AI extremes, there are a lot of editors and artists quietly using AI tools without disclosing it. Unsurprisingly, it’s difficult to find people who will speak about this on the record.

“A lot of people want plausible deniability right now,” according to Ryan Hayden, a Hollywood talent agent. “There is backlash about it.”

But if editors don’t use AI tools, they risk becoming obsolete. Hayden says that he knows a lot of people in the editing field trying to master AI because “there’s gonna be a massive cut” in the total number of editors. Those who know AI might survive.

As one comedy writer involved in an AI project told Wired, “We wanted to be at the table and not on the menu.”

Clandestine AI usage extends into the upper reaches of the industry. Hayden knows an editor who works with a major director who has directed $100 million films. “He’s already using AI, sometimes without people knowing.”

Some artists feel morally conflicted but don’t think they can effectively resist. Vinny Dellay, a storyboard artist who has worked on Marvel films and Super Bowl ads, released a video detailing his views on the ethics of using AI as a working artist. Dellay said that he agrees that “AI being trained off of art found on the Internet without getting permission from the artist, it may not be fair, it may not be honest.” But refusing to use AI products won’t stop their general adoption. Believing otherwise is “just being delusional.”

Instead, Dellay said that the right course is to “adapt like cockroaches after a nuclear war.” If they’re lucky, using AI in storyboarding workflows might even “let a storyboard artist pump out twice the boards in half the time without questioning all your life’s choices at 3 am.”

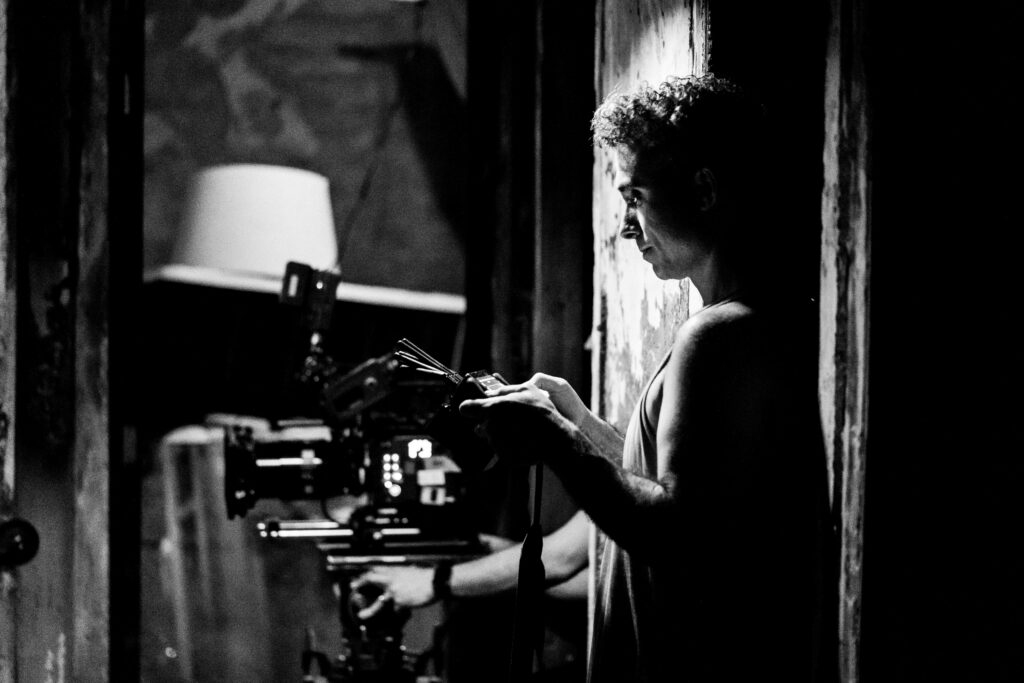

Gille Klabin is an independent writer, director, and visual effects artist. Credit: Gille Klabin

Gille Klabin is an indie director and filmmaker currently working on a feature called Weekend at the End of the World.

As an independent filmmaker, Klabin can’t afford to hire many people. There are many labor-intensive tasks—like making a pitch deck for his film—that he’d otherwise have to do himself. An AI tool “essentially just liberates us to get more done and have more time back in our life.”

But he’s careful to stick to his own moral lines. Any time he mentioned using an AI tool during our interview, he’d explain why he thought that was an appropriate choice. He said he was fine with AI use “as long as you’re using it ethically in the sense that you’re not copying somebody’s work and using it for your own.”

Drawing these lines can be difficult, however. Hayden, the talent agent, told me that as AI tools make low-budget films look better, it gets harder to make high-budget films, which employ the most people at the highest wage levels.

If anything, Klabin’s AI uptake is limited more by the current capabilities of AI models. Klabin is an experienced visual effects artist, and he finds AI products to generally be “not really good enough to be used in a final project.”

He gave me a concrete example. Rotoscoping is a process in which you trace out the subject of the shot so you can edit the background independently. It’s very labor-intensive (one has to edit every frame individually), so Klabin has tried using Runway’s AI-driven rotoscoping. While it can make for a decent first pass, the result is just too messy to use in a final product.

Klabin sent me this GIF of a series of rotoscoped frames from his upcoming movie. While the model does a decent job of identifying the people in the frame, its boundaries aren’t consistent from frame to frame. The result is noisy.

Current AI tools are full of these small glitches, so Klabin only uses them for tasks that audiences don’t see (like creating a movie pitch deck) or in contexts where he can clean up the result afterward.

Stephen Robles reviews Apple products on YouTube and other platforms. He uses AI in some parts of the editing process, such as removing silences or transcribing audio, but doesn’t see it as disruptive to his career.

Stephen Robles is a YouTuber, podcaster, and creator covering tech, particularly Apple. Credit: Stephen Robles

“I am betting on the audience wanting to trust creators, wanting to see authenticity,” he told me. AI video tools don’t really help him with that and can’t replace the reputation he’s sought to build.

Recently, he experimented with using ChatGPT to edit a video thumbnail (the image used to advertise a video). He got a couple of negative reactions about his use of AI, so he said he “might slow down a little bit” with that experimentation.

Robles didn’t seem as concerned about AI models stealing from creators like him. When I asked him how he felt about Google training on his data, he told me that “YouTube provides me enough benefit that I don’t think too much about that.”

Professional thumbnail artist Antioch Hwang has a similarly pragmatic view toward using AI. Some channels he works with have audiences that are “very sensitive to AI images.” Even using “an AI upscaler to fix up the edges” can provoke strong negative reactions. For those channels, he’s “very wary” about using AI.

Antioch Hwang is a YouTube thumbnail artist. Credit: Antioch Creative

But for most channels he works with, he’s fine using AI, at least for technical tasks. “I think there’s now been a big shift in the public perception of these AI image generation tools,” he told me. “People are now welcoming them into their workflow.”

He’s still careful with his AI use, though, because he thinks that having human artistry helps in the YouTube ecosystem. “If everyone has all the [AI] tools, then how do you really stand out?” he said.

Recently, top creators have started using more rough-looking thumbnails for their videos. AI has made polished thumbnails too easy to create, so top creators are using what Hwang would call “poorly made thumbnails” to help videos stand out.

Hwang told me something surprising: even as AI makes it easier for creators to make thumbnails themselves, business has never been better for thumbnail artists, even at the lower end. He said that demand has soared because “AI as a whole has lowered the barriers for content creation, and now there’s more creators flooding in.”

Still, Hwang doesn’t expect the good times to last forever. “I don’t see AI completely taking over for the next three-ish years. That’s my estimated timeline.”

Everyone I talked to had different answers to when—if ever—AI would meaningfully disrupt their part of the industry.

Some, like Hwang, were pessimistic. Actor Erik Passoja told me he thought the big movie studios—like Warner Bros. or Paramount—would be gone in three to five years.

But others were more optimistic. Tess Dinerstein, the vertical drama actor, said, “I don’t think that verticals are ever going to go fully AI.” Even if it becomes technologically feasible, she argued, “that just doesn’t seem to be what the people want.”

Gille Klabin, the independent filmmaker, thought there would always be a place for high-quality human films. If someone’s work is “fundamentally derivative,” then they are at risk. But he thinks the best human-created work will still stand out. “I don’t know how AI could possibly replace the borderline divine element of consciousness,” he said.

The people who were most bullish on AI were, if anything, the least optimistic about their own career prospects. “I think at a certain point it won’t matter,” Kavan Cardoza told me. “It’ll be that anyone on the planet can just type in some sentences” to generate full, high-quality videos.

This might explain why Accetturo has become something of an AI evangelist; his newsletter tries to teach other filmmakers how to adapt to the coming AI revolution.

AI “is a tsunami that is gonna wipe out everyone,” he told me. “So I’m handing out surfboards, teaching people how to surf. Do with it what you will.”

Kai Williams is a reporter for Understanding AI, a Substack newsletter founded by Ars Technica alum Timothy B. Lee. His work is supported by a Tarbell Fellowship. Subscribe to Understanding AI to get more from Tim and Kai.
