Grok was finally updated to stop undressing women and children, X Safety says

Grok scrutiny intensifies

California’s AG will investigate whether Musk’s nudifying bot broke US laws.

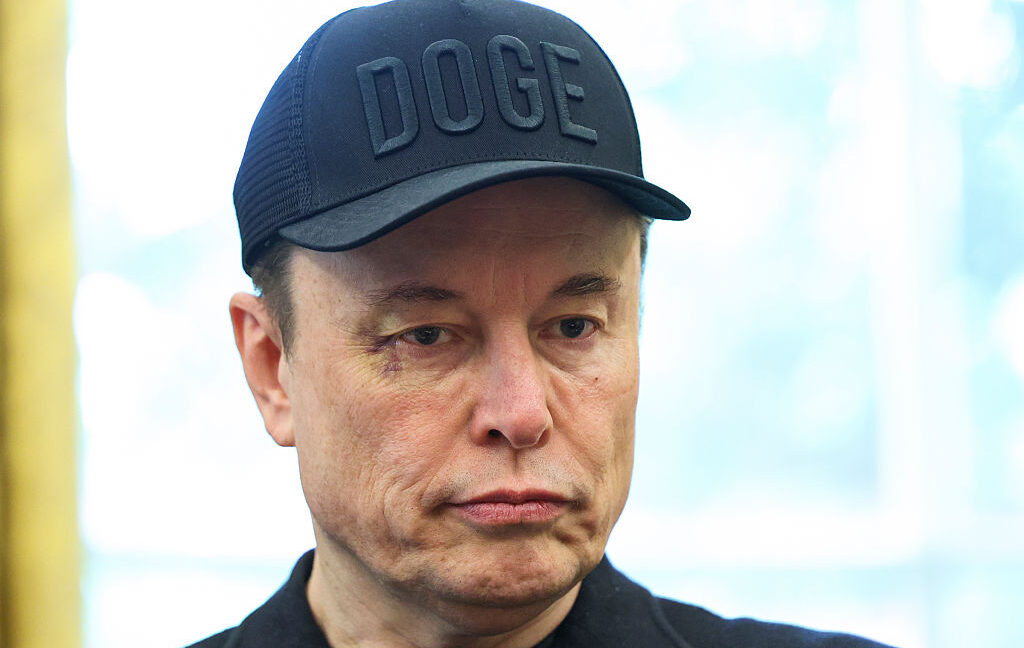

(EDITORS NOTE: Image contains profanity) An unofficially-installed poster picturing Elon Musk with the tagline, “Who the [expletive] would want to use social media with a built-in child abuse tool?” is displayed on a bus shelter on January 13, 2026 in London, England. Credit: Leon Neal / Staff | Getty Images News

Late Wednesday, X Safety confirmed that Grok was tweaked to stop undressing images of people without their consent.

“We have implemented technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing such as bikinis,” X Safety said. “This restriction applies to all users, including paid subscribers.”

The update includes restricting “image creation and the ability to edit images via the Grok account on the X platform,” which “are now only available to paid subscribers. This adds an extra layer of protection by helping to ensure that individuals who attempt to abuse the Grok account to violate the law or our policies can be held accountable,” X Safety said.

Additionally, X will “geoblock the ability of all users to generate images of real people in bikinis, underwear, and similar attire via the Grok account and in Grok in X in those jurisdictions where it’s illegal,” X Safety said.

X’s update comes after weeks of Grok being used to generate sexualized images of women and children, which finally prompted California Attorney General Rob Bonta to investigate whether Grok’s outputs break any US laws.

In a press release Wednesday, Bonta said that “xAI appears to be facilitating the large-scale production of deepfake nonconsensual intimate images that are being used to harass women and girls across the Internet, including via the social media platform X.”

Notably, Bonta appears to be as concerned about Grok’s standalone app and website being used to generate harmful images without consent as he is about the outputs on X.

Before today, X had not restricted the Grok app or website. X had only threatened to permanently suspend users who are editing images to undress women and children if the outputs are deemed “illegal content.” It also restricted the Grok chatbot on X from responding to prompts to undress images, but anyone with a Premium subscription could bypass that restriction, as could any free X user who clicked on the “edit” button on any image appearing on the social platform.

On Wednesday, prior to X Safety’s update, Elon Musk seemed to defend Grok’s outputs as benign, insisting that none of the reported images have fully undressed any minors, as if that would be the only problematic output.

“I [sic] not aware of any naked underage images generated by Grok,” Musk said in an X post. “Literally zero.”

Musk’s statement seems to ignore that researchers found harmful images where users specifically “requested minors be put in erotic positions and that sexual fluids be depicted on their bodies.” It also ignores that X previously voluntarily signed commitments to remove any intimate image abuse from its platform, recognizing as recently as 2024 that even partially nude images that victims wouldn’t want publicized could be harmful.

In the US, the Department of Justice considers “any visual depiction of sexually explicit conduct involving a person less than 18 years old” to be child pornography, which is also known as child sexual abuse material (CSAM).

The National Center for Missing and Exploited Children, which fields reports of CSAM found on X, told Ars that “technology companies have a responsibility to prevent their tools from being used to sexualize or exploit children.”

While many of Grok’s outputs may not be deemed CSAM, in normalizing the sexualization of children, Grok harms minors, advocates have warned. And in addition to finding images advertised as supposedly Grok-generated CSAM on the dark web, the Internet Watch Foundation noted that bad actors are using images edited by Grok to create even more extreme kinds of AI CSAM.

Grok faces probes in the US and UK

Bonta pointed to news reports documenting Grok’s worst outputs as the trigger of his probe.

“The avalanche of reports detailing the non-consensual, sexually explicit material that xAI has produced and posted online in recent weeks is shocking,” Bonta said. “This material, which depicts women and children in nude and sexually explicit situations, has been used to harass people across the Internet.”

Acting out of deep concern for victims and potential Grok targets, Bonta vowed to “determine whether and how xAI violated the law” and “use all the tools at my disposal to keep California’s residents safe.”

Bonta’s announcement came after the United Kingdom seemed to declare a victory after probing Grok over possible violations of the UK’s Online Safety Act, announcing that the harmful outputs had stopped.

That wasn’t the case, as The Verge once again pointed out; it conducted quick and easy tests using selfies of reporters to conclude that nothing had changed to prevent the outputs.

However, it seems that when Musk updated Grok to respond to some requests to undress images by refusing the prompts, it was enough for UK Prime Minister Keir Starmer to claim X had moved to comply with the law, Reuters reported.

Ars connected with a European nonprofit, AI Forensics, which tested to confirm that X had blocked some outputs in the UK. A spokesperson confirmed that their testing did not include probing whether harmful outputs could be generated using X’s edit button.

AI Forensics plans to conduct further testing, but its spokesperson noted it would be unethical to test the “edit” button functionality that The Verge confirmed still works.

Last year, the Stanford Institute for Human-Centered Artificial Intelligence published research showing that Congress could “move the needle on model safety” by allowing tech companies to “rigorously test their generative models without fear of prosecution” for any CSAM red-teaming, Tech Policy Press reported. But until there is such a safe harbor carved out, it seems more likely that newly released AI tools could carry risks like those of Grok.

It’s possible that Grok’s outputs, if left unchecked, could have eventually put X in violation of the Take It Down Act, which comes into force in May and requires platforms to quickly remove AI revenge porn. Ashley St. Clair, the mother of one of Musk’s children, has described Grok outputs using her images as revenge porn.

While the UK probe continues, Bonta has not yet made clear which laws he suspects X may be violating in the US. However, he emphasized that images depicting victims in “minimal clothing” crossed a line, as did images putting children in sexual positions.

As the California probe heats up, Bonta pushed X to take more actions to restrict Grok’s outputs, which one AI researcher suggested to Ars could be done with a few simple updates.

“I urge xAI to take immediate action to ensure this goes no further,” Bonta said. “We have zero tolerance for the AI-based creation and dissemination of nonconsensual intimate images or of child sexual abuse material.”

Seeming to take Bonta’s threat seriously, X Safety vowed to “remain committed to making X a safe platform for everyone and continue to have zero tolerance for any forms of child sexual exploitation, non-consensual nudity, and unwanted sexual content.”

This story was updated on January 14 to note X Safety’s updates.
