The first stars may not have been as uniformly massive as we thought

Collapsing gas clouds in the early universe may have formed lower-mass stars as well.

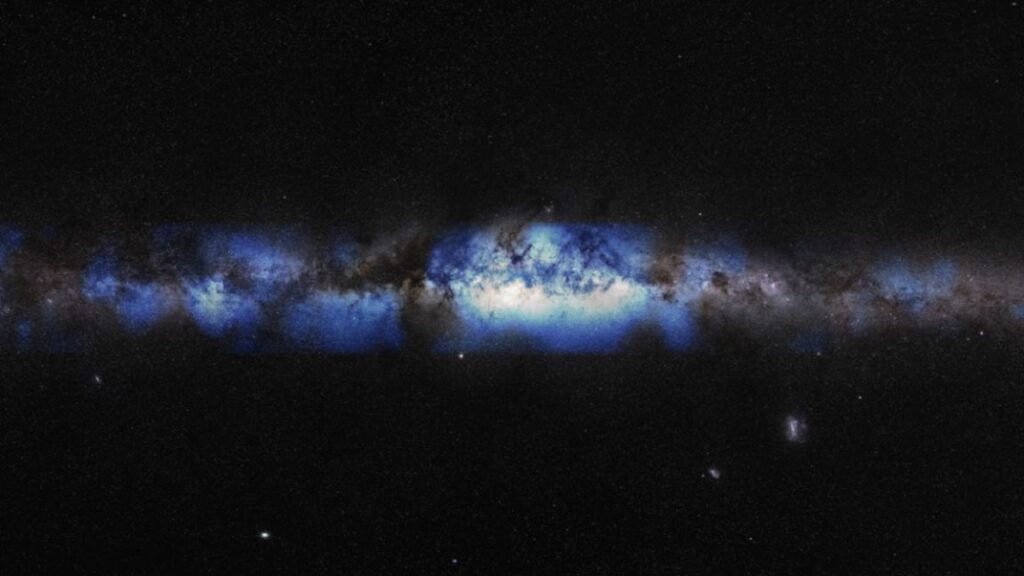

Stars form in the universe from massive clouds of gas. Credit: European Southern Observatory, CC BY-SA

For decades, astronomers have wondered what the very first stars in the universe were like. These stars formed new chemical elements, which enriched the universe and allowed the next generations of stars to form the first planets.

The first stars were initially composed of pure hydrogen and helium, and they were massive—hundreds to thousands of times the mass of the Sun and millions of times more luminous. Their short lives ended in enormous explosions called supernovae, so they had neither the time nor the raw materials to form planets, and they should no longer exist for astronomers to observe.

At least that’s what we thought.

Two studies published in 2025 suggest that collapsing gas clouds in the early universe may have formed lower-mass stars as well. One study uses a new astrophysical computer simulation that models how turbulence within the cloud causes it to fragment into smaller, star-forming clumps. The other study, an independent laboratory experiment, demonstrates how molecular hydrogen, a molecule essential for star formation, may have formed earlier and in larger abundances. The process involves a catalyst that may surprise chemistry teachers.

As an astronomer who studies star and planet formation and their dependence on chemical processes, I am excited at the possibility that chemistry in the first 50 million to 100 million years after the Big Bang may have been more active than we expected.

These findings suggest that the second generation of stars—the oldest stars we can currently observe and possibly the hosts of the first planets—may have formed earlier than astronomers thought.

Primordial star formation

Video illustration of the star and planet formation process. Credit: Space Telescope Science Institute.

Stars form when massive clouds of hydrogen many light-years across collapse under their own gravity. The collapse continues until a luminous sphere surrounds a dense core that is hot enough to sustain nuclear fusion.

Nuclear fusion happens when two or more atomic nuclei collide with enough energy to fuse together. This process creates a new element and releases an incredible amount of energy, which heats the stellar core. In the first stars, hydrogen nuclei fused together to create helium.

The new star shines because its surface is hot, but the energy fueling that luminosity percolates up from its core. The luminosity of a star is its total energy output in the form of light. The star’s brightness is the small fraction of that luminosity that we directly observe.
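The distinction between luminosity and brightness can be made concrete with the inverse-square law: the light a star emits spreads over a sphere, so the flux reaching an observer falls with the square of the distance. The sketch below is illustrative only; the function name and the constants are assumptions introduced here, not values taken from the studies discussed in this article.

```python
import math

# Approximate reference values (assumed for illustration).
L_SUN_WATTS = 3.828e26   # nominal luminosity of the Sun
AU_METERS = 1.496e11     # average Earth-Sun distance

def observed_flux(luminosity_watts, distance_meters):
    """Flux in W/m^2 received at a given distance from a star.

    Assumes the star radiates equally in all directions and that
    nothing absorbs the light along the way.
    """
    if distance_meters <= 0:
        raise ValueError("distance must be positive")
    sphere_area = 4.0 * math.pi * distance_meters ** 2
    return luminosity_watts / sphere_area

if __name__ == "__main__":
    at_earth = observed_flux(L_SUN_WATTS, AU_METERS)
    twice_as_far = observed_flux(L_SUN_WATTS, 2 * AU_METERS)
    print(f"Sunlight at Earth's distance: {at_earth:.0f} W/m^2")
    print(f"At twice that distance: {twice_as_far:.0f} W/m^2")
```

Doubling the distance cuts the observed flux to one quarter, which is why a low-luminosity star far away is so hard to detect.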

This process, in which stars form heavier elements by nuclear fusion, is called stellar nucleosynthesis. It continues in stars after they form as their physical properties slowly change. The more massive stars can produce heavier elements such as carbon, oxygen, and nitrogen, all the way up to iron, in a sequence of fusion reactions that ends in a supernova explosion.

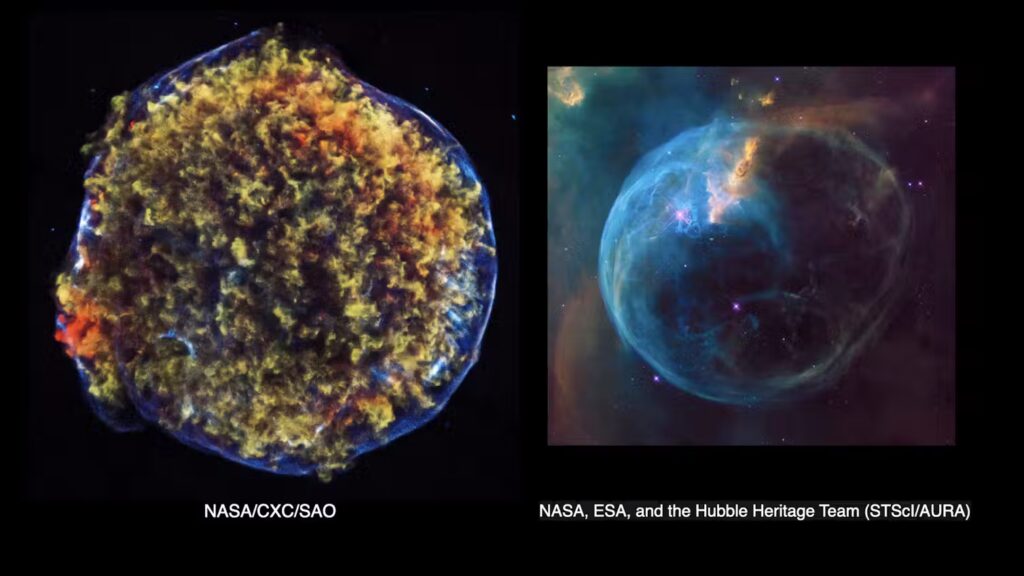

Supernovae can create even heavier elements, completing the periodic table of elements. Lower-mass stars like the Sun, with their cooler cores, can sustain fusion only up to carbon. As they exhaust the hydrogen and helium in their cores, nuclear fusion stops, and the stars slowly evaporate.

The remnant of a high-mass star supernova explosion imaged by the Chandra X-ray Observatory, left, and the remnant of a low-mass star evaporating in a blue bubble, right. Credit: CC BY 4.0

High-mass stars have high pressure and temperature in their cores, so they burn bright and use up their gaseous fuel quickly. They last only a few million years, whereas low-mass stars—those less than two times the Sun’s mass—evolve much more slowly, with lifetimes of billions or even trillions of years.

If the earliest stars were all high-mass stars, then they would have exploded long ago. But if low-mass stars also formed in the early universe, they may still exist for us to observe.
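To see why mass matters so much for survival, consider a common textbook approximation in which a star's hydrogen-burning lifetime scales roughly as its mass to the power of -2.5, normalized to about 10 billion years for the Sun. The sketch below applies that rule of thumb. It is an assumption introduced here for illustration, not the method of either study, and it breaks down for the most massive stars, whose lifetimes level off at a few million years.

```python
# Rough lifetime estimate: t ~ 10 billion years * (M / M_sun)^(-2.5).
# This scaling is a textbook approximation, assumed here for illustration.

SUN_LIFETIME_YEARS = 1.0e10    # approximate hydrogen-burning lifetime of the Sun
UNIVERSE_AGE_YEARS = 1.38e10   # approximate age of the universe

def main_sequence_lifetime_years(mass_solar):
    """Estimate a star's hydrogen-burning lifetime from its mass in solar masses."""
    if mass_solar <= 0:
        raise ValueError("mass must be positive")
    return SUN_LIFETIME_YEARS * mass_solar ** -2.5

def could_survive_to_today(mass_solar):
    """True if a star born shortly after the Big Bang could still be burning hydrogen."""
    return main_sequence_lifetime_years(mass_solar) > UNIVERSE_AGE_YEARS

if __name__ == "__main__":
    for mass in (0.1, 0.8, 1.0, 10.0):
        years = main_sequence_lifetime_years(mass)
        print(f"{mass:5.1f} solar masses: ~{years:.1e} years, "
              f"survives to today: {could_survive_to_today(mass)}")
```

Under this approximation, only stars somewhat less massive than the Sun would outlast the current age of the universe, which is why surviving first-generation stars, if any exist, must be low-mass.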

Chemistry that cools clouds

The first star-forming gas clouds, called protostellar clouds, were warm—roughly room temperature. Warm gas has internal pressure that pushes outward against the inward force of gravity trying to collapse the cloud. A hot air balloon stays inflated by the same principle. If the flame heating the air at the base of the balloon stops, the air inside cools, and the balloon begins to collapse.

Stars form when clouds of dust collapse inward and condense around a small, bright, dense core. Credit: NASA, ESA, CSA, and STScI, J. DePasquale (STScI), CC BY-ND

Only the most massive protostellar clouds with the most gravity could overcome the thermal pressure and eventually collapse. In this scenario, the first stars were all massive.

The only way to form the lower-mass stars we see today is for the protostellar clouds to cool. Gas in space cools by radiation, which transforms thermal energy into light that carries the energy out of the cloud. Hydrogen and helium atoms are not efficient radiators below several thousand degrees, but molecular hydrogen, H₂, is great at cooling gas at low temperatures.

When energized, H₂ emits infrared light, which cools the gas and lowers the internal pressure. That process would make gravitational collapse more likely in lower-mass clouds.
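The balance between thermal pressure and gravity is often summarized by the Jeans mass, the minimum mass a uniform gas cloud needs in order to collapse. The sketch below uses one standard textbook form of that formula. The gas density and the mean particle weight are hypothetical values chosen for illustration, not numbers from the studies described here.

```python
import math

# Physical constants in SI units.
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.381e-23      # Boltzmann constant, J/K
M_H = 1.673e-27      # mass of a hydrogen atom, kg
M_SUN = 1.989e30     # mass of the Sun, kg

def jeans_mass_solar(temperature_k, number_density_cm3, mu=1.22):
    """Jeans mass in solar masses for a uniform cloud.

    mu is the mean mass per particle in units of the hydrogen mass;
    1.22 is an assumed value for neutral hydrogen-helium gas.
    """
    if temperature_k <= 0 or number_density_cm3 <= 0:
        raise ValueError("temperature and density must be positive")
    particle_mass = mu * M_H
    density = particle_mass * number_density_cm3 * 1e6   # kg/m^3
    thermal_term = (5.0 * K_B * temperature_k / (G * particle_mass)) ** 1.5
    density_term = math.sqrt(3.0 / (4.0 * math.pi * density))
    return thermal_term * density_term / M_SUN

if __name__ == "__main__":
    density = 1.0e4   # particles per cubic centimeter (hypothetical)
    for temperature in (300.0, 100.0, 50.0):
        mass = jeans_mass_solar(temperature, density)
        print(f"T = {temperature:5.0f} K: Jeans mass ~ {mass:.0f} solar masses")
```

Because the Jeans mass scales as temperature to the power 1.5, cooling a cloud by a factor of four lowers the collapse threshold by a factor of eight at fixed density.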

For decades, astronomers have reasoned that a low abundance of H₂ early on resulted in hotter clouds whose internal pressure would be too high for them to collapse easily into stars. They concluded that only clouds with enormous masses, and therefore stronger gravity, would collapse, leaving more massive stars.

Helium hydride

In a July 2025 journal article, physicist Florian Grussie and collaborators at the Max Planck Institute for Nuclear Physics demonstrated that the first molecule to form in the universe, helium hydride, HeH⁺, could have been more abundant in the early universe than previously thought. They used a computer model and conducted a laboratory experiment to verify this result.

Helium hydride? In high school science you probably learned that helium is a noble gas, meaning it does not react with other atoms to form molecules or chemical compounds. As it turns out, it does—but only under the extremely sparse and dark conditions of the early universe, before the first stars formed.

HeH⁺ reacts with hydrogen deuteride—HD, which is one normal hydrogen atom bonded to a heavier deuterium atom—to form H₂. In the process, HeH⁺ also acts as a coolant and releases heat in the form of light. So the high abundance of both molecular coolants earlier on may have allowed smaller clouds to cool faster and collapse to form lower-mass stars.

Gas flow also affects stellar initial masses

In another study, published in July 2025, astrophysicist Ke-Jung Chen led a research group at the Academia Sinica Institute of Astronomy and Astrophysics that used a detailed computer simulation to model how gas in the early universe may have flowed.

The team’s model demonstrated that turbulence, or irregular motion, in giant collapsing gas clouds can form lower-mass cloud fragments from which lower-mass stars condense.

The study concluded that turbulence may have allowed these early gas clouds to form stars with masses ranging from about the mass of the Sun up to 40 times the Sun’s mass.

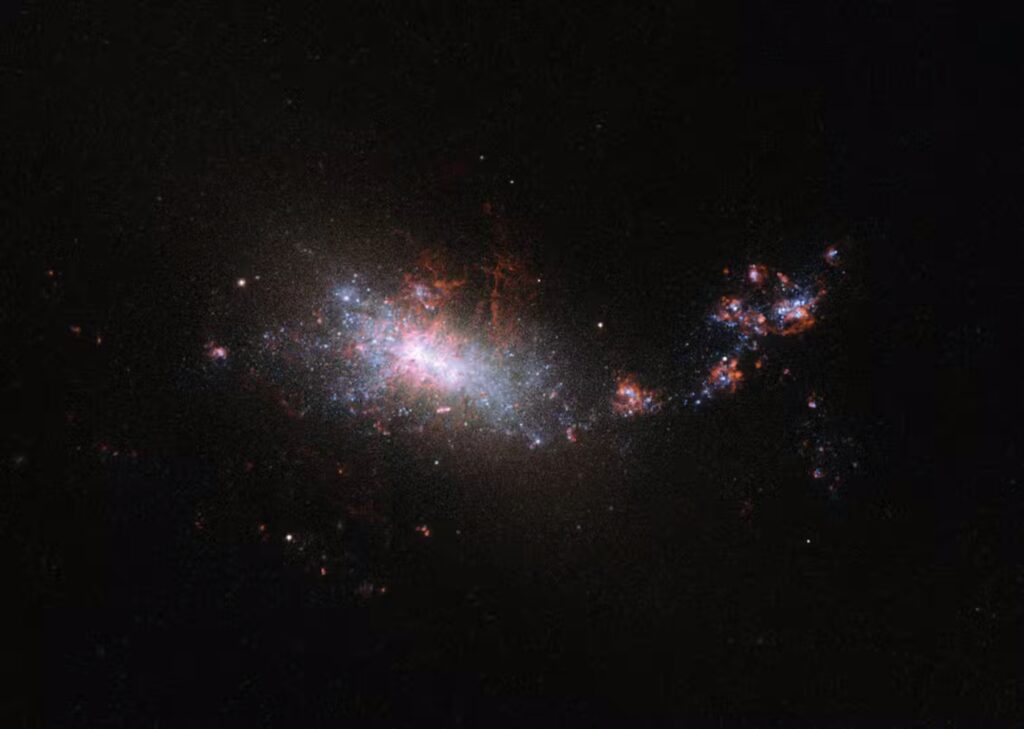

The galaxy NGC 1140 is small and contains large amounts of primordial gas with far fewer elements heavier than hydrogen and helium than are present in our Sun. This composition makes it similar to the intensely star-forming galaxies found in the early universe. These early universe galaxies were the building blocks for large galaxies such as the Milky Way. Credit: ESA/Hubble & NASA, CC BY-ND

The two new studies both predict that the first population of stars could have included low-mass stars. Now, it is up to us observational astronomers to find them.

This is no easy task. Low-mass stars have low luminosities, so they are extremely faint. Several observational studies have recently reported possible detections, but none are yet confirmed with high confidence. If they are out there, though, we will find them eventually.

Luke Keller is a professor of physics and astronomy at Ithaca College.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

