Leaker reveals which Pixels are vulnerable to Cellebrite phone hacking

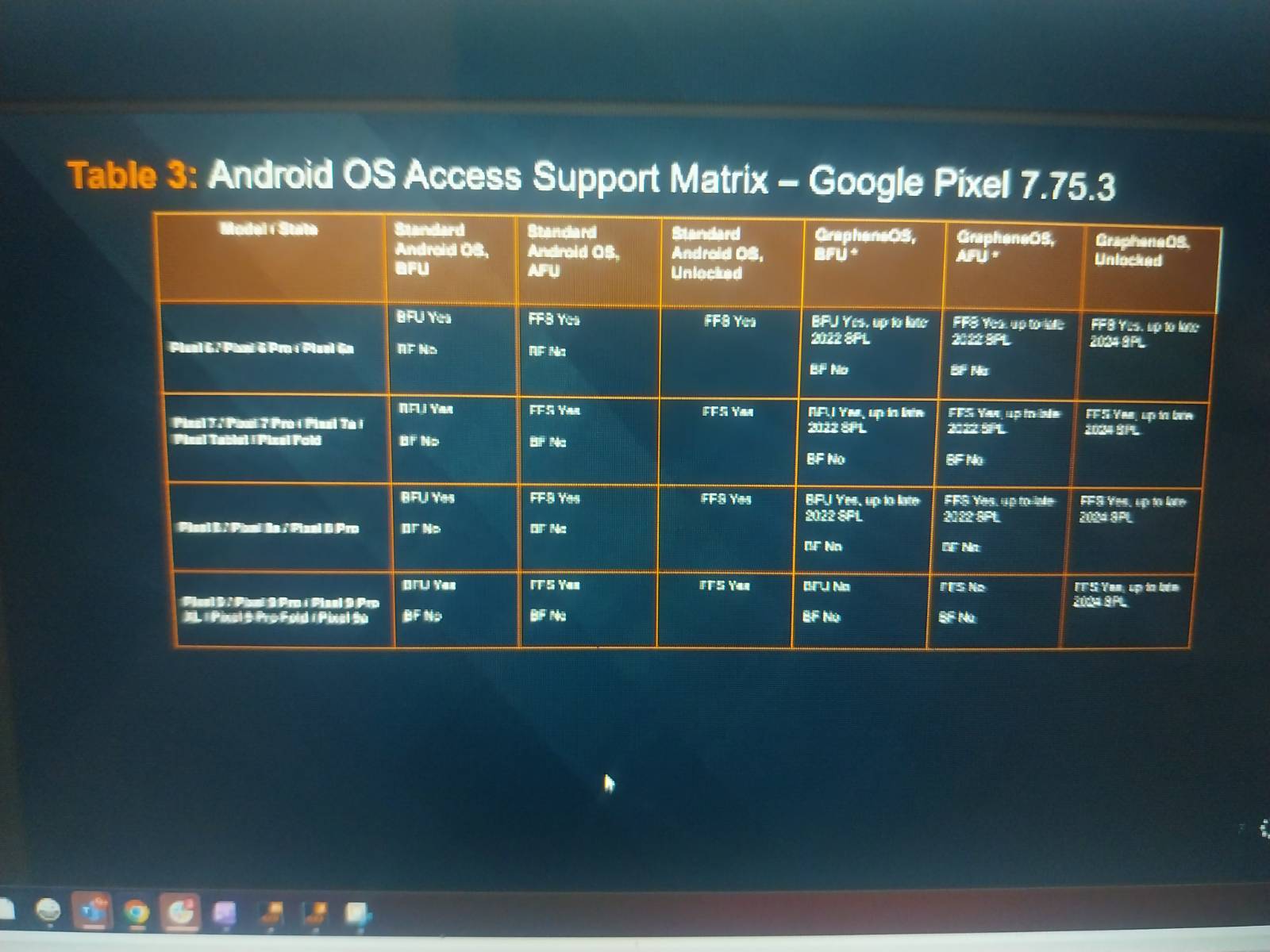

This blurry screenshot appears to list which Pixel phones Cellebrite devices can hack. Credit: rogueFed

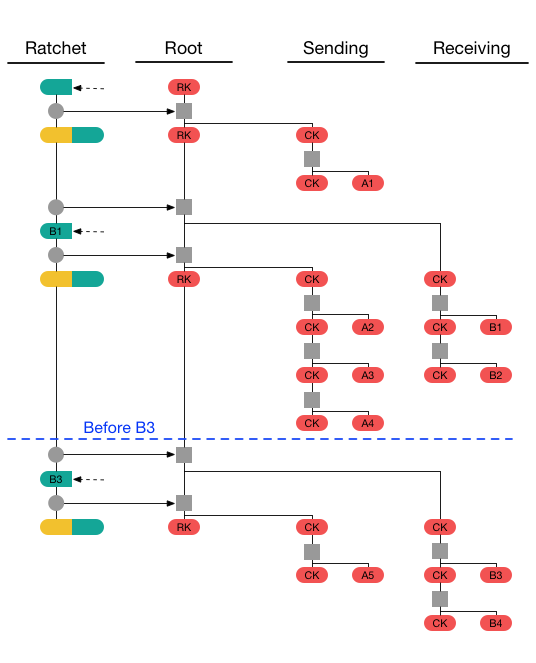

At least according to Cellebrite, GrapheneOS is more secure than what Google offers out of the box. The company is telling law enforcement in these briefings that its technology can extract data from Pixel 6, 7, 8, and 9 phones running stock software, whether the phone is unlocked, in the after first unlock (AFU) state, or in the before first unlock (BFU) state. However, it cannot brute-force passcodes to enable full control of a device. The leaker also notes law enforcement is still unable to copy an eSIM from Pixel devices. Notably, the Pixel 10 series is moving away from physical SIM cards.

For those same phones running GrapheneOS, police can expect to have a much harder time. The Cellebrite table says that Pixels with GrapheneOS are only accessible when running software from before late 2022—both the Pixel 8 and Pixel 9 were launched after that. Phones in both BFU and AFU states are safe from Cellebrite on updated builds, and as of late 2024, even a fully unlocked GrapheneOS device is immune from having its data copied. An unlocked phone can be inspected in plenty of other ways, but data extraction in this case is limited to what the user can access.

The original leaker claims to have dialed into two calls so far without detection. However, rogueFed also called out the meeting organizer by name (the second screenshot, which we are not reposting). Odds are that Cellebrite will be screening meeting attendees more carefully now.

We’ve reached out to Google to inquire about why a custom ROM created by volunteers is more resistant to industrial phone hacking than the official Pixel OS. We’ll update this article if Google has anything to say.
