Rocket Report: A good week for Blue Origin; Italy wants its own launch capability

Blue Origin is getting ready to test-fire its first fully integrated New Glenn rocket in Florida.

Blue Origin’s first fully integrated New Glenn rocket rolls out to its launch pad at Cape Canaveral Space Force Station, Florida. Credit: Blue Origin

Welcome to Edition 7.21 of the Rocket Report! We’re publishing the Rocket Report a little early this week due to the Thanksgiving holiday in the United States. We don’t expect any Thanksgiving rocket launches this year, but still, there’s a lot to cover from the last six days. It seems like we’ve seen the last flight of the year by SpaceX’s Starship rocket. A NASA filing with the Federal Aviation Administration requests approval to fly an aircraft near the reentry corridor over the Indian Ocean for the next Starship test flight. The application suggests the target launch date is January 11, 2025.

As always, we welcome reader submissions. If you don’t want to miss an issue, please subscribe using the box below (the form will not appear on AMP-enabled versions of the site). Each report will include information on small-, medium-, and heavy-lift rockets as well as a quick look ahead at the next three launches on the calendar.

Another grim first in Ukraine. For the first time in warfare, Russia launched an intermediate-range ballistic missile (IRBM) against a target in Ukraine, Ars reports. This attack on November 21 followed an announcement from Russian President Vladimir Putin earlier the same week that the country would change its policy for employing nuclear weapons in conflict. The IRBM, named Oreshnik, is the longest-range weapon ever used in combat in Europe, and could be refitted to carry nuclear warheads on future strikes.

Putin’s rationale … Putin says his ballistic missile attack on Ukraine is a warning to the West after the US and UK governments approved Ukraine’s use of Western-supplied ATACMS tactical ballistic missiles and Storm Shadow cruise missiles against targets on Russian territory. The Russian leader said his forces could attack facilities in Western countries that supply weapons for Ukraine to use on Russian territory, continuing a troubling escalatory ladder in the bloody war in Eastern Europe. Interestingly, this attack has another rocket connection. The target was apparently a factory in Dnipro that, not long ago, produced booster stages for Northrop Grumman’s Antares rocket.

Blue Origin hops again. Blue Origin launched its ninth suborbital human spaceflight over West Texas on November 22, CollectSpace reports. Six passengers rode the company’s suborbital New Shepard booster to the edge of space, reaching an altitude of 347,661 feet (65.8 miles or 106 kilometers), about 3.7 miles (6 km) above the 100-kilometer Kármán line that serves as the internationally accepted border between Earth’s atmosphere and outer space. The pressurized capsule carrying the six passengers separated from the booster, giving them a taste of microgravity before parachuting back to Earth.

Dreams fulfilled … These suborbital flights are getting to be more routine, and may seem insignificant compared to Blue Origin’s grander ambitions of flying a heavy-lift rocket and building a human-rated Moon lander. However, we’ll likely have to wait many years before truly routine access to orbital flights becomes available for anyone other than professional astronauts or multimillionaires. This means tickets to ride on suborbital spaceships from Blue Origin or Virgin Galactic are currently the only ways to get to space, however briefly, for something on the order of $1 million or less. That puts the cost of one of these seats within reach for hundreds of thousands of people, and within the budgets of research institutions and non-profits to fund a flight for a scientist, student, or a member of the general public. The passengers on the November 22 flight included Emily Calandrelli, known online as “The Space Gal,” an engineer, Netflix host, and STEM education advocate who became the 100th woman to fly to space. (submitted by Ken the Bin)

Rocket Lab flies twice in one day. Two Electron rockets took flight Sunday, one from New Zealand’s Mahia Peninsula and the other from Wallops Island, Virginia, making Rocket Lab the first commercial space company to launch from two different hemispheres in a 24-hour period, Payload reports. One of the missions was the third of five launches for the French Internet of Things company Kinéis, which is building a satellite constellation. The other launch was an Electron modified to act as a suborbital technology demonstrator for hypersonic research. Rocket Lab did not disclose the customer, but speculation is focused on the defense contractor Leidos, which signed a four-launch deal with Rocket Lab last year.

Building cadence … SpaceX first launched two Falcon 9 rockets in 24 hours in 2021. This year, the company launched three Falcon 9s in a single day from pads at Cape Canaveral Space Force Station, Florida, and Vandenberg Space Force Base, California. Rocket Lab has now launched 14 Electron rockets this year, more than any Western company other than SpaceX. “Two successful launches less than 24 hours apart from pads in different hemispheres. That’s unprecedented capability in the small launch market and one we’re immensely proud to deliver at Rocket Lab,” said Peter Beck, the company’s founder and CEO. (submitted by Ken the Bin)

Italy to reopen offshore launch site. An Italian-run space center located in Kenya will once again host rocket launches from an offshore launch platform, European Spaceflight reports. The Italian minister for enterprises, Adolfo Urso, recently announced that the country decided to move ahead with plans to again launch rockets from the Luigi Broglio Space Center near Malindi, Kenya. “The idea is to give a new, more ambitious mission to this base and use it for the launch of low-orbit microsatellites,” Urso said.

Decades of dormancy … Between 1967 and 1988, the Italian government and NASA partnered to launch nine US-made Scout rockets from the Broglio Space Center to place small satellites into orbit. The rockets lifted off from the San Marco platform, a converted oil platform in equatorial waters off the Kenyan coast. Italian officials have not said what rocket might be used once the San Marco platform is reactivated, but Italy is the leading contributor on the Vega C rocket, a solid-fueled launcher somewhat larger than the Scout. Italy will manage the reactivation of the space center, which has remained in service as a satellite tracking station, under the country’s Mattei Plan, an initiative aimed at fostering stronger economic partnerships with African nations. (submitted by Ken the Bin)

SpaceX flies same rocket twice in two weeks. Less than 14 days after its previous flight, a Falcon 9 booster took off again from Florida’s Space Coast early Monday to haul 23 more Starlink internet satellites into orbit, Spaceflight Now reports. The booster, numbered B1080 in SpaceX’s fleet of reusable rockets, made its 13th trip to space before landing on SpaceX’s floating drone ship in the Atlantic Ocean. The launch marked a turnaround of 13 days, 12 hours, and 44 minutes from this booster’s previous launch November 11, also with a batch of Starlink satellites. The previous record turnaround time between flights of the same Falcon 9 booster was 21 days.

400 and still going … SpaceX’s launch prior to this one was on Saturday night, when a Falcon 9 carried a set of Starlinks aloft from Vandenberg Space Force Base, California. The flight Saturday night was the 400th launch of a Falcon 9 rocket since 2010, and SpaceX’s 100th launch from the West Coast. (submitted by Ken the Bin)

Chinese firm launches upgraded rocket. Chinese launch startup LandSpace put two satellites into orbit late Tuesday with the first launch of an improved version of the Zhuque-2 rocket, Space News reports. The enhanced rocket, named the Zhuque-2E, replaces vernier steering thrusters with a thrust vector control system on the second stage engine, saving roughly 880 pounds (400 kilograms) in mass. The Zhuque-2E rocket is capable of placing a payload of up to 8,800 pounds (4,000 kilograms) into a polar Sun-synchronous orbit, according to LandSpace.

LandSpace in the lead … Founded in 2015, LandSpace is a leader among China’s crop of quasi-commercial launch startups. The company hasn’t launched as often as some of its competitors, but it became the first launch operator in the world to successfully reach orbit with a methane/liquid oxygen (methalox) rocket last year. Now, LandSpace has improved on its design to create the Zhuque-2E rocket, which also has a large niobium alloy nozzle extension on the second stage engine for reduced weight. LandSpace also claims the Zhuque-2E is China’s first rocket to use fully supercooled propellant loading, similar to the way SpaceX loads densified propellants into its rockets to achieve higher performance. (submitted by Ken the Bin)

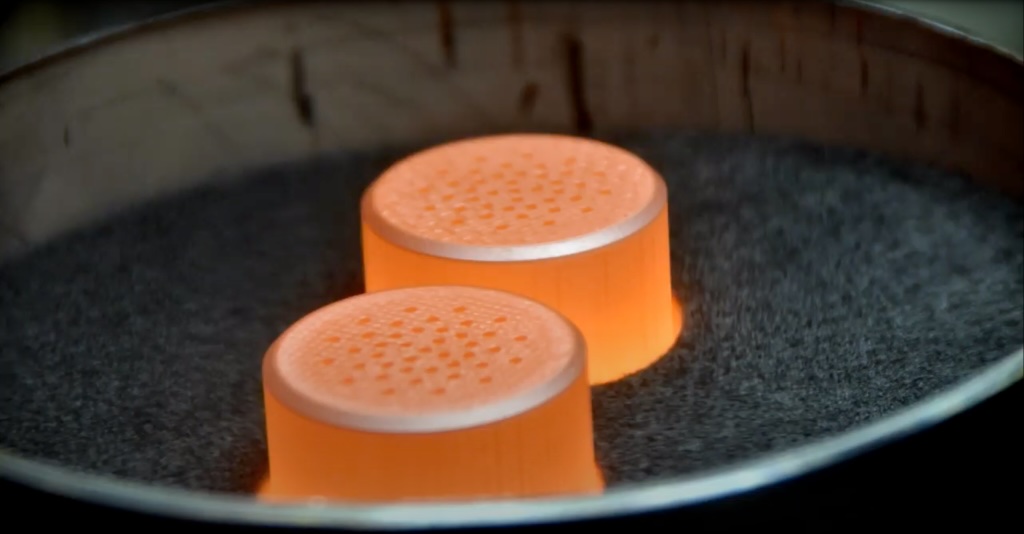

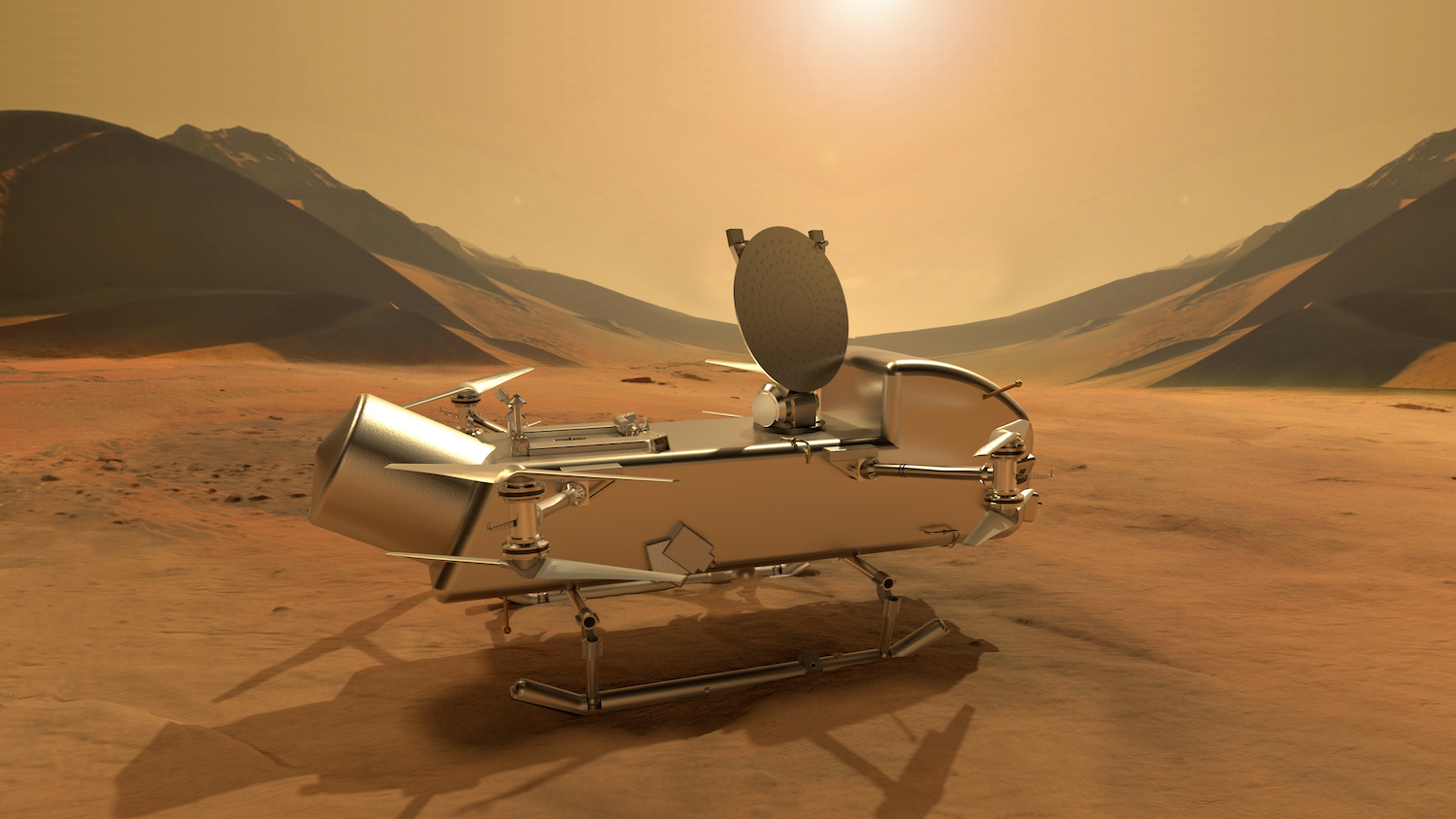

NASA taps Falcon Heavy for another big launch. A little more than a month after SpaceX launched NASA’s flagship Europa Clipper mission on a Falcon Heavy rocket, the space agency announced its next big interplanetary probe will also launch on a Falcon Heavy, Ars reports. What’s more, the Dragonfly mission the Falcon Heavy will launch in 2028 is powered by a plutonium power source. This will be the first time SpaceX launches a rocket with nuclear materials onboard, requiring an additional layer of safety certification by NASA. The agency’s most recent nuclear-powered spacecraft have all launched on United Launch Alliance Atlas V rockets, which are nearing retirement.

The details … Dragonfly is one of the most exciting robotic missions NASA has ever developed. The mission is to send an automated rotorcraft to explore Saturn’s largest moon, Titan, where Dragonfly will soar through a soupy atmosphere in search of organic molecules, the building blocks of life. It’s a hefty vehicle, about the size of a compact car, and much larger than NASA’s Ingenuity Mars helicopter. The launch period opens July 5, 2028, to allow Dragonfly to reach Titan in 2034. NASA is paying SpaceX $256.6 million to launch the mission on a Falcon Heavy. (submitted by Ken the Bin)

New Glenn is back on the pad. Blue Origin has raised its fully stacked New Glenn rocket on the launch pad at Cape Canaveral Space Force Station ahead of pre-launch testing, Florida Today reports. The last time this new 322-foot-tall (98-meter) rocket was visible to the public eye was in March. Since then, Blue Origin has been preparing the rocket for its inaugural launch, which could yet happen before the end of the year. Blue Origin has not announced a target launch date.

But first, more tests … Blue Origin erected the New Glenn rocket on the launch pad earlier this year for ground tests, but this is the first time a flight-ready (or close to it) New Glenn has been spotted on the pad. This time, the first stage booster has its full complement of seven methane-fueled BE-4 engines. Before the first flight, Blue Origin plans to test-fire the seven BE-4 engines on the pad and conduct one or more propellant loading tests to exercise the launch team, the rocket, and ground systems before launch day.

Second Ariane 6 incoming. ArianeGroup has confirmed that the first and second stages for the second Ariane 6 flight have begun the transatlantic voyage from Europe to French Guiana aboard the sail-assisted transport ship Canopée, European Spaceflight reports. The second Ariane 6 launch, previously targeted before the end of this year, has now been delayed to no earlier than February 2025, according to Arianespace, the rocket’s commercial operator. This follows a mostly successful debut launch in July.

An important passenger … While the first Ariane 6 launch carried a cluster of small experimental satellites, the second Ariane 6 rocket will carry a critical spy satellite into orbit for the French armed forces. Shipping the core elements of the second Ariane 6 to the launch site in Kourou, French Guiana, is a significant step in the launch campaign. Once in Kourou, the stages will be connected together and rolled out to the launch pad, where technicians will install two strap-on solid rocket boosters and the payload fairing containing France’s CSO-3 military satellite.

Next three launches

Nov. 29: Soyuz-2.1a | Kondor-FKA 2 | Vostochny Cosmodrome, Russia | 21:50 UTC

Nov. 30: Falcon 9 | Starlink 6-65 | Cape Canaveral Space Force Station, Florida | 05:00 UTC

Nov. 30: Falcon 9 | NROL-126 | Vandenberg Space Force Base, California | 08:08 UTC
