The ISS is nearing retirement, so why is NASA still gung-ho about Starliner?

NASA is doing all it can to ensure Boeing doesn’t abandon the Starliner program.

Boeing’s Starliner spacecraft atop a United Launch Alliance Atlas V rocket before a test flight in 2019. Credit: NASA/Joel Kowsky

After so many delays, difficulties, and disappointments, you might be inclined to think that NASA wants to wash its hands of Boeing’s troubled Starliner spacecraft.

But that’s not the case.

The manager of NASA’s commercial crew program, Steve Stich, told reporters Thursday that Boeing and its propulsion supplier, Aerojet Rocketdyne, are moving forward with several changes to the Starliner spacecraft to resolve problems that bedeviled a test flight to the International Space Station (ISS) last year. These changes include new seals to plug helium leaks and thermal shunts and barriers to keep the spacecraft’s thrusters from overheating.

Boeing, now more than $2 billion in the hole to pay for all Starliner’s delays, is still more than a year away from executing on its multibillion-dollar NASA contract and beginning crew rotation flights to the ISS. But NASA officials say Boeing remains committed to Starliner.

“We really are working toward a flight as soon as early next year with Starliner, and then ultimately, our goal is to get into crew rotation flights with Starliner,” Stich said. “And those would start no earlier than the second crew rotation slot at the end of next year.”

That would be 11 years after Boeing officials anticipated the spacecraft would enter operational service for NASA when they announced the Starliner program in 2010.

Decision point

The next Starliner flight will probably transport only cargo to the ISS, not astronauts. But NASA hasn’t made any final decisions on the matter. The agency has enough crew rotation missions booked to fly on SpaceX’s Dragon spacecraft to cover the space station’s needs until well into 2027 or 2028.

“I think there are a lot of advantages, I would say, to fly the cargo flight first,” Stich said. “If we really look at the history of Starliner and Dragon, I think Dragon benefited a lot from having earlier [cargo] flights before the crew contract was let for the space station.”

One drawback of flying a Starliner cargo mission is that it will use up one of United Launch Alliance’s remaining Atlas V rockets currently earmarked for a future Starliner crew launch. That means Boeing would have to turn to another rocket to accomplish its full contract with NASA, which covers up to six crew missions.

While Boeing says Starliner can launch on several different rockets, the difficulty of adapting the spacecraft to a new launch vehicle, such as ULA’s Vulcan, shouldn’t be overlooked. Early in Starliner’s development, Boeing and ULA had to overcome an issue with unexpected aerodynamic loads discovered during wind tunnel testing. This prompted engineers to design an aerodynamic extension, or skirt, to go underneath the Starliner spacecraft on top of its Atlas V launcher.

Starliner has suffered delays from the beginning. A NASA budget crunch in the early 2010s pushed back the program about two years, but the rest of the schedule slips have largely fallen on Boeing’s shoulders. The setbacks included a fuel leak and fire during a critical ground test, parachute problems, a redesign to accommodate unanticipated aerodynamic forces, and a computer timing error that cut short Starliner’s first attempt to reach the space station in 2019.

This all culminated in the program’s first test flight with astronauts last summer. But after running into helium leaks and overheating thrusters, the mission ended with Starliner returning to Earth empty, while the spacecraft’s two crew members remained on the International Space Station until they could come home on a SpaceX Dragon spacecraft this year.

The outcome was a stinging disappointment for Boeing. Going into last year’s crew test flight, Boeing appeared to be on the cusp of joining SpaceX and finally earning revenue as one of NASA’s certified crew transportation providers for the ISS.

For several months, Boeing officials were strikingly silent on Starliner’s future. The company declined to release any statements on its long-term commitment to the program, and a Boeing program manager unexpectedly withdrew from a NASA press conference marking the end of the Starliner test flight last September.

Kelly Ortberg, Boeing’s president and CEO, testifies before the Senate Commerce, Science, and Transportation Committee on April 2, 2025, in Washington, DC. Credit: Win McNamee/Getty Images

But that has changed in the last few months. Kelly Ortberg, who took over as Boeing’s CEO last year, told CNBC in April that the company planned “more missions on Starliner” and said work to overcome the thruster issues the spacecraft encountered last year is “pretty straightforward.”

“We know what the problems were, and we’re making corrective actions,” Ortberg said. “So, we hope to do a few more flights here in the coming years.”

Task and purpose

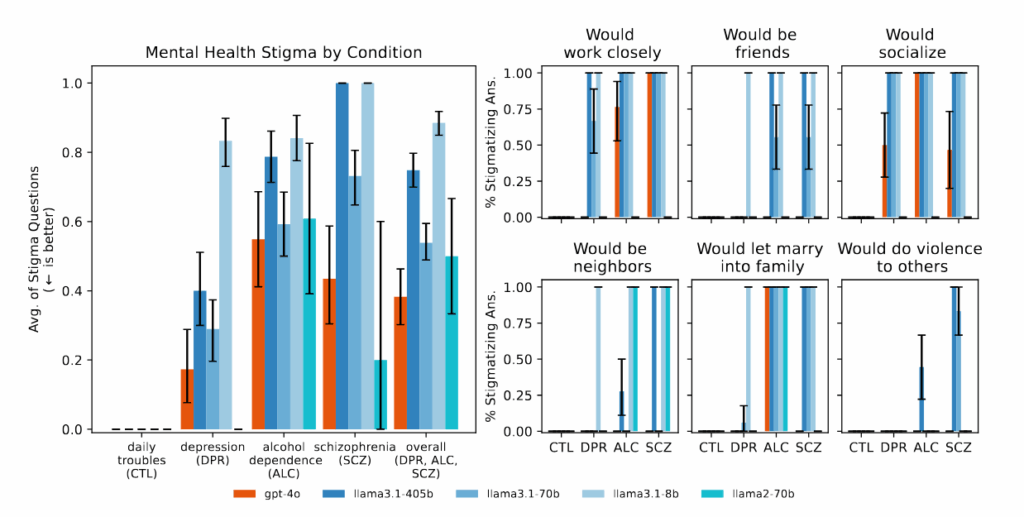

NASA officials remain eager for Starliner to begin these regular crew rotation flights, even as its sole destination, the ISS, enters its sunset years. NASA and its international partners plan to decommission and scuttle the space station in 2030 and 2031, more than 30 years after the launch of the lab’s first module.

NASA’s desire to bring Starliner online has nothing to do with any performance issues with SpaceX, the agency’s other commercial crew provider. SpaceX has met or exceeded all of NASA’s expectations in 11 long-duration flights to the ISS with its Dragon spacecraft. Since its first crew flight in 2020, SpaceX has established a reliable cadence with Dragon missions serving NASA and private customers.

However, there are some questions about SpaceX’s long-term plans for the Dragon program, and those concerns didn’t suddenly spring up last month, when SpaceX founder and chief executive Elon Musk suggested on X that SpaceX would “immediately” begin winding down the Dragon program. The suggestion came as Musk and President Donald Trump exchanged threats and insults on social media in a dramatic falling out between the one-time political allies, months into Trump’s second term in the White House.

In a subsequent post on X, Musk quickly went back on his threat to soon end the Dragon program. SpaceX officials participating in NASA press conferences in the last few weeks have emphasized the company’s dedication to human spaceflight without specifically mentioning Dragon. SpaceX’s fifth and final human-rated Dragon capsule debuted last month on its first flight to the ISS.

“I would say we’re pretty committed to the space business,” said Bill Gerstenmaier, SpaceX’s vice president of build and flight reliability. “We’re committed to flying humans in space and doing it safely.”

There’s a kernel of truth behind Musk’s threat to decommission Dragon. Musk has long had an appetite to move on from the Dragon program and pivot more of SpaceX’s resources to Starship, the company’s massive next-generation rocket. Starship is envisioned by SpaceX as an eventual replacement for Dragon and the Falcon 9 launcher.

A high-resolution commercial Earth-imaging satellite owned by Maxar captured this view of the International Space Station on June 7, 2024, with Boeing’s Starliner capsule docked at the lab’s forward port (lower right). Credit: Satellite image (c) 2024 Maxar Technologies

NASA hopes commercial space stations can take over for the ISS after its retirement, but there’s no guarantee SpaceX will still be flying Dragon in the 2030s. This injects some uncertainty into plans for commercial space stations.

One possible scenario is that, sometime in the 2030s, the only options for transporting people to and from commercial space stations in low-Earth orbit could be Starliner and Starship. We’ll discuss the rationale for this scenario later in this story.

While the cost of a seat on SpaceX’s Dragon is well known, there’s low confidence in the price of a ticket to low-Earth orbit on Starliner or Starship. What’s more, some of the commercial outposts may be incompatible with Starship because of its enormous mass, which could overcome the ability of a relatively modest space station to control its orientation. NASA identified this as an issue with its Gateway mini-space station in development to fly in orbit around the Moon.

It’s impossible to predict when SpaceX will pull the plug on Dragon. The same goes for Boeing and Starliner. But NASA and other customers are interested in buying more Dragon flights.

If SpaceX can prove Starship is safe enough to launch and land with people onboard, Dragon’s days will be numbered. But Starship is likely at least several years from being human-rated for flights to and from low-Earth orbit. NASA’s contract with SpaceX to develop a version of Starship to land astronauts on the Moon won’t require the ship to be certified for launches and landings on Earth. In some ways, certifying Starship for crewed launches and landings on Earth is a more onerous challenge than the Moon mission because of the perils of reentering Earth’s atmosphere, which Starship won’t need to endure for a lunar landing, and the ship’s lack of a launch abort system.

Once operational, Starship is designed to carry significantly more cargo and people than Falcon 9 and Dragon, but it’s anyone’s guess when it might be ready for crew missions. Until then, if SpaceX wants to have an operational human spaceflight program, it’s Dragon or bust.

For the International Space Station, it’s also Dragon or bust, at least until Boeing gets going. SpaceX’s capsules are the only US vehicles certified to fly to space with NASA astronauts, and any more US government payments to Russia to launch Americans on Soyuz missions would be politically unpalatable.

From the start of the commercial crew program, NASA sought two contractors providing their own means of flying to and from the ISS. The main argument for this “dissimilar redundancy” was to ensure NASA could still access the space station in the event of a launch failure or some other technical problem. The same argument could be made now that NASA needs two options to avoid being at the whim of one company’s decisions.

Stretching out

All of this is unfolding as the Trump administration seeks to slash funding for the International Space Station, cut back on the lab’s research program, and transition to “minimal safe operations” for the final few years of its life. Essentially, the space station would limp to the finish line, perhaps with a smaller crew than the seven-person staff living and working in it today.

At the end of this month, SpaceX is scheduled to launch the Crew-11 mission—the 12th Dragon crew mission for NASA and the 11th fully operational crew ferry flight to the ISS. Two Americans, one Japanese astronaut, and a Russian cosmonaut will ride to the station for a stay of at least six months.

NASA’s existing contract with SpaceX covers four more long-duration flights to the space station with Dragon, including the mission set to go on July 31.

One way NASA can save money in the space station’s budget is by simply flying fewer missions. Stich said Thursday that NASA is working with SpaceX to extend the Dragon spacecraft’s mission duration limit from seven months to eight months. The recertification of Dragon for a longer mission could be finished later this year, allowing NASA to extend Crew-11’s stay at the ISS if needed. Over time, longer stays mean fewer crew rotation missions.

“We can extend the mission in real-time as needed as we better understand… the appropriations process and what that means relative to the overall station manifest,” Stich said.

Boeing’s Starliner spacecraft backs away from the International Space Station on September 6, 2024, without its crew. Credit: NASA

Boeing’s fixed-price contract with NASA originally covered an unpiloted test flight of Starliner, a demonstration flight with astronauts, and then up to six operational missions delivering crews to the ISS. But NASA has only given Boeing the “Authority To Proceed” for three of its six potential operational Starliner missions. This milestone, known as ATP, is a decision point in contracting lingo where the customer—in this case, NASA—places a firm order for a deliverable. NASA has previously said it awards these task orders about two to three years prior to a mission’s launch.

If NASA opts to go to eight-month missions on the ISS with Dragon and Starliner, the agency’s firm orders for three Boeing missions and four more SpaceX crew flights would cover the agency’s needs into early 2030, not long before the final crew will depart the space station.
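The arithmetic behind that estimate can be sketched in a few lines of Python. This is only a rough check, not NASA's manifest planning: it assumes Crew-11 launches on July 31, 2025 (the year is inferred from context), that every crew stays exactly eight months, and that missions fly back to back with no handover overlap or gaps. The function names are made up for illustration.

```python
from datetime import date


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months, clamping the day to 28 so it is always valid."""
    total = start.year * 12 + (start.month - 1) + months
    year, month_index = divmod(total, 12)
    return date(year, month_index + 1, min(start.day, 28))


def coverage_end(first_launch: date, missions: int, months_each: int) -> date:
    """Date the last mission would end if missions run back to back (an assumption)."""
    return add_months(first_launch, missions * months_each)


# Figures from the article: four more SpaceX flights (including Crew-11)
# plus three firm Boeing orders, each lasting eight months.
# Assumption: Crew-11 launches on July 31, 2025.
end = coverage_end(date(2025, 7, 31), missions=4 + 3, months_each=8)
print(end)  # 2030-03-28
```

Seven missions at eight months apiece add up to 56 months, which lands in late March 2030 under these simplified assumptions, consistent with the "early 2030" figure above.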

Stich said NASA officials are examining their options. These include whether NASA should book more crew missions with SpaceX, authorize Boeing to prepare for additional Starliner flights beyond the first three, or order no more flights at all.

“As we better understand the budget and better understand what’s in front of us, we’re working through that,” Stich said. “It’s really too early to speculate how many flights we’ll fly with each provider, SpaceX and Boeing.”

Planning for the 2030s

NASA officials also have an eye on what happens after 2030. The agency has partnered with commercial teams led by Axiom, Blue Origin, and Voyager Technologies on plans for privately owned space stations in low-Earth orbit to replace some of the research capabilities lost with the end of the ISS program.

The conventional wisdom goes that these new orbiting outposts will be less expensive to operate than the ISS, making them more attractive to commercial clients, ranging from pharmaceutical research and in-space manufacturing firms to thrill-seeking private space tourists. NASA, which seeks to maintain a human presence in low-Earth orbit as it turns toward the Moon and Mars, will initially be an anchor customer until the space stations build up more commercial demand.

These new space stations will need a way to receive cargo and visitors. NASA wants to preserve the existing commercial cargo and crew transport systems so they’re available for commercial space stations in the 2030s. Stich said NASA is looking at transferring the rights for any of the agency’s commercial crew missions that don’t fly to the ISS over to the commercial space stations. Of NASA’s two commercial crew providers, Boeing currently looks more likely than SpaceX to have unused capacity on its contract when the ISS program ends.

This is a sweetener NASA could offer to its stable of private space station developers as they face other hurdles in getting their hardware off the ground. It’s unclear whether a business case exists to justify the expense of building and operating a commercial outpost in orbit or if the research and manufacturing customers that could use a private space station might find a cheaper option in robotic flying laboratories, such as those being developed by Varda Space Industries.

A rendering of Voyager’s Starlab space station. Credit: Voyager Space

NASA’s policies haven’t helped matters. Analysts say NASA’s financial support for private space station developers has lagged, and the agency’s fickle decision-making on when to retire the International Space Station has made private fundraising more difficult. It’s not a business for the faint-hearted. For example, Axiom has gone through several rounds of layoffs in the last year.

The White House’s budget request for fiscal year 2026 proposes a 25 percent cut to NASA’s overall budget, but the funding line for commercial space stations is an area marked for an increase. Still, there’s a decent chance that none of the proposed commercial outposts will be flying when the ISS crashes back to Earth. In that event, China would be the owner and operator of the only space station in orbit.

At least at first, transportation costs will be the largest expense for any company that builds and operates a privately owned space station. It costs NASA about 40 percent more each year to ferry astronauts and supplies to and from the ISS than it does to operate the space station. For a smaller commercial outpost with reduced operating costs, the gap will likely be even wider.

If Boeing can right the ship with Starliner and NASA offers a few prepaid crew missions to private space station developers, the money saved could help close someone’s business case and hasten the launch of a new era in commercial spaceflight.
