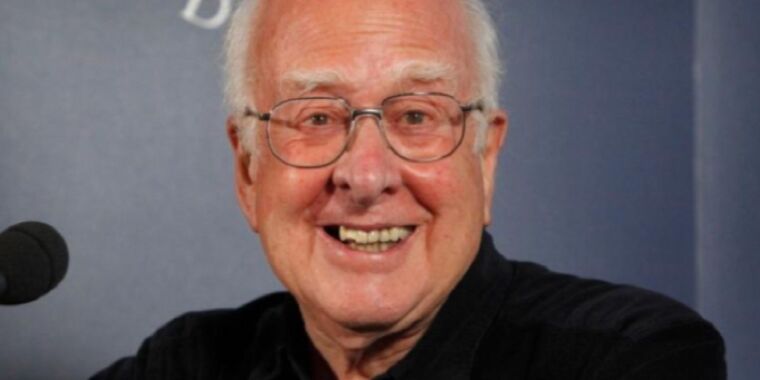

RIP Peter Higgs, who laid foundation for the Higgs boson in the 1960s

A particle physics hero

Higgs shared the 2013 Nobel Prize in Physics with François Englert.

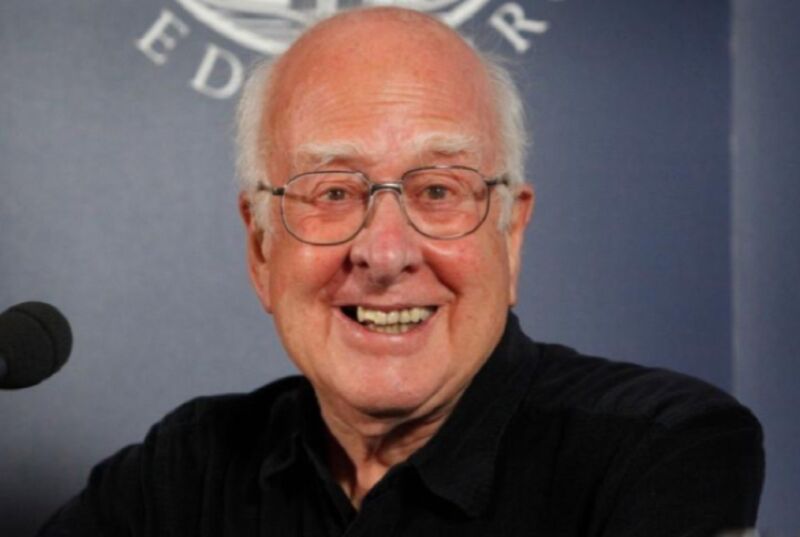

A visibly emotional Peter Higgs was present when CERN announced the discovery of the Higgs boson in July 2012.

University of Edinburgh

Peter Higgs, the shy, somewhat reclusive physicist who won a Nobel Prize for his theoretical work on how the Higgs boson gives elementary particles their mass, has died at the age of 94. According to a statement from the University of Edinburgh, the physicist passed “peacefully at home on Monday 8 April following a short illness.”

“Besides his outstanding contributions to particle physics, Peter was a very special person, a man of rare modesty, a great teacher and someone who explained physics in a very simple and profound way,” Fabiola Gianotti, director general at CERN and former leader of one of the experiments that helped discover the Higgs particle in 2012, told The Guardian. “An important piece of CERN’s history and accomplishments is linked to him. I am very saddened, and I will miss him sorely.”

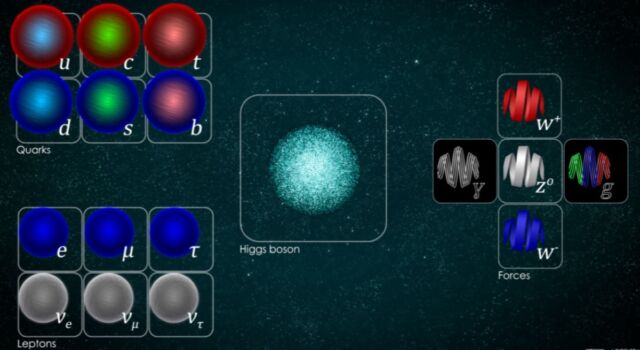

The Higgs boson is a manifestation of the Higgs field, an invisible entity that pervades the Universe. Interactions with the Higgs field give particles their mass: the more strongly a particle interacts with the field, the larger its mass. The Standard Model of Particle Physics describes the fundamental particles that make up all matter, like quarks and electrons, as well as the particles that mediate their interactions through forces like electromagnetism and the weak force. Back in the 1960s, theorists extended the model to incorporate what has become known as the Higgs mechanism, which provides many of the particles with mass. One consequence of the Standard Model's version of the Higgs mechanism is that there should be a particle, the Higgs boson, associated with the Higgs field.

Despite its central role in the function of the Universe, the road to predicting the existence of the Higgs boson was bumpy, as was the process of discovering it. As previously reported, the idea of the Higgs boson grew out of studies of the weak force, which is responsible for some forms of radioactive decay. The weak force only operates at very short distances, which suggests that the particles that mediate it (the W and Z bosons) are likely to be massive. While it was possible to use existing models of physics to explain some of their properties, these models had an awkward feature: they predicted that the W and Z bosons, just like another force-carrying particle, the photon, would be massless.

Schematic of the Standard Model of particle physics.

Over time, theoreticians managed to craft models that included massive W and Z bosons, but they invariably came with a hitch: a massless partner, which would imply a longer-range force. In 1964, however, a series of papers was published in rapid succession that described a way to get rid of this problematic particle. If a certain symmetry in the models was broken, the massless partner would go away, leaving only a massive one.

The first of these papers, by François Englert and Robert Brout, proposed the new model in terms of quantum field theory; the second, by Higgs (then 35), noted that a single quantum of the field would be detectable as a particle. A third paper, by Gerald Guralnik, Carl Richard Hagen, and Tom Kibble, provided an independent validation of the general approach, as did a completely independent derivation by students in the Soviet Union.

“There seemed to be excitement and concern about quantum field theory (the underlying structure of particle physics) back then, with some people beginning to abandon it,” David Kaplan, a physicist at Johns Hopkins University, told Ars. “There were new particles being regularly produced at accelerator experiments without any real theoretical structure to explain them. Spin-1 particles could be written down comfortably (the photon is spin-1) as long as they didn’t have a mass, but the massive versions were confusing to people at the time. A bunch of people, including Higgs, found this quantum field theory trick to give spin-1 particles a mass in a consistent way. These little tricks can turn out to be very useful, but also give the landscape of what is possible.”

“It wasn’t clear at the time how it would be applied in particle physics.”

Ironically, Higgs’ seminal paper was rejected by the European journal Physics Letters. He then added a couple of crucial paragraphs noting that his model also predicted the existence of what we now know as the Higgs boson. He submitted the revised paper to Physical Review Letters in the US, where it was accepted. He examined the properties of the boson in more detail in a 1966 follow-up paper.
