The Splay is a subpar monitor but an exciting portable projector

Since I’m fascinated by new display technologies and by improving image quality, I’ve never been a fan of home projectors. Projectors can’t match the image quality of good TVs and monitors, and they’re pretty needy. Without getting into the specific requirements of different models, you generally want a darker room with a large, blank wall for a projector to look its best. That can be a lot to ask for, especially in small, densely decorated homes like mine.

That said, a projector can be a space-efficient alternative to a big-screen TV or help you watch TV or movies outside. A projector can be versatile when paired with the right space, especially if the “right space” is built into the device itself.

The Splay was crowdfunded in 2021, and its maker, Arovia, describes it as the “first fully collapsible monitor and projector.” In short, it’s a portable projector with an integrated fabric shroud that can serve as a big-screen (24.5 or 34.5 inches diagonally, depending on the model) portable monitor. Or, you can take off the fabric shroud and use the Splay as an ultra-short-throw projector and cast a display that measures up to 80 inches diagonally onto a wall.

At its core, the Splay is a projector, meaning it can’t compete with high-end LCD-LED or OLED monitors. It costs $1,300; the device is currently sold out, but an Arovia representative told me that it will be restocked this month.

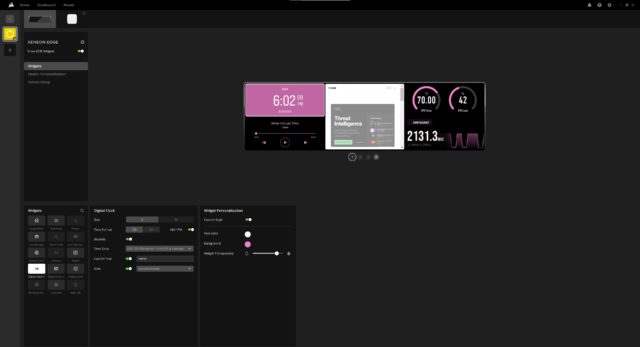

Here’s how the device works, per one of Arovia’s patents:

The … collapsible, portable display device, has a housing member having a sliding member aligned on the exterior of the housing member, and sliding along the exterior of said housing member between two operating positions, a collapsible screen containing one or more sheets of flexible, wrinkle resistant silicone or rubber materials containing optical enhancing components and capable of displaying an image when in an expanded operating position, and multiple collapsible members connected to said screen …

Arovia’s representative pointed to the Splay being used for mobile workspaces, gaming, and enterprise use cases, like trade shows.

Because it uses lightweight and springy fabric materials and bendable arms, the whole gadget can be folded into an included case that’s 4×4 inches and weighs 2.5 pounds. Once extended to its max size, the device is a bit unwieldy; I had to be mindful to avoid poking or tearing the fabric when I set up the device.

Still, getting such large display options from something as portable as the collapsed Splay is a real advantage.

Splay as a monitor

The Splay isn’t what people typically picture when thinking of a “portable monitor.” It connects to PCs, iOS and Android devices, and gaming consoles via HDMI (or an HDMI adapter) and is chargeable via USB-C, so you can use it without a wall charger. But this isn’t the type of display you would set up at a coffee shop or even in a small home office.

Compared to a traditional portable monitor, the Splay is bulky. That’s partly because the display is bigger than a typical portable monitor, which measures around 14 inches. Most of the bulk, however, comes from how much the back of the “monitor” protrudes (about 19 to 21 inches from the front of the display).

A profile view of the Splay in monitor mode. Credit: Scharon Harding

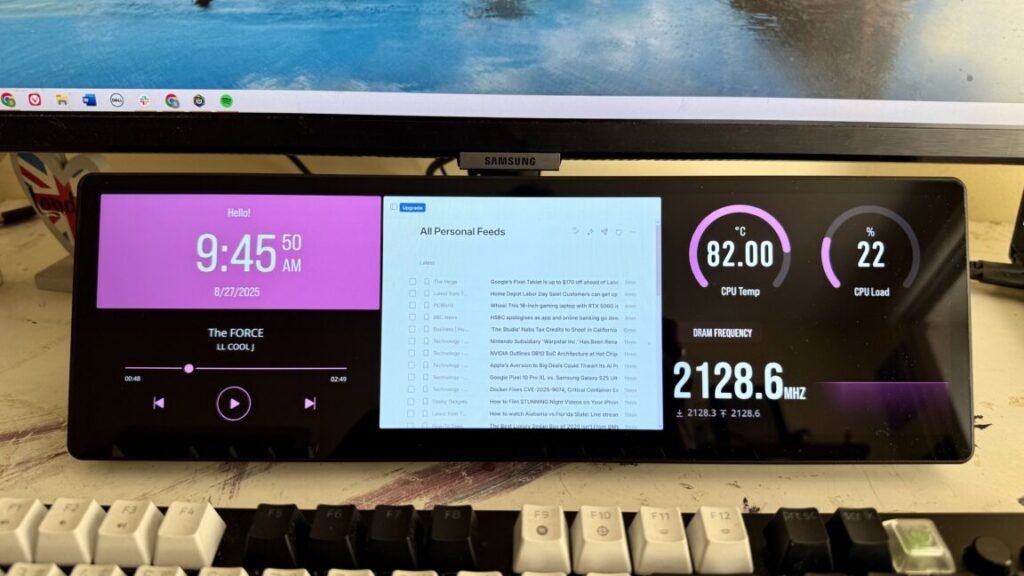

When extended, the device is mostly fabric. But its control center (which has a power button, a sharpness adjuster, and brightness controls) and its integrated speakers extend pretty far back (about 6.25 inches), even before you insert an HDMI or USB-C cable.

You will also want to use the Splay with a tripod (a small, tabletop one’s included) so that it’s at a proper height and you can swivel and tilt the display.

The Splay’s control center. Credit: Scharon Harding

That all makes the Splay cumbersome to find space for and, once opened, to transport. Once I set it up, I wasn’t eager to pack it away or to bring it to another room.

Still, the Splay is a novel attempt at bringing a monitor-sized display to more areas. Despite its bulky maximum size, it weighs little and doesn’t have to be plugged into a wall.

Arovia claims the monitor has a max brightness of 760 nits. When I used the display in a well-lit or sunny room, it still looked sufficiently bright, even when perpendicular to a window. All colors were somewhat washed out compared to how they appear on my computer monitor but were still acceptable for a secondary display. When I looked closely enough, though, I could see the subtle texture of the fabric in the image.

The Splay also supports portrait mode. Credit: Scharon Harding

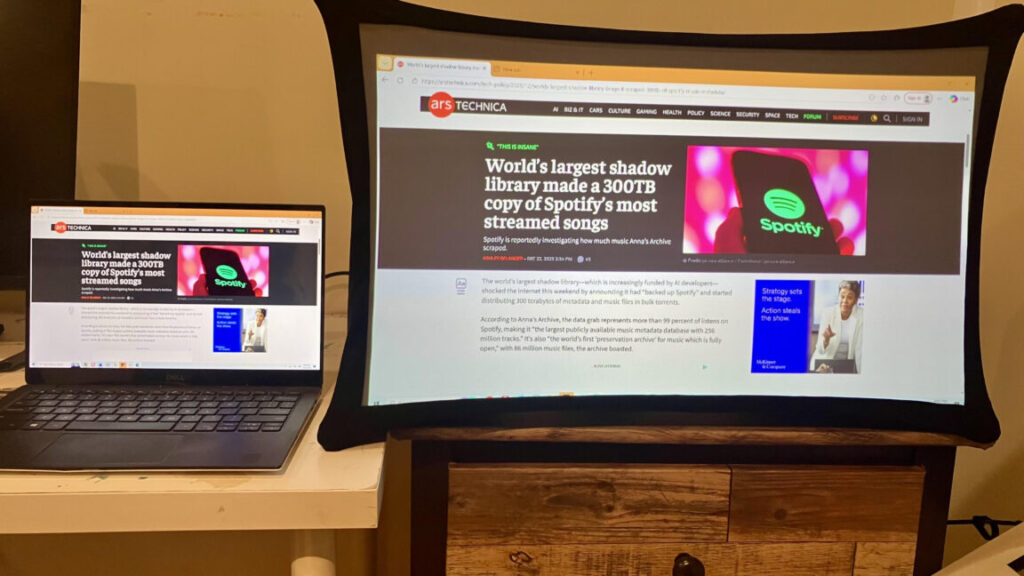

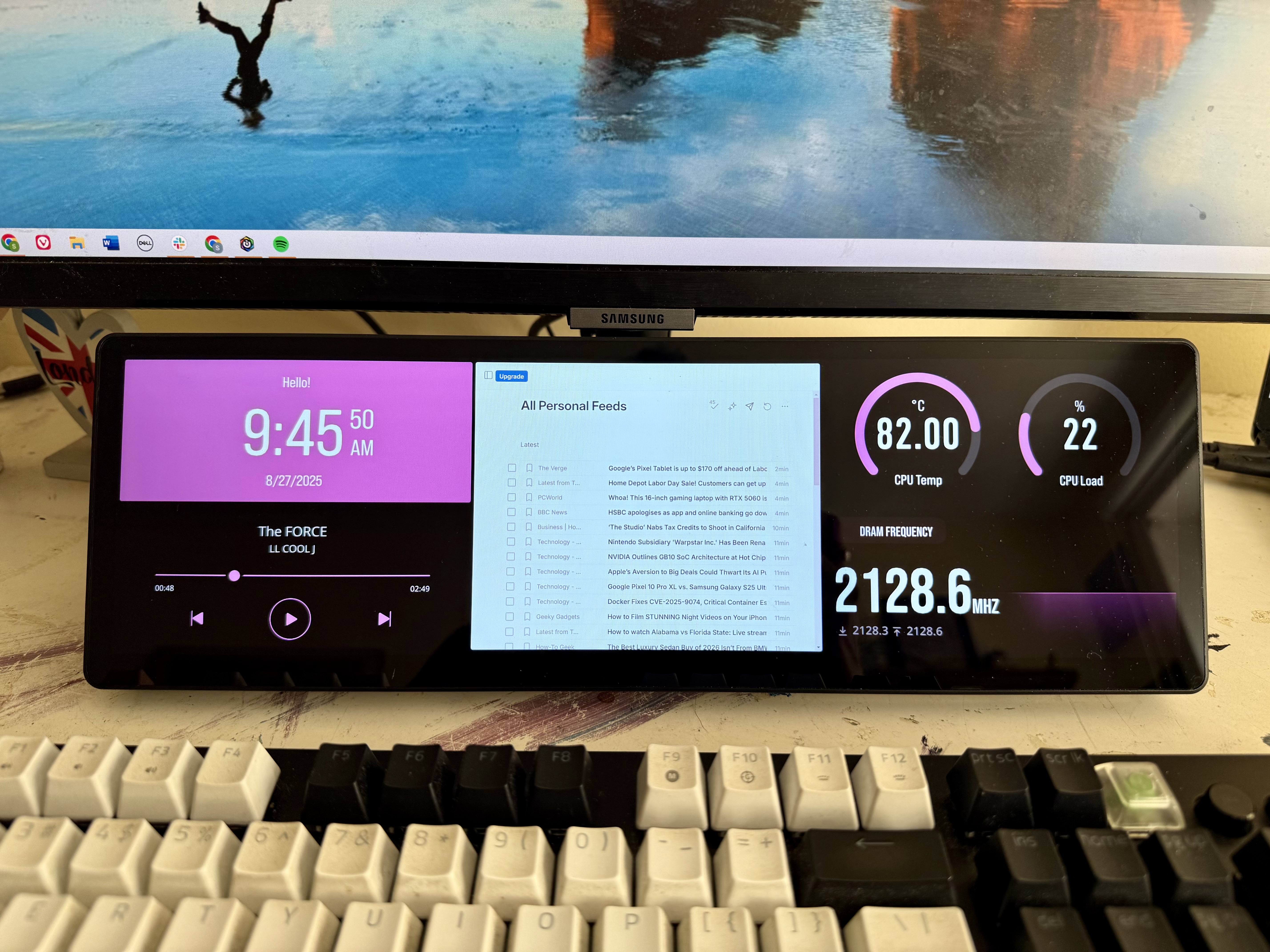

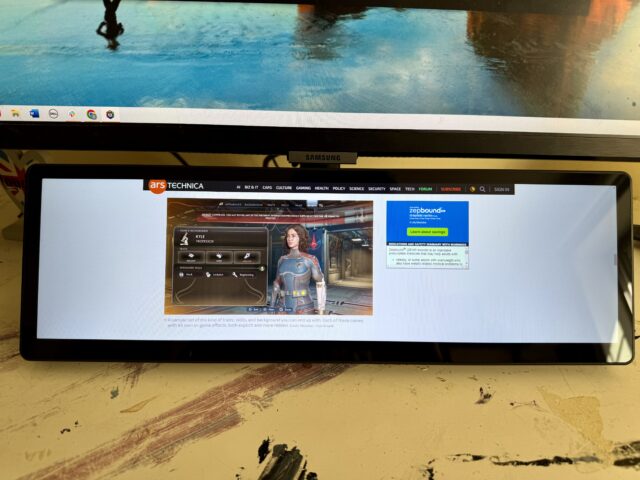

The Splay struggles mightily with text. It’s not sharp enough, so trying to read more than a couple of sentences on the Splay was a strain. This could be due to the projector technology, as well as the lower pixel density. With a display resolution equivalent to 1920×1080, the 24.5-inch “portable monitor” has a pixel density of 89.9 pixels per inch.
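For reference, that pixel density figure comes from dividing the diagonal resolution in pixels by the diagonal size in inches. Here is a minimal Python sketch of the arithmetic. The function name `pixels_per_inch` is my own, and the 34.5-inch and 80-inch figures assume the resolution stays at 1920×1080 at those sizes, which Arovia's stated specs here don't confirm.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Return pixel density: diagonal length in pixels / diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# 1920x1080 on the 24.5-inch model, as described above.
ppi_24 = pixels_per_inch(1920, 1080, 24.5)

# Assumption: the larger model and the 80-inch projection keep the same
# 1920x1080 resolution, so the same pixels are spread over a larger area.
ppi_34 = pixels_per_inch(1920, 1080, 34.5)
ppi_80 = pixels_per_inch(1920, 1080, 80)

print(f"24.5-inch monitor mode: {ppi_24:.1f} ppi")  # 89.9 ppi
print(f"34.5-inch monitor mode: {ppi_34:.1f} ppi")
print(f"80-inch projection:     {ppi_80:.1f} ppi")
```

If that assumption holds, density drops as the image grows, which would be consistent with text being the Splay's weak spot.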

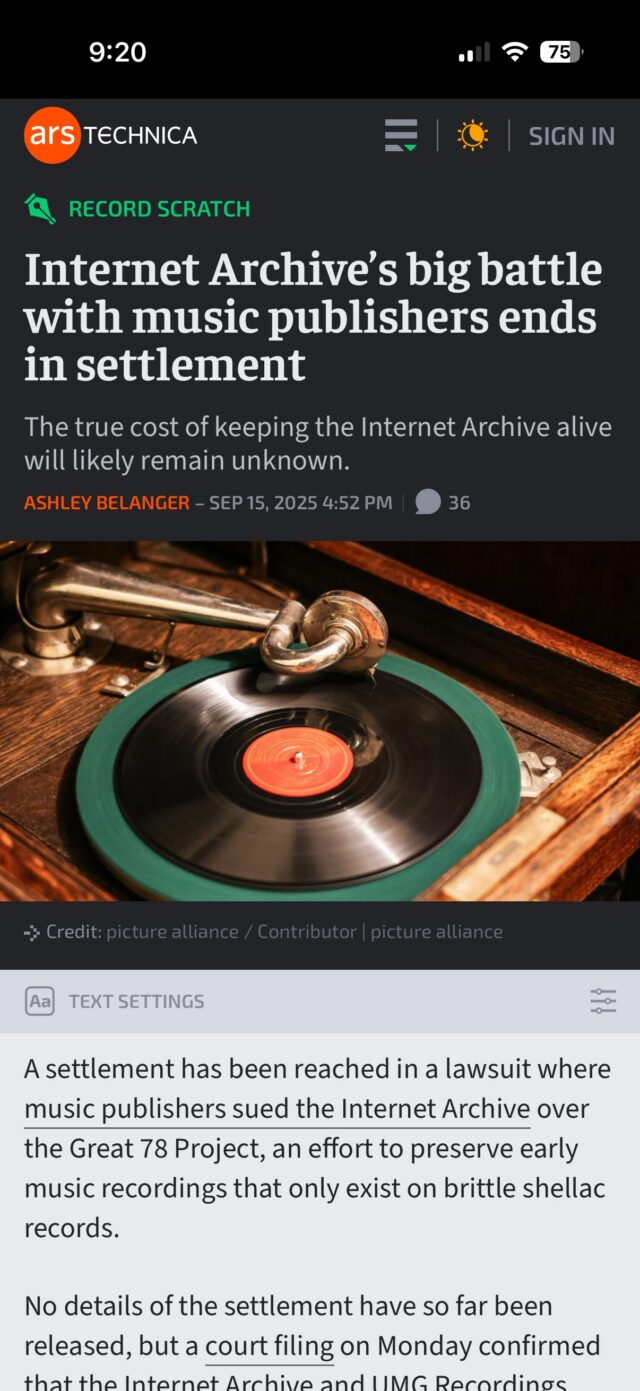

The Splay displaying an Ars Technica article. Credit: Scharon Harding

Considering there are portable monitors that are in the 24-inch size range and easier to set up, it’s hard to see a reason to opt for a Splay—unless you also want a projector.

Splay as a projector

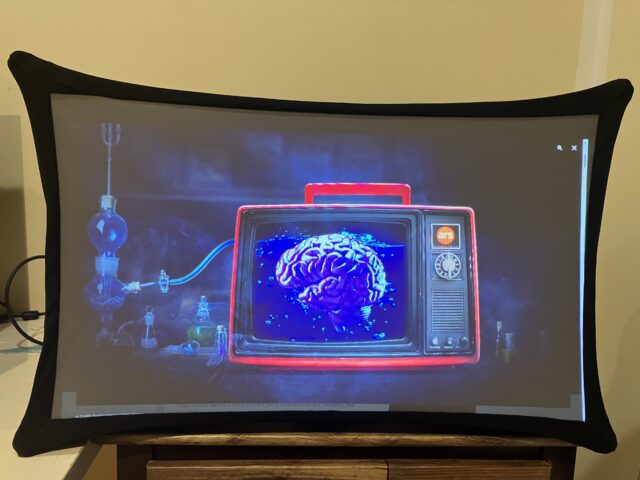

To use the Splay as a projector, you have to unzip the fabric shroud and pull the device out of its four-armed holster. Once set up, the Splay works as an ultra-short-throw pico projector with automatic keystone correction, which helps ensure that the display looks like a rectangle instead of a trapezoid or parallelogram.

The Splay as a portable projector. Credit: Scharon Harding

Arovia claims the projector can reach up to 285 lumens and display an image that measures up to 80 inches diagonally.

Now, we start to see the Splay’s value. Unlike other projectors, the Splay remains useful in tight, crowded spaces. Not only does the Splay wrap up neatly for transport, but the integrated screen means you never have to worry about whether you’ll have the right space for the projector to work properly.

There’s always a need for portable displays, and different use cases warrant exploring new approaches and form factors. While there are simpler 24-inch portable monitors with better image quality, the Splay brings remarkable portability and independence to portable projectors.

The Splay is niche and expensive, which is probably why the product’s website currently focuses on more B2B applications, like sports coaches and analysts using it to review footage and data. Similar to the big-screen tablets on wheels that more companies have been making lately, for now, the Splay will probably find the most relevance among businesses or public sector entities.

However, I’m inclined to think about how the Splay’s unique properties could apply to personal projectors. The Splay is a subpar “portable monitor,” but its duality makes it a more valuable projector. There are still too many obstacles preventing me from regularly using a projector, but the Splay has at least shown me that projectors can pack more than I expected.
