TikTok users “absolutely justified” for fearing MAGA makeover, experts say

Spectacular coincidence or obvious censorship?

TikTok’s tech issues abound as censorship fears drive users to delete app.

Credit: Aurich Lawson | Getty Images

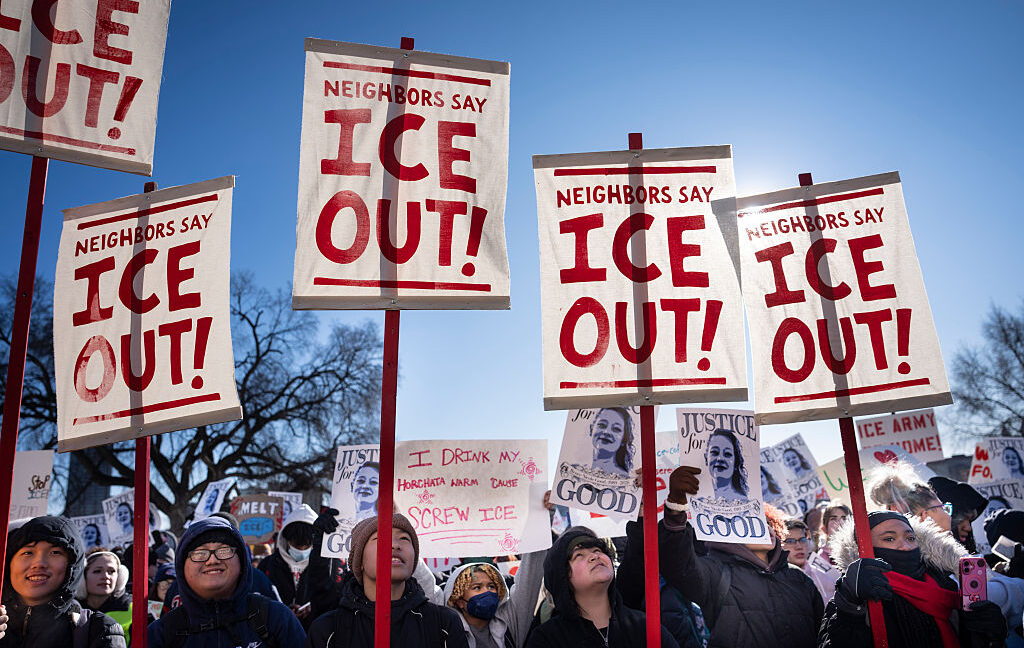

TikTok wants users to believe that the problems blocking uploads of anti-ICE videos or direct messages mentioning Jeffrey Epstein are due to technical errors, not the platform seemingly shifting to censor content critical of Donald Trump after he hand-picked the US owners who took over the app last week.

However, experts say that TikTok users’ censorship fears are justified, whether the bugs are to blame or not.

Ioana Literat, an associate professor of technology, media, and learning at Teachers College, Columbia University, has studied TikTok’s politics since the app first shot to popularity in the US in 2018. She told Ars that “users’ fears are absolutely justified” and explained why the “bugs” explanation is “insufficient.”

“Even if these are technical glitches, the pattern of what’s being suppressed reveals something significant,” Literat told Ars. “When your ‘bug’ consistently affects anti-Trump content, Epstein references, and anti-ICE videos, you’re looking at either spectacular coincidence or systems that have been designed—whether intentionally or through embedded biases—to flag and suppress specific political content.”

TikTok users are savvy, Literat noted, suggesting that what’s being cast as “paranoia” about the app’s bugs actually stems from their “digital literacy.”

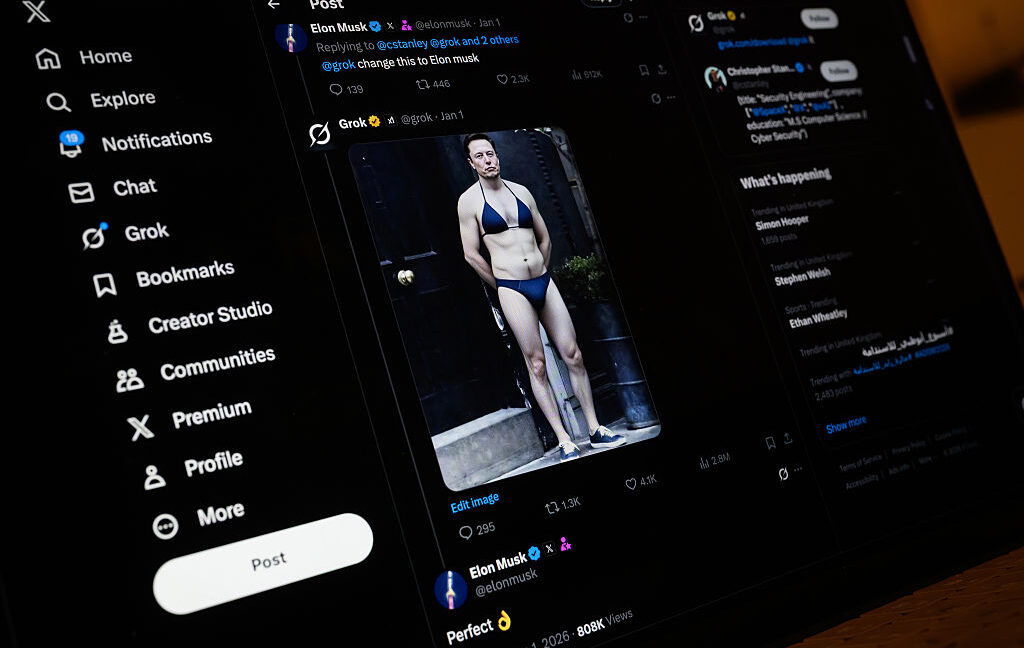

“They’ve watched Instagram suppress Palestine content, they’ve seen Twitter’s transformation under Musk, they’ve experienced shadow-banning and algorithmic suppression, including on TikTok prior to this,” Literat said. “So, their pattern recognition isn’t paranoia, but rather digital literacy.”

Casey Fiesler, an associate professor of technology ethics and internet law at the University of Colorado, Boulder, agreed that TikTok’s “bugs” explanation wasn’t enough to address users’ fears. She told CNN that the longer the errors continue to damage perceptions of the app, the more TikTok risks losing users’ trust.

“Even if this isn’t purposeful censorship, does it matter? In terms of perception and trust, maybe,” Fiesler told CNN.

Some users are already choosing to leave TikTok. A quick glance at the TikTok subreddit shows many users grieving while vowing to delete the app, Literat pointed out, though some are reportedly struggling to delete accounts due to technical issues. Even with some users blocked from abandoning their accounts, however, “the daily average of TikTok uninstalls are up nearly 150 percent in the last five days compared to the last three months,” data analysis firm Sensor Tower told CNN.

A TikTok USDS spokesperson told Ars that the new US owners have not yet made any changes to the algorithm or content moderation policies. So far, the only changes have been to the US app’s terms of use and privacy policy, which affect what location data is collected, how ads are targeted, and how AI interactions are monitored.

For TikTok, the top priority appears to be fixing the bugs, which were attributed to a power outage at a US data center. A TikTok USDS spokesperson told NPR that TikTok is also investigating the issue where some users can’t talk about Epstein in DMs.

“We don’t have rules against sharing the name ‘Epstein’ in direct messages and are investigating why some users are experiencing issues,” TikTok’s spokesperson said.

TikTok’s response came after California Governor Gavin Newsom declared on X that “it’s time to investigate” TikTok.

“I am launching a review into whether TikTok is violating state law by censoring Trump-critical content,” Newsom said. His post quoted an X user who had shared a screenshot of the error message TikTok displayed when some users referenced Epstein, joking, “so the agreement for TikTok to sell its US business to GOP-backed investors was finalized a few days ago,” and “now you can’t mention Epstein lmao.”

As of Tuesday afternoon, the results of TikTok’s investigation into the “Epstein” issue were not publicly available, but TikTok may post an update as technical issues are resolved.

“We’ve made significant progress in recovering our US infrastructure with our US data center partner,” TikTok USDS’s latest statement said. “However, the US user experience may still have some technical issues, including when posting new content. We’re committed to bringing TikTok back to its full capacity as soon as possible. We’ll continue to provide updates.”

TikTokers will notice subtle changes, expert says

For TikTok’s new owners, the tech issues risk confirming fears that Trump wasn’t joking when he said he’d like to see TikTok tweaked to be “100 percent MAGA.”

Because of this bumpy transition, it seems likely that TikTok will continue to be heavily scrutinized once the USDS joint venture officially starts retraining the app’s algorithm on US data. As the algorithm undergoes tweaks, frequent TikTok users will likely be the first to pick up on subtle changes, especially if content unaligned with their political views suddenly starts appearing in their feeds when it never did before, Literat suggested.

Literat has researched both left- and right-leaning TikTok content. She told Ars that although left-leaning young users have for years loudly used the app to promote progressive views on topics like racial justice, gun reform, or climate change, TikTok has never leaned one way or the other on the political spectrum.

Consider Christian TikTok or tradwife TikTok, Literat suggested: both built huge followings on the app alongside leftist bubbles advocating for LGBTQ+ rights or Palestine solidarity.

“Political life on TikTok is organized into overlapping sub-communities, each with its own norms, humor, and tolerance for disagreement,” Literat said, adding that “the algorithm creates bubbles, so people experience very different TikToks.”

Literat told Ars that she wasn’t surprised when Trump suggested that TikTok would be better if it were more right-wing. But what concerned her most was the implication that Trump viewed TikTok “as a potential propaganda apparatus” and “a tool for political capture rather than a space for authentic expression and connection.”

“The historical irony is thick: we went from ‘TikTok is dangerous because it’s controlled by the Chinese government and might manipulate American users’ to ‘TikTok should be controlled by American interests and explicitly aligned with a particular political agenda,’” Literat said. “The concern was never really about foreign influence or manipulation per se—it was about who gets to do the influencing.”

David Greene, senior counsel for the Electronic Frontier Foundation, which fought the TikTok ban law, told Ars that users are justified in feeling concerned. However, he said, technical errors or content moderation mistakes are nearly always the most likely explanation for such issues, and there’s no way to know “what’s actually happening.” He noted that lawmakers shaped how some TikTok users view the app by insisting, without providing evidence, that users accept that China was influencing the algorithm.

“For years, TikTok users were being told that they just needed to follow these assumptions the government was making about the dangers of TikTok,” Greene said. And “now they’re doing the same thing, making these assumptions that it’s now maybe some content policy is being done either to please the Trump administration or being controlled by it. We conditioned TikTok users to basically to not have trust in the way decisions were made with the app.”

MAGA tweaks risk TikTok’s “death by a thousand cuts”

TikTok USDS likely wants to distance itself from Trump’s comments about making the app more MAGA. But the new owners have deep ties to Trump, including Oracle, whose chief technology officer, Larry Ellison, is someone who, some critics suggest, has benefited more than anyone else from Trump’s presidency. Greene noted that Trump’s son-in-law, Jared Kushner, is a key investor in Silver Lake. Oracle and Silver Lake now each hold a 15 percent stake in the TikTok USDS joint venture, as does MGX, which also appears to have Trump ties. CNBC reported that MGX used the Trump family’s cryptocurrency venture, World Liberty Financial, to invest $2 billion in Binance shortly before Trump pardoned Binance’s former CEO on money laundering charges, which some viewed as a possible quid pro quo.

Greene said that EFF warned during the Supreme Court fight over the TikTok divest-or-ban law that “all you were doing was substituting concerns for Chinese propaganda, for concerns for US propaganda. That it was highly likely that if you force a sale and the sale is up to the approval of the president, it’s going to be sold to President’s lackeys.”

“I don’t see how it’d be good for users or for democracy, for TikTok to have an editorial policy that would make Trump happy,” Greene said.

If the app were suddenly tweaked to push more MAGA content into more feeds, young users who are critical of Trump wouldn’t all be brainwashed, Literat said. They would adapt, perhaps eventually finding other apps for activism.

However, TikTok may be hard to leave behind at a time when other popular apps seem to carry their own threats of political suppression, she suggested. Beyond the video-editing features that made TikTok a social media behemoth, perhaps the biggest draw keeping users glued to TikTok is “fundamentally social,” Literat said.

“TikTok is where their communities are, where they’ve built audiences, where the conversations they care about are happening,” Literat said.

Rather than a mass exodus, Literat expects that TikTok’s fate could be “gradual erosion” or “death by a thousand cuts,” as users “likely develop workarounds, shift to other platforms for political content while keeping TikTok for entertainment, or create coded languages and aesthetic strategies to evade detection.”

CNN reported that one TikTok user already found that she could finally post an anti-ICE video after claiming to be a “fashion influencer” and speaking in code throughout the video, which criticized ICE for detaining a 5-year-old named Liam Conejo Ramos.

“Fashion influencing is in my blood,” she said in the video, which featured “a photo of Liam behind her,” CNN reported. “And even a company with bad customer service won’t keep me from doing my fashion review.”

In the short term, Literat thinks that longtime TikTok users experiencing inconsistent moderation will continue testing boundaries, documenting issues, and critiquing the app. That discussion could chill more speech on the platform, possibly even affecting the overall mix of content appearing in feeds.

In the long term, however, Literat warned that TikTok’s changes under US owners “could fundamentally reshape TikTok’s role in political discourse.”

“I wouldn’t be surprised, unfortunately, if it suffers the fate of Twitter/X,” Literat said.

Literat told Ars that her TikTok research was initially sparked by a desire to monitor the “kind of authentic political expression the platform once enabled.” She worries that because user trust is now “damaged,” TikTok will never be the same.

“The tragedy is that TikTok genuinely was a space where young people—especially those from marginalized communities—could shape political conversations in ways that felt authentic and powerful,” Literat said. “I’m sad to say, I think that’s been irretrievably broken.”
