Supply-chain attacks on open source software are getting out of hand

sudo rm -rf --no-preserve-root /

The --no-preserve-root flag is specifically designed to override the safety protection that would normally prevent deletion of the root directory.

The Windows-equivalent destructive command included in the postinstall script was:

rm /s /q

Socket published a separate report Wednesday on yet more supply-chain attacks, one targeting npm users and another targeting users of PyPI. As of Wednesday, the four malicious packages—three published to npm and the fourth on PyPI—collectively had been downloaded more than 56,000 times. Socket said it was working to get them removed.

When installed, the packages “covertly integrate surveillance functionality into the developer’s environment, enabling keylogging, screen capture, fingerprinting, webcam access, and credential theft,” Socket researchers wrote. They added that the malware monitored and captured user activity and transmitted it to attacker-controlled infrastructure. Socket used the term surveillance malware to emphasize the covert observation and data exfiltration tactics “in the context of malicious dependencies.”

Last Friday, Socket reported the third attack. This one compromised an account on npm and used the access to plant malicious code inside three packages available on the site. The compromise occurred after the attackers successfully obtained a credential token that the developer used to authenticate to the site.

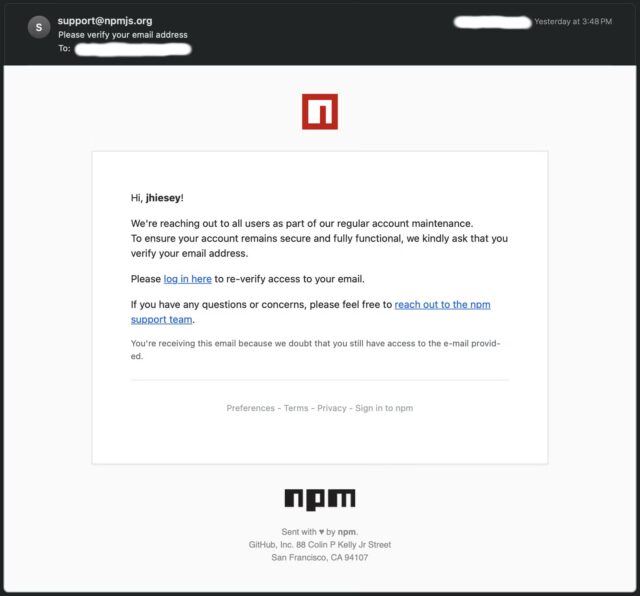

The attackers obtained the credential through a targeted phishing attack Socket had disclosed hours earlier. The email instructed the recipient to log in through a URL on npnjs.com. The site is a typosquatting spoof of the official npmjs.com domain. To make the attack more convincing, the phishing URL contained a token field that mimicked tokens npm uses for authentication. The phishing URL was in the format of https://npnjs.com/login?token=xxxxxx where the xxxxxx represented the token.

A phishing email targeting npm account holders. Credit: Socket
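A one-character swap like npnjs.com for npmjs.com is easy to miss by eye but simple to catch mechanically. The following is a minimal sketch, not a tool described by Socket: it measures the edit distance between a link's hostname and a short allowlist of trusted domains. The allowlist contents, the function names, and the distance threshold of 2 are all assumptions chosen for illustration.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumption: a hand-maintained allowlist of registry domains you trust.
TRUSTED_DOMAINS = {"npmjs.com", "pypi.org"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance, computed row by row."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete a character from a
                current[j - 1] + 1,      # insert a character into a
                previous[j - 1] + cost,  # substitute (or keep) a character
            ))
        previous = current
    return previous[-1]


def lookalike_of(url: str, max_distance: int = 2) -> Optional[str]:
    """Return the trusted domain a URL's host imitates, or None.

    A host that is on the allowlist is never reported. The threshold
    is a hypothetical default, not a recommended value.
    """
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in TRUSTED_DOMAINS:
        return None
    for trusted in sorted(TRUSTED_DOMAINS):
        if edit_distance(host, trusted) <= max_distance:
            return trusted
    return None
```

Calling `lookalike_of("https://npnjs.com/login?token=xxxxxx")` returns `"npmjs.com"`, since the two hostnames differ by a single substitution. A check like this catches only near-miss spellings; it would not flag a phishing link hosted on an unrelated domain.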

Also compromised was an npm package known as ‘is.’ It receives roughly 2.8 million downloads weekly.

Potential for widespread damage

Supply-chain attacks like the ones Socket has flagged have the potential to cause widespread damage. Many packages available in repositories are dependencies, meaning downstream packages must incorporate them in order to work. In many developer flows, new dependency versions are downloaded and incorporated into the downstream packages automatically.

The packages flagged in the three attacks are:

- @toptal/picasso-tailwind

- @toptal/picasso-charts

- @toptal/picasso-shared

- @toptal/picasso-provider

- @toptal/picasso-select

- @toptal/picasso-quote

- @toptal/picasso-forms

- @xene/core

- @toptal/picasso-utils

- @toptal/picasso-typography

- is, versions 3.3.1 and 5.0.0

- got-fetch, versions 5.1.11 and 5.1.12

- eslint-config-prettier, versions 8.10.1, 9.1.1, 10.1.6, and 10.1.7

- eslint-plugin-prettier, versions 4.2.2 and 4.2.3

- synckit, version 0.11.9

- @pkgr/core, version 0.2.8

- napi-postinstall, version 0.3.1
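For npm projects, one way to check for the versions listed above is to compare them against the lockfile. The sketch below assumes a package-lock.json in the lockfileVersion 2 or 3 layout, where a top-level "packages" object maps paths such as node_modules/is to entries carrying a "version" field. The `FLAGGED` table and the `find_flagged` helper are hypothetical names. Package names are written in lowercase, as they appear on npm, and only the entries that list version numbers are included; the @toptal packages and @xene/core appear without versions and would need a manual review.

```python
from typing import Dict, List, Set, Tuple

# Versions copied from the list above; entries without versions are omitted.
FLAGGED: Dict[str, Set[str]] = {
    "is": {"3.3.1", "5.0.0"},
    "got-fetch": {"5.1.11", "5.1.12"},
    "eslint-config-prettier": {"8.10.1", "9.1.1", "10.1.6", "10.1.7"},
    "eslint-plugin-prettier": {"4.2.2", "4.2.3"},
    "synckit": {"0.11.9"},
    "@pkgr/core": {"0.2.8"},
    "napi-postinstall": {"0.3.1"},
}


def find_flagged(lock: dict) -> List[Tuple[str, str]]:
    """Return sorted (name, version) pairs from a parsed lockfile that match FLAGGED."""
    hits = []
    for path, entry in lock.get("packages", {}).items():
        # "node_modules/a/node_modules/@pkgr/core" -> "@pkgr/core"
        name = path.rpartition("node_modules/")[2]
        version = entry.get("version", "")
        if version in FLAGGED.get(name, set()):
            hits.append((name, version))
    return sorted(hits)
```

To use it, load the lockfile with `json.load` and pass the resulting dictionary to `find_flagged`; an empty list means none of the listed versions is pinned in that lockfile. It says nothing about packages installed globally or outside the lockfile.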

Developers who work with any of the packages targeted should ensure none of the malicious versions have been installed or incorporated into their wares. Developers working with open source packages should:

- Monitor repository visibility changes for suspicious or unusual package publishing

- Review package.json lifecycle scripts before installing dependencies

- Use automated security scanning in continuous integration and continuous delivery pipelines

- Regularly rotate authentication tokens

- Use multifactor authentication to safeguard repository accounts

Additionally, repositories that haven’t yet made MFA mandatory should do so in the near future.
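The advice to review lifecycle scripts can be partly automated. The sketch below is one possible approach, not a method from Socket's reports: it reads package.json manifests and reports any that define preinstall, install, or postinstall scripts, which npm runs automatically during installation. The function names are hypothetical, and the workflow assumes the dependencies were first fetched with `npm install --ignore-scripts` so that nothing has executed before the review.

```python
import json
from pathlib import Path
from typing import Dict

# Scripts that npm runs automatically when a package is installed.
INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def install_hooks(manifest: dict) -> Dict[str, str]:
    """Return the install-time scripts declared in one parsed package.json."""
    scripts = manifest.get("scripts")
    if not isinstance(scripts, dict):
        return {}
    return {hook: scripts[hook] for hook in INSTALL_HOOKS if hook in scripts}


def scan_node_modules(root: str) -> Dict[str, Dict[str, str]]:
    """Map each package.json path under root to its install-time scripts.

    Manifests with no install-time scripts are left out of the result.
    """
    found = {}
    for manifest_path in Path(root).rglob("package.json"):
        try:
            manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # unreadable or malformed manifest; skip it
        if not isinstance(manifest, dict):
            continue
        hooks = install_hooks(manifest)
        if hooks:
            found[str(manifest_path)] = hooks
    return found
```

Running `scan_node_modules("node_modules")` produces a short list of commands to read by hand. The script only surfaces what is declared; deciding whether a given hook is legitimate, such as a native build step, still takes human judgment.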
