ICE’s forced face scans to verify citizens is unconstitutional, lawmakers say

“A 2024 test by the National Institute of Standards and Technology found that facial recognition tools are less accurate when images are low quality, blurry, obscured, or taken from the side or in poor light—exactly the kind of images an ICE agent would likely capture when using a smartphone in the field,” their letter said.

If ICE’s use of facial recognition continues to expand, mistakes “will almost certainly proliferate,” senators said, and “even if ICE’s facial recognition tools were perfectly accurate, these technologies would still pose serious threats to individual privacy and free speech.”

Matthew Guariglia, senior policy analyst at the Electronic Frontier Foundation, told 404 Media that ICE’s growing use of facial recognition confirms that “we should have banned government use of face recognition when we had the chance because it is dangerous, invasive, and an inherent threat to civil liberties.” It also suggests that “any remaining pretense that ICE is harassing and surveilling people in any kind of ‘precise’ way should be left in the dust,” Guariglia said.

ICE scans faces, even if shown an ID

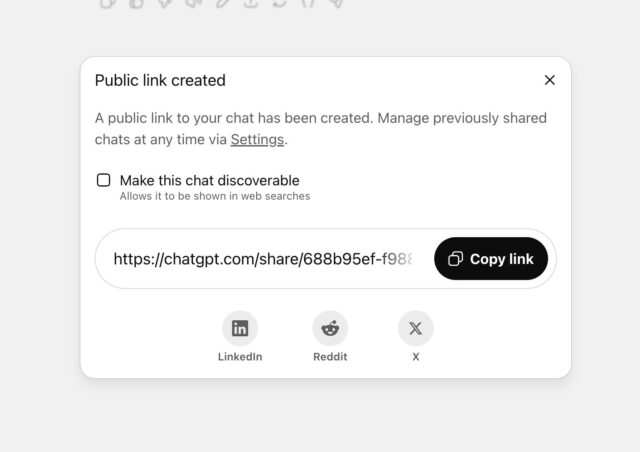

In their letter to ICE acting director Todd Lyons, senators sent a long list of questions to learn more about “ICE’s expanded use of biometric technology systems,” which they suggested risked having “a sweeping and lasting impact on the public’s civil rights and liberties.” They demanded to know when ICE started using face scans in domestic deployments, since the technology was previously known to be used only at the border, and what testing was done to ensure apps like Mobile Fortify are accurate and unbiased.

Perhaps most relevant to 404 Media’s recent report, senators asked, “Does ICE have any policies, practices, or procedures around the use of the Mobile Fortify app to identify US citizens?” Lyons was supposed to respond by October 2, but Ars was not able to immediately confirm whether that deadline was met.

DHS declined “to confirm or deny law enforcement capabilities or methods” in response to 404 Media’s report, while CBP confirmed that Mobile Fortify is still being used by ICE, along with “a variety of technological capabilities” that supposedly “enhance the effectiveness of agents on the ground.”
