YouTube’s likeness detection has arrived to help stop AI doppelgängers

AI content has proliferated across the Internet over the past few years, but those early confabulations with mutated hands have evolved into synthetic images and videos that can be hard to differentiate from reality. Having helped to create this problem, Google has some responsibility to keep AI video in check on YouTube. To that end, the company has started rolling out its promised likeness detection system for creators.

Google’s powerful and freely available AI models have helped fuel the rise of AI content, some of which is aimed at spreading misinformation and harassing individuals. Creators and influencers fear their brands could be tainted by a flood of AI videos that show them saying and doing things that never happened—even lawmakers are fretting about this. Google has placed a large bet on the value of AI content, so banning AI from YouTube, as many want, simply isn’t happening.

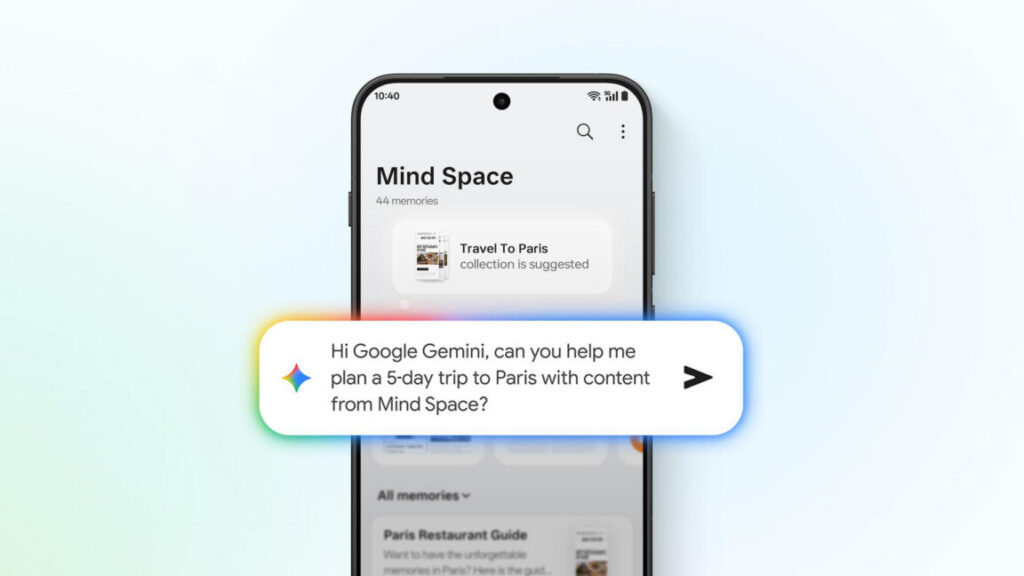

Earlier this year, YouTube promised tools that would flag face-stealing AI content on the platform. The likeness detection tool, which is similar to the site’s copyright detection system, has now expanded beyond the initial small group of testers. YouTube says the first batch of eligible creators has been notified that they can use likeness detection, but interested parties will need to hand Google even more personal information to get protection from AI fakes.

Sneak Peek: Likeness Detection on YouTube.

Currently, likeness detection is a beta feature in limited testing, so not all creators will see it as an option in YouTube Studio. When it does appear, it will be tucked into the existing “Content detection” menu. In YouTube’s demo video, the setup flow appears to assume the channel has only a single host whose likeness needs protection. That person must verify their identity, which requires a photo of a government ID and a video of their face. It’s unclear why YouTube needs this data in addition to the videos people have already posted with their oh-so-stealable faces, but rules are rules.
