Law enforcement operation takes down 22,000 malicious IP addresses worldwide

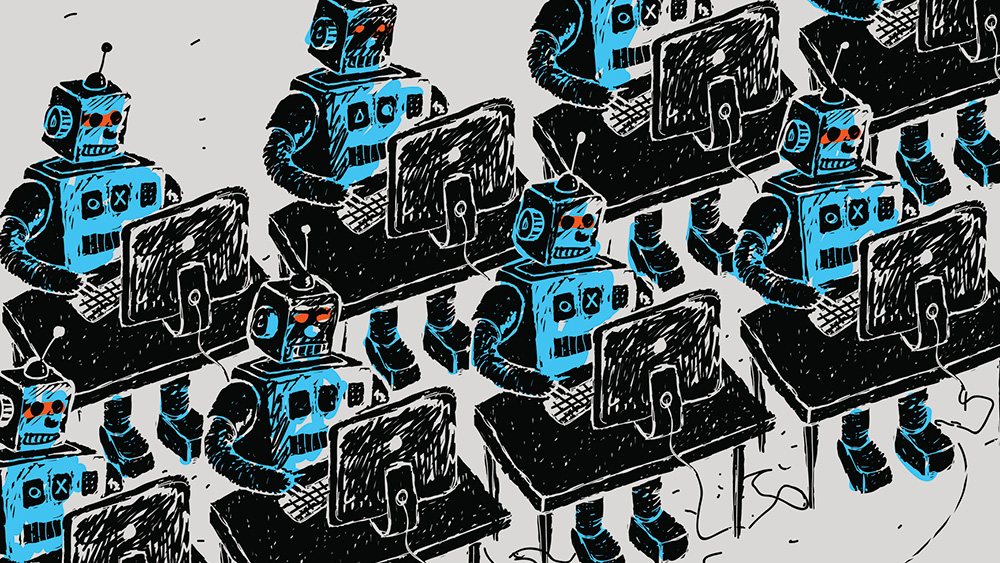

An international coalition of police agencies has taken a major whack at criminals accused of running a host of online scams, including phishing, the theft of account credentials and other sensitive data, and the spread of ransomware, Interpol said recently.

The operation, which ran from the beginning of April through the end of August, resulted in the arrest of 41 people and the takedown of 1,037 servers and other infrastructure running on 22,000 IP addresses. Synergia II, as the operation was named, was the work of multiple law enforcement agencies across the world, as well as three cybersecurity organizations.

A global response

“The global nature of cybercrime requires a global response which is evident by the support member countries provided to Operation Synergia II,” Neal Jetton, director of the Cybercrime Directorate at INTERPOL, said. “Together, we’ve not only dismantled malicious infrastructure but also prevented hundreds of thousands of potential victims from falling prey to cybercrime. INTERPOL is proud to bring together a diverse team of member countries to fight this ever-evolving threat and make our world a safer place.”

Among the highlights of Operation Synergia II were:

Hong Kong (China): Police supported the operation by taking offline more than 1,037 servers linked to malicious services.

Mongolia: Investigations included 21 house searches, the seizure of a server and the identification of 93 individuals with links to illegal cyber activities.

Macau (China): Police took 291 servers offline.

Madagascar: Authorities identified 11 individuals with links to malicious servers and seized 11 electronic devices for further investigation.

Estonia: Police seized more than 80GB of server data, and authorities are now working with INTERPOL to conduct further analysis of data linked to phishing and banking malware.

The three private cybersecurity organizations that took part in Operation Synergia II were Group-IB, Kaspersky, and Team Cymru. All three used their telemetry to identify malicious servers and shared that intelligence with participating law enforcement agencies. Those agencies then conducted investigations that resulted in house searches, the disruption of malicious cyber activities, the lawful seizure of servers and other electronic devices, and arrests.
