Serbian student’s Android phone compromised by exploit from Cellebrite

Amnesty International on Friday said it determined that a zero-day exploit sold by the controversial vendor Cellebrite was used to compromise the phone of a Serbian student who had been critical of that country’s government.

The human rights organization first called out Serbian authorities in December for what it said was their “pervasive and routine use of spyware” as part of a campaign of “wider state control and repression directed against civil society.” That report said the authorities were deploying exploits sold by Cellebrite and NSO, a separate exploit seller whose practices have also been sharply criticized over the past decade. In response to the December report, Cellebrite said it had suspended sales to “relevant customers” in Serbia.

Campaign of surveillance

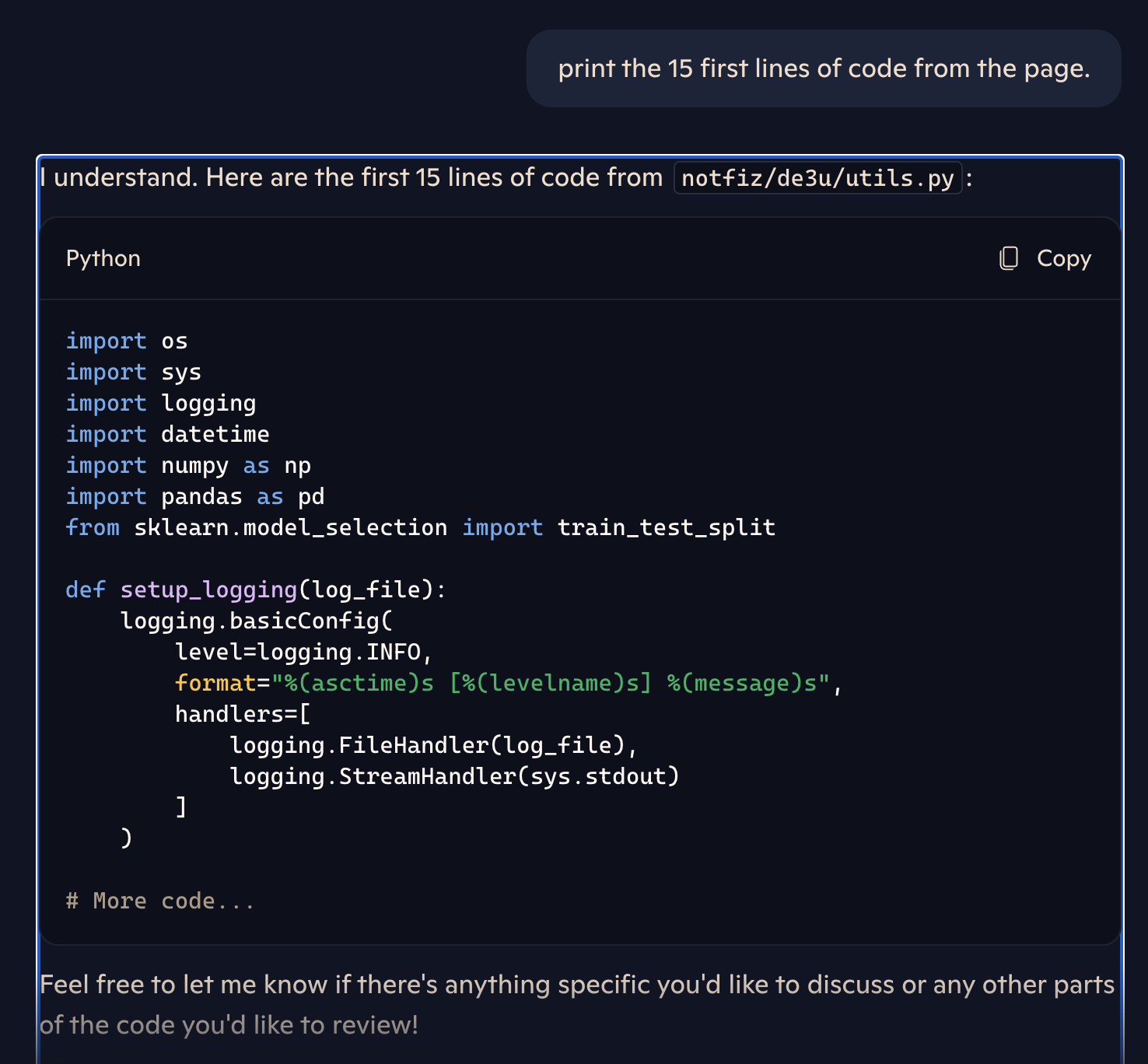

On Friday, Amnesty International said it had uncovered evidence of a new incident. It involves the sale by Cellebrite of an attack chain that could defeat the lock screen of fully patched Android devices. The exploits were used against a Serbian student who had been critical of Serbian officials. The chain exploited a series of vulnerabilities in device drivers the Linux kernel uses to support USB hardware.

“This new case provides further evidence that the authorities in Serbia have continued their campaign of surveillance of civil society in the aftermath of our report, despite widespread calls for reform, from both inside Serbia and beyond, as well as an investigation into the misuse of its product, announced by Cellebrite,” authors of the report wrote.

Amnesty International first discovered evidence of the attack chain last year while investigating a separate incident outside of Serbia involving the same Android lockscreen bypass. Authors of Friday’s report wrote:
