OpenAI scraps controversial plan to become for-profit after mounting pressure

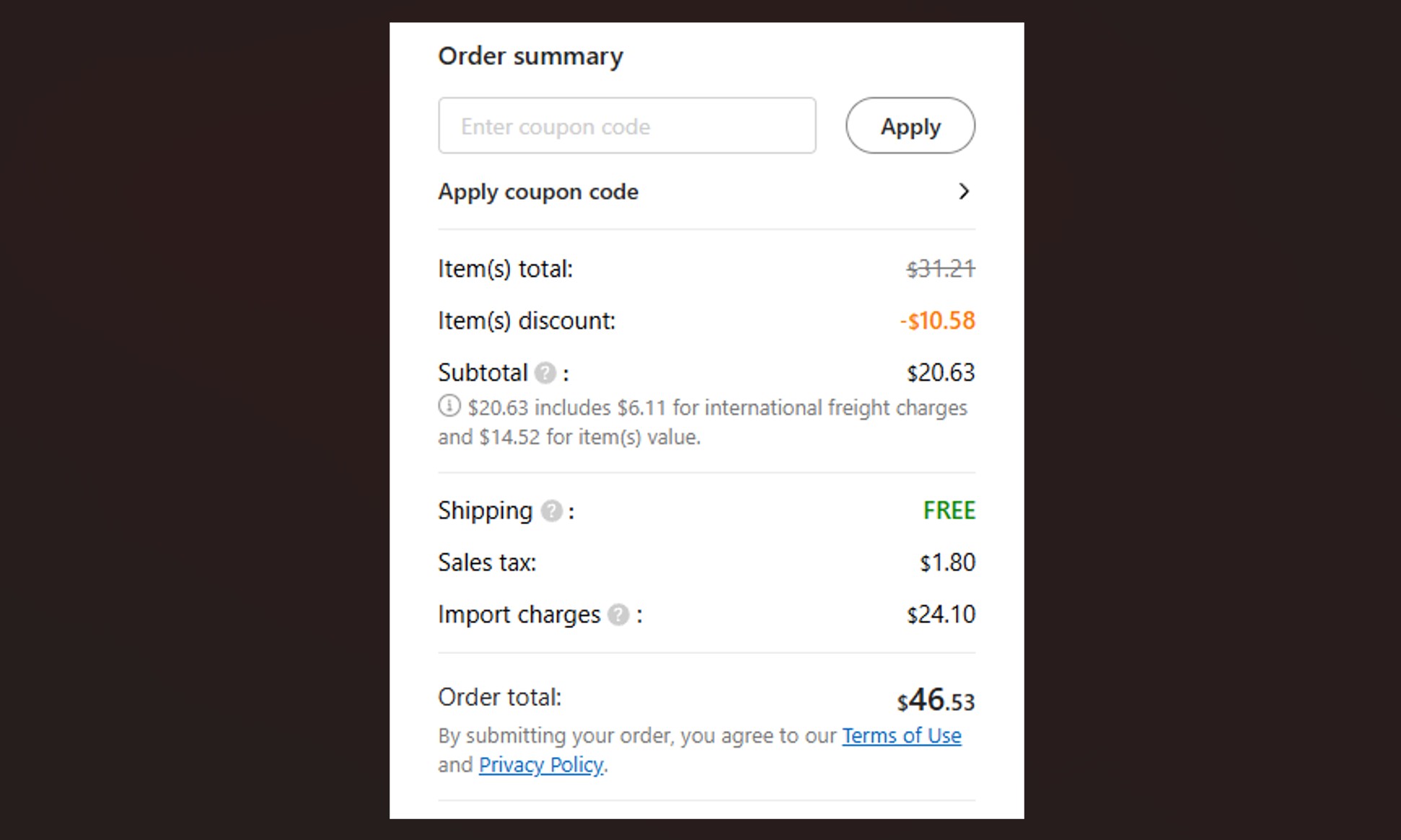

The restructuring would also have allowed OpenAI to remove the cap on returns for investors, potentially making the firm more appealing to venture capitalists. The nonprofit arm would have continued to exist, but only as a minority stakeholder rather than as the entity holding governance control. The plan emerged as the company sought a funding round that would value it at $150 billion, an effort that later grew into the $40 billion round at a $300 billion valuation.

However, the change in course follows months of mounting pressure from outside the company. In April, a group of legal scholars, AI researchers, and tech industry watchdogs openly opposed OpenAI’s plans to restructure, sending a letter to the attorneys general of California and Delaware.

Former OpenAI employees, Nobel laureates, and law professors also sent letters to state officials requesting that they halt the restructuring efforts out of safety concerns about which part of the company would be in control of hypothetical superintelligent future AI products.

“OpenAI was founded as a nonprofit, is today a nonprofit that oversees and controls the for-profit, and going forward will remain a nonprofit that oversees and controls the for-profit,” he added. “That will not change.”

Uncertainty ahead

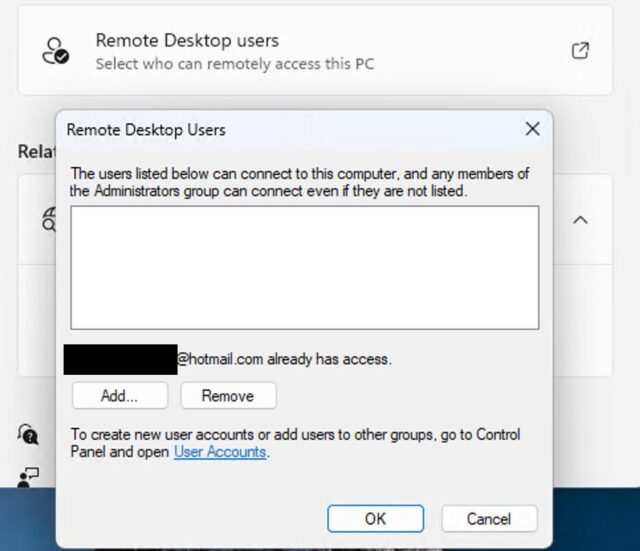

While abandoning the restructuring that would have ended nonprofit control, OpenAI still plans to make significant changes to its corporate structure. “The for-profit LLC under the nonprofit will transition to a Public Benefit Corporation (PBC) with the same mission,” Altman explained. “Instead of our current complex capped-profit structure—which made sense when it looked like there might be one dominant AGI effort but doesn’t in a world of many great AGI companies—we are moving to a normal capital structure where everyone has stock. This is not a sale, but a change of structure to something simpler.”

But the plan may cause some uncertainty for OpenAI’s financial future. When OpenAI secured a massive $40 billion funding round in March, it came with strings attached: Japanese conglomerate SoftBank, which committed $30 billion, stipulated that it would reduce its contribution to $20 billion if OpenAI failed to restructure into a fully for-profit entity by the end of 2025.

Despite the challenges ahead, Altman expressed confidence in the path forward: “We believe this sets us up to continue to make rapid, safe progress and to put great AI in the hands of everyone.”
