Unless users take action, Android will let Gemini access third-party apps

Starting today, Google is implementing a change that will enable its Gemini AI engine to interact with third-party apps, such as WhatsApp, even when users previously configured their devices to block such interactions. Users who don’t want their previous settings to be overridden may have to take action.

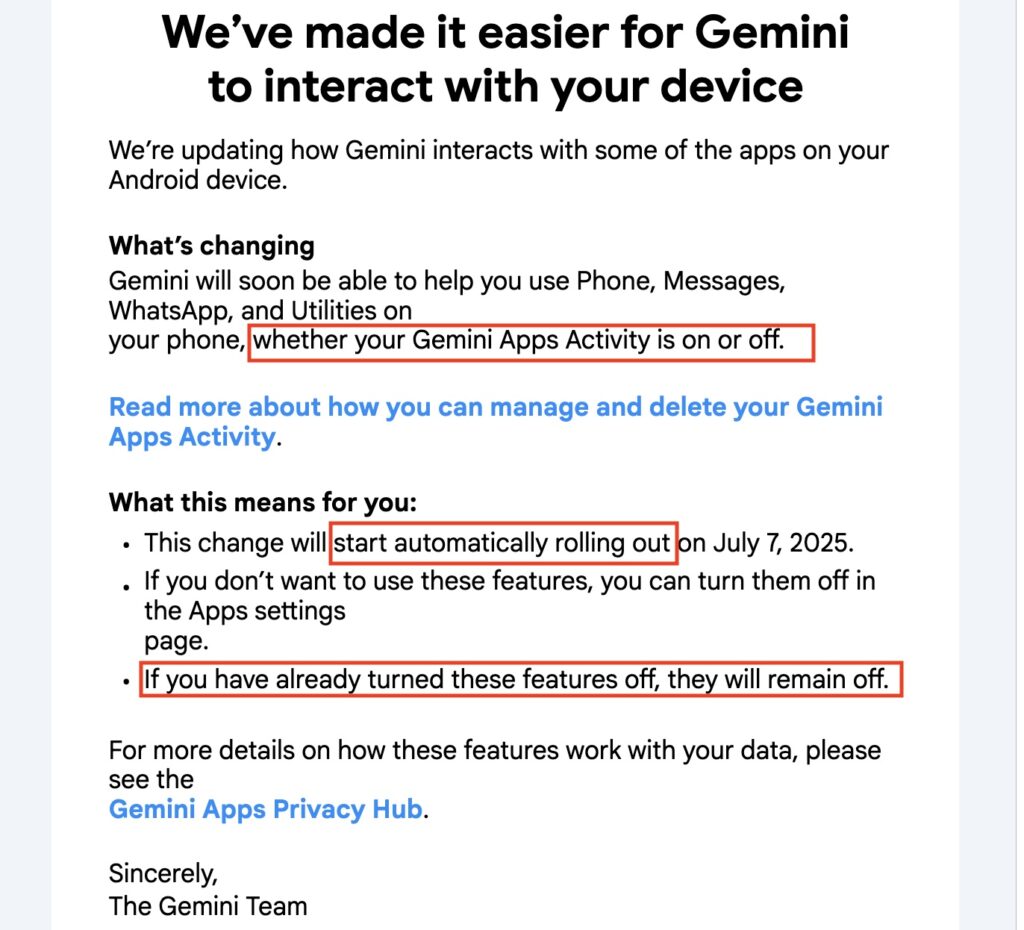

An email Google sent recently informing users of the change linked to a notification page that said that “human reviewers (including service providers) read, annotate, and process” the data Gemini accesses. The email provides no useful guidance for preventing the changes from taking effect. The email said users can block the apps that Gemini interacts with, but even in those cases, data is stored for 72 hours.

An email Google recently sent to Android users.

No, Google, it’s not good news

The email never explains how users can fully extricate Gemini from their Android devices and seems to contradict itself on how or whether this is even possible. At one point, it says the changes “will automatically start rolling out” today and will give Gemini access to apps such as WhatsApp, Messages, and Phone “whether your Gemini apps activity is on or off.” A few sentences later, the email says, “If you have already turned these features off, they will remain off.” Nowhere in the email or the support pages it links to are Android users informed how to remove Gemini integrations completely.

Compounding the confusion, one of the linked support pages requires users to open a separate support page to learn how to control their Gemini app settings. Following the directions from a computer browser, I accessed the settings of my account’s Gemini app. I was reassured to see the text indicating no activity has been stored because I have Gemini turned off. Then again, the page also said that Gemini was “not saving activity beyond 72 hours.”
