AWE USA 2023 Day Three: Eyes on Apple

The third and final day of AWE USA 2023 took place on Friday, June 2. The first day of AWE is largely dominated by keynotes. Much of the second day is taken up by the opening of the expo floor. By the third day, the keynotes are done, the expo floor starts to get packed away, and panel discussions and developer talks rule the day. And Apple ruled a lot of those talks.

Bracing for Impact From Apple

A big shift is expected this week, as Apple is widely anticipated to announce its entrance into the XR market. The writing has been on the wall for a long time.

Rumors have probably been circulating for longer than many readers have even been watching XR. ARPost started speculating in 2018 on a 2019 release. Five years of radio silence later, we had reports that the product would be delayed indefinitely.

The rumor mill is back in operation with an expected launch this week (Apple’s WWDC23 starts today) – with many suggesting that Meta’s sudden announcement of the Quest 3 is a harbinger. Whether an Apple entrance is real this time or not, AWE is bracing itself.

Suspicion on Standards

Let’s take a step back and look at a conversation that happened during AWE USA 2023 Day Two but is very pertinent to the emerging Apple narrative.

The “Building Open Standards for the Metaverse” panel moderated by Moor Insights and Strategy Senior Analyst Anshel Sag brought together XR Safety Initiative (XRSI) founder and CEO Kavya Pearlman, XRSI Advisor Elizabeth Rothman, and Khronos Group President Neil Trevett.

Apple’s tendency to operate outside of standards was discussed. Even before Apple’s entrance into the XR market, this has caused problems for XR app developers – Apple devices even have a different way of sensing depth than Android devices. XR glasses tend to come out first, or only, on Android, in part because of Android’s more open ecosystem.

“Apple currently holds so much power that they could say ‘This is the way we’re going to go.’ and the Metaverse Standards Forum could stand up and say ‘No.’,” said Pearlman, expressing concern over accessibility of “the next generation of the internet”.

Trevett took a different view, saying that standards should present the best option, not the only option. While standards become more useful the more groups use them, competition is healthy and shows diversity in the industry. And diversity in the industry is what sets Apple apart.

“If Apple does announce something, they’ll do a lot of education … it will progress how people use the tech whether they use open standards or not,” said Trevett. “If you don’t have a competitor on the proprietary end of the spectrum, that’s when you should start to worry because it means that no one cares enough about what you’re doing.”

Hope for New Displays

On Day Three, KGOn Tech LLC’s resident optics expert Karl Guttag presented an early morning developer session on “Optical Versus Passthrough Mixed Reality.” Guttag has been justifiably critical of the Meta Quest Pro’s passthrough in particular. He also expressed skepticism about headsets, even optical XR ones, serving as a screen replacement, which is largely what the Apple headset is rumored to be.

“One of our biggest issues in the market is expectations vs. reality,” said Guttag. “What is hard in optical AR is easy in passthrough and vice versa. I see very little overlap in applications … there is also very little overlap in device requirements.”

A New Generation of Interaction

“The Quest 3 has finally been announced, which is great for everyone in the industry,” 3lbXR and 3lb Games CEO Robin Moulder said in her talk “Expand Your Reach: Ditch the Controllers and Jump into Mixed Reality.” “Next week is going to be a whole new level when Apple announces something – hopefully.”

Moulder presented the next round of headsets as the first of a generation that will hopefully be user-friendly enough to increase adoption and deployment, bringing more users and creators into the XR ecosystem.

“By the time we have the Apple headset and the new Quest 3, everybody is going to be freaking out about how great hand tracking is and moving into this new world of possibilities,” said Moulder.

More on AI

AI isn’t distracting anyone from XR, and Apple isn’t distracting anyone from AI. Apple emerging as a conference theme doesn’t mean that anyone was done talking about AI. If you’re sick of reading about AI, at least read the first section below.

Lucid Realities: A Glimpse Into the Current State of Generative AI

After two full days of people talking about AI as a magical world generator that’s going to take the task of content creation off the shoulders of builders, Microsoft Research Engineer Jasmine Roberts set the record straight.

“We’ve passed through this techno-optimist state into dystopia and neither of those are good,” said Roberts. “When people think that [AI] can replace writers, it’s not really meant to do that. You still need human supervisors.”

AI not being able to do everything that a lot of people think it can isn’t the end of the world. Many of the things that people want AI to do are already possible through other, less glamorous tools.

“A lot of what people want from generative AI, they can actually get from procedural generation,” said Roberts. “There are some situations where you need bespoke assets so generative AI wouldn’t really cut it.”

Roberts isn’t against AI – her presentation was simply illustrating that it doesn’t work the way that some industry outsiders are being led to believe. That isn’t the same as saying that it doesn’t work. In fact, she brought a demo of an upcoming AI-powered Clippy. (You remember Clippy, right?)

Augmented Ecologies

Where Roberts was talking about the limitations of AI, the “Augmented Ecologies” panel, moderated by AWE co-founder Tish Shute, saw Three Dog Labs founder Sean White, Morpheus XR CTO Anselm Hook, and Croquet founder and CTO David A. Smith talking about what happens when AI is the new dominant life form on planet Earth.

“We’re kind of moving to a probabilistic model, it’s less deterministic, which is much more in line with ecological models,” said White.

This talk presented a scenario in which developers are no longer the ones running the show. AI takes on a life of its own, and that life is more capable than ours.

“In an ecology, we’re not necessarily at the center, we’re part of the system,” said Hook. “We’re not necessarily able to dominate the technologies that are out there anymore.”

This might scare you, but it doesn’t scare Smith. He described a future in which AI becomes our legacy, able to live in environments that humans never can, like the reaches of space.

“The metaverse and AI are going to redefine what it means to be human,” said Smith. “Ecosystems are not healthy if they are not evolving.”

“No Longer the Apex”

On the morning of Day Two, the Virtual World Society and the VR/AR Association hosted a very special breakfast. Invited were some of the most influential leaders in the immersive technology space. The goal was to discuss the health and future of the XR industry.

The findings will be presented in a report, but some of the concepts were also presented at “Spatial Computing for All,” a fireside chat with Virtual World Society Founder Tom Furness and HTC China President Alvin Graylin, moderated by technology consultant Linda Ricci.

The major takeaway was that industry insiders aren’t particularly worried about the next few years. After that, the way we work might start to change, and that might in turn change how we think about ourselves and value our identities in a changing society.

AWE Is Changing Too

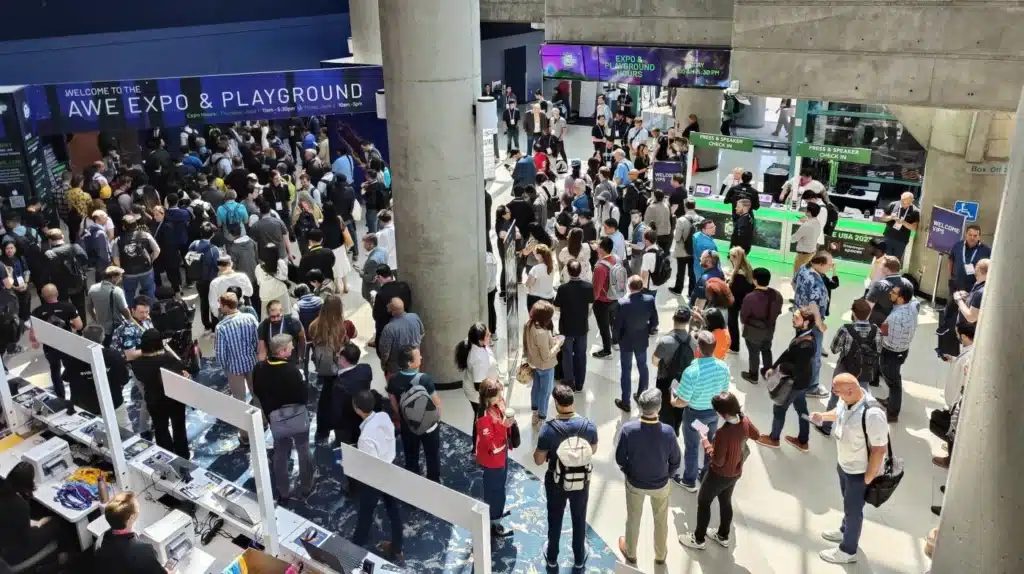

During the show wrap-up, Ori Inbar had some big news: “AWE is leveling up to LA.” This was the fourteenth AWE. Every AWE, except for one year when the entire conference was virtual because of the COVID-19 pandemic, has been held in Santa Clara. But the conference has grown so much that it’s time to move.

“I think we realized this year that we were kind of busting at the seams,” said Inbar. “We need a lot more space.”

Next year’s conference, which will take place from June 18-20, will be held in Long Beach, with “super, super early bird tickets” available for the next few weeks.

Yes, There’s Still More

Most of the Auggie Awards and the winners of Inbar’s climate challenge were announced during a ceremony on the evening of Day Two. The final three Auggies were awarded during the event wrap-up. We didn’t forget them; we just didn’t have room for them in our coverage.

So, there is one final piece of AWE coverage devoted just to the Auggies. Keep an eye out. Spoiler alert: Apple wasn’t nominated in any of the categories.
