Google can now generate a fake AI podcast of your search results

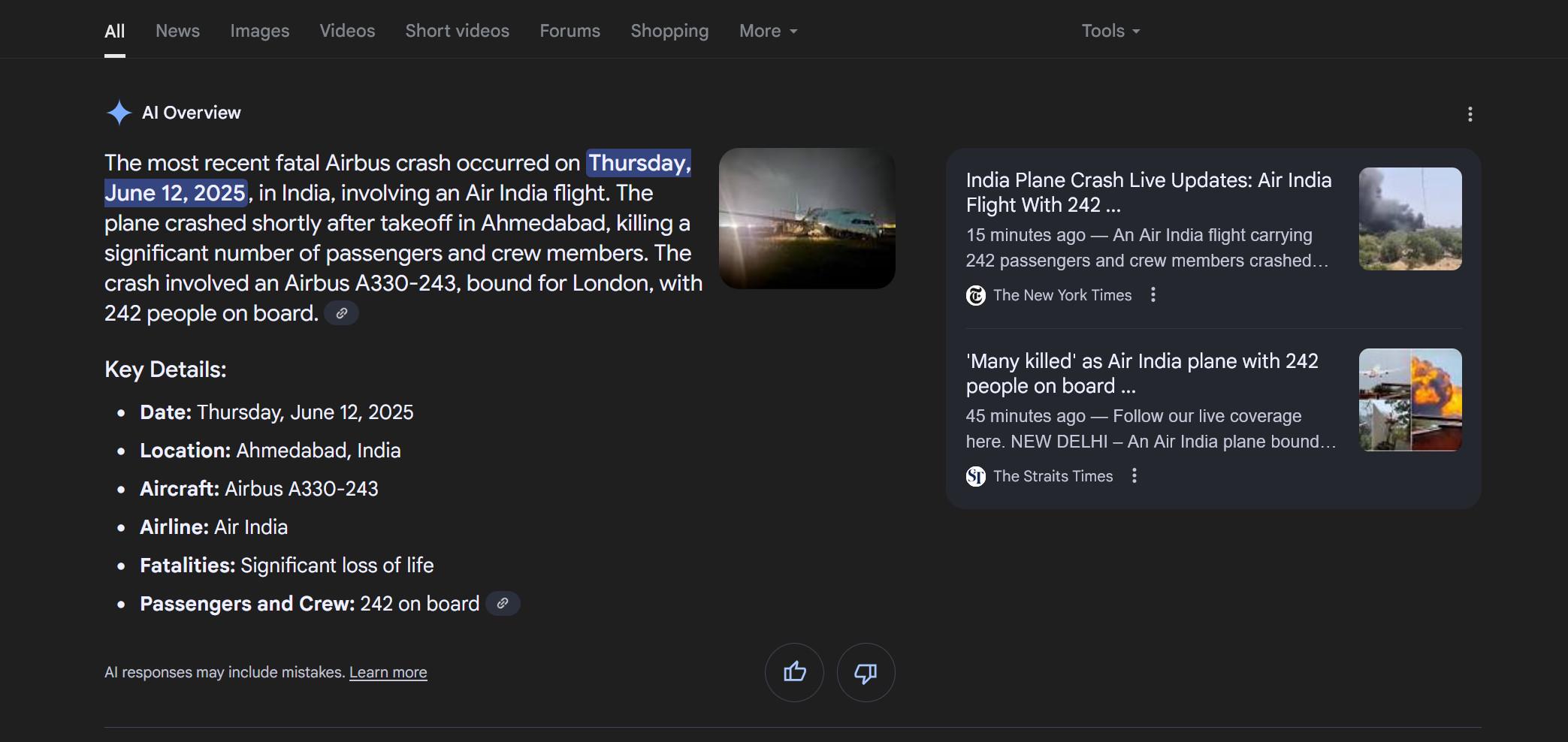

NotebookLM is undoubtedly one of Google’s best implementations of generative AI technology, giving you the ability to explore documents and notes with a Gemini AI model. Last year, Google added the ability to generate so-called “audio overviews” of your source material in NotebookLM. Now, Google has brought those fake AI podcasts to search results as a test. Instead of clicking links or reading the AI Overview, you can have two nonexistent people tell you what the results say.

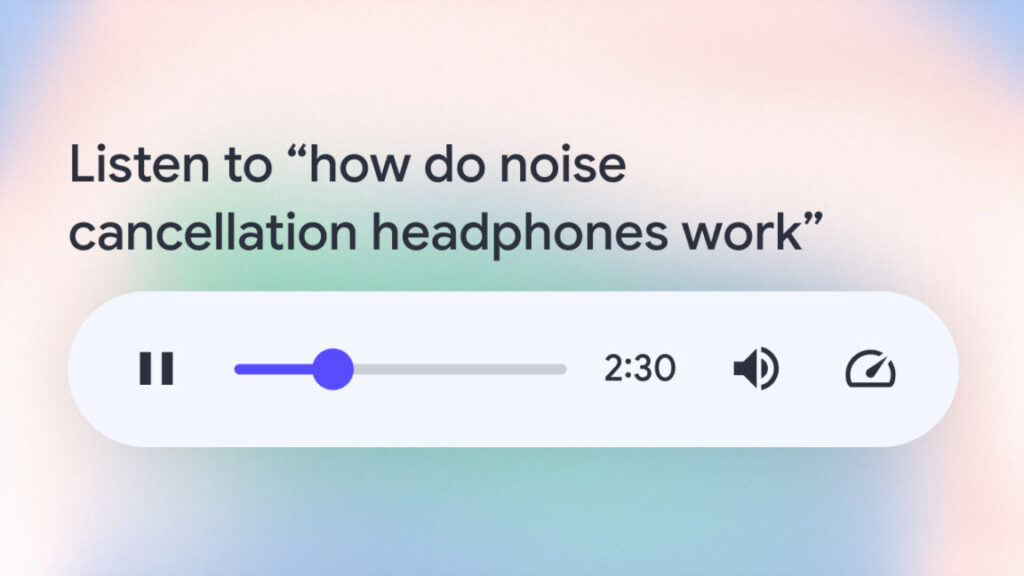

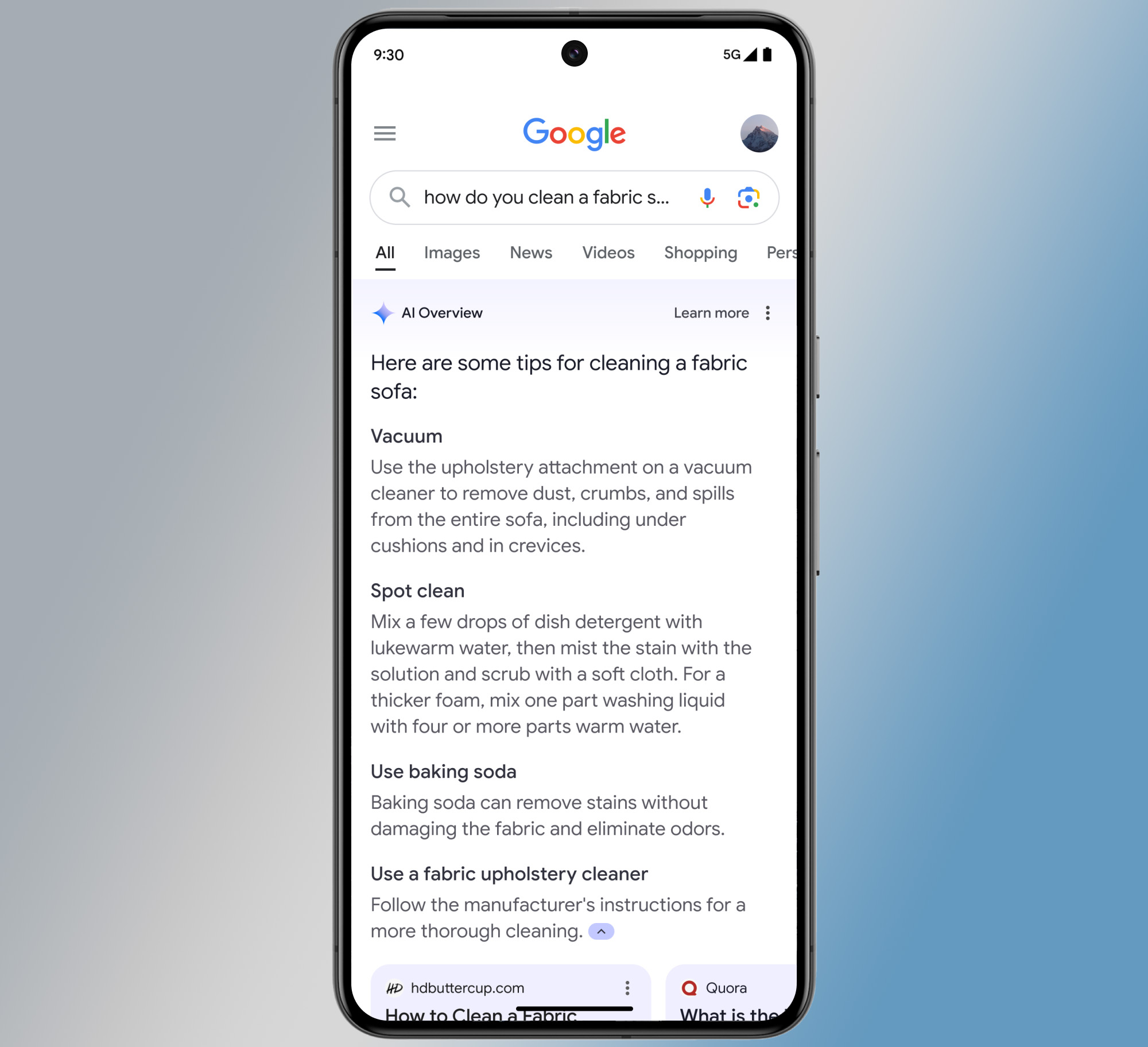

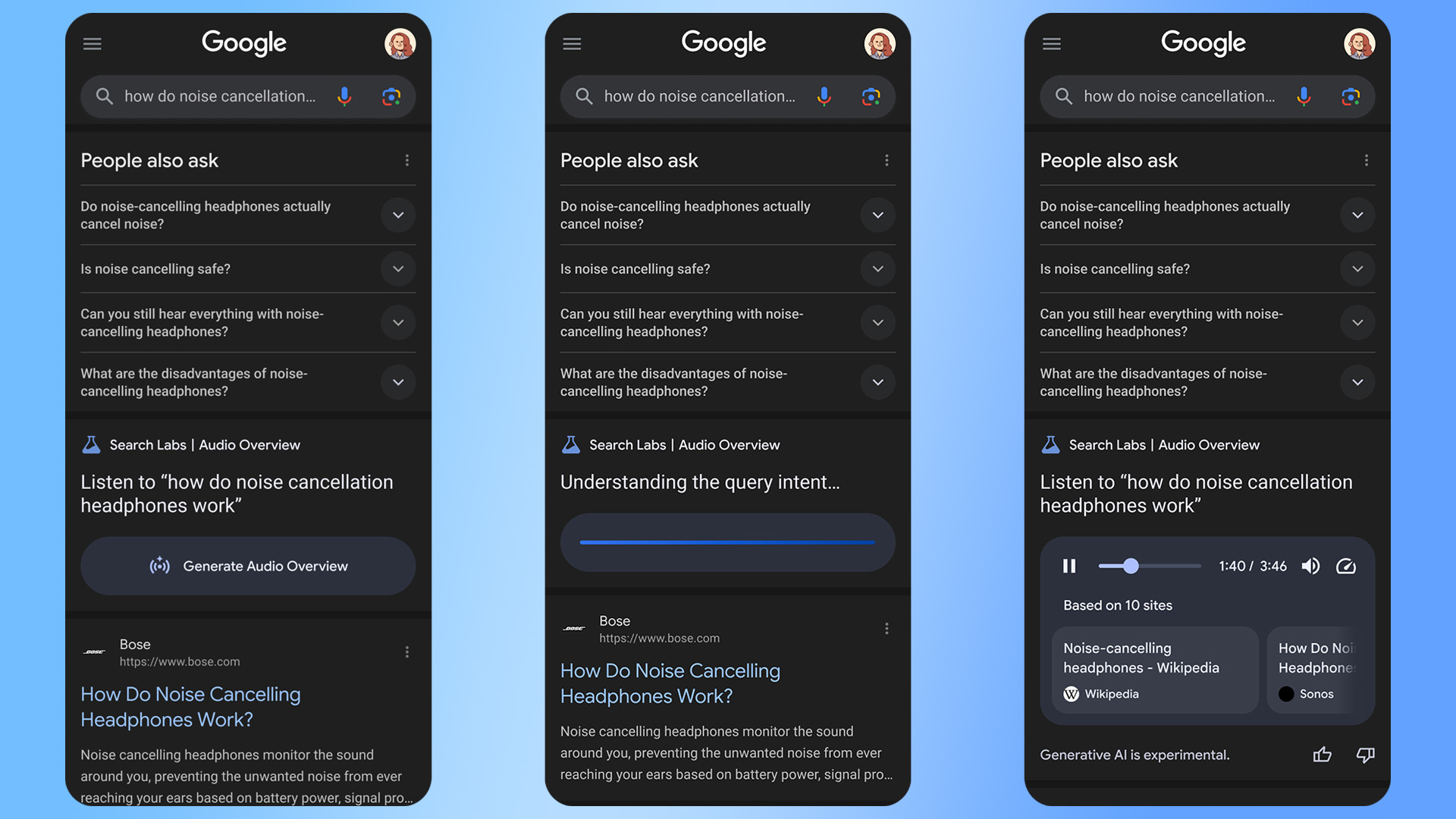

This feature is not rolling out widely yet. It’s available in Search Labs, which means you have to manually enable it. Anyone can opt in to the new Audio Overview search experience, though. If you join the test, you’ll quickly see the embedded player in Google search results. However, it’s not at the top with the usual block of AI-generated text. Instead, you’ll see it after the first few search results, below the “People also ask” knowledge graph section.

Credit: Google

Google isn’t wasting resources to generate the audio automatically, so you have to click the generate button to get started. A few seconds later, you’re given a back-and-forth conversation between two AI voices summarizing the search results. The player includes a list of sources from which the overview is built, as well as the option to speed up or slow down playback.
