Apple and OpenAI currently have the most misunderstood partnership in tech

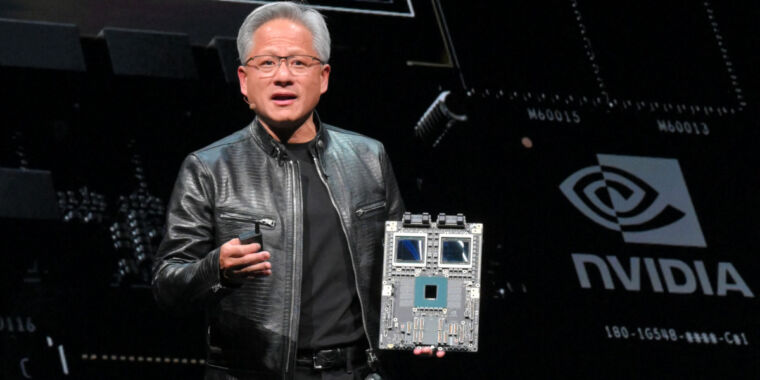

He isn’t using an iPhone, but some people talk to Siri like this.

On Monday, Apple premiered “Apple Intelligence” during a wide-ranging presentation at its annual Worldwide Developers Conference in Cupertino, California. However, the heart of its new tech, an array of Apple-developed AI models, was overshadowed by the announcement of ChatGPT integration into its device operating systems.

Since rumors of the partnership first emerged, we’ve seen confusion on social media about why Apple didn’t develop a cutting-edge GPT-4-like chatbot internally. Despite Apple’s year-long development of its own large language models (LLMs), many perceived the integration of ChatGPT (and opening the door for others, like Google Gemini) as a sign of Apple’s lack of innovation.

“This is really strange. Surely Apple could train a very good competing LLM if they wanted? They’ve had a year,” wrote AI developer Benjamin De Kraker on X. Elon Musk has also been grumbling about the OpenAI deal—and spreading misinformation about it—saying things like, “It’s patently absurd that Apple isn’t smart enough to make their own AI, yet is somehow capable of ensuring that OpenAI will protect your security & privacy!”

While Apple has developed many technologies internally, it has never been shy about integrating outside tech when necessary, whether through acquisitions or built-in clients. In fact, Siri was initially developed by an outside company. But because Apple made a deal with a company like OpenAI, which has been the source of a string of tech controversies recently, it’s understandable that some people question why Apple made the call and what it might entail for the privacy of their on-device data.

“Our customers want something with world knowledge some of the time”

While Apple Intelligence relies largely on Apple-developed LLMs, Apple also realized that there may be times when some users want to use what the company considers the current “best” existing LLM: OpenAI’s GPT-4 family. In an interview with The Washington Post, Apple CEO Tim Cook explained the decision to integrate OpenAI first:

“I think they’re a pioneer in the area, and today they have the best model,” he said. “And I think our customers want something with world knowledge some of the time. So we considered everything and everyone. And obviously we’re not stuck on one person forever or something. We’re integrating with other people as well. But they’re first, and I think today it’s because they’re best.”

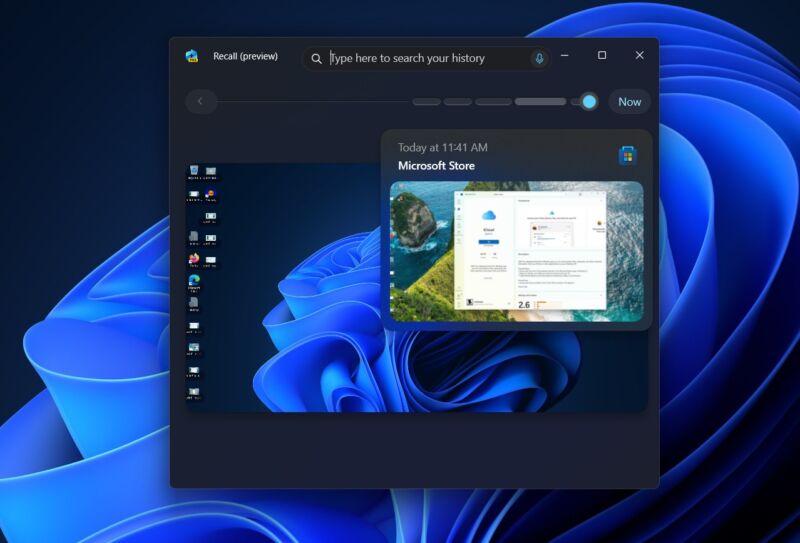

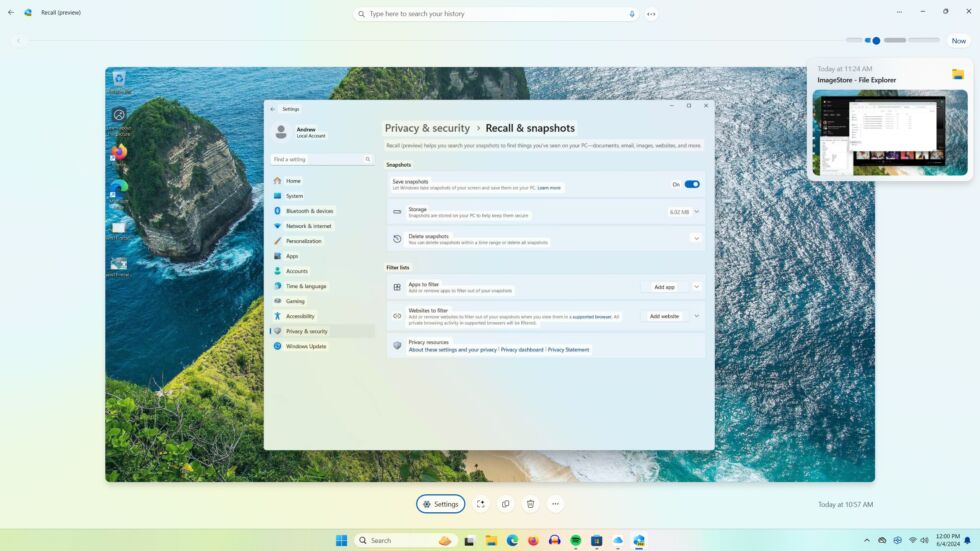

The proposed benefit of Apple integrating ChatGPT into various experiences within iOS, iPadOS, and macOS is that it lets users access ChatGPT’s capabilities without switching between apps, either through the Siri interface or through Apple’s integrated “Writing Tools.” Users will also have the option to connect their paid ChatGPT account to access extra features.

As an answer to privacy concerns, Apple says that before any data is sent to ChatGPT, the OS asks for the user’s permission, and the entire ChatGPT experience is optional. According to Apple, requests are not stored by OpenAI, and users’ IP addresses are hidden. Apparently, communication with OpenAI servers happens through API calls similar to using the ChatGPT app on iOS, and there is reportedly no deeper OS integration that might expose user data to OpenAI without the user’s permission.

We can only take Apple’s word for it at the moment, of course, and solid details about Apple’s AI privacy efforts will emerge once security experts get their hands on the new features later this year.

Apple’s history of tech integration

So you’ve seen why Apple chose OpenAI. But why look to outside companies for tech? In some ways, Apple building an external LLM client into its operating systems isn’t too different from what it has previously done with streaming video (the YouTube app on the original iPhone), Internet search (Google search integration), and social media (integrated Twitter and Facebook sharing).

The press has positioned Apple’s recent AI moves as Apple “catching up” with competitors like Google and Microsoft in terms of chatbots and generative AI. But playing it slow and cool has long been part of Apple’s M.O.—not necessarily introducing the bleeding edge of technology but improving existing tech through refinement and giving it a better user interface.
