OpenAI’s Murati shocks with sudden departure announcement

thinning crowd —

OpenAI CTO’s resignation coincides with news about the company’s planned restructuring.

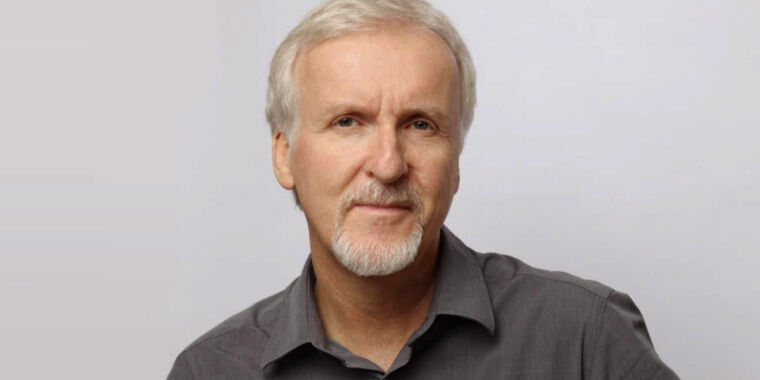

Mira Murati, Chief Technology Officer of OpenAI, speaks during The Wall Street Journal’s WSJ Tech Live Conference in Laguna Beach, California on October 17, 2023.

On Wednesday, OpenAI Chief Technology Officer Mira Murati announced she is leaving the company in a surprise resignation shared on the social network X. Murati joined OpenAI in 2018, serving for six-and-a-half years in various leadership roles, most recently as CTO.

“After much reflection, I have made the difficult decision to leave OpenAI,” she wrote in a letter to the company’s staff. “While I’ll express my gratitude to many individuals in the coming days, I want to start by thanking Sam and Greg for their trust in me to lead the technical organization and for their support throughout the years,” she continued, referring to OpenAI CEO Sam Altman and President Greg Brockman. “There’s never an ideal time to step away from a place one cherishes, yet this moment feels right.”

At OpenAI, Murati oversaw the company’s technical strategy and product development, including the launch and improvement of DALL-E, Codex, Sora, and the ChatGPT platform, while also leading research and safety teams. In public appearances, Murati often spoke about ethical considerations in AI development.

Murati’s departure comes as OpenAI stands at a major crossroads, with a plan to alter its nonprofit structure. According to a Reuters report published today, OpenAI is working to reorganize its core business into a for-profit benefit corporation that would no longer be controlled by its nonprofit board. The move, which would give CEO Sam Altman equity in the company for the first time, could value OpenAI at $150 billion.

Murati stated her decision to leave was driven by a desire to “create the time and space to do my own exploration,” though she didn’t specify her future plans.

Proud of safety and research work

OpenAI CTO Mira Murati seen debuting GPT-4o during OpenAI’s Spring Update livestream on May 13, 2024.

OpenAI

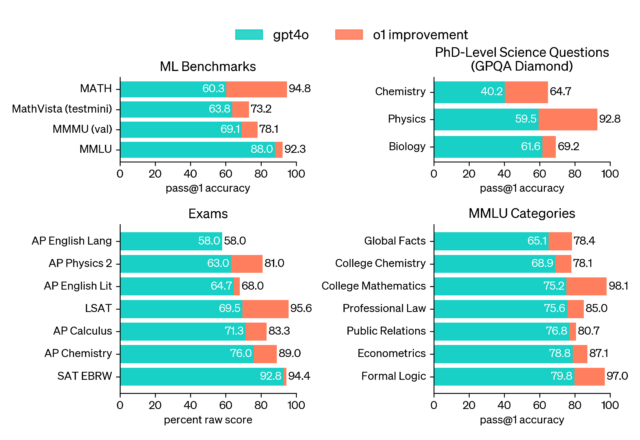

In her departure announcement, Murati highlighted recent developments at OpenAI, including innovations in speech-to-speech technology and the release of OpenAI o1. She cited what she considers the company’s progress in safety research and the development of “more robust, aligned, and steerable” AI models.

Altman replied to Murati’s tweet directly, expressing gratitude for Murati’s contributions and her personal support during challenging times, likely referring to the tumultuous period in November 2023 when the OpenAI board of directors briefly fired Altman from the company.

“It’s hard to overstate how much Mira has meant to OpenAI, our mission, and to us all personally,” he wrote. “I feel tremendous gratitude towards her for what she has helped us build and accomplish, but I most of all feel personal gratitude towards her for the support and love during all the hard times. I am excited for what she’ll do next.”

Not the first major player to leave

An image Ilya Sutskever tweeted with his OpenAI resignation announcement. From left to right: OpenAI Chief Scientist Jakub Pachocki, President Greg Brockman (on leave), Sutskever (now former Chief Scientist), CEO Sam Altman, and soon-to-be-former CTO Mira Murati.

With Murati’s exit, Altman remains one of the few long-standing senior leaders at OpenAI, which has seen significant shuffling in its upper ranks recently. In May 2024, former Chief Scientist Ilya Sutskever left to form his own company, Safe Superintelligence, Inc. (SSI), focused on building AI systems that far surpass humans in logical capabilities. That came just six months after Sutskever’s involvement in the temporary removal of Altman as CEO.

John Schulman, an OpenAI co-founder, departed earlier in 2024 to join rival AI firm Anthropic, and in August, OpenAI President Greg Brockman announced he would be taking a sabbatical until the end of the year.

The leadership shuffles have raised questions among critics about the internal dynamics at OpenAI under Altman and the state of OpenAI’s future research path, which has been aiming toward creating artificial general intelligence (AGI)—a hypothetical technology that could potentially perform human-level intellectual work.

“Question: why would key people leave an organization right before it was just about to develop AGI?” asked xAI developer Benjamin De Kraker in a post on X just after Murati’s announcement. “This is kind of like quitting NASA months before the moon landing,” he wrote in a reply. “Wouldn’t you wanna stick around and be part of it?”

Altman said that more information about transition plans would be forthcoming. For now, it remains unclear who will step into Murati’s role and how OpenAI will adapt to this latest leadership change, just as the company is poised to adopt a corporate structure that may consolidate more power directly under Altman. “We’ll say more about the transition plans soon, but for now, I want to take a moment to just feel thanks,” Altman wrote.
