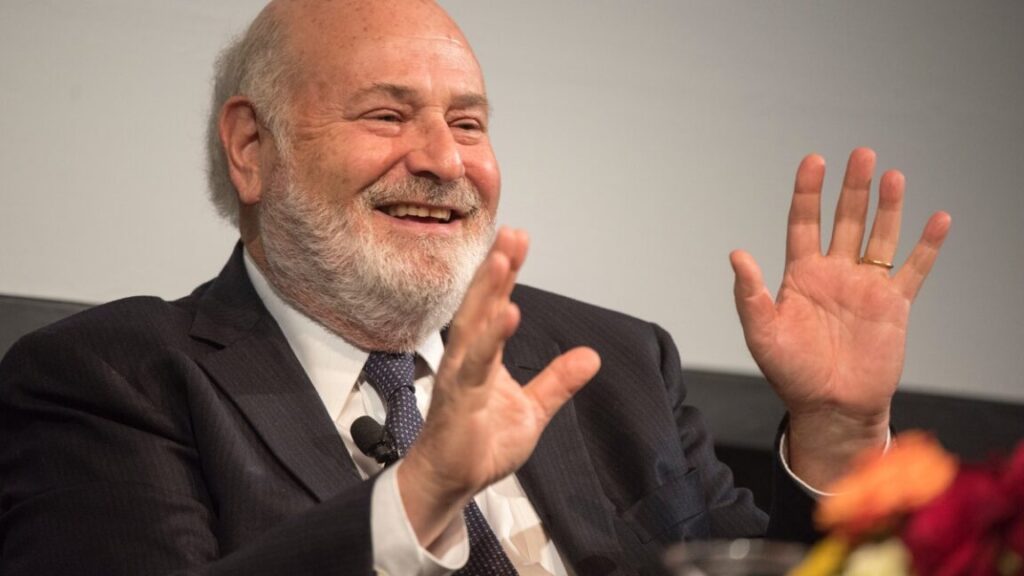

Filmmaker Rob Reiner, wife, killed in horrific home attack

We woke up this morning to the horrifying news that beloved actor and director Rob Reiner and his wife Michele were killed in their Brentwood home in Los Angeles last night. Both had been stabbed multiple times. Details are scarce, but the couple’s 32-year-old son, Nick—who has long struggled with addiction and recently moved back in with his parents—has been arrested in connection with the killings, with bail set at $4 million. [UPDATE: Nick Reiner’s bail has been revoked and he faces possible life in prison.]

“As a result of the initial investigation, it was determined that the Reiners were the victims of homicide,” the LAPD said. “The investigation further revealed that Nick Reiner, the 32-year-old son of Robert and Michele Reiner, was responsible for their deaths. Nick Reiner was located and arrested at approximately 9:15 p.m. He was booked for murder and remains in custody with no bail. On Tuesday, December 16, 2025, the case will be presented to the Los Angeles County District Attorney’s Office for filing consideration.”

“It is with profound sorrow that we announce the tragic passing of Michele and Rob Reiner,” the family said in a statement confirming the deaths. “We are heartbroken by this sudden loss, and we ask for privacy during this unbelievably difficult time.”

Reiner started his career as an actor, best known for his Emmy-winning role as Meathead, son-in-law to Archie Bunker, on the 1970s sitcom All in the Family. (“I could win the Nobel Prize and they’d write ‘Meathead wins the Nobel Prize,’” Reiner once joked about the enduring popularity of the character.) Reiner then turned to directing, although he continued to make small but memorable appearances in films such as Throw Momma from the Train, Sleepless in Seattle, The First Wives Club, and The Wolf of Wall Street, as well as on TV’s New Girl.

His first feature film as a director was an instant classic: 1984’s heavy metal mockumentary This Is Spinal Tap (check out the ultra-meta four-minute alt-trailer). He followed it with a string of hits: The Sure Thing, Stand by Me, The Princess Bride, When Harry Met Sally…, Misery, the Oscar-nominated A Few Good Men, The American President, Ghosts of Mississippi, and The Bucket List. His 2015 film Being Charlie was co-written with his son Nick and was loosely based on Nick’s experience with addiction. Reiner’s most recent films were a 2024 political documentary about the rise of Christian nationalism and this year’s delightful Spinal Tap II: The End Continues.
