Researchers engineer bacteria to produce plastics

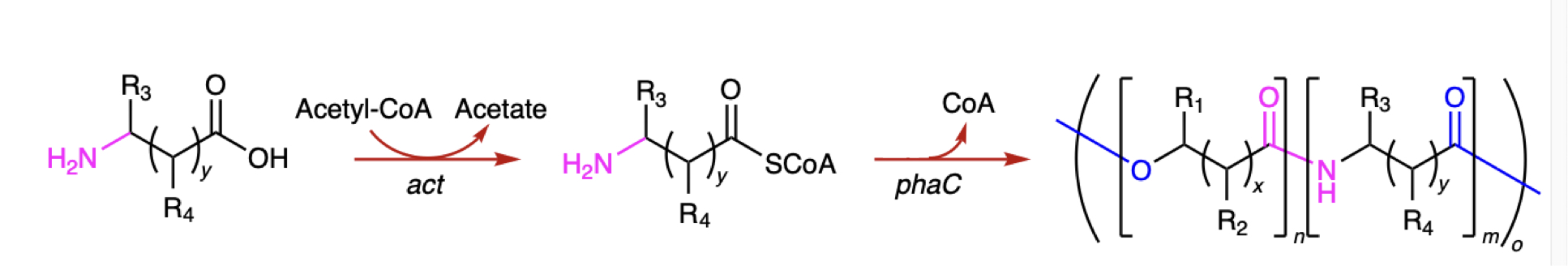

One of the enzymes used in this system takes an amino acid (left) and links it to coenzyme A. The second takes these activated amino acids and links them into a polymer. Credit: Chae et al.

Normally, PHA synthase joins its building blocks with links that run through an oxygen atom. But it's also possible to form a related chemical link that instead runs through a nitrogen atom, such as the nitrogen in an amino acid's amino group. However, no known enzyme catalyzed that kind of reaction. So the researchers decided to test whether existing enzymes could be induced to do something they don't normally do.

The researchers started with an enzyme from Clostridium that links chemicals to coenzyme A and has a reputation for not being picky about which chemicals it works on. It turned out to be reasonably good at linking amino acids to coenzyme A. To link the amino acids together, they used an enzyme from Pseudomonas carrying four mutations that expanded the range of molecules it accepts as starting materials. In a test tube, the system worked: amino acids were linked together into a polymer.

The question was whether it would work in cells. Unfortunately, one of the two enzymes turned out to be mildly toxic to E. coli, slowing its growth. So the researchers evolved a strain of E. coli that could tolerate the protein. When expressing both enzymes, the cells produced small amounts of an amino acid polymer. If the researchers added an excess of a particular amino acid to the medium the cells were growing in, the polymer became biased toward incorporating that amino acid.

Boosting polymer production

However, the polymer yield, measured as a fraction of the bacteria's weight, was fairly low. "It was reasoned that these [amino acids] might be more efficiently incorporated into the polymer if generated within the cells from a suitable carbon source," the researchers write. So the researchers added extra copies of the genes needed to produce one specific amino acid, lysine. That worked: the cells produced more polymer, and a higher percentage of it was lysine.
