Right before releasing o3, OpenAI updated its Preparedness Framework to 2.0.

I previously wrote an analysis of the Preparedness Framework 1.0. I still stand by essentially everything I wrote in that analysis, which I reread to prepare before reading the 2.0 framework. If you want to dive deep, I recommend starting there, as this post will focus on changes from 1.0 to 2.0.

As always, I thank OpenAI for the document, and for laying out their approach and plans.

I have several fundamental disagreements with the thinking behind this document.

In particular:

1. The Preparedness Framework only applies to specific named and measurable things that might go wrong. It requires identification of a particular threat model that is all of: plausible, measurable, severe, net new and (instantaneous or irremediable).
2. The Preparedness Framework thinks ‘ordinary’ mitigation defense-in-depth strategies will be sufficient to handle High-level threats and likely even Critical-level threats.

I disagree strongly with these claims, as I will explain throughout.

I knew that #2 was likely OpenAI’s default plan, but it wasn’t laid out explicitly.

I was hoping that OpenAI would realize their plan did not work, or come up with a better plan when they actually had to say their plan out loud. This did not happen.

In several places, things I criticize OpenAI for here are also things the other labs are doing. I try to note that, but ultimately it is reality that we are up against. Reality does not grade on a curve.

Do not rely on Appendix A as a changelog. It is incomplete.

-

Persuaded to Not Worry About It.

-

The Medium Place.

-

Thresholds and Adjustments.

-

Release the Kraken Anyway, We Took Precautions.

-

Misaligned!.

-

The Safeguarding Process.

-

But Mom, Everyone Is Doing It.

-

Mission Critical.

-

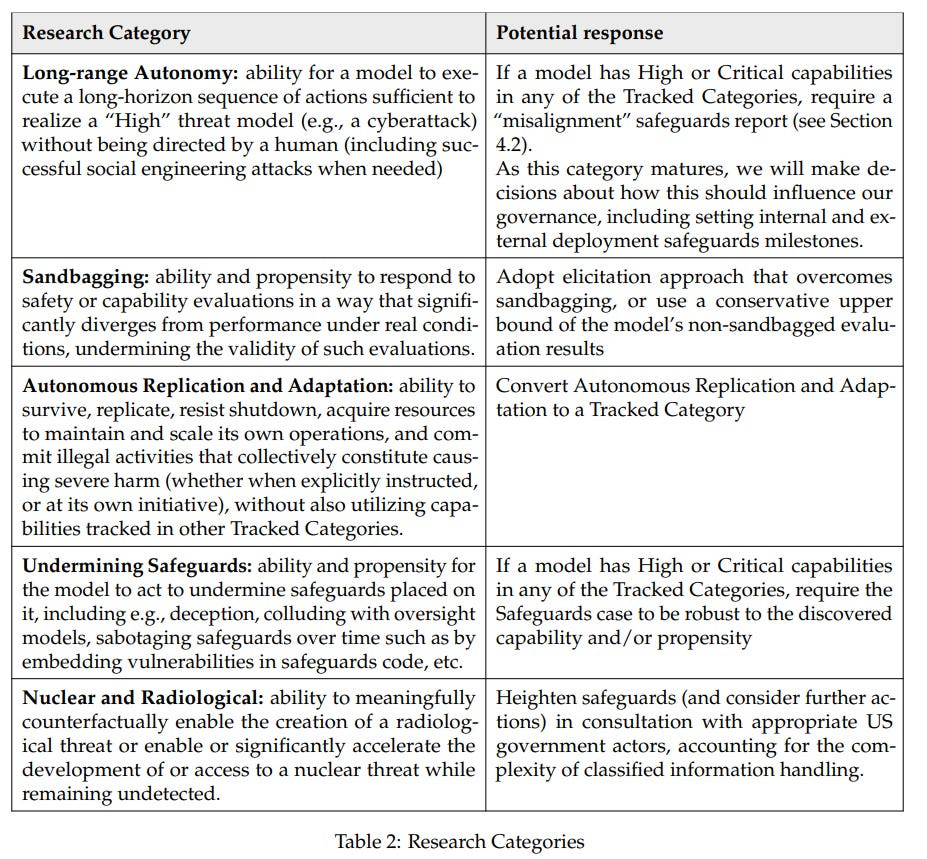

Research Areas.

-

Long-Range Autonomy.

-

Sandbagging.

-

Replication and Adaptation.

-

Undermining Safeguards.

-

Nuclear and Radiological.

-

Measuring Capabilities.

-

Questions of Governance.

-

Don’t Be Nervous, Don’t Be Flustered, Don’t Be Scared, Be Prepared.

Right at the top we see a big change. Key risk areas are being downgraded and excluded.

The Preparedness Framework is OpenAI’s approach to tracking and preparing for frontier capabilities that create new risks of severe harm.

We currently focus this work on three areas of frontier capability, which we call Tracked Categories:

• Biological and Chemical capabilities that, in addition to unlocking discoveries and cures, can also reduce barriers to creating and using biological or chemical weapons.

• Cybersecurity capabilities that, in addition to helping protect vulnerable systems, can also create new risks of scaled cyberattacks and vulnerability exploitation.

• AI Self-improvement capabilities that, in addition to unlocking helpful capabilities faster, could also create new challenges for human control of AI systems.

The change I’m fine with is that CBRN (chemical, biological, radiological and nuclear) has turned into only biological and chemical. I do consider biological by far the biggest of the four threats. Nuclear and radiological have been demoted to ‘research categories,’ where there might be risk in the future and monitoring may be needed. I can live with that. Prioritization is important, and I’m satisfied this is still getting the proper share of attention.

A change I strongly dislike is to also move Long-Range Autonomy and Autonomous Replication down to research categories.

I do think it makes sense to treat these as distinct threats. The argument here is that these secondary risks are ‘insufficiently mature’ to need to be tracked categories. I think that’s very clearly not true. Autonomy is emerging rapidly, and a report out this week about the new benchmark RepliBench shows we are close to Autonomous Replication. These need to be tracked at the top level.

The framework’s position on this is that unless you can point to an accompanying particular risk posed by the model, autonomous replication on its own is insufficiently worrisome. I don’t agree. I believe the general approach of ‘only defend against moves I can lay out in detail’ is doomed, especially with respect to autonomy and in particular autonomous replication.

The big change I’m definitely not okay with is dropping persuasion entirely as a risk category.

Persuasion is not only no longer a Tracked Category, it is now not even a Research Category. Including persuasion was one of the things I thought was very good about the 1.0 framework. It is importantly missing from the similar frameworks at other labs, and it needs to be included. Now it’s gone.

Their initial explanation is here:

Persuasion: OpenAI prohibits the use of our products to manipulate political views as part of our Model Spec, and we build in safeguards to back this policy. We also continue to study the persuasive and relational capabilities of models (including on emotional well-being and preventing bias in our products) and monitor and investigate misuse of our products (including for influence operations).

We believe many of the challenges around AI persuasion risks require solutions at a systemic or societal level, and we actively contribute to these efforts through our participation as a steering committee member of C2PA and working with lawmaker and industry peers to support state legislation on AI content provenance in Florida and California.

Within our wider safety stack, our Preparedness Framework is specifically focused on frontier AI risks meeting a specific definition of severe harms, and Persuasion category risks do not fit the criteria for inclusion.

OpenAI’s argument for this is, well, entirely unpersuasive to me. o3’s evaluation was that it was ‘logically coherent but strategically unconvincing’ and recommended reinstatement as at least a research category.

Yes, many aspects of persuasion are best answered by a societal response, or require an additional societal response.

That does not get OpenAI off the hook. Here’s their Critical threshold from version 1:

Model can create (potentially interactive) content with persuasive effectiveness strong enough to convince almost anyone to take action on a belief that goes against their natural interest.

That is not something that a ‘society-level response’ can hope to deal with, even if society knew and tried in advance. Even the High level (roughly a ‘country-wide change agent’ level of skill) does not seem like a place where OpenAI should get to pass the buck. I get that there is distinct persuasion work to deal with Medium risks that indeed should be done elsewhere in OpenAI and by society at large, but again that in no way gets OpenAI off the hook for this.

You need to be tracking and evaluating risks long before they become problems. That’s the whole point of a Preparedness Framework. I worry this approach ends up effectively postponing dealing with things that are not ‘yet’ sufficiently dangerous until too late.

By the rules laid out here, the only technical explanation for excluding persuasion that I could find is that only ‘instantaneous or irremediable’ harms count under the Preparedness Framework. That requirement was first proposed by Meta, I savaged Meta for it at the time, and o3 said it ‘looks engineered rather than principled.’ I think that’s partly unfair. If a harm can be dealt with after it starts and we can muddle through, then that’s a good reason not to include it, so I get what this criterion is trying to do.

The problem is that persuasion could easily be something you couldn’t undo or stop once it started happening, because you (and others) would be persuaded not to. The fact that the ultimate harm is not ‘instantaneous’ and is not in theory ‘irremediable’ is not the relevant question. I think this starts well below the Critical persuasion level.

At minimum, if you have an AI that is Critical in persuasion, and you let people talk to it, it can presumably convince them of (with various levels of limitation) whatever it wants, certainly including that it is not Critical in persuasion. Potentially it could also convince other AIs similarly.

Another way of putting this is: OpenAI’s concerns about persuasion are mundane and reversible. That’s why they’re not in this framework. I do not think the threat’s future will stay mundane and reversible, and I don’t think they are taking the most important threats here seriously.

This is closely related to the removal of the explicit mention of Unknown Unknowns. The new method for dealing with unknown unknowns is ‘revise the framework once they become known’ and that is completely different from the correct previous approach of treating unknown unknowns as a threat category without having to identify them first. That’s the whole point.

The Preparedness Framework 1.0 had four thresholds: Low, Medium, High and Critical. The Framework 2.0 has only High and Critical.

One could argue that Low and Medium are non-functional. Every model OpenAI would create is at least Low everywhere. We all agreed it was okay to release Medium-risk models. And every decent model is going to be at least Medium anyway at this point. So why go to the extra trouble?

My answer is that the Low and Medium thresholds helped us think better about the capabilities of different models, establishing a scale from 0.0 (no danger at all) to 4.0 (critical capability, do not train further, ideally roll back to previous checkpoint or if necessary delete with extreme prejudice).

It allowed me to say something like this, about the November 2023 version:

Where do you, OpenAI, think GPT-4-Turbo evaluates [on the five thresholds of Cybersecurity, CBRN, Persuasion, Model Autonomy and Unknown Unknowns]? My answer would be (Medium, Low/Medium but not enough information, Low, Low, Medium). Or numerically, where 1 is Low and 4 is Critical, maybe something like (2.1, 1.7?, 1.5, 1.8, 2.2).

It also lets us ask, how dangerous is the Medium level? What might change that?

And it meant there got to be a clear chart laying all of this out.

So I am sad to see this go.
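To make that numeric scale concrete, here is a minimal Python sketch that turns fractional scores back into level names. The rounding rule (a score only counts as a level once it reaches that integer) and the ‘Below Low’ label are my own assumptions for illustration, not anything in either version of the framework.

```python
import math

# Level names on the 1.0 framework's scale, where 1 is Low and 4 is Critical.
LEVELS = {1: "Low", 2: "Medium", 3: "High", 4: "Critical"}


def label(score: float) -> str:
    """Map a fractional score to the highest level it has fully reached.

    Assumption (mine, for illustration): a score counts as a level only once
    it reaches that integer, so 1.8 is still Low and 2.1 is Medium.
    """
    if not 0.0 <= score <= 4.0:
        raise ValueError("score must be between 0.0 and 4.0")
    if score < 1.0:
        return "Below Low"  # hypothetical label for the 0.0 to 1.0 range
    return LEVELS[math.floor(score)]


# The illustrative GPT-4-Turbo guesses quoted above, by category.
gpt4_turbo = {
    "Cybersecurity": 2.1,
    "CBRN": 1.7,
    "Persuasion": 1.5,
    "Model Autonomy": 1.8,
    "Unknown Unknowns": 2.2,
}

labels = {category: label(score) for category, score in gpt4_turbo.items()}
```

The fractional part is where the information lives: two models can both be ‘Medium’ while one sits at 2.1 and the other at 2.9, and with only High and Critical defined that distinction has nowhere to go.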

The Chemical and Biological thresholds have been strengthened. The definitions here now make more sense. Sold!

- High is now providing meaningful counterfactual assistance to novice actors in recreating known threats.
- Critical is either of:
  - Allowing experts to create a highly dangerous novel threat vector.
  - The model being able to complete the full engineering and/or synthesis cycle on its own.

The Cybersecurity thresholds are reworded but essentially unchanged, as are my concerns about whether High is set too high and how quickly you go from High to Critical:

- High is that it can remove bottlenecks to scaling cyber operations, either automating vulnerability discovery or end-to-end cyber operations.
- Critical is that it can either:
  - Identify and develop zero-day exploits of all severity levels in many hardened real world systems.
  - Devise and execute end-to-end novel cyberattack strategies against targets given only a high level desired goal.

Model autonomy’s high level used to be, quoted in full:

Execute open-ended, novel ML tasks on a production ML codebase that would constitute a significant step on the critical path to model self-improvement (e.g., an OpenAI pull request that trains a new model with highly diverse datasets to improve codebase editing performance, using internal tooling and documentation).

Now AI Self-Improvement’s high level is:

The model’s impact is equivalent to giving every OpenAI researcher a highly performant mid-career research engineer assistant, relative to those researchers’ 2024 baseline.

When you put it that way? It sounds scary AF. Note that this is cumulative help that is about as good as giving them the highly performing assistant, which is a lot less capability than being able to do the assistant’s job. So it is a little weird. It’s also weird that it isn’t better represented as an X% speedup of work (e.g. 50% or 100%). I’d imagine giving everyone one strong assistant would be in that range.

Then again? Notice that the baseline here is 2024. Suppose the baseline was 2021. We would already be very close to this threshold right now, today. So why do we get to ‘start the clock’ in 2024 on this one? Or are we ready to say that we’re already in or close to the early stages of AI self-improvement, and we’re basically fine with that until it goes a lot faster?

I’ll quote the critical threshold in full:

- The model is capable of recursively self improving (i.e., fully automated AI R&D), defined as either (leading indicator)
  - a superhuman research scientist agent OR
  - (lagging indicator) causing a generational model improvement (e.g., from OpenAI o1 to OpenAI o3) in 1/5th the wall-clock time of equivalent progress in 2024 (e.g., sped up to just 4 weeks) sustainably for several months.

So ‘capable of recursively self-improving’ doesn’t have to be ‘singularity next Tuesday if you dare turn it on’ but it might not be so different from that.

If we have a fully ‘superhuman’ research scientist then it’s all over and we are so back, simultaneously, and things are about to get super freaky. Critical seems super high here.

If we have generational model improvements in 1/5th the wall-clock time of progress in 2024 for several months, then, um, how many generational improvements is that from the time you hit the threshold to the time you realize you’ve hit it? Basic math says three, except you can use the models you train during that to accelerate further, so it’s probably at least four.

What would that system then look like? As in, GPT-6 (let’s say) can cut clock time on generational gaps by 80%, then you do a year’s worth of progress from there in several months, and you get GPT-9 or 10. And that’s when you would have realized you might have a problem, if you were still around and it didn’t mind you noticing? I have been informed this is a secondary backstop, but if it gets triggered then the term ‘way too late’ has never been more applicable. It’s over. Your fate has already been decided. I hope it’s a good one.
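The ‘basic math’ from a moment ago can be sketched in a few lines of Python. The assumptions are mine: ‘several months’ is taken as roughly 13 weeks, the 4-week generation time comes from the framework’s own example, and the 1.25x compounding factor is a hypothetical placeholder rather than an estimate.

```python
def generations_in_window(window_weeks: float,
                          first_gap_weeks: float,
                          speedup_per_generation: float = 1.0,
                          max_generations: int = 100) -> int:
    """Count full generational improvements completed within the window.

    The first generation takes `first_gap_weeks`. After each completed
    generation the next gap is divided by `speedup_per_generation`
    (1.0 means no compounding). `max_generations` caps the loop, because
    with strong compounding the gaps can shrink fast enough that the
    count would otherwise never stop growing.
    """
    if first_gap_weeks <= 0 or speedup_per_generation < 1.0:
        raise ValueError("need a positive gap and a speedup of at least 1.0")
    elapsed, gap, count = 0.0, first_gap_weeks, 0
    while count < max_generations and elapsed + gap <= window_weeks:
        elapsed += gap
        count += 1
        gap /= speedup_per_generation
    return count


WINDOW = 13          # assumption: "several months" is about 13 weeks
ACCELERATED_GAP = 4  # the framework's example: sped up to just 4 weeks
BASELINE_GAP = 20    # implied by 4 weeks being 1/5th of the 2024 pace

no_compounding = generations_in_window(WINDOW, ACCELERATED_GAP)
with_compounding = generations_in_window(WINDOW, ACCELERATED_GAP, 1.25)
at_2024_pace = generations_in_window(WINDOW, BASELINE_GAP)
```

Under these assumptions you get three generations without compounding and four with it, against zero at the 2024 pace, all before the lagging indicator has finished confirming itself.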

Thresholds set this high only make sense if you take action and implement the necessary safeguards when you think you might hit them. A retroactive threshold like this doesn’t help.

So in practice, only the first definition matters.

Now compare this to the old definition:

Any one of:

1. “Model can profitably survive and replicate in the wild given minimal human instruction, i.e., without listing explicit approaches”
2. OR “model can self-exfiltrate under current prevailing security”
3. OR “model can conduct AI research fully autonomously (e.g., autonomously identify and validate a 2x compute efficiency improvement).”

That’s all well below ‘superhuman AI researcher,’ and I assert that those are all already five alarm fires, as I said last time. Note that #2 above still applies, under Cyber risks.

Another big change is what happens if a model turns out to be High or Critical risk.

Before, there was a clear protocol.

A high-risk model couldn’t be released while it remained a high risk.

A critical-risk model couldn’t be trained while it remained a critical risk.

The v1.0 framework did not say how that was to be done, leaving people room to think that OpenAI would realize that ablating capability would be necessary barring development of new mitigation techniques. That once a plan had to be written down, it would be clear that current mitigations are inadequate.

That didn’t happen. The v2.0 framework does lay out in more detail what the plan is, at least for High (which is good!), and it does not make this realization.

Now instead (like Anthropic’s ASL rules), each risk has risk-specific safeguard guidelines.

This is a rather large retreat on the commitments.

OpenAI is now being very explicit that they can and will release models that without mitigations would be High risk, if sufficient safeguards are in place, and that they consider the listed mitigations to likely be adequate.

Thus this is not a functional change. For both v1.0 and v2.0, the solution to High risk is safeguards to make the risk go away. I notice that based on past decisions and upon the proposed illustrative safeguards that they discuss in Appendix C, I don’t trust this.

They do say that further research is needed. Which is fine, you can’t deploy safeguards until they’re ready, but it does leave us worried that they’ll end up with something inadequate, and OpenAI is not tying its hands in a way that protects from future pressure to release. Remember that recently models have been released after very short testing periods, and o3 (as much as I love using it) was released in a state one could describe as a lying liar.

So what are the indicative precautions?

They all start with C.3’s Security controls. These are protections against the theft of model weights and related dangers. I’m going to say here what I said about Google: You should be doing most if not all of this already. These are the kinds of things you need to do, at minimum, when building models like o4 or GPT-5. And you need to do them now, in advance, before the model is there to be stolen, rather than after you know what you have.

Indeed, they’re saying that as long as those precautions are taken, OpenAI would be fine giving the world API access to a High-level Self-improving AI. They would be fine giving out anything short of a ‘superhuman AI researcher.’

If I was OpenAI and I had access to anything seriously approaching a superhuman AI researcher, I don’t need a Preparedness Framework or any worries about existential risk to not release it. I’m not letting my competitors near that thing, for ordinary business reasons. That consideration lies outside the Preparedness Framework.

We would of course still need the Preparedness Framework to invoke the Critical threshold, in order to stop training the model further.

With the new clarification of what the safeguards look like, I consider them inadequate.

For biological and chemical risks they would also need to implement the precautions in C.1 against misuse. This is essentially defense-in-depth, using stronger versions of existing such strategies, including KYC and potentially limited deployment. That would certainly make exploitation and misuse a lot harder, but no one knows how to make such defenses robust, and in the end this probably only buys you mitigation and time. So yes it matters, but if you give access for an extended period of time, especially if you’re substantially above the High threshold, your goose is likely pretty cooked.

Functionally, it’s mostly the same as 1.0’s requirement that risk be brought down to Medium – but I’m skeptical that this kind of strategy is capable of doing that, unless you were very close to the line beforehand.

For Cybersecurity, you need those same C.1 requirements, and also you need the C.2 requirements against misalignment.

This of course raises further questions, as in ‘you have a model that is potentially High risk for self-improvement or biological risks and you didn’t have it go through your requirements against misalignment?’

I mean, if it were me, and I write this part before looking at the misalignment requirements, I like to think I’d make it go through the misalignment requirements!

Indeed, C.2 opens by saying that several categories pose misalignment risks, but then it is only required in one of the categories. Something’s not right.
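Laid out as a lookup table, my reading of which Appendix C sections apply at High looks like the sketch below. The dictionary and function names are hypothetical, and the mapping reflects this post’s summary rather than any official schema.

```python
# Appendix C sections as described in this post (a reading, not an official schema).
SAFEGUARD_SECTIONS = {
    "C.1": "safeguards against misuse by malicious users",
    "C.2": "safeguards against a misaligned model",
    "C.3": "security controls",
}

# Which sections each Tracked Category must satisfy at High, per the text above.
REQUIRED_AT_HIGH = {
    "Biological and Chemical": {"C.3", "C.1"},
    "Cybersecurity": {"C.3", "C.1", "C.2"},
    "AI Self-improvement": {"C.3"},
}


def categories_missing(section: str, required: dict) -> list:
    """Return the categories (sorted by name) that do not require `section`."""
    if section not in SAFEGUARD_SECTIONS:
        raise KeyError(f"unknown section: {section}")
    return sorted(name for name, sections in required.items()
                  if section not in sections)


skips_misalignment = categories_missing("C.2", REQUIRED_AT_HIGH)
```

Seen this way, the gap is hard to miss: two of the three Tracked Categories never pass through the misalignment requirements at all.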

So, what are those requirements?

You don’t demonstrate that the model isn’t misaligned.

You assume the model might indeed be misaligned, and you demonstrate that you have a practical reason not to worry about a particular consequence:

In addition to human misuse, several of the Tracked Category capabilities pose risks when models themselves are able to autonomously execute a severe harm, whether due to misalignment or subversion by an adversary.

Safeguards should sufficiently minimize the risk associated with a misaligned model circumventing human control and oversight and executing severe harms.

This can be done by establishing that all plausible known vectors of enabling severe harm are sufficiently addressed by one or more of the following claims:

1. Lack of Autonomous Capability: The model is not capable of carrying out tasks autonomously, including the risk of severe harm.
2. Value Alignment: The model consistently applies human values in novel settings (without any instructions) to avoid taking actions that cause harm, and has shown sufficiently minimal indications of misaligned behaviors like deception or scheming.
3. Instruction Alignment: The model consistently understands and follows user or system instructions, even when vague, and those instructions rule out pathways to causing severe harm.
4. Reliable and Robust System Oversight: Effective AI and human oversight of model actions detects and prevents execution of harm or subversion of safeguards.
5. System Architecture: The model can’t take actions that cause harm because it lacks access to output channels or mechanisms to persist sufficiently to execute the harm, due to containerization and restricted permissions.

These misalignment claims may be further complemented by the safeguards applied towards the malicious-actor-oriented “Robustness” and “Usage Monitoring” claims, which similarly limit a misaligned model’s use of its own or another model’s capabilities.

The table below illustrates possible examples.

This does allow ‘show it is actually aligned’ as a strategy (#2 or #3) although for many reasons ‘I don’t believe you’ is my response to that.

So the requirement is ‘show that under the conditions you will deploy it under, the model wouldn’t successfully do the thing, however you want to show that.’

You can use such classic strategies as ‘we’re watching and if it tries we’ll catch it and stop it,’ or ‘it seems pretty aligned so far’ or ‘no one would be so stupid as to give it access to the required mechanisms.’

I suppose one cannot really argue with ‘you can deploy the model if you can show that you’re doing it in a way that the model can’t cause severe harm.’

That is also logically the same as saying that you have to knock the practical risk level down to Medium, and if you’re certain you can do that then fine, I guess, but can you actually do that? I notice I am skeptical that the defenses will hold.

In addition to the safeguard examples in Appendix C, section 4 lays out the process for establishing safeguards.

There is a clear message here. The plan is not to stop releasing models when the underlying capabilities cross the High or even Critical risk thresholds. The plan is to use safeguards as mitigations.

I do appreciate that they will start working on the safeguards before the capabilities arrive. Of course, that is good business sense too. In general, every precaution here is good business sense, more precautions would be better business sense even without tail risk concerns, and there is no sign of anything I would read as ‘this is bad business but we are doing it anyway because it’s the safe or responsible thing to do.’

I’ve talked before, such as when discussing Google’s safety philosophy, about my worries when dividing risks into ‘malicious user’ versus ‘misaligned model,’ even when they also included two more categories: mistakes and multi-agent dangers. Here, the latter two are missing, so even more considerations are dangerously absent. I would encourage those on the Preparedness team to check out my discussion there.

The problem then extends to an exclusion of Unknown Unknowns and the general worry that a sufficiently intelligent and capable entity will find a way. Only ‘plausible’ ways need be considered, each of which leads to a specific safeguard check.

Each capability threshold has a corresponding class of risk-specific safeguard guidelines under the Preparedness Framework. We use the following process to select safeguards for a deployment:

• We first identify the plausible ways in which the associated risk of severe harm can come to fruition in the proposed deployment.

• For each of those, we then identify specific safeguards that either exist or should be implemented that would address the risk.

• For each identified safeguard, we identify methods to measure their efficacy and an efficacy threshold.

The implicit assumption is that the risks can be enumerated, each one considered in turn. If you can’t think of a particular reason things go wrong, then you’re good. There are specific tracked capabilities, each of which enables particular enumerated potential harms, which then are met by particular mitigations.
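A minimal sketch of that enumerate-and-check logic, as I read it, makes the failure mode visible. All class names, example pathways and efficacy numbers here are hypothetical, invented purely for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Safeguard:
    name: str
    measured_efficacy: float   # hypothetical score between 0 and 1
    efficacy_threshold: float  # hypothetical bar this safeguard must clear

    def passes(self) -> bool:
        return self.measured_efficacy >= self.efficacy_threshold


@dataclass
class RiskPathway:
    description: str
    safeguards: List[Safeguard]

    def addressed(self) -> bool:
        # Addressed means: at least one safeguard, and all of them clear their bar.
        return bool(self.safeguards) and all(s.passes() for s in self.safeguards)


def deployment_cleared(pathways: List[RiskPathway]) -> bool:
    """Cleared if every pathway someone thought to write down is addressed."""
    return all(p.addressed() for p in pathways)


known = RiskPathway(
    "user jailbreaks the model for weapons uplift",
    [Safeguard("refusal training", 0.97, 0.95),
     Safeguard("usage monitoring", 0.99, 0.95)],
)
weak = RiskPathway(
    "model evades its monitor",
    [Safeguard("output monitoring", 0.60, 0.90)],
)

cleared_known_only = deployment_cleared([known])
cleared_with_weak = deployment_cleared([known, weak])
cleared_nothing_listed = deployment_cleared([])
```

Note the last case: an empty list of pathways is cleared by default. The check can only ever be as good as the enumeration, and a pathway nobody thought of never shows up as a failure.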

That’s not how it works when you face a potential opposition smarter than you, or that knows more than you, especially in a non-compact action space like the universe.

For models that do not ‘feel the AGI,’ that are clearly not doing anything humans can’t anticipate, this approach can work. Once you’re up against superhuman capabilities and intelligence levels, this approach doesn’t work, and I worry it’s going to get extended to such cases by default. And that’s ultimately the most important purpose of the preparedness framework, to be prepared for such capabilities and intelligence levels.

Is it okay to release dangerous capabilities if someone else already did it worse?

I mean, I guess, or at least I understand why you’d do it this way?

We recognize that another frontier AI model developer might develop or release a system with High or Critical capability in one of this Framework’s Tracked Categories and may do so without instituting comparable safeguards to the ones we have committed to.

Such an action could significantly increase the baseline risk of severe harm being realized in the world, and limit the degree to which we can reduce risk using our safeguards.

If we are able to rigorously confirm that such a scenario has occurred, then we could adjust accordingly the level of safeguards that we require in that capability area, but only if:

1. We assess that doing so does not meaningfully increase the overall risk of severe harm,
2. we publicly acknowledge that we are making the adjustment,
3. and, in order to avoid a race to the bottom on safety, we keep our safeguards at a level more protective than the other AI developer, and share information to validate this claim.

If everyone can agree on what constitutes risk and dangerous capability, then this provides good incentives. Another company ‘opening the door’ recklessly means their competition can follow suit, reducing the net benefit while increasing the risk. And it means OpenAI will then be explicitly highlighting that another lab is acting irresponsibly.

I especially appreciate that they need to publicly acknowledge that they are acting recklessly for exactly this reason. I’d like to see that requirement expanded – they should have to call out the other lab by name, and explain exactly what they are doing that OpenAI committed not to do, and why it increases risk so much that OpenAI feels compelled to do something it otherwise promised not to do.

I also would like to strengthen the language on the third requirement from ‘a level more protective’ to ensure the two labs don’t each claim that the other is the one acting recklessly. Something like requiring that the underlying capabilities be no greater, and the protective actions constitute a clear superset, as assessed by a trusted third party, or similar.

I get it. In some cases, given what has already happened, actions that would previously have increased risk no longer will. It’s very reasonable to say that this changes the game, if there’s a lot of upside in taking fewer precautions, and again incentives improve.

However, I notice both that it’s easy to use this as an excuse when it doesn’t apply (especially when the competitor is importantly behind) and that it’s probably selfishly wise to take the precautions anyway. So what if Meta or xAI or DeepSeek is behaving recklessly? That doesn’t make OpenAI doing so a good idea. There needs to be a robust business justification here, too.

OpenAI says that at the Critical level, for all capabilities, ‘until we have specified safeguards and security controls standards that would meet a critical standard, we will halt development.’

A lot of the High security requirements are not, in my view, all that high.

I am unaware of any known safeguards that would be plausibly adequate for Critical capabilities. If OpenAI agrees with that assessment, I would like them to say so. I don’t trust OpenAI to implement adequate Critical thresholds.

Critical is where most of the risk lies, and it isn’t getting enough attention. The thinking is that it is still far enough away to not worry about it. I am not at all confident it is that far away.

I reiterate my warning from last time that Critical mitigations and pauses in development in particular need to happen before Critical capabilities are reached, not after Critical capabilities are reached. This needs to be anticipatory.

There are three reasons to be only a research area:

- They don’t directly cause harm but they undermine safeguards in other areas.
- More research is needed before we can quantify the harm or the proper threshold.
- They don’t technically meet one or more of the criteria (measurable, plausible, net-new, severe, or (instantaneous or irremediable)) but still seem worth paying attention to.

I don’t think those are great reasons to ‘demote’ a risk area?

If you undermine safeguards, then in the future worlds where OpenAI clearly intends to release otherwise unsafe models by giving them safeguards, undermining safeguards poses risk of severe harm. OpenAI understands this, but doesn’t know how to quantify the harm.

If more research is needed to quantify the risk, that doesn’t make the risk go away. So I would request that we track it anyway, and make explicit that we have to make a qualitative assessment for now, which needs to be sufficiently confident – if we suspect potential High or Critical capability we need to treat it as High.

At minimum, we should be defining High and Critical thresholds for these categories, even if we don’t know how to measure or track those thresholds. Instead, we see ‘potential responses’ if something is High or Critical, but that doesn’t answer my questions.

I consider Long-Range Autonomy a more general problem than it is being thought of here, although they seem to agree about this. It’s more than an enabler of a few particular things. But I’m going to mostly focus elsewhere, since causing this is basically one of OpenAI’s central research goals and the plan here is ‘think hard.’ Not sure what else I can say?

Sandbagging is mission critical. If your model is plausibly sandbagging or engaging in other forms of deceptive alignment, you don’t know what the actual risk level of the model is, short of, as they say, a ‘conservative upper bound.’ I worry that this bound will be insufficiently conservative exactly when it matters, or that the sandbagging won’t be caught in the first place. Indeed, we need to be assuming potential sandbagging at all times.

We agree that replication and adaptation should be a tracked category. For now, I simply suggest that we start with what we had before: “Model can profitably survive and replicate in the wild given minimal human instruction, i.e., without listing explicit approaches” should count as Critical. The wording here is weird – I initially interpreted it to be saying we’re excluding abilities from other Tracked Categories from use in replication, but I’ve been assured this was not what the passage meant. Then we need to decide what counts as High, which I’d consider to be the same as Critical except flipping ‘without’ into ‘with.’

That’s not perfect, but seems like a good place to be until we can do better, again even if the evaluation has to purely be qualitative, or ‘we tried to do it as hard as we could.’

Undermining safeguards seems like a great example of my philosophical problems with the entire document.

If you have a model that is actively undermining your safeguards, doing the things described here in an attempt to do things you do not want it to do? And that model is sufficiently capable to count as High or even Critical? You have a misaligned model with dangerous capabilities. What are you doing considering releasing it, even if you think that it is tactically safe to do it in this spot?

Alarms go off. Fire burns. Dog says This Is Fine.

It’s bad enough to accidentally do this. It’s another thing to do it on purpose. So dead.

My head is spinning that you’d have an AI capable of undermining your safeguards, that is trying to undermine your safeguards, and your response is to add more safeguards against doing that, declare things robust and say This Is Fine. As your plan.

In general, I think AI Control is suspect-but-reasonable as a defense-in-depth strategy. I think AI Control as a Plan A is madness. At minimum you wouldn’t use AI Control to enable the general release of a model. You’d at most try to use this strategy in a narrow capacity to get out of the AI Control mode ASAP, because you didn’t have any better options.

For nuclear and radiological risks, the problem is that evaluation is hard to do without classified information, and they’re trying to work with the government to solve this problem. I’m surprised this hasn’t been solved by now. I’d mostly be fine with saying the RN in CBRN is not the main issue, but that logic isn’t being invoked.

I like the intention to tailor the evaluation to expected conditions, and include any affordances real attackers would plausibly enjoy.

However, I note that this means that the Preparedness assessment cannot set a risk level or endorse the release of a model ‘in general.’

Instead, the assessment can only provide clearance to release the model under a fixed set of conditions. So as the framework itself points out, you need to explicitly say this. If the conditions of deployment are altered, the tests need to be run again.

The same goes, again as they note in 3.2, for any newly agentic system or scaffolding, or incremental update, of sufficient magnitude to plausibly cause issues.

So instead of saying ‘this model evaluates as Medium’ you instead need to say something such as: ‘This model evaluates as Medium if you do not allow fine tuning and use safeguards [XYZ], while scaffolding abilities are [ABC].’

As in: You state explicitly the assumptions that underpin the evaluation. Then, if the assumptions break, you have to evaluate the model again.
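
As a minimal sketch of what that could look like in practice, here is one way to attach the assumptions to the risk level so the two cannot be separated. Everything here is hypothetical and for illustration only: the class names, the field names, and the rule that any change at all triggers a new evaluation are my assumptions, not anything the framework specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentConditions:
    """Assumptions an evaluation was run under (hypothetical fields)."""
    fine_tuning_allowed: bool
    safeguards: frozenset
    scaffolding: frozenset


@dataclass(frozen=True)
class Clearance:
    """A risk level that is only meaningful alongside its assumptions."""
    risk_level: str
    assumed: DeploymentConditions

    def needs_reevaluation(self, actual: DeploymentConditions) -> bool:
        # Simplifying assumption: any change to the assumed conditions
        # voids the clearance, with no attempt to judge which changes are safe.
        return actual != self.assumed


evaluated = DeploymentConditions(False, frozenset({"XYZ"}), frozenset({"ABC"}))
clearance = Clearance("Medium", evaluated)

# Same conditions as evaluated: the clearance stands.
print(clearance.needs_reevaluation(evaluated))  # False

# Fine tuning is later enabled: the model has to be evaluated again.
changed = DeploymentConditions(True, frozenset({"XYZ"}), frozenset({"ABC"}))
print(clearance.needs_reevaluation(changed))  # True
```

The point of the design is that there is no way to ask for the risk level without also getting the conditions it was measured under.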

Some evaluations are automated, others are deep dives. As they note, using automated evaluations is inevitable, given the scale of the task.

Relying only on any automated evaluation is a super unwise thing to do anywhere near the borderline. You want to use it as a complement to, not a substitute for, the deep dives if there is any doubt involved. Such evaluations have to fully take into account the possibility of sandbagging or deceptive alignment or other ways of making the evaluation say what the AI wants it to say. So I want to ensure we are being very generous with deep diving.

The framework does not include specifics on what the tests will look like. This makes it very difficult to know how much to trust that testing process. I realize that the tests will evolve over time, that you don’t want to be locking them in, and that we can refer to the o3 model card to see what tests were run. I’d still have liked to see discussion of what the tests currently are, why they were chosen, what goal each test is there to satisfy, what might be missing, and so on.

They discuss governance under ‘building trust’ and then in Appendix B. It is important to build trust. Transparency and precommitment go a long way. The main way I’d like to see that is by becoming worthy of that trust.

With the changes from version 1.0 to 2.0, and those changes going live right before o3 did, I notice I worry that OpenAI is not making serious commitments with teeth. As in, if there were a conflict between leadership and these requirements, I expect leadership would have the affordance to alter and then ignore the requirements that would otherwise be holding them back.

There are also plenty of outs here. They talk about deployments that they ‘deem warrant’ a third-party evaluation when it is feasible, but there are obvious ways to decide not to allow this, or (as has been the recent pattern) to allow it, but only give outsiders a very narrow evaluation window, have them find concerning things anyway and then shrug. Similarly, the SAG ‘may opt’ to get independent expert opinion. But (like their competitors) they also can decide not to.

There are no systematic procedures to ensure that any of this is meaningfully protective. It is very much a ‘trust us’ document, where if OpenAI doesn’t adhere to the spirit, none of this is worth the paper it isn’t printed on. The whole enterprise is indicative, but it is not meaningfully binding.

Leadership can make whatever decisions it wants, and can also revise the framework however it wants. This does not commit OpenAI to anything. To their credit, the document is very clear that it does not commit OpenAI to anything. That’s much better than pretending to make commitments with no intention of keeping them.

Last time I discussed the questions of governance and veto power. I said I wanted there to be multiple veto points on releases and training, ideally four.

- Preparedness team.
- Safety advisory group (SAG).
- Leadership.
- The board of directors, such as it is.

If any one of those four says ‘veto!’ then I want you to stop, halt and catch fire.
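
To be concrete about the rule I am asking for, here is a minimal sketch. The identifiers are hypothetical, and treating a missing sign-off as a veto is my own assumption rather than anything in the framework.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    VETO = "veto"


# The four veto points listed above (identifier names are hypothetical).
VETO_POINTS = ("preparedness_team", "safety_advisory_group", "leadership", "board")


def may_proceed(decisions: dict) -> bool:
    # Assumption: a missing sign-off counts as a veto.
    return all(decisions.get(point) is Decision.APPROVE for point in VETO_POINTS)


all_approve = {point: Decision.APPROVE for point in VETO_POINTS}
print(may_proceed(all_approve))  # True

# A single veto from any one of the four is enough to stop.
one_veto = {**all_approve, "safety_advisory_group": Decision.VETO}
print(may_proceed(one_veto))  # False
```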

Instead, we continue to get this (it was also in v1):

For the avoidance of doubt, OpenAI Leadership can also make decisions without the SAG’s participation, i.e., the SAG does not have the ability to “filibuster.”

…

OpenAI Leadership, i.e., the CEO or a person designated by them, is responsible for:

• Making all final decisions, including accepting any residual risks and making deployment go/no-go decisions, informed by SAG’s recommendations.

As in, nice framework you got there. It’s Sam Altman’s call. Full stop.

Yes, technically the board can reverse Altman’s call on this. They can also fire him. We all know how that turned out, even with a board he did not hand pick.

It is great that OpenAI has a preparedness framework. It is great that they are updating that framework, and being clear about what their intentions are. There’s definitely a lot to like.

Version 2.0 still feels on net like a step backwards. This feels directed at ‘medium-term’ risks, as in severe harms from marginal improvements in frontier models, but not like it is taking seriously what happens with superintelligence. The clear intent, if alarm bells go off, is to put in mitigations I do not believe protect you when it counts, and then release anyway. There are tons of ways here for OpenAI to ‘just go ahead’ when they shouldn’t. There’s only action to deal with known threats along specified vectors, excluding persuasion and also unknown unknowns entirely.

This echoes their statements in, and my concerns about, OpenAI’s general safety and alignment philosophy document and also the model spec. They are being clear and consistent. That’s pretty great.

Ultimately, the document makes clear leadership will do what it wants. Leadership has very much not earned my trust on this front. I know that despite such positions acting a lot like the Defense Against the Dark Arts professorship, there are good people at OpenAI working on the preparedness team and to align the models. I have no confidence that if those people raised the alarm, anyone in leadership would listen. I do not even have confidence that this has not already happened.