Our government is determined to lose the AI race in the name of winning the AI race.

The least we can do, if prioritizing winning the race, is to try and actually win it.

It is one thing to prioritize ‘winning the AI race’ against China over ensuring that humanity survives, controls and can collectively steer our future. I disagree with that choice, but I understand it. This mistake is very human.

I also believe that more alignment and security efforts at anything like current margins not only do not slow our AI efforts, they would actively help us win the race against China, by enabling better diffusion and use of AI, and ensuring we can proceed with its development. So the current path is a mistake even if you do not worry about humanity dying or losing control over the future.

However, if you look at the idea of building smarter, faster, more capable, more competitive, freely copyable digital minds we don’t understand that can be given goals and think ‘oh that future will almost certainly stay under humanity’s control and not be a danger to us in any way’ (and when you put it like that, um, what are you thinking?) then I understand the second half of this mistake as well.

What is not an understandable mistake, what I struggle to find a charitable and patriotic explanation for, is to systematically cripple or give away many of America’s biggest and most important weapons in the AI race, in exchange for thirty pieces of silver and some temporary market share.

To continue alienating our most important and trustworthy allies with unnecessary rhetoric and putting up trading barriers with them. To attempt to put tariffs even on services like movies where we already dominate and otherwise give the most important markets, like the EU, every reason in their minds to put up barriers to our tech companies and question our reliability as an ally. And simultaneously in the name of building alliances put the most valuable resources with unreliable partners like Malaysia, Saudi Arabia, Qatar and the UAE.

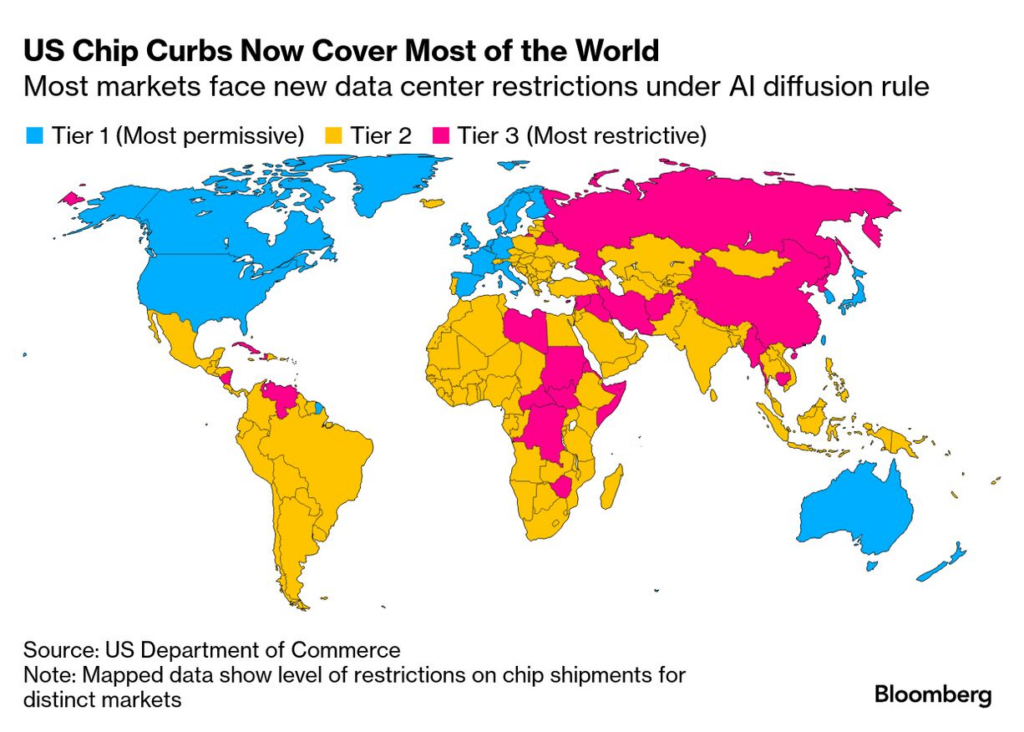

Indeed, we have now scrapped the old Biden ‘AI diffusion’ rule with no sign of its replacement, and where did David Sacks gloat about this? Saudi Arabia, of course. This is what ‘trusted partners’ means to them. Meanwhile, we are warning sternly against use of Huawei’s AI chips, ensuring China keeps all those chips itself. Our future depends on who has the compute, who ends up with the chips. We seem to instead think the future is determined by the revenue from chip manufacturing? Why would that be a priority? What do these people even think is going on?

To not only fail to robustly support and bring down regulatory and permitting barriers to the nuclear power we urgently need to support our data centers, but to actively wipe out the subsidies on which the nuclear industry depends, as the latest budget aims to do with remarkably little outcry via gutting the LPO and tax credits, while China of course ramps up its nuclear power plant construction efforts, no matter what the rhetoric on this might say. Then to use our inability to power the data centers as a reason to put our strategically vital data centers, again, in places like the UAE, because they can provide that power. What do you even call that?

To fail to let our AI companies recruit the best and brightest, who want to come here and help make America great, instead throwing up more barriers and creating a climate of fear that I’m hearing is turning many of the best people away.

And most of all, to say that the edge America must preserve, the ‘race’ that we must ‘win,’ is somehow the physical production of advanced AI chips. So, people say, in order to maintain our edge in chip production, we should give that edge entirely away right now, allowing those chips to be diverted to China, as would be inevitable in the places that are looking to buy where we seem most eager to enable sales. Nvidia even outright advocates that it should be allowed to sell to China openly, and no one in Washington seems to hold them accountable for this.

And we are doing all this while many perpetuate the myth that our AI efforts are not very solidly ahead of China in the places that matter most, or that China threatens to lock in the world’s customers, because of DeepSeek, which is impressive but still very clearly substantially behind our top labs, or because TikTok and Temu exist, while forgetting that the much bigger Amazon and Meta also exist.

Temu’s sales are less than a tenth of Amazon’s, and the rest of the world’s top four e-commerce websites are Shopify, Walmart.com and eBay. As worrisome as it is, TikTok is only the fourth largest social media app behind Facebook, YouTube and Instagram, and there aren’t signs of that changing. Imagine if that situation was reversed.

Earlier this week I did an extensive readthrough and analysis of the Senate AI Hearing.

Here, I will directly lay out my response to various claims by and cited by US AI Czar David Sacks about the AI Diffusion situation and the related topics discussed above.

1. Some of What Is Being Incorrectly Claimed.
2. Response to Eric Schmidt.
3. China and the AI Missile Gap.
4. To Preserve Your Tech Edge You Should Give Away Your Tech Edge.
5. To Preserve Your Compute Edge You Should Sell Off Your Compute.
6. Shouting From The Rooftops: The Central Points to Know.
7. The Longer Explanations.
8. The Least We Can Do.

There are multiple distinct forms of Obvious Nonsense to address, either as text or very directly implied, whoever you attribute the errors to:

David Sacks (US AI Czar): Writing in NYT, former Google CEO Eric Schmidt warns that “China Tech Is Starting to Pull Ahead”:

“China is at parity or pulling ahead of the United States in a variety of technologies, notably at the A.I. frontier. And it has developed a real edge in how it disseminates, commercializes and manufactures tech. History has shown us that those who adopt and diffuse a technology the fastest win.”

As he points out, diffusing a technology the fastest — and relatedly, I would add, building the largest partner ecosystem — are the keys to winning. Yet when Washington introduced an “AI Diffusion Rule”, it was almost 200 pages of regulation hindering adoption of American technology, even by close partners.

The Diffusion Rule is on its way out, but other regulations loom.

President Trump committed to rescind 10 regulations for every new regulation that is added.

If the U.S. doesn’t embrace this mentality with respect to AI, we will lose the AI race.

Sriram Krishnan: Something @DavidSacks and I and many others here have been emphasizing is the need to have broad partner ecosystems using American AI stack rather than onerous complicated regulations.

If the discussion was ‘a bunch of countries like Mexico, Poland and Portugal are in Tier 2 that should instead have been in Tier 1’ then I agree there are a number of countries that probably should have been Tier 1. And I agree that there might well be a simpler implementation waiting to be found.

And yet, why is it that in practice, these ‘broad partner ecosystems using American AI’ always seem to boil down to a handful of highly questionably allied and untrustworthy Gulf States with oil money trying to buy global influence, perhaps with a side of Malaysia and other places that are very obviously going to leak to China? David Sacks literally seems to think that if you do not literally put the data center in specifically China, then that keeps it in friendly hands and out of China’s grasp, and that we can count on our great friendships and permanent alliances with places like Saudi Arabia. Um, no. Why would you think that?

That Eric Schmidt editorial quoted above is a royal mess. For example, you have this complete non-sequitur.

Eric Schmidt and Selina Xu: History has shown us that those who adopt and diffuse a technology the fastest win.

So it’s no surprise that China has chosen to forcefully retaliate against America’s recent tariffs.

China forcefully retaliated against America’s tariffs for completely distinct reasons. The story Schmidt is trying to imply here doesn’t make any sense. His vibe reports are Just So Stories, not backed up at all by economic or other data.

‘By some benchmarks’ you can show pretty much anything, but I mean wow:

Eric Schmidt and Selina Xu: Yet, as with smartphones and electric vehicles, Silicon Valley failed to anticipate that China would find a way to swiftly develop a cheap yet state-of-the-art competitor. Today’s Chinese models are very close behind U.S. versions. In fact, DeepSeek’s March update to its V3 large language model is, by some benchmarks, the best nonreasoning model.

Look. No. Stop.

He then pivots to pointing out that there are other ‘tech’ areas where China is competitive, and goes into full scaremonger mode:

Apps for the Chinese online retailers Shein and Temu and the social media platforms RedNote and TikTok are already among the most downloaded globally. Combine this with the continuing popularity of China’s free open-source A.I. models, and it’s not hard to imagine teenagers worldwide hooked on Chinese apps and A.I. companions, with autonomous Chinese-made agents organizing our lives and businesses with services and products powered by Chinese models.

As I noted above, ‘American online retailers like Amazon and Shopify and the social media platforms Facebook and Instagram are already not only among but the most used globally.’

There is a stronger case one can make with physical manufacturing, when Eric then pivots to electric cars (and strangely focuses on Xiaomi over BYD) and industrial robotics.

Then, once again, he makes the insane ‘the person behind is giving away their inferior tech so we should give away our superior tech to them, that’ll show them’ argument:

We should learn from what China has done well. The United States needs to openly share more of its A.I. technologies and research, innovate even faster and double down on diffusing A.I. throughout the economy.

When you are ahead and you share your model, you give your rivals that model for free, killing your lead and your business for some sort of marketing win, and also you’re plausibly creating catastrophic risk. When you are behind, and you share it, sure, I mean why not.

In any case, he’s going to get his wish. OpenAI is going to release an open weight reasoning model, reducing America’s lead in order to send the clear message that yes we are ahead. Hope you all think it was worth it.

The good AI argument is that China is doing a better job in some ways of AI diffusion, of taking its AI capabilities and using them for mundane utility.

Similarly, I keep seeing forms of an argument that says:

1. America’s export controls have given us an important advantage in compute.
2. China’s companies have been slowed down by this, but have managed to stay only somewhat behind us in spite of it (largely because following is much easier).
3. Therefore, we should lift the controls and give up our compute edge.

I’m sorry, what?

At lunch during Selina’s trip to China, when U.S. export controls were brought up, someone joked, “America should sanction our men’s soccer team, too, so they will do better.” So that they will do better.

It’s a hard truth to swallow, but Chinese tech has become better despite constraints, as Chinese entrepreneurs have found creative ways to do more with less. So it should be no surprise that the online response in China to American tariffs has been nationalistic and surprisingly optimistic: The public is hunkering down for a battle and thinks time is on Beijing’s side.

I don’t know why Eric keeps talking about the general tariffs or trade war with China here, or rather I do and it’s very obviously a conflation designed as a rhetorical trick. That’s a completely distinct issue, and I here take no position on that fight other than to note that our actions were not confined to China, and we very obviously shouldn’t be going after our trading partners and allies in these ways – including by Sacks’s logic.

The core proposal here is that, again:

1. We gave China less to work with, put them at a disadvantage.
2. They are managing to compete with us despite (his word) the disadvantage.
3. Therefore we should take away their disadvantage.

It’s literal text. “America should sanction our men’s soccer team, too, so they will do better.” Should we also go break their legs? Would that help?

Then there’s a strange mix of ‘China is winning so we should become a centrally planned economy,’ mixed with ‘China is winning so we cannot afford to ever have any regulations on anything.’ Often both are coming from the same people. It’s weird.

So, shouting from the rooftops, once more with feeling for the people in the back:

1. America is ahead of China in AI.
2. Diffusion rules serve to protect America’s technological lead where it matters.
3. UAE, Qatar and Saudi Arabia are not reliable American allies, nor are they important markets for our technology. We should not be handing them large shares of the world’s most valuable resource, compute.
4. The exact diffusion rule is gone but something similar must take its place, to do otherwise would be how America ‘loses the AI race.’
5. Not having any meaningful regulations at all on AI, or ‘building machines that are smarter and more capable than humans,’ is not a good idea, nor would it mean America would ‘lose the AI race.’
6. AI is currently virtually unregulated as a distinct entity, so ‘repeal 10 regulations for every one you add’ is to not regulate at all building machines that are soon likely to be smarter and more capable than humans, or anything else either.
7. ‘Winning the AI race’ is about racing to superintelligence. It is not about who gets to build the GPU. The reason to ‘win’ the ‘race’ is not market share in selling big tech solutions. It is especially not about who gets to sell others the AI chips.
8. If we care about American dominance in global markets, including tech markets, stop talking about how what we need to do is not regulate AI, and start talking about the things that will actually help us, or at least stop doing the things that actively hurt us and could actually make us lose.

-

American AI chips dominate and will continue to dominate. Our access to compute dominates, and will dominate if we enact and enforce strong export controls. American models dominate, we are in 1st, 2nd and 3rd with (in some order) OpenAI, Google and Anthropic. We are at least many months ahead.

-

There was this one time DeepSeek put out an excellent reasoning model called r1 and an app for it.

-

Through a confluence of circumstances (including misinterpretation of its true training costs, its making a good clean app where it showed its chain of thought, Google being terrible at marketing, it beating several other releases by a few weeks, OpenAI’s best models being behind paywalls, China ‘missile gap’ background fears, comparing only in the realms where r1 was relevant, acting as if only open models count, etc), this caught fire for a bit.

-

But after a while it became clear that while r1 was a great achievement and indicated DeepSeek was a serious competitor, it was still, even at its highest point, 4-6 months behind. Fundamentally it was a ‘fast follow’ achievement, which is very different from taking a lead or keeping pace, and as training costs are scaled up it will be very difficult for DeepSeek to keep pace.

-

That doesn’t mean DeepSeek doesn’t matter. Without DeepSeek the company, China would be much further behind than this.

-

In response to this, a lot of jingoism and fearmongering akin to Kennedy’s ‘missile gap’ happened, which continues to this day.

-

There are of course other tech and non-tech areas where China is competitive, such as Temu and TikTok in tech. But that’s very different.

-

China does have advantages, especially its access to energy, and if they were allowed to access large amounts of compute that would be worrisome.

-

The diffusion rules serve to protect America’s technological lead where it matters.

-

America makes the best AI chips.

-

The reason this matters is that it lets us be the ones who have those chips.

-

America’s lead has many causes but one main cause is that we have far more and better compute, due to superior access to the best AI chips.

-

Biden’s Diffusion Rule placed some countries in Tier 2 that could reasonably have and probably should have (based on what I know) been placed in Tier 1, or at worst a kind of Tier 1.5 with only mildly harsher supervision.

-

If you want to move places like the remaining NATO members into Tier 1, or do something with a similar effect? That seems reasonable to me.

-

However this very clearly does not include the very countries that we keep talking about allowing to build massive data centers with American AI chips, like the UAE, Saudi Arabia and Qatar.

-

When there is talk of robust American allies and who will build and use our technology, somehow the talk is almost always about gulf states and other unreliable allies that are trying to turn their wealth into world influence.

-

I leave why this might be so as an exercise to the reader.

-

Even if such states do stay on our side, you had better believe they will use the leverage this brings to extract various other concessions from us.

-

There is also very real concern that placing these resources in such locations would cause them to be misused by bad actors, including for terrorism, including via CBRN risks. It is foolish not to realize this.

-

There is a conflation of selling other countries American AI chips and having them build AI data centers, with those countries using America’s AIs and other American tech company products. We should care mostly about them using our software products. The main reason to build AI data centers in other countries that are not our closest most trustworthy allies is if we are unable to build those data centers in America or in our closest most trustworthy allies, which mostly comes down to issues of permitting and power supply, which we could do a lot more to solve.

-

If you’re going to say ‘the two are closely related, we don’t want to piss off our allies’ right about now, I am going to be rather speechless given what else we have been up to lately, including in trade. You cannot be serious right now. Or, if you want to actually get serious about this across the board, good, let’s talk.

-

Is this a sign we don’t fully trust some of these countries? Yes. Yes it is.

-

The exact diffusion rule is going away but something similar must and will take its place, to do otherwise would be how America ‘loses the AI race.’

-

If China could effectively access the best AI chips, that would get rid of one of our biggest and most important advantages. Given their edge in energy, it could over time reverse that advantage.

-

The point of trying to prevent China from improving its chip production is to prevent China from having the resulting compute. If we sell the chips to prevent this, then they already have the compute now. You lose.

-

It is very clear that our exports to the ‘tier 2’ countries that look to buy what looks suspiciously like a lot of chips are often diverted to use by China, with the most obvious example being those sold to Malaysia.

-

We should also worry about what happens to data centers built in places like Saudi Arabia or the UAE.

-

I will believe the replacement rule will have the needed teeth when I see it.

-

That doesn’t mean we can’t find a better, simpler implementation that protects American chips from falling into Chinese hands. But we need some diffusion rule that we can enforce, and that in practice actually prevents the Chinese from buying or getting access to our AI chips in quantity.

-

Yes, if we sell our best AI chips to everyone freely, as Nvidia wants to do, or do it in ways that are effectively the same thing, then that helps protect Nvidia’s profits and market share, and by denying others markets we do gain some edge in the ability to maintain our dominance in making AI chips.

-

But so what? All we do is make a little money on the AI chips, and China gets to catch up in actually having and using the AI chips, which is what matters. We’d be sacrificing the future on the altar of Nvidia’s stock price. This is the capitalist selling the rope with which to hang him. ‘Winning the race’ to an ordinary tech market is not what matters. If the only way to protect our lead for a little longer there is to give away the benefits of the lead, of what use was the lead?

-

It also would make very little difference to either Nvidia or its Chinese competitors.

-

Nvidia can still sell as many chips as it can produce, well above cost. All the chips Nvidia is not allowed to sell to China, even the crippled H20s, will happily be purchased in Western markets at profitable prices, if Nvidia allows it, giving America and its allies more compute and China less compute.

-

I would be happy, if necessary, to have USG purchase any chips that Nvidia or AMD or anyone else is unable to sell due to diffusion rules. We would have many good uses for them, we can use them for public compute resources for universities and startups or whatever if the military doesn’t want them. The cost is peanuts relative to the stakes. (Disclosure, I am a shareholder of Nvidia, etc, but also I am writing this entire post).

-

Demand in China for AI chips greatly outstrips supply. They have no need for export markets for their chips, and indeed we should be happy if they choose to export some of them rather than keeping them for domestic use.

-

China already sees AI chip production as central to its future and national security. They are already pushing as hard as they dare.

-

Not having any meaningful regulations at all on AI, or ‘building machines that are smarter and more capable than humans,’ is not a good idea, nor would it mean America would ‘lose the AI race.’

-

This is not a strawman position. The House is trying to impose a 10-year moratorium on state and local enforcement of any laws whatsoever related to AI, even a potential law banning CSAM, without offering anything to replace that in any way, and Congress notoriously can’t pass laws these days. We also have the call to ‘repeal 10 regulations for every new one,’ which is again de facto a call for no regulations at all (see #6).

-

Highly capable AI represents an existential risk to humanity.

-

If we ‘win the race’ by simply going ahead as fast as possible, it’s not America that wins the future. The AIs win the future.

-

I can’t go over all the arguments about that here, but seriously it should be utterly obvious that building more intelligent, capable, competitive, faster, cheaper minds and optimization engines, that can be freely copied and given whatever goals and tasks, is not a safe thing for humanity to do.

-

I strongly believe it turns out it’s far more dangerous than I made it sound there, for many many reasons. I don’t have the space here to talk about why, but seriously, how do people claim this is a ‘safe’ action? What?

-

Even if highly capable AI remains under our control, it is going to transform the world and all aspects of our civilization and way of life. The idea that we would not want to steer that at all seems rather crazy.

-

Regulations do not need to ‘slow down’ AI in a meaningful way. Indeed, a total lack of meaningful regulations would slow down diffusion and practical use of AI, including for national security and core economic purposes, more than wise regulation, because no one is going to use AI they cannot trust.

-

That goes to both people knowing that they can trust AI, and also to requiring the AIs be made trustworthy. Security is capability. We also need to protect our technology and intellectual property from theft if we want to keep a lead.

-

A lack of such regulations would also mean falling back upon the unintended consequences of ordinary law as they then happen to apply to AI, which will often be extremely toxic for our ability to apply AI to the most valuable tasks.

-

If we try to not regulate AI at all, the public will turn against AI. Americans already dislike AI, in a way the Chinese do not. We must build trust.

-

China, like everyone else, already regulates AI. The idea that if we had a fraction of the regulations they do, or if we interfere with companies or the market a fraction of how much they constantly do so everywhere, that we suddenly ‘lose the race,’ is silly.

-

We have a substantial lead in AI, despite many efforts to lose I discuss later. We are not in danger of ‘losing’ every time we breathe on the situation.

-

Most of the regulations that are being pushed for are about transparency, often even transparency to the government, so we can know what the hell is going on, and so people can critique the safety and security plans of labs. They are about building state capacity to evaluate models, and using that, which actively benefits AI companies in various ways as discussed above.

-

There are also real and important mundane harms to deal with now.

-

Yes, if we were to impose highly onerous, no good, very bad regulations, in the style of the European Union, that would threaten our AI lead and be very bad. This is absolutely a real risk. But this type of accusation consistently gets levied against any bill attempting to do anything, anywhere, for any reason – or that someone is trying to ‘ban math’ or ‘kill AI’ or whatever. Usually this involves outright hallucinations about what is in the bill, or its consequences.

-

AI is currently virtually unregulated as a distinct entity, so ‘repeal 10 regulations for every one you add’ is to not regulate at all building machines that are soon likely to be smarter and more capable than humans, or anything else either.

-

There are many regulations that impact AI in various ways.

-

Many of those regulations are worth repealing or reforming. For example, permitting reform on power plants and transmission lines. And there are various consequences of copyright, or of common law, that should be reconsidered for the AI age.

-

What almost all these rules have in common is that they are not rules about AI. They are rules that are already in place in general, for other reasons. And again, I’d be happy to get rid of many of them, in general or for AI in particular.

-

But yes, you are going to want to regulate AI, and not merely in the ‘light touch’ ways that are code words for doing nothing, or actively working to protect AI from existing laws.

-

AI is soon going to be the central fact about the world. To suggest this level of non-intervention is not classical liberalism, it is anarchism.

-

Anarchism does not tend to go well for the uncompetitive and disadvantaged, which in the future age of ASI would be the humans, and it fails to solve various important market failures, collective action and public goods problems and so on.

-

The reason why a general hands-off approach has in the past tended to benefit humans, so long as you work to correct key market failures and solve particular collective action problems, is that humans are the most powerful optimization engines, and most intelligent and powerful minds, on the planet, and we have various helpful social dynamics and characteristics. All of that, and some other key underpinnings I could go into, often won’t apply to a future world with very powerful AI.

-

If we don’t do sensible regulations now, while we can all navigate this calmly, it will get done after something goes wrong, and not calmly or wisely.

-

‘Winning the AI race’ is not about who gets to build the GPU. ‘Winning’ the ‘race’ is not important because of who gets market share in selling big tech solutions. It is especially not about who gets to sell others the AI chips. Winning the race is about the race to superintelligence.

-

The major AI labs say we will likely reach AGI within Trump’s second term, with superintelligence (ASI) following soon thereafter. David Sacks himself endorses this view explicitly.

-

‘Winning the race’ to superintelligence is indeed very important. The way in which humanity reaches superintelligence (assuming we do reach it) will determine the future.

-

That future might be anything from wonderful to worthless. It might or might not involve humanity surviving, or being in control over the future. It might or might not reflect different values, or be something we would find valuable.

-

If we build a superintelligence before we know how to align it, meaning before we know how to get it to do what we want it to do, everyone dies, or at minimum we lose control over the future.

-

If we build a superintelligence and know how to align it, but we don’t choose a good thing to align it to, meaning we don’t wisely choose how it will act, then the same thing happens. We die, or we lose control over the future.

-

If we build a superintelligence and know how to align it, and align it in general to ‘whatever the local human tells it to do,’ even with restrictions on that, and give out copies, this results at best in gradual disempowerment of humanity and us losing control over the future and the future likely losing all value. This problem is hard.

-

This is very different from a question like ‘who gets better market share for their AI products,’ whether that is hardware or software, and questions about things like commercial adaptation and lock-in or tech stack usage or what not, as if AI was some ordinary technology.

-

AI actually has remarkably little lock-in. You can mostly swap one model out for another at will if someone comes out with a better one. There’s no need to run a model that matches the particular AI chips you own, either. AI itself will be able to simplify the ‘migration’ process or any lock-in issues.

-

It’s not that whose AI models people use doesn’t matter at all. But in a world in which we will soon reach superintelligence, it’s mostly about market share in the meantime to fund AI development.

-

If we don’t soon reach superintelligence, then we’re dealing with a far more ‘ordinary’ technology, and yes we want market share, but it’s no longer an existentially important race, it won’t have dramatic lock-in effects, and getting to the better AI products first will still depend on us retaining our compute advantages as long as possible.

-

If we care about American dominance in global markets, including tech markets, and especially if we care about winning the race to AGI and superintelligence and otherwise protecting American national security, stop talking about how what we need to do is not regulate AI, and start talking about the things that will actually help us, or at least stop doing the things that actively hurt us and could actually make us lose.

-

Straight talk. While it’s not my primary focus because development of AGI and ASI is more important, I strongly agree that we want American tech, especially American software, being used as widely as possible, especially by allies, across as much of the tech stack as possible. Even more than that, I strongly want America to have the lead in frontier highly capable AI, including AGI and then ASI, in the ways that determine the future.

-

If we want to do that, what is most important to accomplishing this?

-

We need allies to work with us and use our tech. Everyone says this. That means we need to have allies! That means working with them, building trust. Make them want to build on our tech stacks, and buy our products.

-

That also means not imposing tariffs on them, or making them lose trust in us and our technology. Various recent actions have made our allies lose trust, in ways that are causing them to be less trusting of American tech stacks. And when we go to trade wars with them, you know what our main exports are that they will go after? Things like AI.

-

It also means focusing most on our most important and trustworthy allies that have the most important markets. That means places like our NATO allies, Japan, South Korea and Australia, not Saudi Arabia, Qatar and the UAE. Those latter markets don’t matter zero, but they are relatively tiny.

-

Yes, that includes avoiding hypothetical sufficiently onerous regulation on AI directly, and I will absolutely be keeping an eye out for this. But most of the regulatory and legal barriers that matter lie elsewhere.

-

The key barriers are in the world of atoms, not the world of bits.

-

Energy generation and transmission, permitting reform.

-

High-skilled immigration, letting talent come to America.

-

Education reform so AI helps teach rather than helping students cheat.

-

Want reshoring? Repeal the Jones Act so we can transport the resulting goods. Automate the ports. Allow self-driving cars and trucks broadly. And so on.

-

Regulations that prevent the application of AI to high value sectors, or otherwise hold back America. Broad versions of YIMBY for housing. Occupational licensing. FDA requirements. The list goes on. Unleash the abundance agenda, it mostly lines up with what AI needs. It’s time to build.

-

Dealing with various implications of other laws that often were crazy already and definitely don’t make sense in an AI world.

-

The list goes on.

Or, as Derek Thompson put it:

Derek Thompson: Trump’s new AI directive (quoted below from David Sacks) argues the US should take care to:

– respect our trading partners/allies rather than punish them with dumb rules that restrict trade

– respect “due process”

It’d be interesting to apply these values outside of AI!

Jordan Schneider: It’s an NVDA press release. Just absurd.

David Sacks continues to beat the drum that the diffusion rule ‘undermines the goal of winning the AI race,’ as if the AI race is about Nvidia’s market share. It isn’t.

If we want to avoid allocations of resources by governmental decision, overreach of our executive branch authorities to restrict trade, alienating US allies and lack of due process, Sacks’s key points here? Yeah, those generally sound like good ideas.

To that end, yes, I do believe we can improve on Biden’s proposed diffusion rules, especially when it comes to US allies that we can trust. I like the idea that we should impose fewer trade restrictions on these friendly countries, so long as we can ensure that the chips don’t effectively fall into the wrong hands. We can certainly talk price.

Alas, in practice, it seems like the actual plans are to sell massive amounts of AI chips to places like UAE, Saudi Arabia and Malaysia. Those aren’t trustworthy American allies. Those are places with close China ties. We all know what those sales really mean, and where they could easily be going. And those are chips we could have kept in more trustworthy and friendly hands, that are eager to buy them, especially if they have help facilitating putting those chips to good use.

The policy conversations I would like to be having would focus not only on how to best supercharge American AI and the American economy, but also on how to retain humanity’s ability to steer the future and ensure AI doesn’t take control, kill everyone or otherwise wipe out all value. And ideally, to invest enough in AI alignment, security, transparency and reliability that there would start to be a meaningful tradeoff where going safer would also mean going slower.

Alas. We are massively underinvesting in reliability and alignment and security purely from a practical utility perspective, and we are not even having that discussion.

Instead we are having a discussion about how, even if your only goal is ‘America must beat China and let the rest handle itself,’ to stop shooting ourselves in the foot on that basis alone.

The very least we can do is not shoot ourselves in the foot, and not sell out our future for a little bit of corporate market share or some amount of oil money.