Sam Altman talked recently to Theo Von.

Theo is genuinely engaging and curious throughout. This made me want to consider listening to his podcast more. I’d love to hang. He seems like a great dude.

The problem is that his curiosity has been redirected away from the places it would matter most – the Altman strategy of acting as if the biggest concerns, risks and problems flat out don’t exist successfully tricks Theo into not noticing them at all, and there are plenty of other things for him to focus on, so he does exactly that.

Meanwhile, Altman gets away with more of this ‘gentle singularity’ lie without using that term, letting it graduate to a background assumption. Dwarkesh would never.

Quotes are all from Altman.

Sam Altman: But also [kids born a few years ago] will never know a world where products and services aren’t way smarter than them and super capable, they can just do whatever you need.

Thank you, sir. Now actually take that to heart and consider the implications. It goes way beyond ‘maybe college isn’t a great plan.’

Sam Altman: The kids will be fine. I’m worried about the parents.

Why do you think the kids will be fine? Because they’re used to it? So it’s fine?

This is just a new tool that exists in the tool chain.

A new tool that is smarter than you are and super capable? Your words, sir.

No one knows what happens next.

True that. Can you please take your own statements seriously?

How long until you can make an AI CEO for OpenAI? Probably not that long.

No, I think it’s awesome, I’m for sure going to figure out something else to do.

Again, please, I am begging you, take your own statements seriously.

There will be some jobs that totally go away. But mostly I think we will rely on the fact that people’s desire for more stuff for better experiences for you know a higher social status or whatever seems basically limitless, human creativity seems basically limitless and human desire to like be useful to each other and to connect.

And AI will be better at doing all of that. Yet Altman goes through all the past falsified predictions as if they apply here. He keeps going on and on as if the world he’s talking about is a bunch of humans with access to cool tools, except by his own construction those tools can function as OpenAI’s CEO and are smarter than people. It is all so absurd.

What people really want is the agency to co-create the future together.

Highly plausible this is important to people. I don’t see any plan for giving it to them? The solution here is redistribution of a large percentage of world compute, but even if you pull that off under ideal circumstances no, that does not do it.

I haven’t heard any [software engineer] say their job lacks meaning [due to AI]. And I’m hopeful at least for a long time, you know, 100 years, who knows? But I’m hopeful that’s what it’ll feel like with AI is even if we’re asking it to solve huge problems for us. Even if we tell it to go develop a cure for cancer there will still be things to do in that process that feel valuable to a human.

Well, sure, not at this capability level. Where is this hope coming from that it would continue for 100 years? Why does one predict the other? What will be the steps that humans will meaningfully do?

We are going to find a way in our own telling of the story to feel like the main characters.

I think the actual plan is for the AI to lie to us? And for us to lie to ourselves? We’ll set it up so we have this idea that we matter, that we are important, and that will be fine? I disagree that this would be fine.

Altman discusses the parallel to discovering that Earth is not the center of the solar system, and the solar system is not the center of the galaxy, and so on, little blue dot. Well sure, but that wasn’t all that load-bearing. We’re still the center of our own universes, and if there’s no other life out there we’re the only place that matters. This is very different.

Theo asks what Altman’s fears are about AI. Altman responds with a case where he couldn’t do something and GPT-5 could do it. But then he went on with his day. His second answer is impact on user mental health with heavy usage, which is a real concern and I’m glad he’s scared about that.

And then… that’s it. That’s what scares you, Altman? There’s nothing else you want to share with the rest of us? Nothing about loss of control issues, nothing about existential risks, and so on? I sure as hell hope that he is lying. I do think he is?

When asked about a legal framework for AI, Altman asks for AI privilege, sees this as urgent, and there is absolutely nothing else he thinks is worth mentioning that requires the law to adjust.

The last few months have felt very fast.

Theo then introduces Yoshua Bengio into the conversation, bringing up deception and sycophancy and neurolese.

We think it’s going to be great. There’s clearly real risks. It kind of feels like you should be able to say something more than that, but in truth, I think all we know right now is that we have discovered, invented, whatever you want to call it, something extraordinary that is going to reshape the course of human history. Dear God, man. But if you don’t know, we don’t know.

Well, of course. I mean, I think no one can predict the future. Like human society is very complex. This is an amazing new technology. Maybe a less dramatic example than the atomic bomb is when they discovered the transistor a few years later.

Yes, we can all agree we don’t know. We get a lot of good attitude, the missing mood is present, but it doesn’t cash out in the missing concerns. ‘There’s clearly real risks,’ but in context that seems to apply to things like jobs and meaning and distribution.

There’s no time in human history at the beginning of the century when the people ever knew what the end of the century was going to be like. Yeah. So maybe it’s I do think it goes faster and faster each century.

The first half of this seems false for quite a lot of times and places? Sure, you don’t know how the fortunes of war might go, but for most of human history ‘100 years from now looks a lot like today’ was a very safe bet. ‘Nothing ever happens’ (other than cycling wars and famines and plagues and so on) did very well. But yes, in 1800 or 1900 or 2000 you would have remarkably little idea.

It certainly feels like [there is a race between companies.]

Theo equates this race to Formula 1 and asks what the race is for. AGI? ASI? Altman says benchmarks are saturated and it’s all about what you get out of the models, but we are headed for some model.

Maybe it’s a system that is capable of doing its own AI research. Maybe it’s a system that is smarter than all of humans put together… some finish line we are going to cross… maybe you call that superintelligence. I don’t have a finish line in mind.

Yeah, those do seem like important things that represent effective ‘finish lines.’

I assume that what will happen, like with every other kind of technology, is we’ll realize there’s this one thing that the tool’s way better than us at. Now, we get to go solve some other problems.

NO NO NO NO NO! That is not what happens! The whole idea is this thing becomes better at solving all the problems, or at least a rapidly growing portion of all problems. He mentions this possibility shortly thereafter but says he doesn’t think ‘the simplistic thing works.’ The ‘simplistic thing’ will be us, the humans.

You say whatever you want. It happens, and you figure out amazing new things to build for the next generation and the next.

Please take this seriously, consider the implications of what you are saying and solve for the equilibrium or what happens right away, come on man. The world doesn’t sit around acting normal while you get to implement some cool idea for an app.

Theo asks, would regular humans vote to keep AI or stop AI? Altman says users would say go ahead and non-users would say stop. Theo predicts most people would say stop it. My understanding is Theo is right for the West, but not for the East.

Altman asks Theo what he is afraid of with AI, Theo seems worried about They Took Our Jobs and loss of economic survival and also meaning, that we will be left to play zero-sum games of extraction. With Theo staying in Altman’s frame, Altman can pivot back to humans liking to be creative and help each other and so on and pour on the hopium that we’ll all get to be creatives.

Altman says, you get less enjoyment from a ghost robotic kitchen setup, something is missing, you’d rather get the food from the dude who has been making it. To which I’d reply that most of this is that the authentic dude right now makes a better product, but that ten years from now the robot will make a better product than the authentic dude. And yeah, there will still be some value you get from patronizing the dude, but mostly what you want is the food and thus will the market speak, and then we’ve got Waymos with GLP-1 dart guns and burrito cannons for unknown reasons when what you actually get is a highly cheap and efficient delicious food supply chain that I plan on enjoying very much thank you.

We realized actually this is not helping me be my best. You know, like doing the equivalent of getting the like burrito cannon into my mouth on my phone at night, like that’s not making me long-term happy, right? And that’s not helping me like really accomplish my true goals in life. And I think if AI does that, people will reject it.

I mean, I think a thing that efficiently gives you burritos does help you with your goals, and people will love it. If it’s violently shooting burritos into your face unprompted at random times then no, but yeah, it’s not going to work like that.

However, if ChatGPT really helps you to figure out what your true goals in life are and then accomplish those, you know, it says, “Hey, you’ve said you want to be a better father or a better, you know, you want to be in better shape or you, you know, want to like grow your business.

I refer Altman to the parable of the whispering earring, but also this idea that the AI will remain a tool that helps individual humans accomplish their normal goals in normal ways, only smarter, is a fairy tale. Altman is providing hopium via the implicit overall static structure of the world, then assuming your personal AI is aligned to your goals and well-being, and then making additional generous assumptions, and then saying that the result might turn out well.

On the moratorium on all AI regulations that was stripped from the BBB:

There has to be some sort of regulation at some point. I think it’d be a mistake to let each state do this kind of crazy patchwork of stuff. I think like one countrywide approach would be much easier for us to be able to innovate and still have some guardrails, but there have to be guardrails.

The proposal was, for all practical purposes, to have no guardrails. Lawmakers will say ‘it would be better to have one federal regulation than fifty state regulations’ and then ban the fifty state regulations but have zero federal regulation.

The concerns [politicians come to us with] are like, what is this going to do to our kids? Are they going to stop learning? Is this going to spread fake information? Is this going to influence elections? But we’ve never had ‘you can’t say bad things about the president.’

That’s good to hear versus the alternative, better those real concerns than an attempt to put a finger on the scale, although of course these are not the important concerns.

We could [make it favor one candidate over another]. We totally could. I mean, we don’t, but we totally could. Yeah… a lot of people do test it and we need to be held to a very high standard here… we can tell.

As Altman points out, it would be easy to tell if they made the model biased. And I think doing it ‘cleanly’ is not so simple, as Musk has found out. Try to put your finger on the scale and you get a lot of side effects and it is all likely deeply embarrassing.

Maybe we build a big Dyson sphere on the solar system.

I’m noting that because I’m tired of people treating ‘maybe we build a Dyson sphere’ as a statement worthy of mockery and dismissal of a person’s perspective. Please note that Altman thinks this is very possibly the future.

You have to be both [excited and scared]. I don’t think anyone could honestly look at the trajectory humanity is on and not feel both excited and scared.

Being chased by a goose, asking: scared of what? But yes.

I think people get blinded by ambition. I think people get blinded by competition. I think people get caught up like very well-meaning people can get caught up in very negative incentives. Negative for society as a whole. By the way, I include us in this.

…

I think people come in with good intentions. They clearly sometimes do bad stuff.

…

I think Palantir and Peter Thiel do a lot of great stuff… We’re very close friends…. His brain just works differently… I’m grateful he exists because he thinks the things no one else does.

…

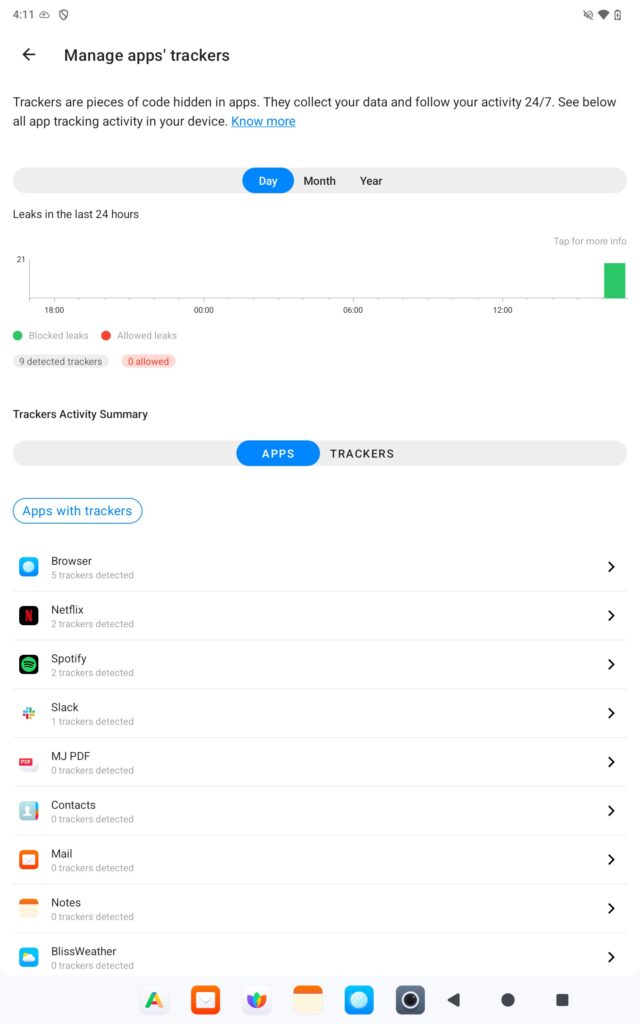

I think we really need to prioritize the right to privacy.

I’m skipping over a lot of interactions that cover other topics.

Altman is a great guest, engaging, fun to talk to, shares a lot of interesting thoughts and real insights, except it is all in the service of painting a picture that excludes the biggest concerns. I don’t think the deflections I care about most (as in, flat out ignoring them hoping they will go away) are the top item on his agenda in such an interview, or in general, but such deflections are central to the overall strategy.

The problem is that those concerns are part of reality.

As in, something that, when you stop looking at it, doesn’t go away.

If you are interviewing Altman in the future, you want to come in with Theo’s curiosity and friendly attitude. You want to start by letting Altman describe all the things AI will be able to do. That part is great.

Except also do your homework, so you are ready when Altman gives answers that don’t make sense and that don’t take into account what Altman himself says AI will be able to do. Notice the negative space, the things that are not being mentioned, and point it out. Not as a gotcha or an accusation, but to not let him get away with ignoring it.

At minimum, you have to point out that the discussion is making one hell of a set of assumptions, ask Altman if he agrees that those assumptions are being made, and check how confident he is that those assumptions are true, and why, even if that isn’t going to be your focus. Get the crucial part on the record. If you ask in a friendly way I don’t think there is a reasonable way to dodge answering.