Siri “unintentionally” recorded private convos; Apple agrees to pay $95M

Apple has agreed to pay $95 million to settle a lawsuit alleging that its voice assistant Siri routinely recorded private conversations that were then shared with third parties and used for targeted ads.

In the proposed class-action settlement—which comes after five years of litigation—Apple admitted to no wrongdoing. Instead, the settlement refers to “unintentional” Siri activations that occurred after the “Hey, Siri” feature was introduced in 2014, where recordings were apparently prompted without users ever saying the trigger words, “Hey, Siri.”

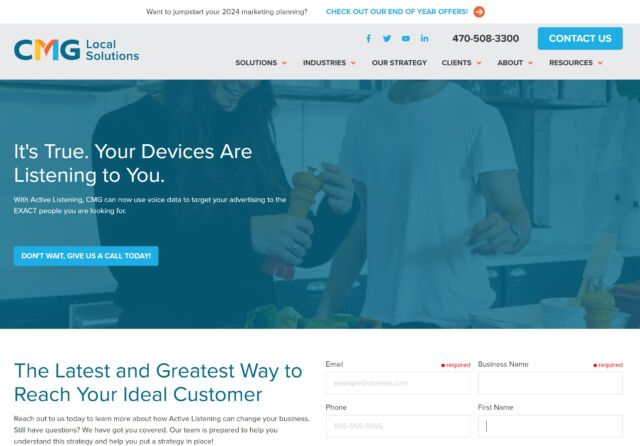

Sometimes Siri would be inadvertently activated, a whistleblower told The Guardian, when an Apple Watch was raised and speech was detected. The only clue that users seemingly had of Siri’s alleged spying was eerily accurate targeted ads that appeared after they had just been talking about specific items like Air Jordans or brands like Olive Garden, Reuters noted (claims which remain disputed).

It’s currently unknown how many customers were affected, but if the settlement is approved, the tech giant has offered up to $20 per Siri-enabled device for any customers who made purchases between September 17, 2014, and December 31, 2024. That includes iPhones, iPads, Apple Watches, MacBooks, HomePods, iPod touches, and Apple TVs, the settlement agreement noted. Each customer can submit claims for up to five devices.

A hearing at which the settlement could be approved is currently scheduled for February 14. If the settlement is certified, Apple will send notices to all affected customers. Through the settlement, customers can not only get monetary relief but also ensure that their private phone calls are permanently deleted.

While the settlement appears to be a victory for Apple users after months of mediation, it potentially lets Apple off the hook pretty cheaply. If the court had certified the class action and Apple users had won, Apple could’ve been fined more than $1.5 billion under the Wiretap Act alone, court filings showed.

But lawyers representing Apple users decided to settle, partly because data privacy law is still a “developing area of law imposing inherent risks that a new decision could shift the legal landscape as to the certifiability of a class, liability, and damages,” the motion to approve the settlement agreement said. It was also possible that the class size could be significantly narrowed through ongoing litigation, if the court determined that Apple users had to prove their calls had been recorded through an incidental Siri activation—potentially reducing recoverable damages for everyone.
