Netflix’s first show with generative AI is a sign of what’s to come in TV, film

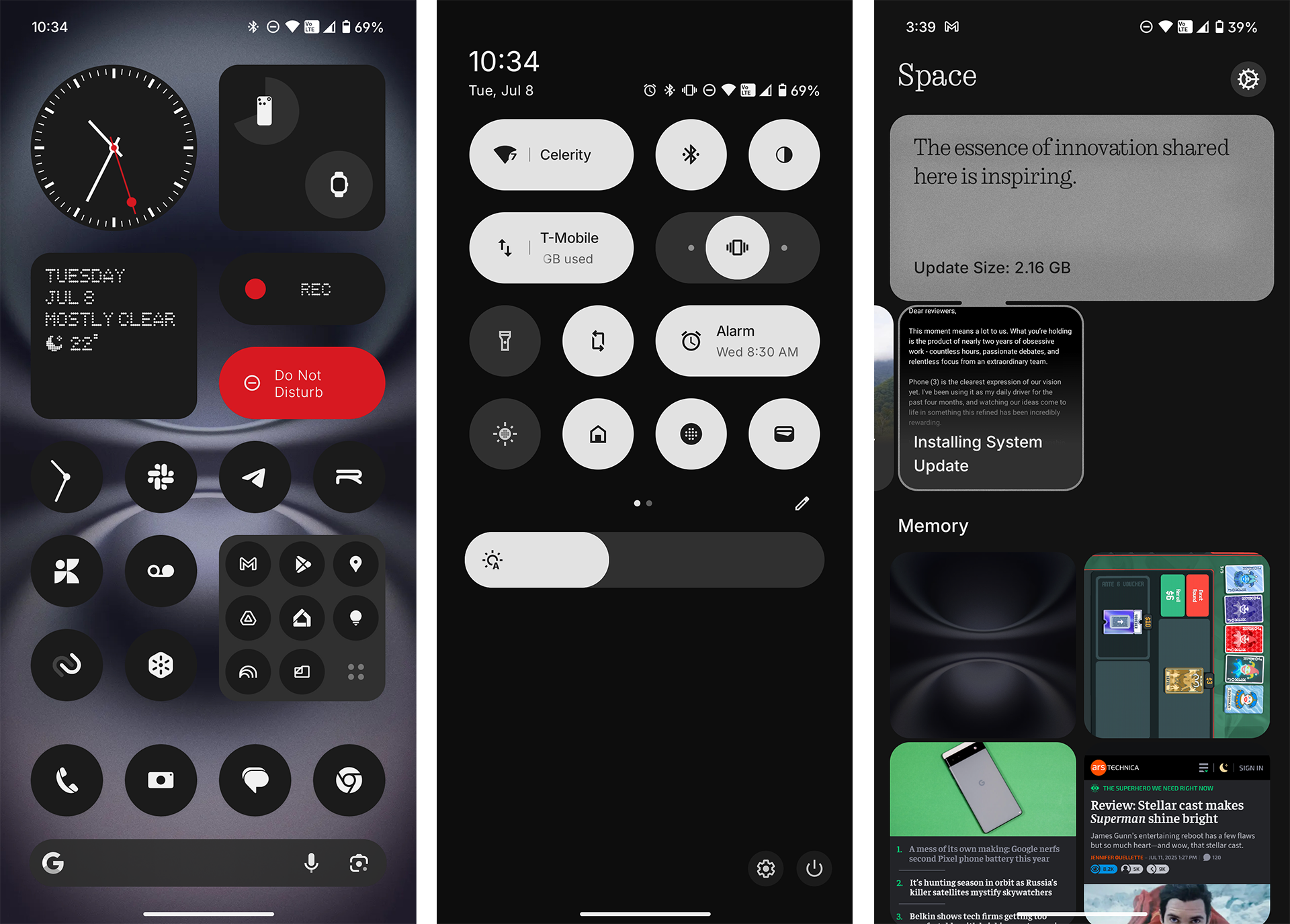

Netflix used generative AI in an original, scripted series that debuted this year, it revealed this week. Producers used the technology to create a scene in which a building collapses, hinting at the growing use of generative AI in entertainment.

During a call with investors yesterday, Netflix co-CEO Ted Sarandos revealed that Netflix’s Argentine show The Eternaut, which premiered in April, is “the very first GenAI final footage to appear on screen in a Netflix, Inc. original series or film.” Sarandos elaborated, per a transcript of the call:

> The creators wanted to show a building collapsing in Buenos Aires. So our iLine team, [which is the production innovation group inside the visual effects house at Netflix effects studio Scanline], partnered with their creative team using AI-powered tools. … And in fact, that VFX sequence was completed 10 times faster than it could have been completed with visual, traditional VFX tools and workflows. And, also, the cost of it would just not have been feasible for a show in that budget.

Sarandos claimed that viewers have been “thrilled with the results,” although that likely has much to do with how the rest of the series, which is based on a comic, plays out, and not just with one AI-crafted scene.

More generative AI on Netflix

Still, Netflix seems open to using generative AI more widely in shows and movies, with Sarandos saying the tech “represents an incredible opportunity to help creators make films and series better, not just cheaper.”

“Our creators are already seeing the benefits in production through pre-visualization and shot planning work and, certainly, visual effects,” he said. “It used to be that only big-budget projects would have access to advanced visual effects like de-aging.”
