Google Pixel 10 Pro Fold review: The ultimate Google phone

When the first foldable phones came along, they seemed like a cool evolution of the traditional smartphone form factor and, if they got smaller and cheaper, like something people might actually want. After more than five years of foldable phones, we can probably give up on the latter. Google’s new Pixel 10 Pro Fold retains the $1,800 price tag of last year’s model, and while it’s improved in several key ways, spending almost two grand on any phone remains hard to justify.

For those whose phones are a primary computing device or who simply love gadgets, the Pixel 10 Pro Fold is still appealing. It offers the same refined Android experience as the rest of the Pixel 10 lineup, with much more screen real estate on which to enjoy it. Google also improved the hinge for better durability, shaved off some bezel, and boosted both charging speed and battery capacity. However, the form factor hasn’t taken the same quantum leap as Samsung’s latest foldable.

An iterative (but good) design

The Pixel 10 Pro Fold doesn’t reinvent the wheel—it looks and feels almost exactly like last year’s foldable, with a few minor tweaks centered around a new “gearless” hinge. Dropping the internal gears allegedly helps make the mechanism twice as durable. Google claims the Pixel 10 Pro Fold’s hinge will last for more than 10 years of folding and unfolding.

Specs at a glance: Google Pixel 10 series

| | Pixel 10 ($799) | Pixel 10 Pro ($999) | Pixel 10 Pro XL ($1,199) | Pixel 10 Pro Fold ($1,799) |
|---|---|---|---|---|
| SoC | Google Tensor G5 | Google Tensor G5 | Google Tensor G5 | Google Tensor G5 |
| Memory | 12GB | 16GB | 16GB | 16GB |
| Storage | 128GB / 256GB | 128GB / 256GB / 512GB | 128GB / 256GB / 512GB / 1TB | 256GB / 512GB / 1TB |
| Display | 6.3-inch 1080×2424 OLED, 60-120 Hz, 3,000 nits | 6.3-inch 1280×2856 LTPO OLED, 1-120 Hz, 3,300 nits | 6.8-inch 1344×2992 LTPO OLED, 1-120 Hz, 3,300 nits | External: 6.4-inch 1080×2364 OLED, 60-120 Hz, 3,000 nits; Internal: 8-inch 2076×2152 LTPO OLED, 1-120 Hz, 3,000 nits |
| Cameras | 48 MP wide with Macro Focus, f/1.7, 1/2-inch sensor; 13 MP ultrawide, f/2.2, 1/3.1-inch sensor; 10.8 MP 5x telephoto, f/3.1, 1/3.2-inch sensor; 10.5 MP selfie, f/2.2 | 50 MP wide with Macro Focus, f/1.68, 1/1.3-inch sensor; 48 MP ultrawide, f/1.7, 1/2.55-inch sensor; 48 MP 5x telephoto, f/2.8, 1/2.55-inch sensor; 42 MP selfie, f/2.2 | 50 MP wide with Macro Focus, f/1.68, 1/1.3-inch sensor; 48 MP ultrawide, f/1.7, 1/2.55-inch sensor; 48 MP 5x telephoto, f/2.8, 1/2.55-inch sensor; 42 MP selfie, f/2.2 | 48 MP wide, f/1.7, 1/2-inch sensor; 10.5 MP ultrawide with Macro Focus, f/2.2, 1/3.4-inch sensor; 10.8 MP 5x telephoto, f/3.1, 1/3.2-inch sensor; 10.5 MP selfie, f/2.2 (outer and inner) |
| Software | Android 16 | Android 16 | Android 16 | Android 16 |
| Battery | 4,970 mAh, up to 30 W wired charging, 15 W wireless charging (Pixelsnap) | 4,870 mAh, up to 30 W wired charging, 15 W wireless charging (Pixelsnap) | 5,200 mAh, up to 45 W wired charging, 25 W wireless charging (Pixelsnap) | 5,015 mAh, up to 30 W wired charging, 15 W wireless charging (Pixelsnap) |
| Connectivity | Wi-Fi 6e, NFC, Bluetooth 6.0, sub-6 GHz and mmWave 5G, USB-C 3.2 | Wi-Fi 7, NFC, Bluetooth 6.0, sub-6 GHz and mmWave 5G, UWB, USB-C 3.2 | Wi-Fi 7, NFC, Bluetooth 6.0, sub-6 GHz and mmWave 5G, UWB, USB-C 3.2 | Wi-Fi 7, NFC, Bluetooth 6.0, sub-6 GHz and mmWave 5G, UWB, USB-C 3.2 |
| Measurements | 152.8 height×72.0 width×8.6 depth (mm), 204 g | 152.8 height×72.0 width×8.6 depth (mm), 207 g | 162.8 height×76.6 width×8.5 depth (mm), 232 g | Folded: 154.9 height×76.2 width×10.1 depth (mm); Unfolded: 154.9 height×149.8 width×5.1 depth (mm); 258 g |
| Colors | Indigo, Frost, Lemongrass, Obsidian | Moonstone, Jade, Porcelain, Obsidian | Moonstone, Jade, Porcelain, Obsidian | Moonstone, Jade |

While the new phone is technically a fraction of a millimeter thicker, it’s narrowed by a similar amount. You likely won’t notice this, nor will the 1 g in additional mass register, but it does mean cases for the 2024 foldable are just a fraction of a millimeter from fitting on the Pixel 10 Pro Fold. You may, however, spot the slimmer bezels and hinge, and the phone does fit better in your hand.

The Pixel is on the thick side for 2025, but this was record-setting thinness last year.

Thanks to the gearless hinge, the Pixel 10 Pro Fold is the first foldable with full IP68 certification for water and dust resistance. The hinge feels extremely smooth and sturdy, but it’s a bit stiffer than we’ve seen on most foldables. This might change over time, but it’s a little harder to open and close out of the box. Samsung’s Z Fold 7 is thinner and easier to fold, but the hinge doesn’t open to a full 180 degrees like the Pixel does.

The new foldable also retains the camera module design of last year’s phone—it’s off-center on the back panel, a break from Google’s camera bar on other Pixels. The Pixel 10 Pro Fold, therefore, doesn’t lie flat on tables and will rock back and forth like most other phones. However, it does have the Qi2 magnets like in the cheaper phones. There are various Maglock kickstands and mounting rings that will attach to the back of the phone if you want to prop it up on a surface.

The Pixel 10 Pro Fold (left) and the Galaxy Z Fold 7 (right) both have 8-inch displays, but the Pixel is curvier. Credit: Ryan Whitwam

The power and volume buttons are on the right edge in the same location as last year. The buttons are stable and tactile when pressed, and there’s a fingerprint sensor in the power button. It’s as fast and accurate as any capacitive sensor on a phone today. The aluminum frame and the buttons have the same matte finish, which differs from the glossy look of the other Pro Pixels. The more grippy matte texture is preferable for a phone you need to fold and unfold throughout the day.

Thanks to the modestly slimmer bezels, Google equipped the phone with a 6.4-inch external screen, slightly larger than the 6.3-inch panel on last year’s Fold. The 120 Hz OLED has a respectable 1080p resolution, and the brightness peaks around 3,000 nits, making it readable in bright outdoor light.

The Pixel 10 Pro Fold (left) has a more compact hinge and slimmer bezels compared to the Pixel 9 Pro Fold (right).

The Pixel 10 Pro Fold has a big 8-inch flexible OLED inside, clocking in at 2076×2152 pixels and 120 Hz. It gets similarly bright, but the plastic layer is more reflective than the Gorilla Glass Victus 2 on the cover screen. While the foldable screen is legible, it’s not as pleasant to use outside as high-brightness glass screens.

Like all foldable screens, it’s possible to damage the internal OLED if you’re not careful. On the other hand, the flexible OLED is well-protected when the phone is closed—there’s no gap between the halves, and the magnets hold them together securely. There’s a crease visible in the middle of the screen, but it’s slightly improved from last year’s phone. You can see it well from some angles, but you get used to it.

The Jade colorway looks great. Credit: Ryan Whitwam

While the flat Pixel 10 phones have dropped the physical SIM card slot, the Pixel 10 Pro Fold still has one. It has moved to the top this year, but it seems like only a matter of time before Google removes the slot in foldables, too. For the time being, you can move a physical SIM card to the Fold, transfer to eSIM, or use a combination of physical and electronic SIMs.

Google’s take on big Androids

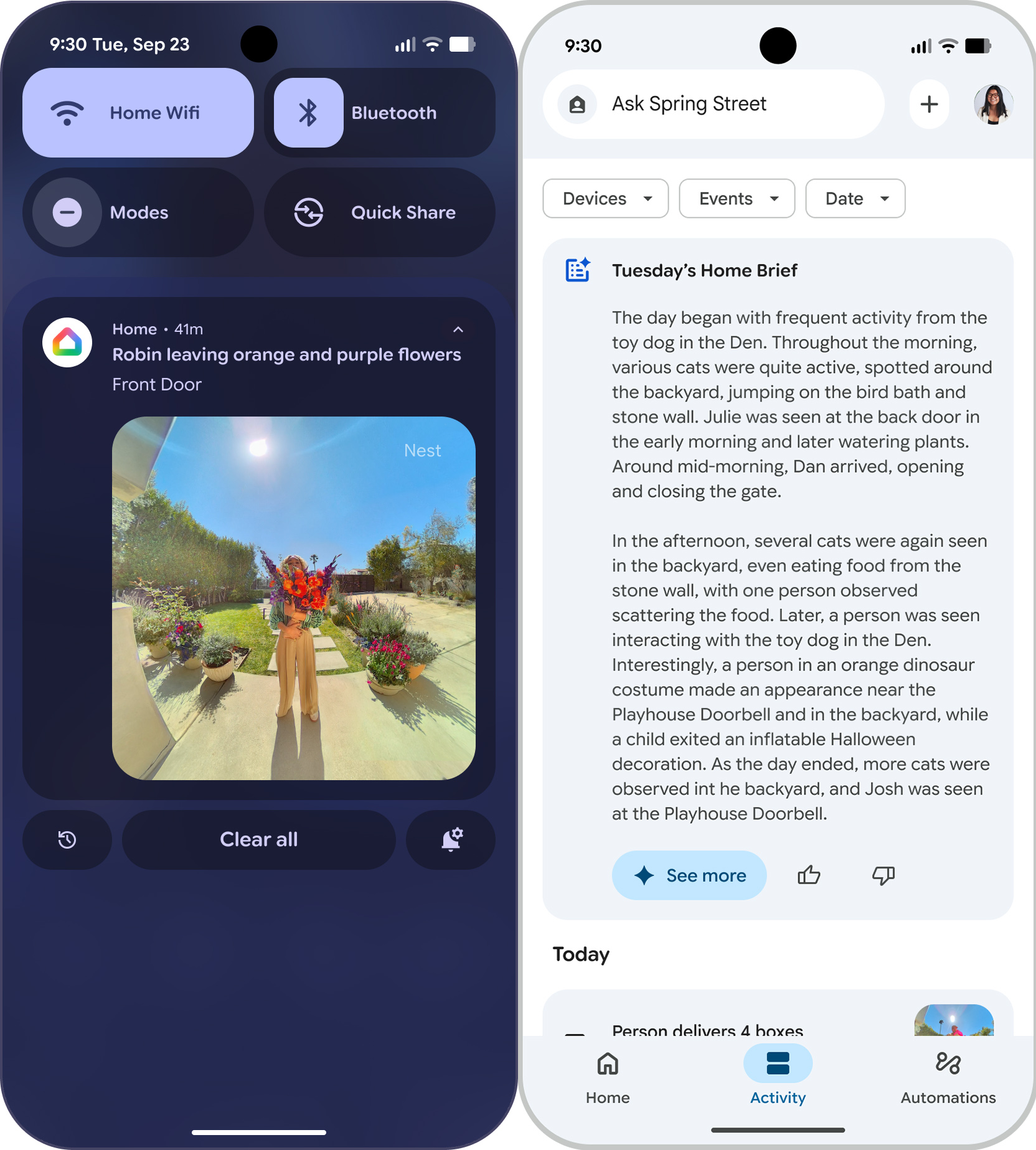

Google’s version of Android is pretty refined these days. The Pixel 10 Pro Fold uses the same AI-heavy build of Android 16 as the flat Pixels. That means you can expect old favorites like Pixel Screenshots, Call Screen, and Magic Compose, along with new arrivals like Magic Cue and Pixel Journal. One thing you won’t see right now is the largely useless Daily Brief, which was pulled after its launch on the Pixel 10 so it could be improved.

Google’s expanded use of Material 3 Expressive theming is also a delight. The Pixel OS has a consistent, clean look you don’t often see on Android phones. Google bundles almost every app it makes on this phone, but you won’t see any sponsored apps, junk games, or other third-party software cluttering up the experience. In short, if you like the vibe of the Pixel OS on other Pixel 10 phones, you’ll like it on the Pixel 10 Pro Fold. We’ve noted a few minor UI glitches in the launch software, but there are no show-stopping bugs.

Multitasking on foldables is a snap. Credit: Ryan Whitwam

The software on this phone goes beyond the standard Pixel features to take advantage of the folding screen. There’s a floating taskbar that can make swapping apps and multitasking easier, and you can pin it on the screen for even more efficiency. The Pixel 10 Pro Fold also supports saving app pairs to launch both at once in split-screen.

Google’s multi-window system on the Fold isn’t as robust as what you get with Samsung, though. For example, split-screen apps open in portrait mode on the Pixel, and if you want them in landscape, you have to physically rotate the phone. On Samsung foldables, you can move app windows around and change the orientation however you like—there’s even support for floating app windows and up to three windowed apps. Google reserves floating windows for tablets, none of which it has released since the Pixel Tablet in 2023. It would be nice to see a bit more multitasking power to make the most of the Fold’s big internal display.

As with all of Google’s Pixels, the new foldable gets seven years of update support, all the way through 2032. You’ll probably need at least one battery swap to make it that long, but you might be more inclined to hold onto an $1,800 phone for seven years. Samsung also offers seven years of support, but its updates are slower and don’t usually include new features after the first year. Google rolls out new updates promptly every month, and updated features are delivered in regular Pixel Drops.

Almost the best cameras

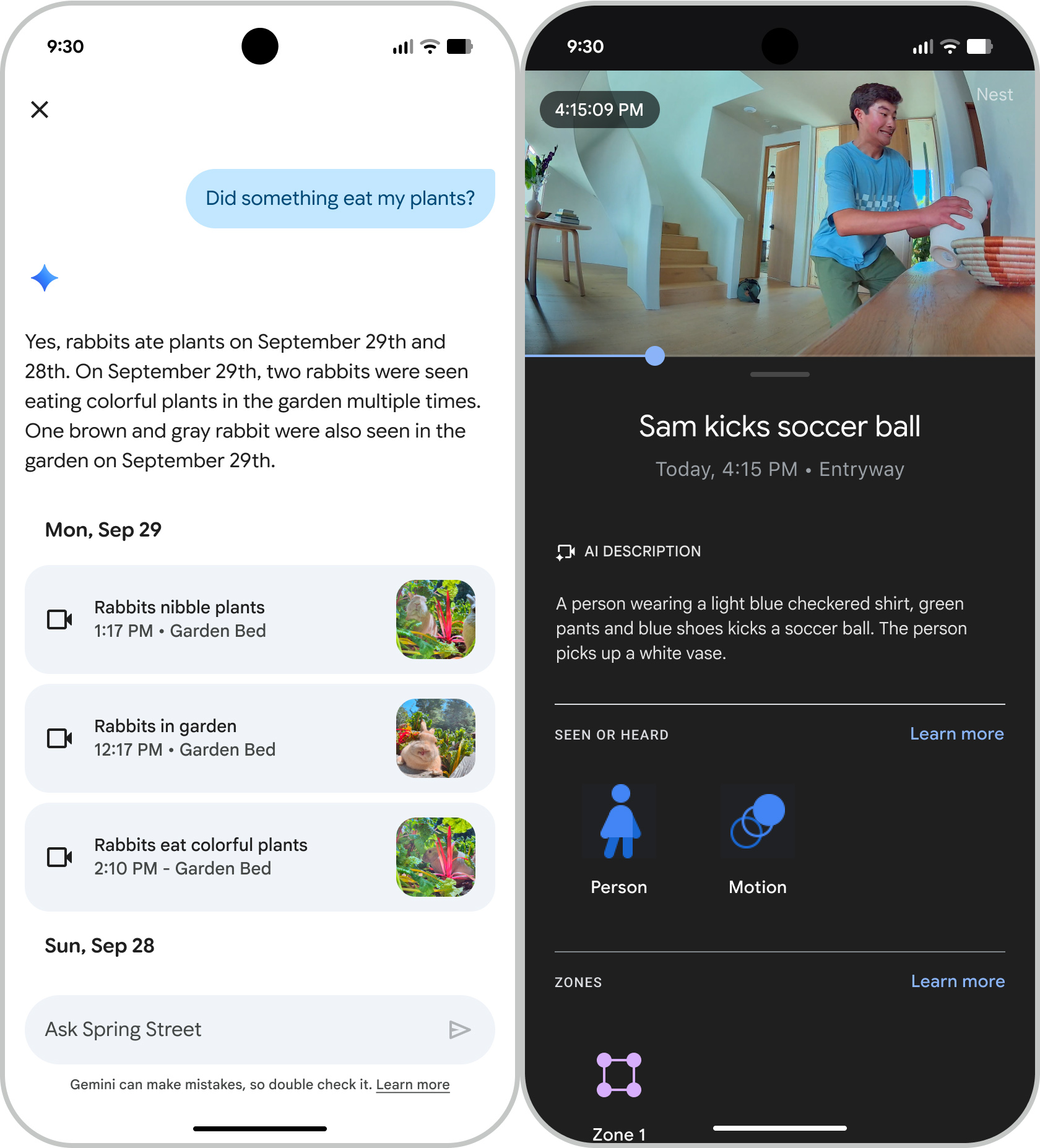

Google may have fixed some of the drawbacks of foldables, but you’ll get better photos with flat Pixels. That said, the Pixel 10 Pro Fold is no slouch—it has a camera setup very similar to the base model Pixel 10 (and last year’s foldable), which is still quite good in the grand scheme of mobile photography.

The cameras are unchanged from last year. Credit: Ryan Whitwam

The Pixel 10 Pro Fold sports a 48 MP primary sensor, a 10.5 MP ultrawide, and a 10.8 MP 5x telephoto. There are 10.5 MP selfie cameras peeking through the front and internal displays as well.

Like the other Pixels, this phone is great for quick snapshots. Google’s image processing does an admirable job of sharpening details and has extraordinary dynamic range. The phone also manages to keep exposure times short to help capture movement. You don’t have to agonize over exactly how to frame a shot or wait for the right moment to hit the shutter. The Pixel 10 Pro and Pro XL do all of this slightly better, but provided you don’t zoom too much, the Pixel 10 Pro Fold photos are similarly excellent.

Medium indoor light. Credit: Ryan Whitwam

The primary sensor does better than most in dim conditions, but this is where you’ll notice limitations compared to the flat Pro phones. The Fold’s smaller image sensor can’t collect as much light, resulting in longer exposures. You’ll notice this most in Night Sight shots.

The telephoto sensor is only 10.8 MP compared to 48 MP on the other Pro Pixels. So images won’t be as sharp if you zoom in, but the normal framing looks fine and gets you much closer to your subject. The phone does support up to 20x zoom, but going much beyond 5x begins to reveal the camera’s weakness, and even Google’s image processing can’t hide that. The ultrawide camera is good enough for landscapes and wide group shots, but don’t bother zooming in. It also has autofocus for macro shots.

The selfie cameras are acceptable, but you don’t have to use them. As a foldable, this phone allows you to use the main cameras to snap selfies with the external display as a viewfinder. The results are much better, but the phone is a bit awkward to hold in that orientation. Google also added a few more camera features that complement the form factor, including a split-screen camera roll similar to Samsung’s app and a new version of the Made You Look cover screen widgets.

The Pixel 10 Pro Fold can leverage generative AI in several imaging features, so it has the same C2PA labeling as the other Pixels. We’ve seen this “AI edited” tag appear most often on images from the flat Pixels that are zoomed beyond 20x, so you likely won’t end up with any of those on the Fold. However, features like Add Me and Best Take will get the AI labeling.

The Tensor tension

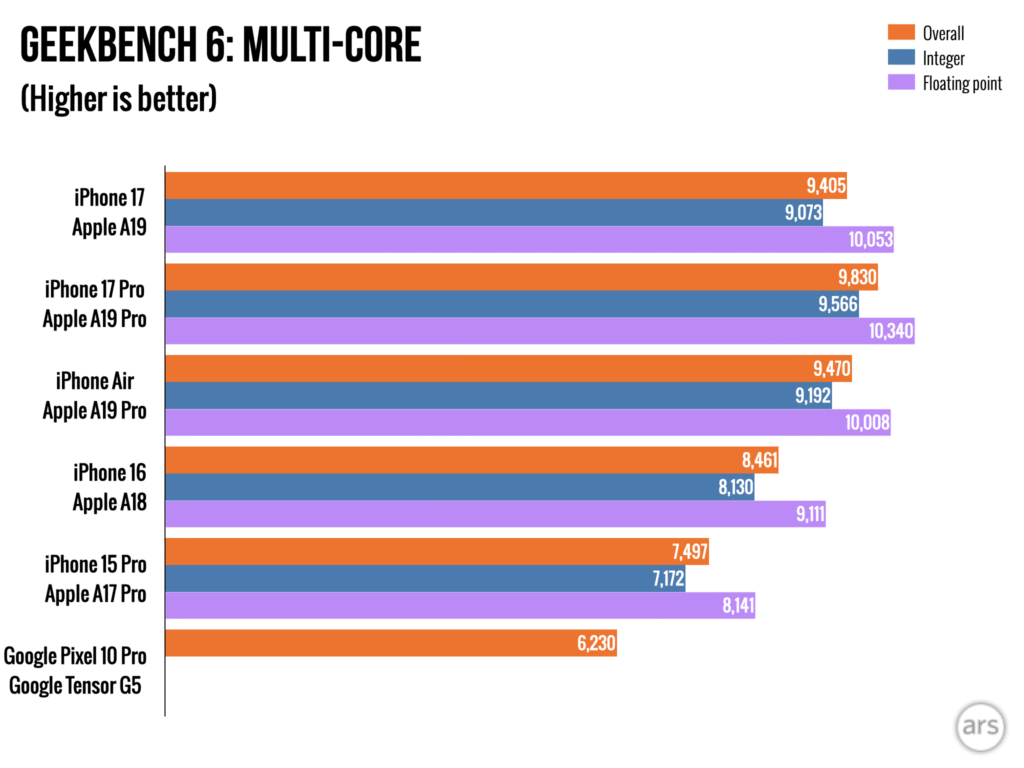

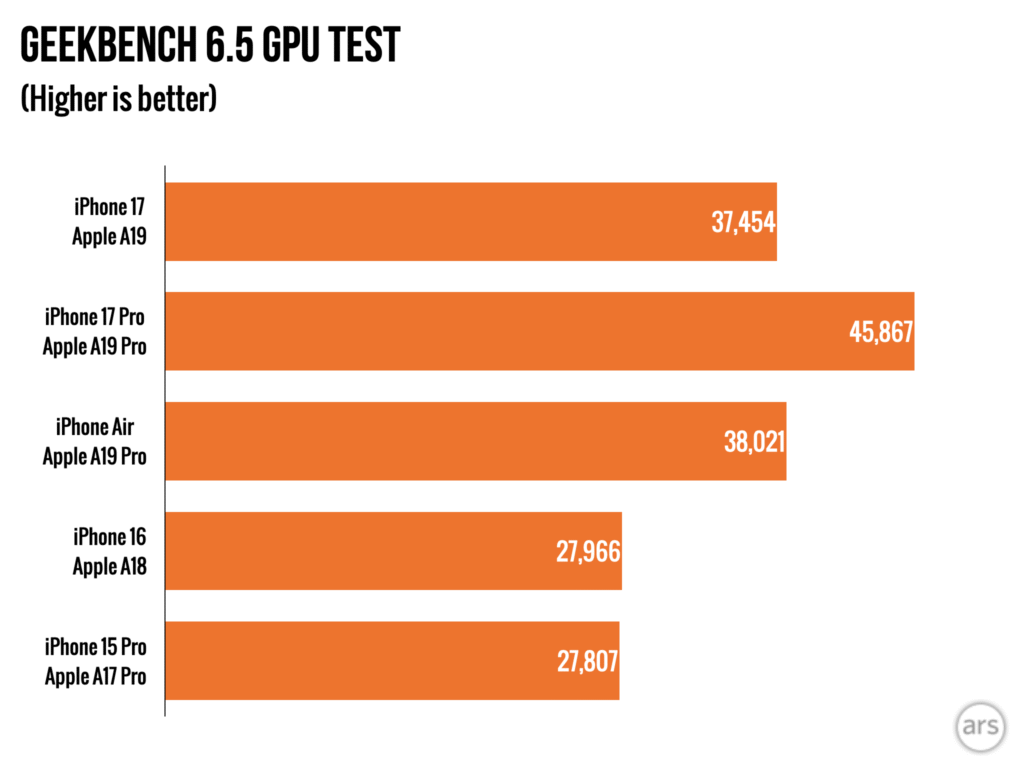

This probably won’t come as a surprise, but the Tensor G5 in the Pixel 10 Pro Fold performs identically to the Tensor in other Pixel 10 phones. It is marginally faster across the board than the Tensor G4, but this isn’t the huge leap people hoped for with Google’s first TSMC chip. While it’s fast enough to keep the phone chugging, benchmarks are not its forte.

The Pixel 10 Pro Fold hinge has been redesigned. Credit: Ryan Whitwam

Across all our usual benchmarks, the Tensor G5 shows small gains over last year’s Google chip, but it’s running far behind the latest from Qualcomm. We expect that gap to widen even further when Qualcomm updates its flagship Snapdragon line in a few months.

The Tensor G5 does remain a bit cooler under load than the Snapdragon 8 Elite, losing only about 20 percent to thermal throttling. So real-world gaming performance on the Pixel 10 Pro Fold is closer to Qualcomm-based devices than the benchmark numbers would lead you to believe. Some game engines behave strangely on the Tensor’s PowerVR GPU, though. If mobile gaming is a big part of your usage, a Samsung or OnePlus flagship might be more your speed.

Day-to-day performance with the Pixel 10 Pro Fold is solid. Google’s new foldable is quick to register taps and open apps, even though the Tensor G5 chip doesn’t offer the most raw speed. Even on Snapdragon-based phones like the Galaxy Z Fold 7, the UI occasionally hiccups or an animation gets jerky. That’s a rarer occurrence on the Pixel 10 Pro Fold.

One of the biggest spec bumps is the battery—it’s 365 mAh larger, at 5,015 mAh. This finally puts Google’s foldables in the same range as flat phones. Granted, you will use more power when the main display is unfurled, and you should not expect a substantial increase in battery life generally. The power-hungry Tensor and increased background AI processing appear to soak up most of the added capacity. The Pixel 10 Pro Fold should last all day, but there won’t be much leeway.

The Pixel 10 Pro Fold does bring a nice charging upgrade, boosting wired speeds from 21 W to 30 W with a USB-PD charger that supports PPS (as most now do). That’s enough for a 50 percent charge in about half an hour. Wireless charging is now twice as fast, thanks to the addition of Qi2 support. Any Qi2-certified charger can hit those speeds, including the Google Pixelsnap charger. But the Fold is limited to 15 W, whereas the Pixel 10 Pro XL gets 25 W over Qi2. It’s nice to see an upgrade here, but all of Google’s phones should charge faster than they do.
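As a rough sanity check on those charging figures, the sketch below estimates how much average power it takes to put a 50 percent charge into a 5,015 mAh battery in half an hour. The 3.85 V nominal cell voltage is an assumption (a typical value for phone batteries, not a spec from Google), and the math ignores conversion losses and the way charging tapers as the battery fills.

```python
# Back-of-the-envelope charging math for the Pixel 10 Pro Fold.
# Capacity and peak wattage come from the review; the nominal cell
# voltage is an ASSUMPTION, and charging losses are ignored.

CAPACITY_MAH = 5015          # battery capacity from the spec table
PEAK_WIRED_W = 30            # peak wired charging speed from the review
NOMINAL_VOLTAGE_V = 3.85     # assumed typical phone cell voltage


def pack_energy_wh(capacity_mah: float, voltage_v: float) -> float:
    """Convert a capacity in mAh to stored energy in watt-hours."""
    return capacity_mah / 1000 * voltage_v


def average_power_w(energy_wh: float, fraction: float, minutes: float) -> float:
    """Average power needed to add `fraction` of the pack in `minutes`."""
    return energy_wh * fraction / (minutes / 60)


energy_wh = pack_energy_wh(CAPACITY_MAH, NOMINAL_VOLTAGE_V)
avg_w = average_power_w(energy_wh, fraction=0.5, minutes=30)

print(f"Estimated pack energy: {energy_wh:.1f} Wh")
print(f"Average power for 50% in 30 min: {avg_w:.1f} W (peak is {PEAK_WIRED_W} W)")
```

Under these assumptions, the average comes out well under the 30 W peak, which is consistent with a phone that only briefly holds its top charging speed before tapering off.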

Big phone, big questions

The Pixel 10 Pro Fold is better than last year’s Google foldable, and that means there’s a lot to like. The new hinge and slimmer bezels make the third-gen foldable a bit easier to hold, and the displays are fantastic. The camera setup, while a step down from the other Pro Pixels, is still one of the best you can get on a phone. The addition of Qi2 charging is much appreciated, too. And while Google has overloaded the Pixels with AI features, more of them are useful compared to those on the likes of Samsung, Motorola, or OnePlus.

Left: Pixel 10 Pro Fold, Right: Pixel 10 Pro. Credit: Ryan Whitwam

That’s all great, but these are relatively minor improvements for an $1,800 phone, and the competition is making great strides. The Pixel 10 Pro Fold isn’t as fast or slim as the Galaxy Z Fold 7, and Samsung’s multitasking system is much more powerful. The Z Fold 7 retails for $200 more, but that distinction hardly matters as you close in on two grand for a smartphone. If you’re willing to pay $1,800, going to $2,000 isn’t much of a leap.

It’s the size of a normal phone when closed. Credit: Ryan Whitwam

The Pixel 10 Pro Fold is the ultimate Google phone with some useful AI features, but the Galaxy Z Fold 7 is a better piece of hardware. Ultimately, the choice depends on what’s more important to you, but Google will have to move beyond iterative upgrades if it wants foldables to look like a worthwhile upgrade.

The good

- Redesigned hinge and slimmer bezels

- Huge, gorgeous foldable OLED screen

- Colorful, attractive Material 3 UI

- IP68 certification

- Includes Qi2 with magnetic attachment

- Seven years of update support

- Most AI features run on-device for better privacy

The bad

- Cameras are a step down from other Pro Pixels

- Tons of AI features you probably won’t use

- Could use more robust multitasking

- Tensor G5 still not benchmark king

- High $1,800 price

Ryan Whitwam is a senior technology reporter at Ars Technica, covering the ways Google, AI, and mobile technology continue to change the world. Over his 20-year career, he’s written for Android Police, ExtremeTech, Wirecutter, NY Times, and more. He has reviewed more phones than most people will ever own. You can follow him on Bluesky, where you will see photos of his dozens of mechanical keyboards.
