It’s too expensive to fight every AI copyright battle, Getty CEO says

Getty dumped “millions and millions” into just one AI copyright fight, CEO says.

In some ways, Getty Images has emerged as one of the most steadfast defenders of artists’ rights in AI copyright fights. Starting in 2022, when some of today’s most sophisticated image generators first started testing new models offering better compositions, Getty banned AI-generated uploads to its service. The next year, Getty released a “socially responsible” image generator to prove it was possible to build such a tool while rewarding artists, and it sued an AI firm that refused to pay artists.

But Getty Images CEO Craig Peters recently told CNBC that, in the years since, the media company has discovered that it’s simply way too expensive to fight every AI copyright battle.

According to Peters, Getty has dumped millions into just one copyright fight against Stability AI.

It’s “extraordinarily expensive,” Peters told CNBC. “Even for a company like Getty Images, we can’t pursue all the infringements that happen in one week.” He confirmed that “we can’t pursue it because the courts are just prohibitively expensive. We are spending millions and millions of dollars in one court case.”

Fair use?

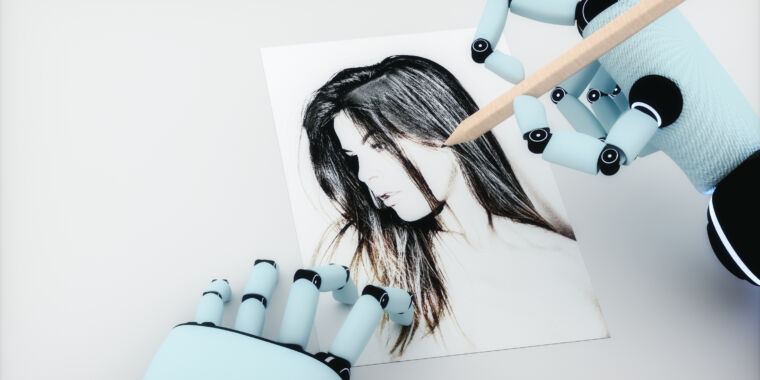

Getty sued Stability AI in 2023, after the AI company’s image generator, Stable Diffusion, started spitting out images that replicated Getty’s famous trademark. In the complaint, Getty alleged that Stability AI had trained Stable Diffusion on “more than 12 million photographs from Getty Images’ collection, along with the associated captions and metadata, without permission from or compensation to Getty Images, as part of its efforts to build a competing business.”

As Getty saw it, Stability AI had plenty of opportunity to license the images from Getty and seemingly “chose to ignore viable licensing options and long-standing legal protections in pursuit of their stand-alone commercial interests.”

Stability AI, like other AI firms, has argued that AI training based on freely scraping images from the web is a “fair use” protected under copyright law.

So far, courts have not settled this debate, while many AI companies have urged judges and governments globally to settle it for the courts, for the sake of safeguarding national security and securing economic prosperity by winning the AI race. According to AI companies, paying artists to train on their works threatens to slow innovation, while rivals in China—who aren’t bound by US copyright law—continue scraping the web to advance their models.

Peters called out Stability AI for adopting this stance, arguing that rightsholders shouldn’t have to spend millions fighting against a claim that paying out licensing fees would “kill innovation.” Some critics have likened AI firms’ argument to a defense of forced labor, suggesting the US would never value “innovation” above human rights, and the same logic should follow for artists’ rights.

“We’re battling a world of rhetoric,” Peters said, alleging that these firms “are taking copyrighted material to develop their powerful AI models under the guise of innovation and then ‘just turning those services right back on existing commercial markets.’”

To Peters, that’s simply “disruption under the notion of ‘move fast and break things,’” and Getty believes “that’s unfair competition.”

“We’re not against competition,” Peters said. “There’s constant new competition coming in all the time from new technologies or just new companies. But that [AI scraping] is just unfair competition, that’s theft.”

Broader Internet backlash over AI firms’ rhetoric

Peters’ comments come after Nick Clegg, Meta’s former head of global affairs, received Internet backlash this week for making the same claim that AI firms raise time and again: that asking artists for consent for AI training would “kill” the AI industry, The Verge reported.

According to Clegg, the only viable solution to the tension between artists and AI companies would be to give artists ways to opt out of training, which Stability AI notably started doing in 2022.

“Quite a lot of voices say, ‘You can only train on my content, [if you] first ask,’” Clegg reportedly said. “And I have to say that strikes me as somewhat implausible because these systems train on vast amounts of data.”

On X, Ed Newton-Rex, the CEO of Fairly Trained (a nonprofit that supports artists’ fight against nonconsensual AI training) and a former Stability AI vice president of audio, pushed back on Clegg’s claim in a post viewed by thousands.

“Nick Clegg is wrong to say artists’ demands on AI & copyright are unworkable,” Newton-Rex said. “Every argument he makes could equally have been made about Napster”: first, that “the tech is out there”; second, that “licensing takes time”; and third, that “we can’t control what other countries do.” If Napster’s operations weren’t legal, AI firms’ training shouldn’t be either, Newton-Rex said, writing, “These are not reasons not to uphold the law and treat creators fairly.”

Other social media users mocked Clegg with jokes meant to destroy AI firms’ favorite go-to argument against copyright claims.

“Blackbeard says asking sailors for permission to board and loot their ships would ‘kill’ the piracy on the high seas industry,” an X user with the handle “Seanchuckle” wrote.

On Bluesky, a trial lawyer, Max Kennerly, effectively satirized Clegg and the whole AI industry by writing, “Our product creates such little value that it is simply not viable in the marketplace, not even as a niche product. Therefore, we must be allowed to unilaterally extract value from the work of others and convert that value into our profits.”

Other ways to fight

Getty plans to continue fighting against the AI firms that are impressing this “world of rhetoric” on judges and lawmakers, but court battles will likely remain few and far between due to the price tag, Peters has suggested.

There are other ways to fight, though. In a submission last month, Getty pushed the Trump administration to reject “those seeking to weaken US copyright protections by creating a ‘right to learn’ exemption” for AI firms when building Trump’s AI Action Plan.

“US copyright laws are not obstructing the path to continued AI progress,” Getty wrote. “Instead, US copyright laws are a path to sustainable AI and a path that broadens society’s participation in AI’s economic benefits, which reduces downstream economic burdens on the Federal, State and local governments. US copyright laws provide incentives to invest and create.”

In Getty’s submission, the media company emphasized that requiring consent for AI training is not an “overly restrictive” control on AI’s development such as those sought by stauncher critics “that could harm US competitiveness, national security or societal advances such as curing cancer.” And Getty claimed it also wasn’t “requesting protection from existing and new sources of competition,” despite the lawsuit’s suggestion that Stability AI and other image generators threaten to replace Getty’s image library in the market.

What Getty said it hopes Trump’s AI plan will ensure is a world where the rights and opportunities of rightsholders are not “usurped for the commercial benefits” of AI companies.

In 2023, when Getty was first suing Stability AI, Peters suggested that allowing AI firms to widely avoid paying artists would create “a sad world,” perhaps disincentivizing creativity.
