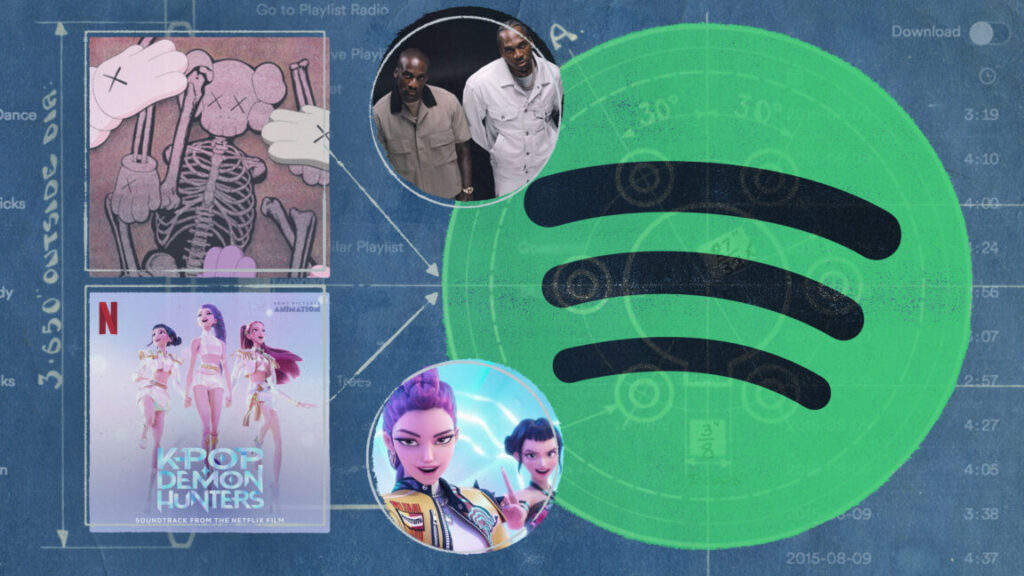

Spotify won court order against Anna’s Archive, taking down .org domain

When shadow library Anna’s Archive lost its .org domain in early January, the controversial site’s operator said the suspension didn’t appear to have anything to do with its recent mass scraping of Spotify.

But it turns out, to the surprise of few, that the domain suspension resulted from a lawsuit filed by Spotify along with major record labels Sony, Warner, and Universal Music Group (UMG). The music companies sued Anna’s Archive in late December in US District Court for the Southern District of New York, and the case was initially sealed.

A judge ordered the case unsealed on January 16 “because the purpose for which sealing was ordered has been fulfilled.” Numerous documents were made public on the court docket yesterday, and they explain events around the domain suspension.

On January 2, the music companies asked for a temporary restraining order, and the court granted it the same day. The order imposed requirements on Cloudflare and on the Public Interest Registry (PIR), a US-based nonprofit that oversees .org domains.

“Together, PIR and Cloudflare have the power to shut off access to the three web domains that Anna’s Archive uses to unlawfully distribute copyrighted works,” the music companies told the court. They asked the court to issue “a temporary restraining order requiring that Anna’s Archive immediately cease and desist from all reproduction or distribution of the Record Company Plaintiffs’ copyrighted works,” and to “exercise its power under the All Writs Act to direct PIR and Cloudflare to facilitate enforcement of that order.”

Anna’s Archive notified of case after suspension

The companies further asked that Anna’s Archive receive notice of the case by email only after the “order is issued by the Court and implemented by PIR and Cloudflare, to prevent Anna’s Archive from following through with its plan to release millions of illegally obtained, copyrighted sound recordings to the public.” That is apparently what happened, given that the operator of Anna’s Archive initially said domain suspensions are just something that “unfortunately happens to shadow libraries on a regular basis,” and that “we don’t believe this has to do with our Spotify backup.”
