Frogfish reveals how it evolved the “fishing rod” on its head

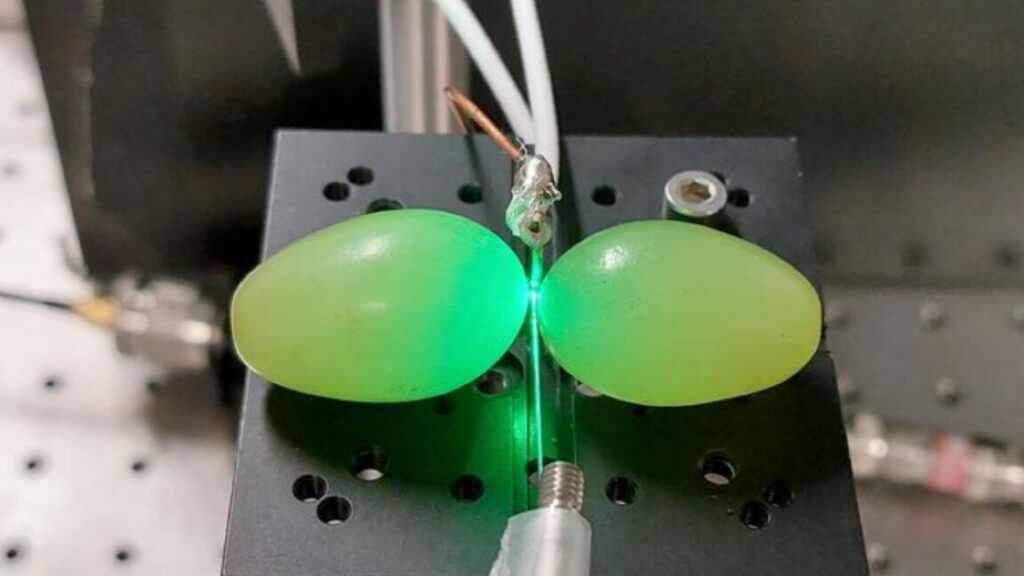

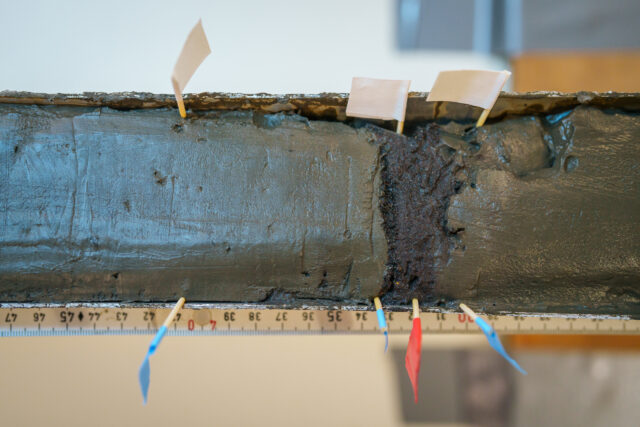

In most bony fish, or teleosts, the motor neurons that control the fins sit in the ventrolateral zone of the ventral horn, the lower part of the spinal cord. In frogfish, the motor neurons controlling the illicium form their own cluster in the dorsolateral zone instead. This is an unusual location for motor neurons in fish.

“The peculiar location of fishing motor neurons, with little doubt, is linked with the specialization of the illicium serving fishing behavior,” the team said in a study recently published in the Journal of Comparative Neurology.

Fishing for answers

So what does this have to do with evolution? The white-spotted pygmy filefish looks nothing like a frogfish and has no built-in fishing lure, but it is a related species, so it may offer some clues.

The first dorsal fin of the filefish barely moves; its main purpose is thought to be scaring off predators by looking menacing. Even so, it still has motor neurons that control it. These motor neurons were found in the same location as the motor neurons for the second, third, and fourth dorsal fins in frogfish. In frogfish, these fins also move little during swimming but can look threatening to a predator.

If the same types of motor neurons control these largely static fins in both species, then the motor neurons controlling the frogfish's illicium are something extra, set apart in both their function and their location.

Yamamoto thinks this unique group of fishing motor neurons suggests that, over the course of evolution, "the motor neurons for the illicium [became] segregated from other motor neurons," as he wrote in the study. They ended up in their own distinct cluster, away from the motor neurons controlling the other fins.

What exactly drove this shift in the function and location of the motor neurons that operate the illicium is still a mystery. How the brain directs the frogfish's fishing behavior is another open question.

While Yamamoto and his team speculate that specific regions of the brain send messages to the fishing motor neurons, they do not yet know which regions are involved, and say that more studies need to be carried out on other species of fish and the groups of motor neurons that power each of their dorsal fins.

In the meantime, the frogfish will continue being its freaky self.

Journal of Comparative Neurology, 2024. DOI: 10.1002/cne.25674
