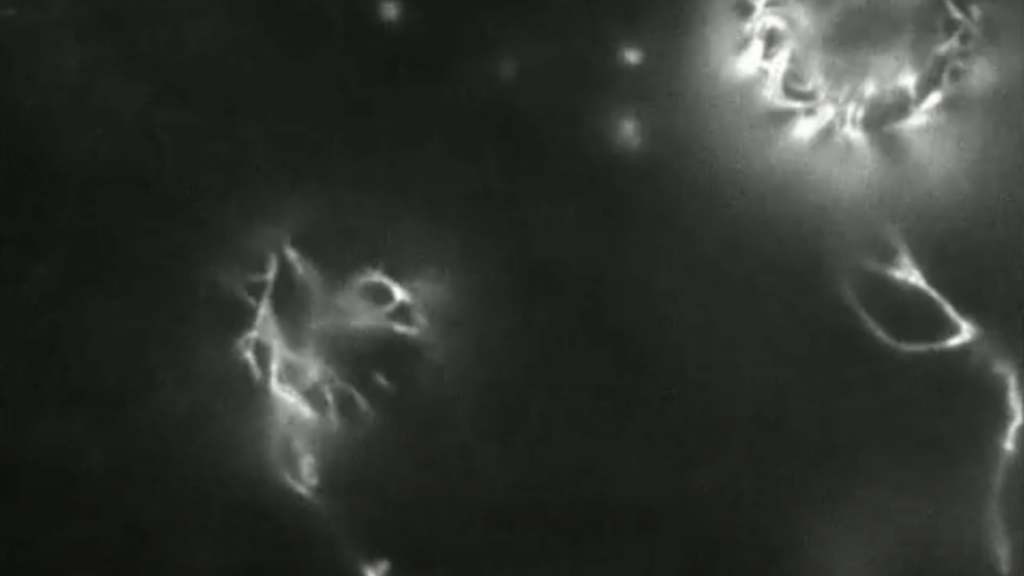

We have the first video of a plant cell wall being built

Plant cells are surrounded by an intricately structured protective coat called the cell wall. It's built of cellulose microfibrils intertwined with other polysaccharides, such as hemicellulose and pectin. We've known what plant cells look like without their walls, and we know what they look like once the walls are fully assembled, but we've never seen the wall-building process in action. "We knew the starting point and the finishing point, but had no idea what happens in between," says Eric Lam, a plant biologist at Rutgers University. He's a co-author of the study that caught wall-building plant cells in action for the first time. And once the researchers saw how the wall actually gets built, it looked nothing like the way biology textbooks have drawn it.

Camera-shy builders

Plant cells without walls, known as protoplasts, are very fragile, and it has been difficult to keep them alive under a microscope for the several hours they need to build new walls. Plant cells are also very sensitive to light, and most microscopy techniques require shining a strong light source on them to get good images.

Then there was the issue of tracking their progress. "Cellulose is not fluorescent, so you can't see it with traditional microscopy," says Shishir Chundawat, a biologist at Rutgers. "That was one of the biggest issues in the past." The only way to see cellulose is to attach a fluorescent marker to it. Unfortunately, the markers typically used to label cellulose either also bound to other compounds or were toxic to the plant cells. Already fragile and light-sensitive, the cells simply couldn't survive for long once toxic markers were added to the mix.
