Review: Framework Desktop is a mash-up of a regular desktop PC and the Mac Studio

Framework’s main claim to fame is its commitment to modular, upgradeable, repairable laptops. The jury’s still out on early 2024’s Framework Laptop 16 and mid-2025’s Framework Laptop 12, neither of which has seen a hardware refresh, but so far, the company has released half a dozen iterations of its flagship Framework Laptop 13 in less than five years. If you bought one of the originals right when it first launched, you could go to Framework’s site, buy an all-new motherboard and RAM, and get a substantial upgrade in performance and other capabilities without having to change anything else about your laptop.

Framework’s laptops haven’t been adopted as industry-wide standards, but in many ways, they seem built to reflect the flexibility and modularity that has drawn me to desktop PCs for more than two decades.

That’s what makes the Framework Desktop so weird. Not only is Framework navigating into a product category where its main innovation and claim to fame is totally unnecessary, but it’s also doing so with a desktop that’s less upgradeable and modular than any given self-built desktop PC.

The Framework Desktop has a lot of interesting design touches, and it’s automatically a better buy than the weird AMD Ryzen AI Max-based mini desktops you can buy from a couple of no-name manufacturers. But aside from being more considerate of PC industry standards, the Framework Desktop asks the same question that any gaming-focused mini PC does: Do you care about having a small machine so much that you would pay more money for less performance, and for a system you can’t upgrade much after you buy it?

Design and assembly

Opening the Framework Desktop’s box. The PC and all its accessories are neatly packed away in all-recyclable cardboard and paper. Credit: Andrew Cunningham

My DIY Edition Framework Desktop arrived in a cardboard box that was already as small as or a bit smaller than my usual desktop PC, a mini ITX build with a dedicated GPU inside a 14.67-liter SSUPD Meshlicious case. It’s not a huge system, especially for something that can fit a GeForce RTX 5090 in it. But three of the 4.5-liter Framework Desktops could fit inside my build’s case with a little space left over.
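The claim that three Framework Desktops would fit inside the Meshlicious case is straightforward volume arithmetic. Here is a minimal Python sketch using the two volumes quoted above. It compares raw volume only and ignores the cases’ actual shapes, so treat it as a sanity check rather than a packing plan; the helper name `how_many_fit` is purely illustrative.

```python
# Volumes quoted in the review, in liters.
MESHLICIOUS_CASE_L = 14.67  # SSUPD Meshlicious mini ITX case
FRAMEWORK_DESKTOP_L = 4.5   # Framework Desktop


def how_many_fit(container_l: float, item_l: float) -> tuple[int, float]:
    """Return how many items fit by volume alone, plus the leftover volume.

    Assumption: this ignores physical shape entirely, so it is an upper
    bound on what could actually be packed in, not a real fit test.
    """
    count = int(container_l // item_l)
    leftover = container_l - count * item_l
    return count, leftover


count, leftover = how_many_fit(MESHLICIOUS_CASE_L, FRAMEWORK_DESKTOP_L)
print(f"{count} desktops, {leftover:.2f} L left over")
```

With these figures it reports three desktops and roughly 1.17 liters to spare, which matches the "little space left over" in the text.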

The PC itself is buried a couple of layers deep in this box under some side panels and whatever fan you choose (Framework offers RGB and non-RGB options from Cooler Master and Noctua, but any 120 mm fan will fit on the heatsink). Even for the DIY Edition, the bulk of it is already assembled: the motherboard is in the case, a large black heatsink is already perched atop the SoC, and both the power supply and front I/O ports are already hooked up.

The aspiring DIYer mainly needs to install the SSD and the fan to get going. Putting in these components gives you a decent crash course in how the system goes together and comes apart. The primary M.2 SSD slot is under a small metal heat spreader next to the main heatsink—loosen one screw to remove it, and install your SSD of choice. The system’s other side panel can be removed to expose a second M.2 SSD slot and the Wi-Fi/Bluetooth module, letting you install or replace either.

Lift the small handles on the two top screws, loosen them by hand, and remove them; the case’s top panel then slides off. This provides easier access to both the CPU fan header and RGB header, so you can connect the fan after you install it and its plastic shroud on top of the heatsink. That’s pretty much it for assembly, aside from sliding the various panels back in place to close the thing up and reinstalling the top screws (or, if you bought or printed one, adding a handle to the top of the case).

The Framework Desktop includes a beefier version of Framework’s usual screwdriver with a longer bit. Credit: Andrew Cunningham

Framework includes a beefier version of its typical screwdriver with the Desktop, including a bit that can be pulled out and reversed to switch between Phillips and Torx heads. The iFixit-style install instructions are clearly written and include plenty of high-resolution sample images so you can always tell how things are supposed to look.

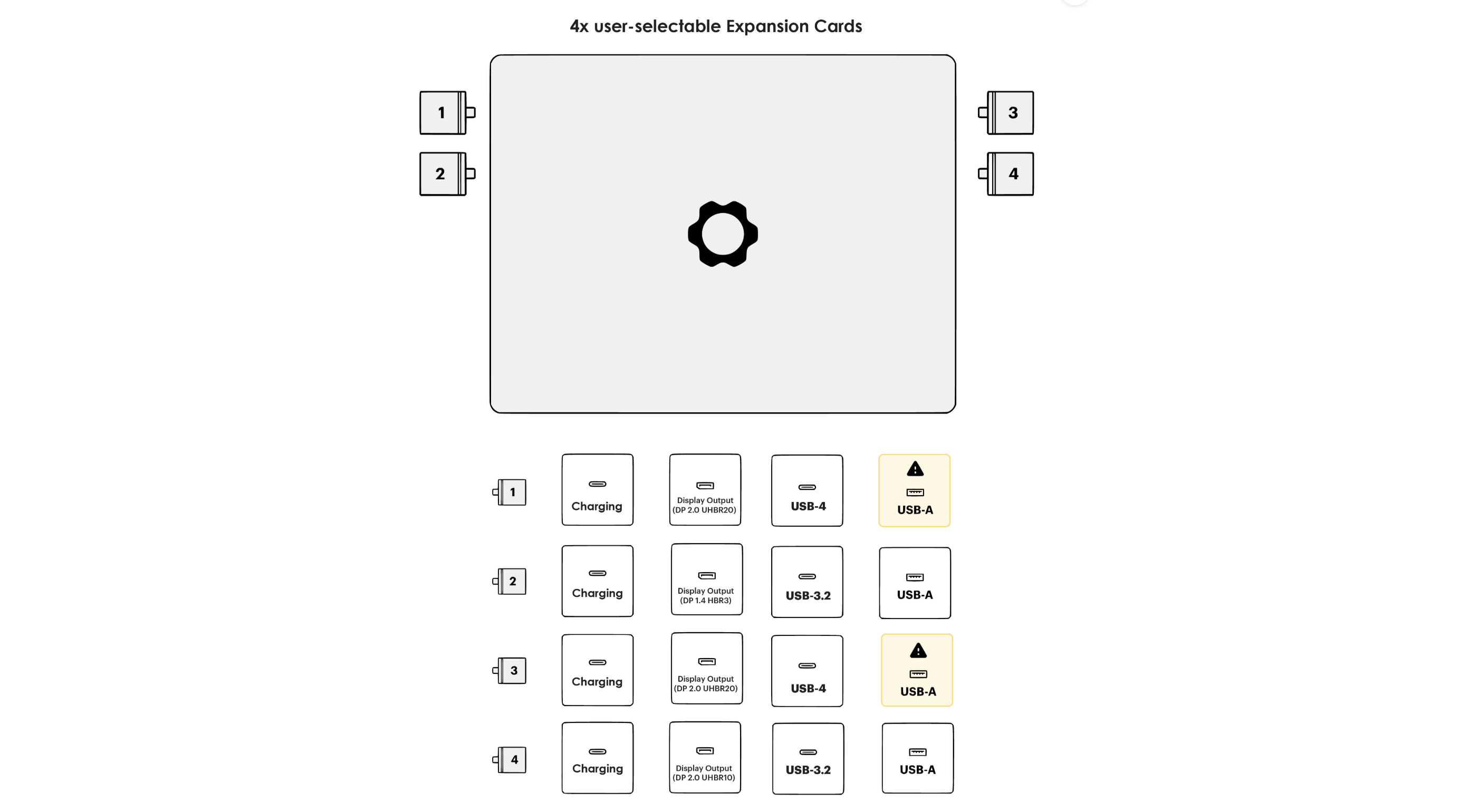

The front of the system requires some assembly, too, but all of this stuff can be removed and replaced easily without opening up the rest of the system. The front panel, where the system’s customizable tiles can be snapped on and popped off, attaches with magnets and can easily be pried away from the desktop with your fingernails. At the bottom are slots for two of Framework’s USB-C Expansion Cards, the same ones that all the Framework Laptops use.

By default, those ports are limited to 5 Gbps USB transfer speeds in the BIOS, something the system says reduces wireless interference; those with all-wired networking and accessories can presumably enable the full 10 Gbps speeds without downsides. The front ports should support all of the Expansion Cards except for display outputs, which they aren’t wired for. (I also had issues getting the Desktop to boot from a USB port on the front of the system while installing Windows, but your mileage may vary; using one of the rear USB ports solved the issue for me.)

Standards, sometimes

Putting in the M.2 SSD. There’s another SSD slot on the back of the motherboard. Credit: Andrew Cunningham

What puts the Framework Desktop above mini PCs from Amazon or the various gaming NUCs that Intel and Asus have released over the years is a commitment to standards.

For reasons we’ll explore later, there was no way to build the system around this specific AMD chip without using soldered-on memory. But the motherboard is a regular mini ITX-sized motherboard. Other ITX boards will fit into Framework’s case, and the Framework Desktop’s motherboard will fit into other systems (as long as they can also fit the fan and heatsink).

The 400 W power supply conforms to the FlexATX standard. The CPU fan is just a regular 120 mm fan, and the mounting holes for system fans on the front can take any 92 mm fan. The two case fan headers on the motherboard are the same ones you’d find on any motherboard you bought for yourself. The front panel ports can’t be used for display outputs, but anything else ought to work.

Few elements of the Framework Desktop are truly proprietary, and if Framework went out of business tomorrow, you’d still have a lot of flexibility for buying and installing replacement parts. The problem is that the soldered-down, non-replaceable, non-upgradeable parts are the CPU, GPU, and RAM. There’s at least a little flexibility with the graphics card if you move the board into a different case—there’s a single PCIe x4 slot on the board that you could put an external GPU into, though many PCIe x16 graphics cards will be bandwidth starved. But left in its original case, it’s an easy-to-work-on, standards-compliant system that will also never be any better or get any faster than it is the day you buy it.

Hope you like plastic

Snapping some tiles into the Framework Desktop’s plastic front panel. Credit: Andrew Cunningham

The interior of the Framework Desktop is built of sturdy metal, thoughtfully molded to give easy access to each of the ports and components on the motherboard. My main beef with the system is the outside.

The front and side panels of the Framework Desktop are all made out of plastic. The clear side panel, if you spring for it, is made of a thick acrylic instead of tempered glass (presumably because Framework has drilled holes in the side of it to improve airflow).

This isn’t the end of the world, but the kinds of premium ITX PC cases that the Desktop is competing with are predominantly made of nicer-looking and nicer-feeling metal rather than plastic. It just feels surprisingly cheap, which was an unpleasant surprise—even the plastic Framework Laptop 12 felt sturdy and high-quality, something I can’t really say of the Desktop’s exterior panels.

I do like the design on the front panel: a grid of 21 small square plastic tiles that users can rearrange however they want. Framework sells tiles with straight and diagonal lines on them, plus individual tiles with different logos or designs printed or embossed on them. If you install a fan in the front of the system, you’ll want to stick to the lined tiles in the top 3 x 3 section of the grid, which will allow air to pass through. The tiles with images on them are solid; putting a couple of them in front of a fan likely won’t hurt your airflow too much, but you won’t want to use too many.

Framework has also published basic templates for both the tiles and the top panel so that those with 3D printers can make their own.

PC testbed notes

We’ve compared the performance of the Framework Desktop to a bunch of other PCs to give you a sense of how it stacks up to full-size desktops. We’ve also compared it to the Ryzen 7 8700G in a Gigabyte B650I Aorus Ultra mini ITX motherboard with 32GB of DDR5-6400 to show the best performance you can expect from a similarly sized socketed desktop system.

Where possible, we’ve also included some numbers from the M4 Pro Mac mini and the M4 Max Mac Studio, two compact desktops in the same general price range as the Framework Desktop.

For our game benchmarks, the dedicated GPU results were gathered using our GPU testbed, which you can read about in our latest dedicated GPU review. The integrated GPUs were obviously tested with the CPUs they’re attached to.

| | AMD AM5 | Intel LGA 1851 | Intel LGA 1700 |
|---|---|---|---|
| CPUs | Ryzen 7000 and 9000 series | Core Ultra 200 series | 12th, 13th, and 14th-generation Core |
| Motherboard | ASRock X870E Taichi or MSI MPG X870E Carbon Wifi (provided by AMD) | MSI MEG Z890 Unify-X (provided by Intel) | Gigabyte Z790 Aorus Master X (provided by Intel) |
| RAM config | 32GB G.Skill Trident Z5 Neo (provided by AMD), running at DDR5-6000 | 32GB G.Skill Trident Z5 Neo (provided by AMD), running at DDR5-6000 | 32GB G.Skill Trident Z5 Neo (provided by AMD), running at DDR5-6000 |

Performance and power

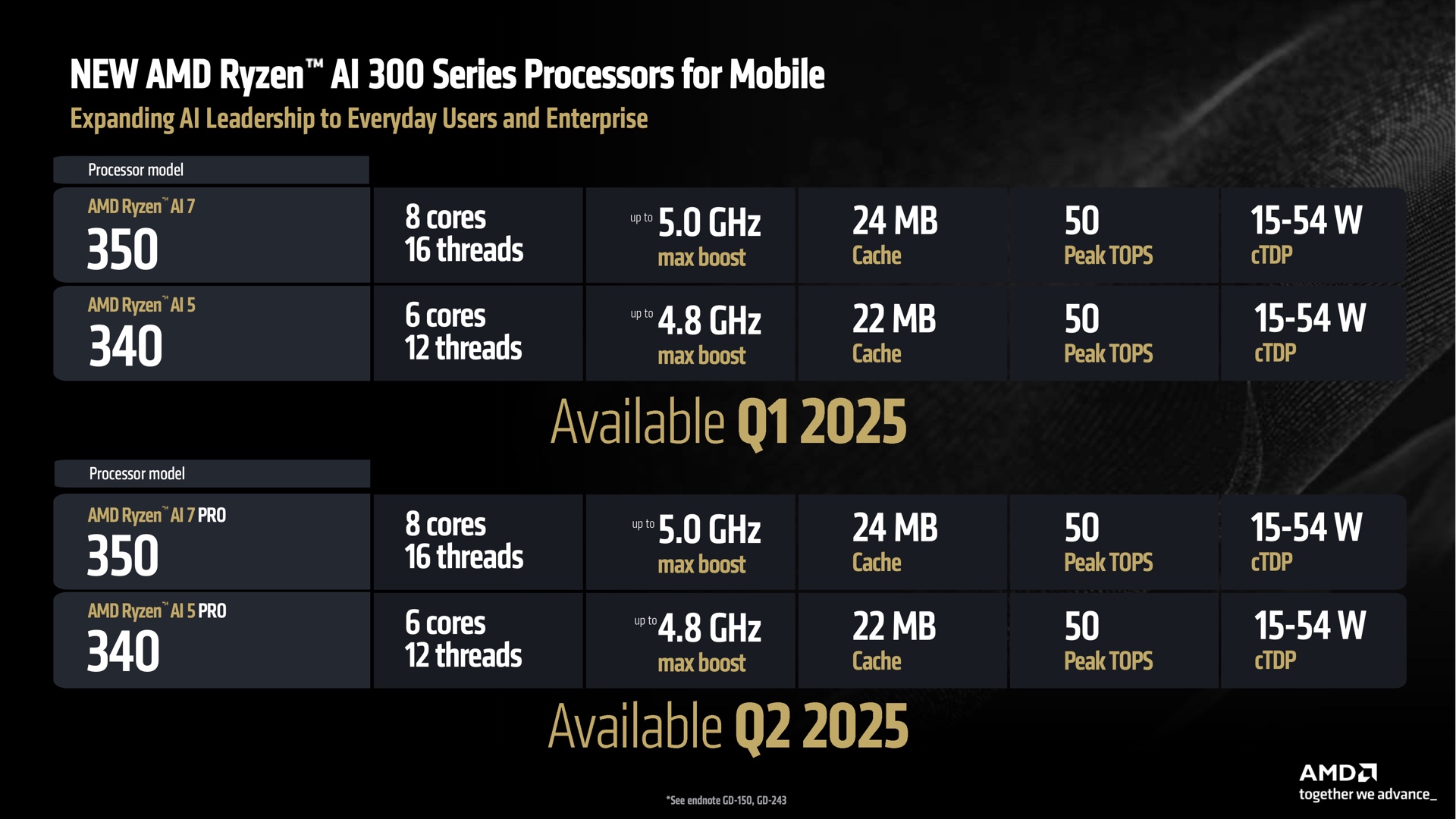

Our Framework-provided review unit was the highest-end option; it has a 16-core Ryzen AI Max+ 395 processor, 40 graphics cores, and 128GB of RAM. At $1,999 before adding an SSD, a fan, an OS, front tiles, or Expansion Cards, this is the best, priciest configuration Framework offers. The $1,599 configuration uses the same chip with the same performance, but with 64GB of RAM instead.

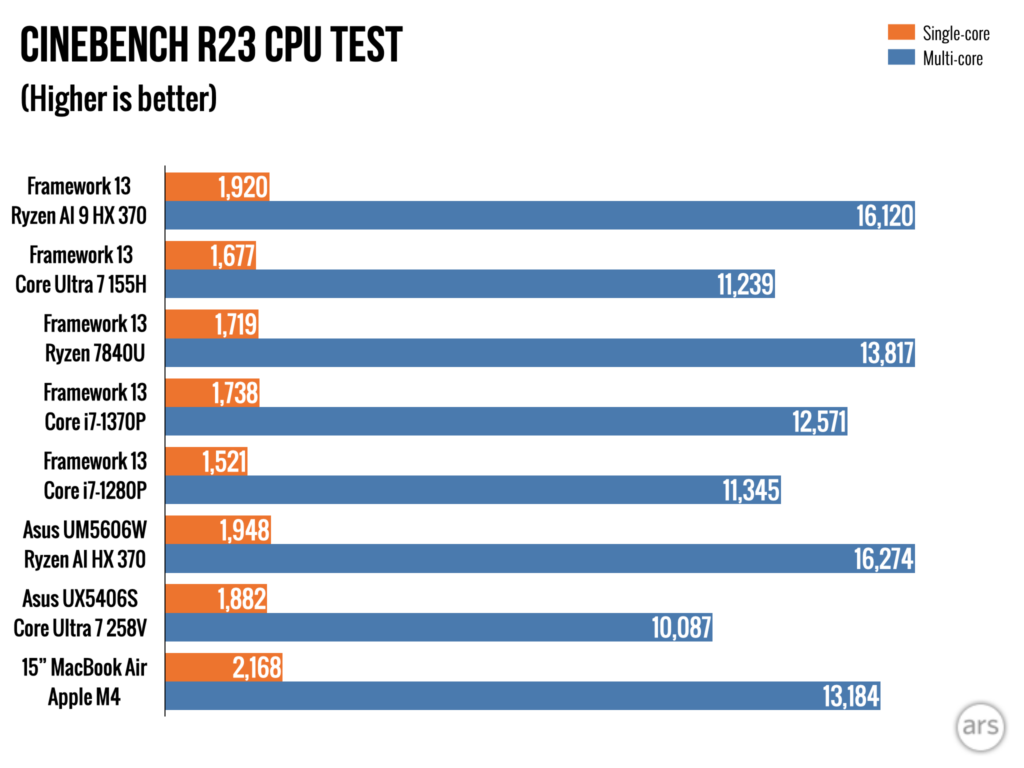

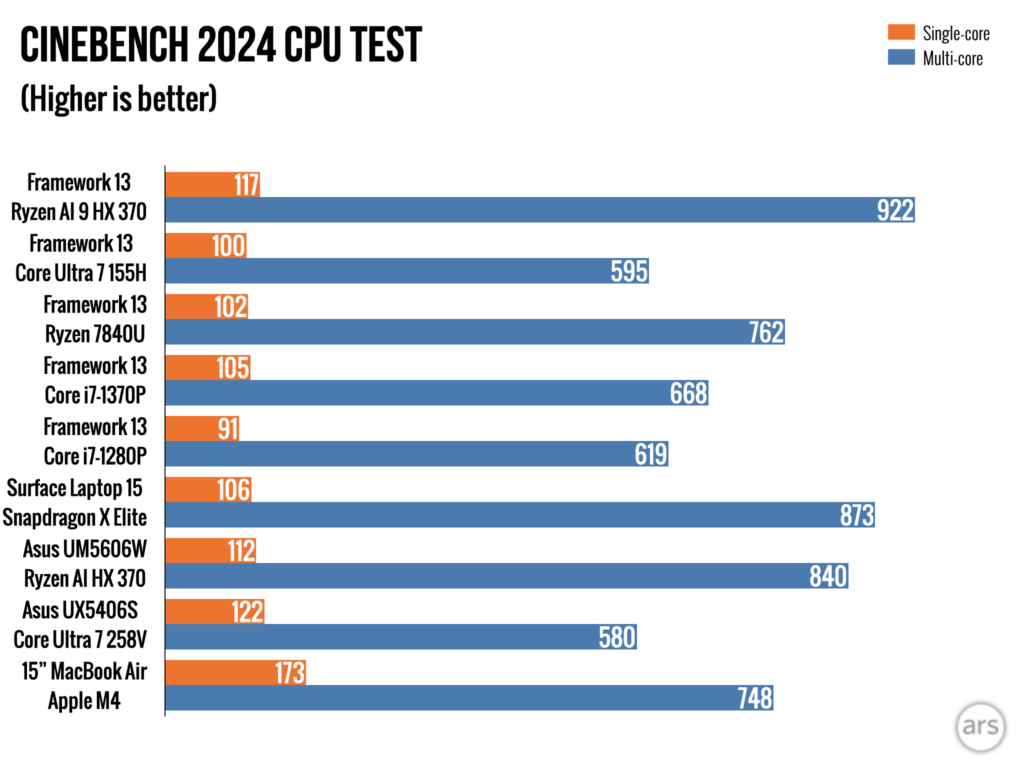

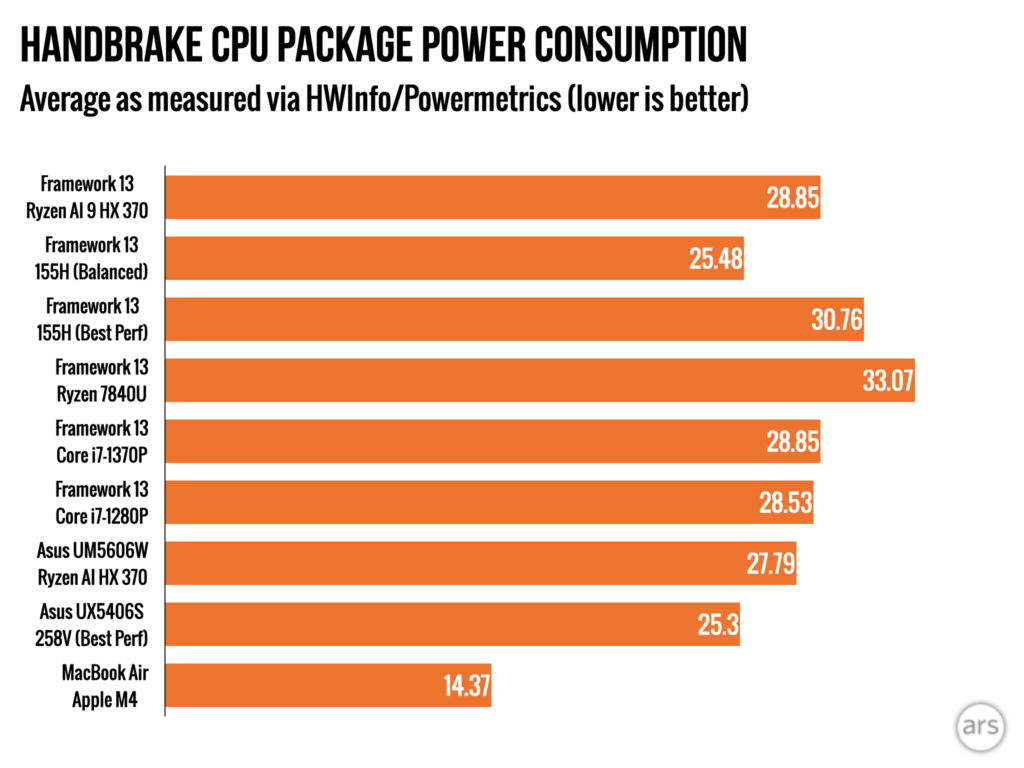

All 16 of those CPU cores are based on the Zen 5 architecture, with none of the smaller-but-slower Zen 5c cores. But the chip’s TDP is also limited to 120 W in total, which will hold it back a bit compared to socketed 16-core desktop CPUs like the Ryzen 9 9950X, which has a 170 W default TDP for the CPU alone.

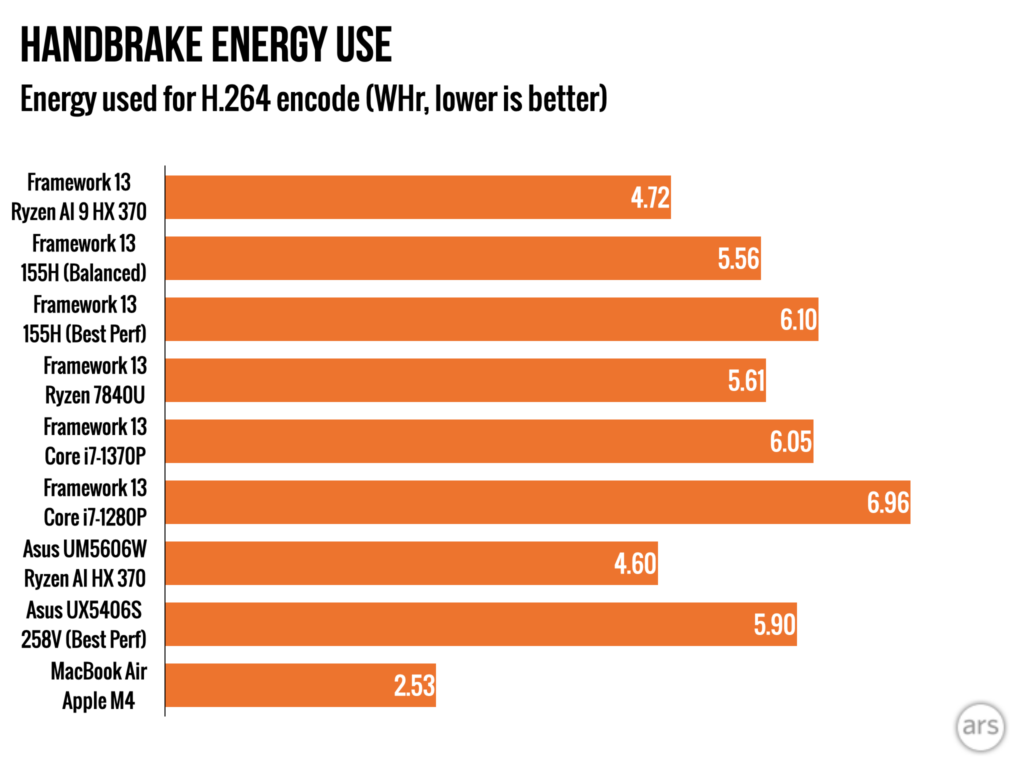

In our testing, it seems clear that the CPU throttles when being tasked with intensive multi-core work like our Handbrake test, with temperatures that spike to around 100 degrees Celsius and hang out at around or just under that number for the duration of our test runs. The CPU package uses right around 100 W on average (this will vary based on the tests you’re running and how long you’re running them), compared to the 160 W and 194 W that the 12- and 16-core Ryzen 9 9900X and 9950X can consume at their default power levels.

Those are socketed desktop chips in huge cases being cooled by large AIO watercooling loops, so it’s hardly a fair comparison. The Framework Desktop’s CPU is also quite efficient, using even less power to accomplish our video encoding test than the 9950X in its 105 W Eco Mode. But this is the consequence of prioritizing a small size—a 16-core processor that, under heavy loads, performs more like a 12-core or even an 8-core desktop processor.

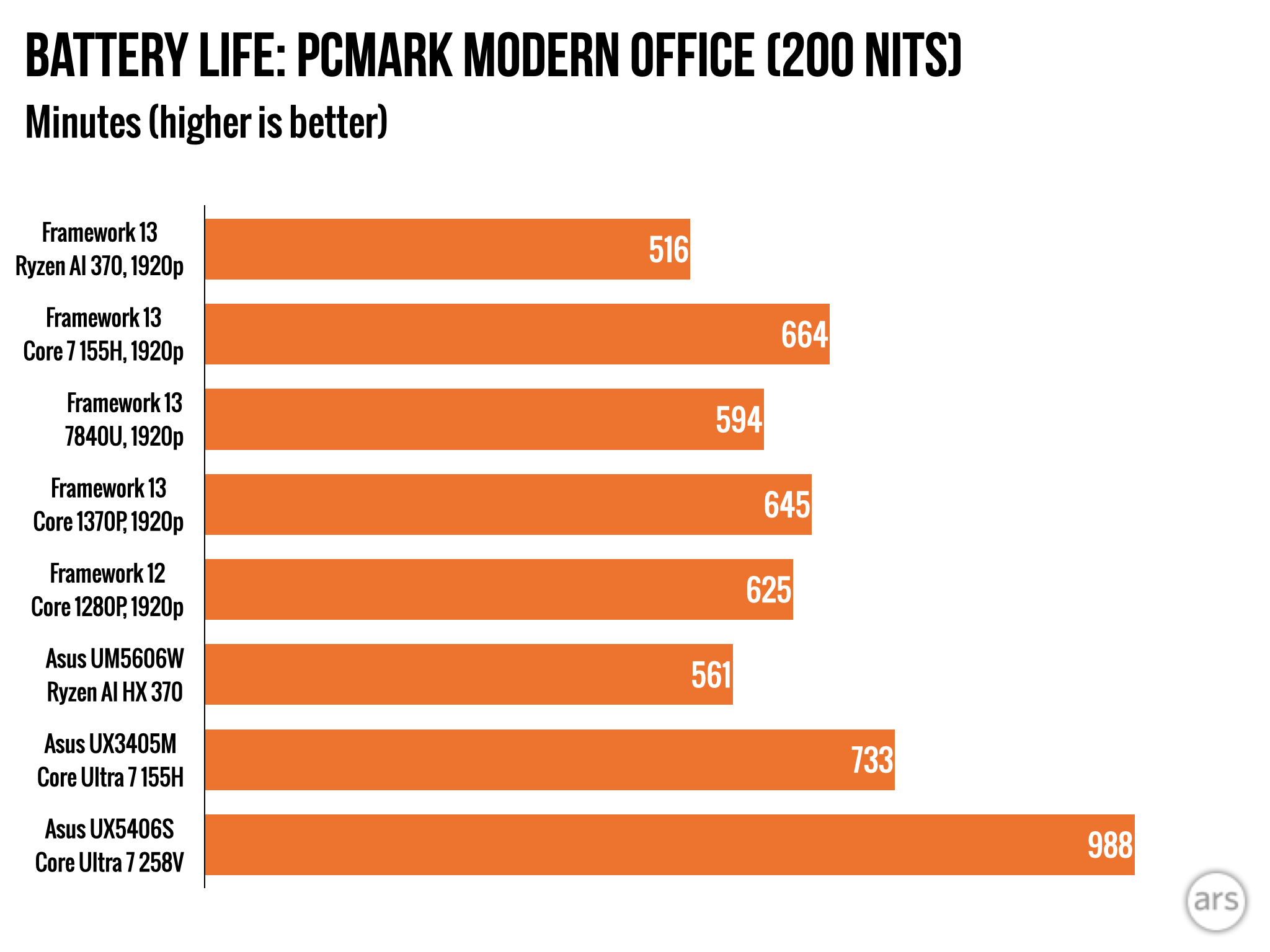

The upside is that the Framework Desktop is quieter than most desktops either under load or when idling. By default, the main CPU fan will turn off entirely when the system is under light load, and I often noticed it parking itself when I was just browsing or moving files around.

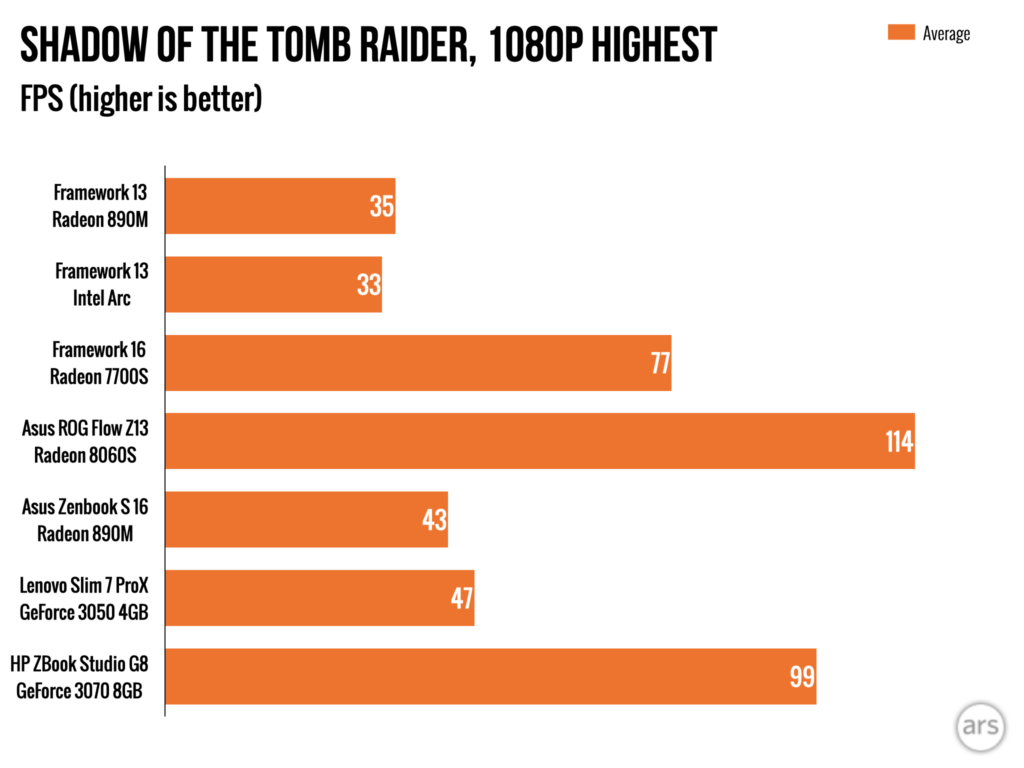

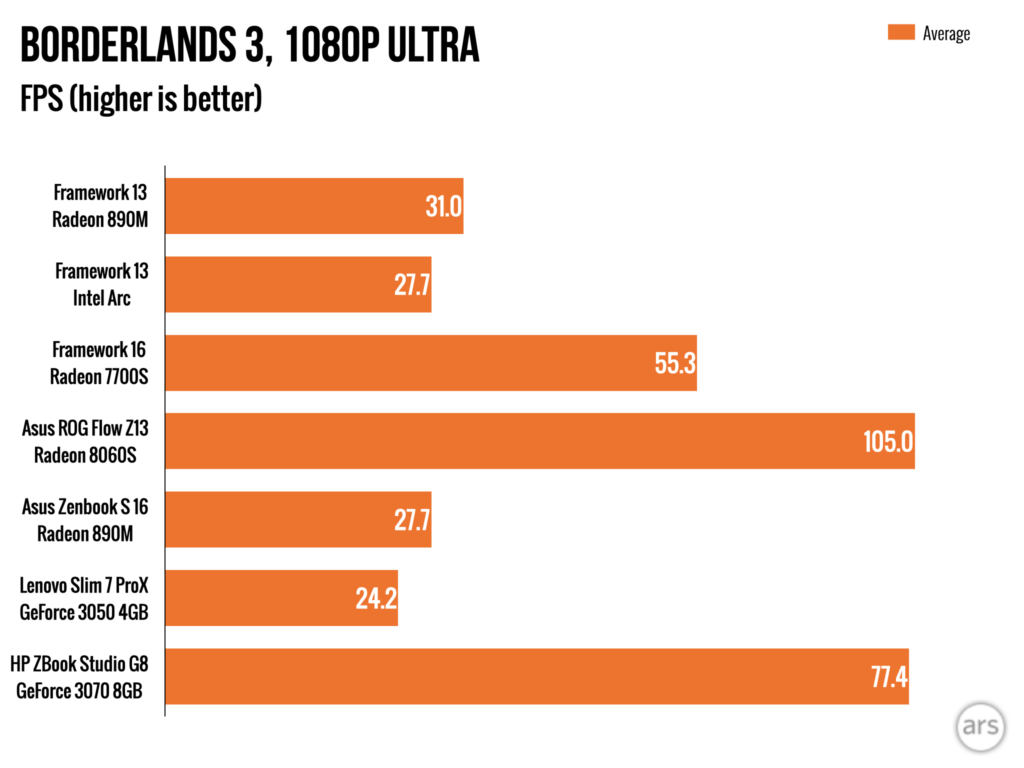

Based on our gaming tests, the Framework Desktop should be a competent 1080p-to-1440p midrange gaming system. We observed similar performance from the Radeon 8060S integrated GPU when we tested it in the Asus ROG Flow Z13 tablet. For an integrated GPU, it’s head and shoulders above anything you can get in a socketed desktop system, and it easily ran three or four times faster than the Radeon 780M in the 8700G. The soldered RAM is annoying, but the extra speed it enables helps address the memory bandwidth problem that starves most integrated GPUs.

Compared to other desktop GPUs, though, the 8060S is merely fine. It’s usually a little slower than the last-generation Radeon RX 7600 XT, a card that cost $329 when it launched in early 2024—and with a performance hit that’s slightly more pronounced in games with ray-tracing effects on.

The 8060S stacks up OK against older midrange GPUs like the GeForce RTX 3060 and 4060, but it’s soundly beaten by the RTX 5060 or the 16GB version of the Radeon RX 9060 XT, cards currently available for $300 to $400. (One problem for the 8060S: it’s based on the RDNA 3.5 architecture, so it’s missing the ray-tracing performance improvements introduced in RDNA 4 and the RX 9000 series.)

All of that said, the GPU may be more interesting than it looks on paper for people whose workloads need gobs and gobs of graphics memory but who don’t necessarily need that memory to be attached to the blazing-fastest GPU that exists. For people running certain AI or machine learning workloads, the 8060S’s unified memory setup means you can get a GPU with 64GB or 128GB of VRAM for less than the price of a single RTX 5090 (Framework says the GPU can use up to 112GB of RAM on the 128GB Desktop). Framework is advertising that use case pretty extensively, and it offers a guide to setting up large language models to run locally on the system.
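As a rough illustration of what a 112GB GPU memory pool means for running models locally, the sketch below applies a common rule of thumb: model weights take about (parameters times bits per weight, divided by 8) bytes. This is an assumption-laden estimate, not Framework’s sizing guidance. The helper names are hypothetical, the 112GB figure is treated as decimal gigabytes, and the calculation ignores the KV cache, context length, and runtime overhead, all of which push real requirements higher.

```python
# Assumption: the GPU can address up to 112 GB (Framework's figure for the
# 128GB Desktop), treated here as decimal gigabytes.
GPU_MEMORY_BUDGET_GB = 112.0


def weights_size_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB.

    Billions of parameters times bytes per parameter gives billions of
    bytes, i.e. decimal GB. KV cache and runtime overhead are NOT included.
    """
    return params_billions * bits_per_weight / 8


def fits(params_billions: float, bits_per_weight: int,
         budget_gb: float = GPU_MEMORY_BUDGET_GB) -> bool:
    """Hypothetical check: do the weights alone fit in the GPU budget?"""
    return weights_size_gb(params_billions, bits_per_weight) <= budget_gb


# A hypothetical 70-billion-parameter model at three precisions.
for params, bits in [(70, 16), (70, 8), (70, 4)]:
    size = weights_size_gb(params, bits)
    print(f"{params}B @ {bits}-bit: ~{size:.0f} GB, fits: {fits(params, bits)}")
```

By this estimate, a 70-billion-parameter model at 16-bit precision (about 140 GB) wouldn’t fit, while 8-bit or 4-bit quantized versions (about 70 GB and 35 GB) would, leaving some headroom for the overhead the sketch leaves out.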

That memory would likely be even more useful if it were attached to an Nvidia GPU instead of an AMD model—Nvidia’s hold on the workstation graphics market is at least as tight as its hold on the gaming GPU market, and many apps and tools support Nvidia GPUs and CUDA first/best/only. But it’s still one possible benefit the Framework Desktop might offer, relative to a desktop with a dedicated GPU.

You can’t say it isn’t unique

The Framework Desktop is a bit like a PC tower blended with Apple’s Mac Studio. Credit: Andrew Cunningham

In one way, Framework has done the same thing with the Desktop that it has done with all its laptops: found a niche and built a product to fill it. And with its standard-size components and standard connectors, the Framework Desktop is a clear cut above every Intel gaming NUC or Asus ROG thingamajig that’s ever existed.

I’m always impressed by the creativity, thoughtfulness, and attention to detail that Framework brings to its builds. For the Desktop, this is partially offset by how much I don’t care for most of its cheap plastic-and-acrylic exterior. But it’s still thoughtfully designed on the inside, with as much respect for standards, modularity, and repairability as you can get, once you get past that whole thing where the major functional components are all irrevocably soldered together.

The Framework Desktop is also quiet, cute, and reasonably powerful. You’re paying some extra money and giving up both CPU and GPU speed to get something small. But you won’t run into games or apps that simply refuse to run for performance-related reasons.

It does feel like a weird product for Framework to build, though. It’s not that I can’t imagine the kind of person a Framework Desktop might be good for—it’s that I think Framework has built its business targeting a PC enthusiast demographic that will mainly be turned off by the desktop’s lack of upgradeability.

The Framework Desktop is an interesting option for people who want or need a compact and easy-to-build workstation or gaming PC, or a Windows-or-Linux version of Apple’s Mac Studio. It will fit comfortably under a TV or in a cramped office. It’s too bad that it isn’t easier to upgrade. But for people who would prefer the benefits of a socketed CPU or a swappable graphics card, I’m sure the people at Framework would be the first ones to point you in the direction of a good old desktop PC.

The good

- Solid all-round performance and good power efficiency.

- The Radeon 8060S is exceptionally good for an integrated GPU, delivering much better performance than you can get in something like the Ryzen 7 8700G.

- Large pool of RAM available to the GPU could be good for machine learning and AI workloads.

- Thoughtfully designed interior that’s easy to put together.

- Uses standard-shaped motherboard, fan headers, power supply, and connectors, unlike lots of pre-built mini PCs.

- Front tiles are fun.

The bad

- Power limits keep the 16-core CPU from running as fast as the socketed desktop version.

- A $300-to-$400 dedicated GPU will still beat the Radeon 8060S.

- Cheap-looking exterior plastic panels.

The ugly

- Soldered RAM in a desktop system.

Andrew is a Senior Technology Reporter at Ars Technica, with a focus on consumer tech including computer hardware and in-depth reviews of operating systems like Windows and macOS. Andrew lives in Philadelphia and co-hosts a weekly book podcast called Overdue.
