Runway claims its GWM-1 “world models” can stay coherent for minutes at a time

Even using the word “general” has an air of aspiration to it. You would expect a general world model to be, well, one model—but in this case, we’re looking at three distinct, post-trained models. That caveats the general-ness a bit, but Runway says that it’s “working toward unifying many different domains and action spaces under a single base world model.”

A competitive field

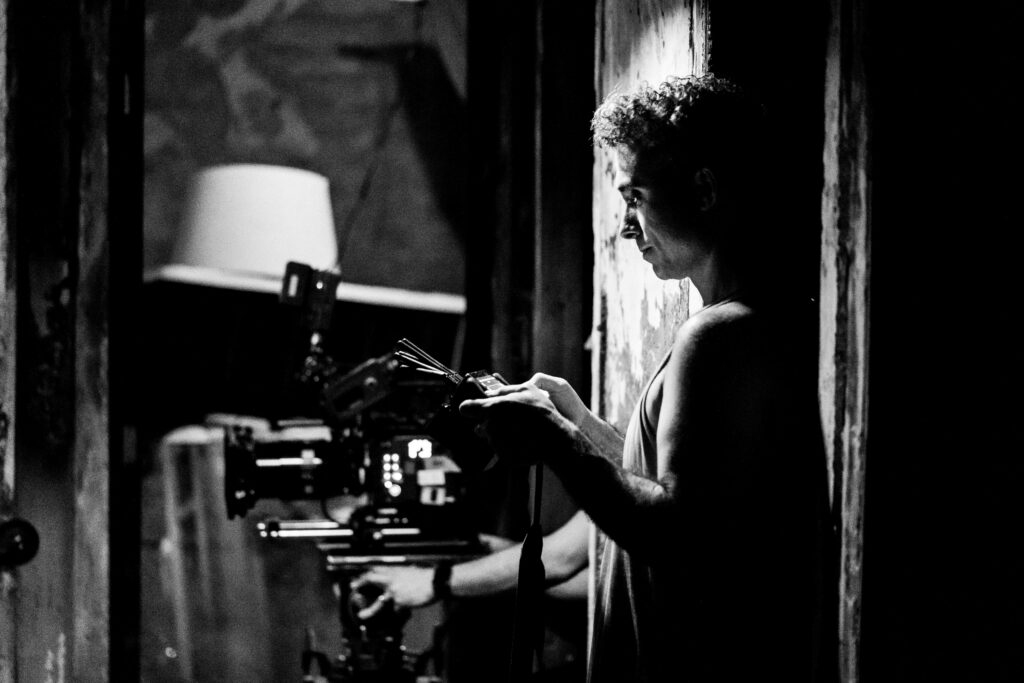

And that brings us to another important consideration: With GWM-1, Runway is entering a crowded gold-rush space where its differentiators and advantages are less clear than they were for video. With video, Runway has been able to make major inroads in film/television, advertising, and other industries because its founders are perceived as being more rooted in those creative industries than most competitors, and they’ve designed tools with those industries in mind.

There are indeed hypothetical applications of world models in film, television, advertising, and game development—but it was apparent from Runway’s livestream that the company is also looking at applications in robotics as well as physics and life sciences research, where competitors are already well-established and where we’ve seen increasing investment in recent months.

Many of those competitors are big tech companies with massive resource advantages over Runway. Runway was one of the first to market with a sellable product, and its aggressive efforts to court industry professionals directly have so far allowed it to overcome those advantages in video generation. It remains to be seen how things will play out with world models, where it doesn’t enjoy either advantage any more than the other entrants.

Regardless, the GWM-1 advancements are impressive—especially if Runway’s claims about consistency and coherence over longer stretches of time are true.

Runway also used its livestream to announce new Gen 4.5 video generation capabilities, including native audio, audio editing, and multi-shot video editing. Further, it announced a deal with CoreWeave, a cloud computing company with an AI focus. The deal will see Runway utilizing Nvidia’s GB300 NVL72 racks on CoreWeave’s cloud infrastructure for future training and inference.
