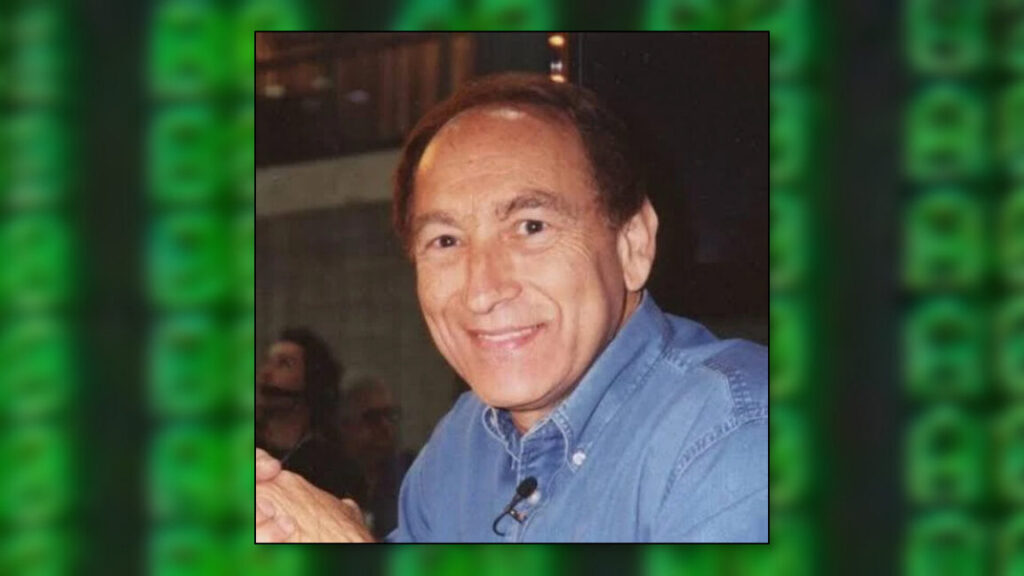

Stewart Cheifet, PBS host who chronicled the PC revolution, dies at 87

Stewart Cheifet, the television producer and host who documented the personal computer revolution for nearly two decades on PBS, died on December 28, 2025, in Philadelphia at age 87. Cheifet created and hosted Computer Chronicles, which ran on the public television network from 1983 to 2002 and helped demystify personal computing for millions of American viewers.

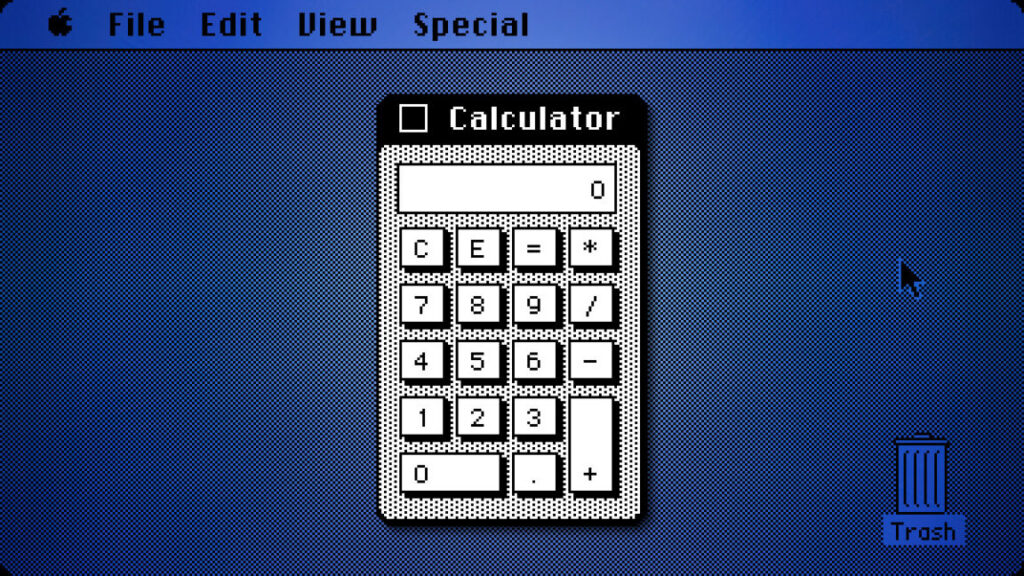

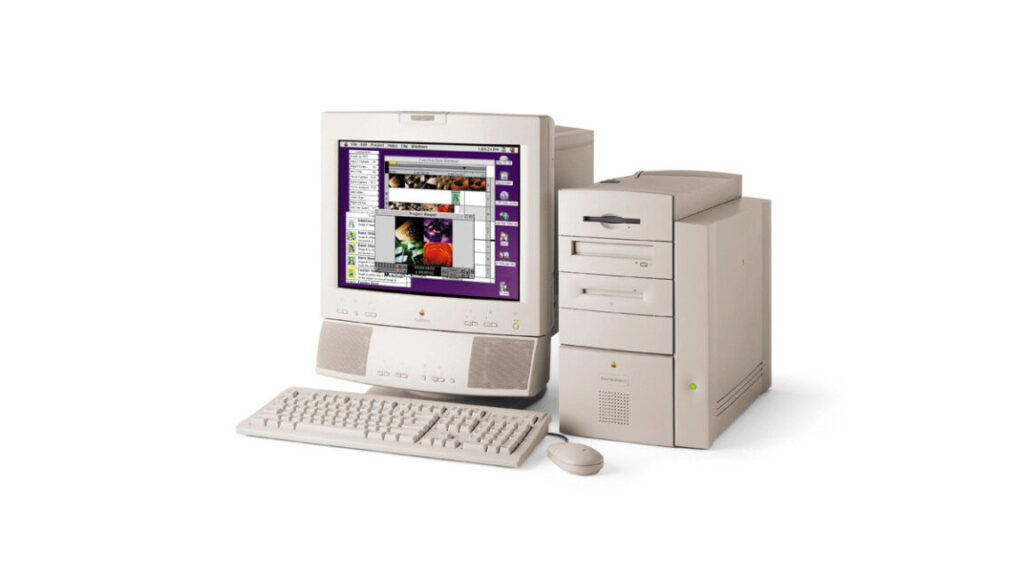

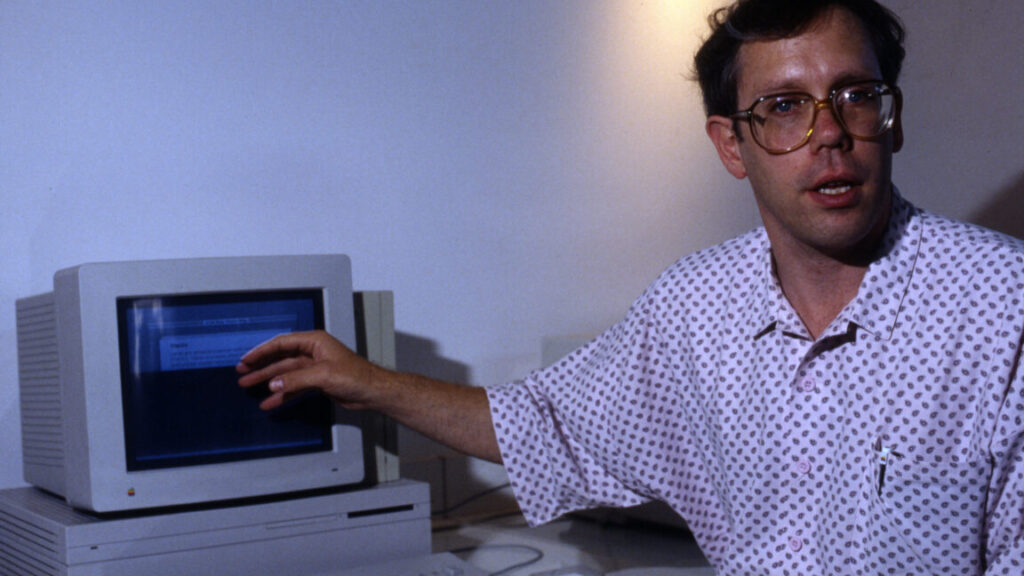

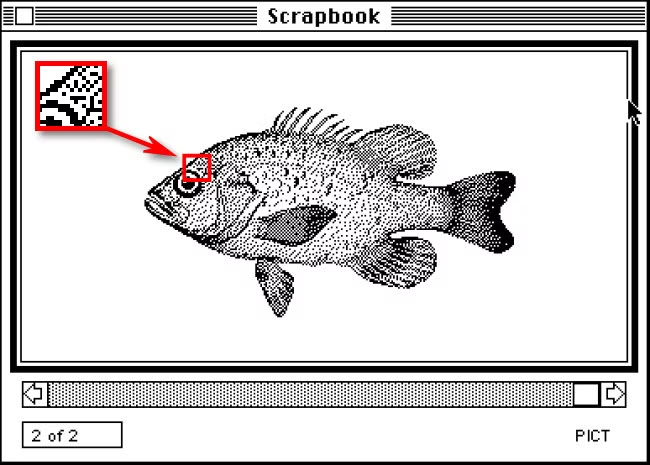

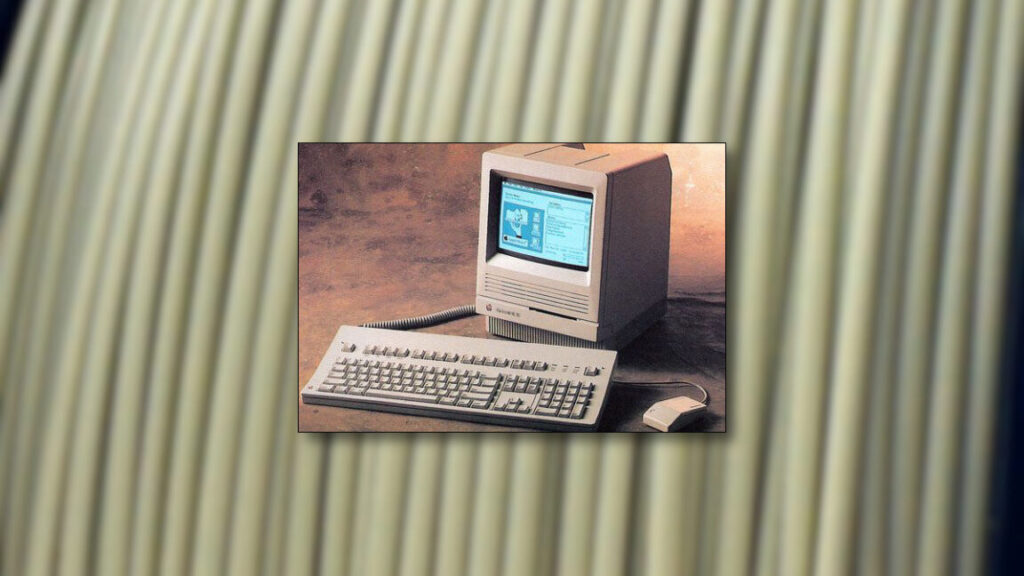

Computer Chronicles covered everything from the earliest IBM PCs and Apple Macintosh models to the rise of the World Wide Web and the dot-com boom. Cheifet conducted interviews with computing industry figures, including Bill Gates, Steve Jobs, and Jeff Bezos, while demonstrating hardware and software for a general audience.

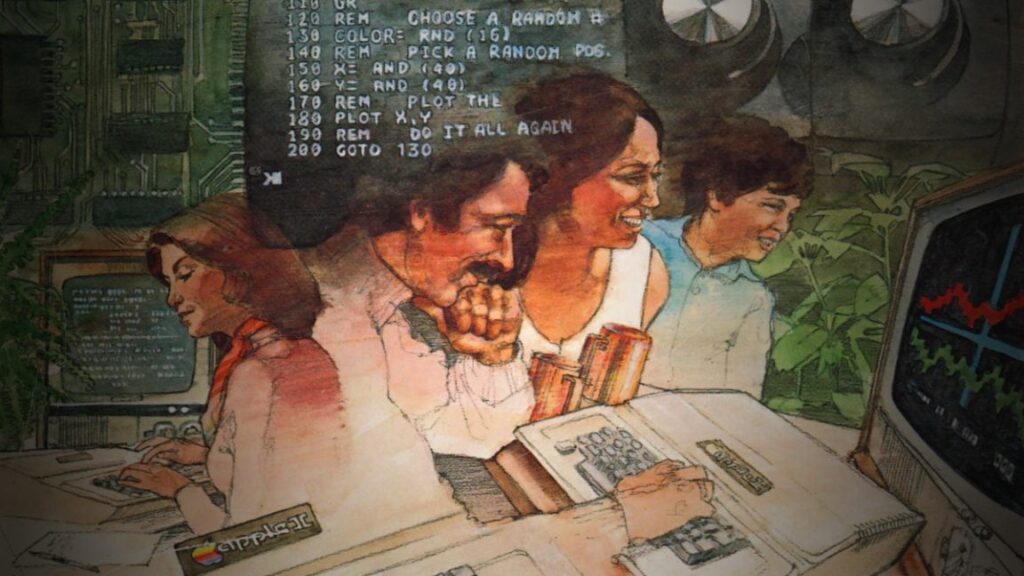

From 1983 to 1990, he co-hosted the show with Gary Kildall, the Digital Research founder who created the popular CP/M operating system that predated MS-DOS on early personal computer systems.

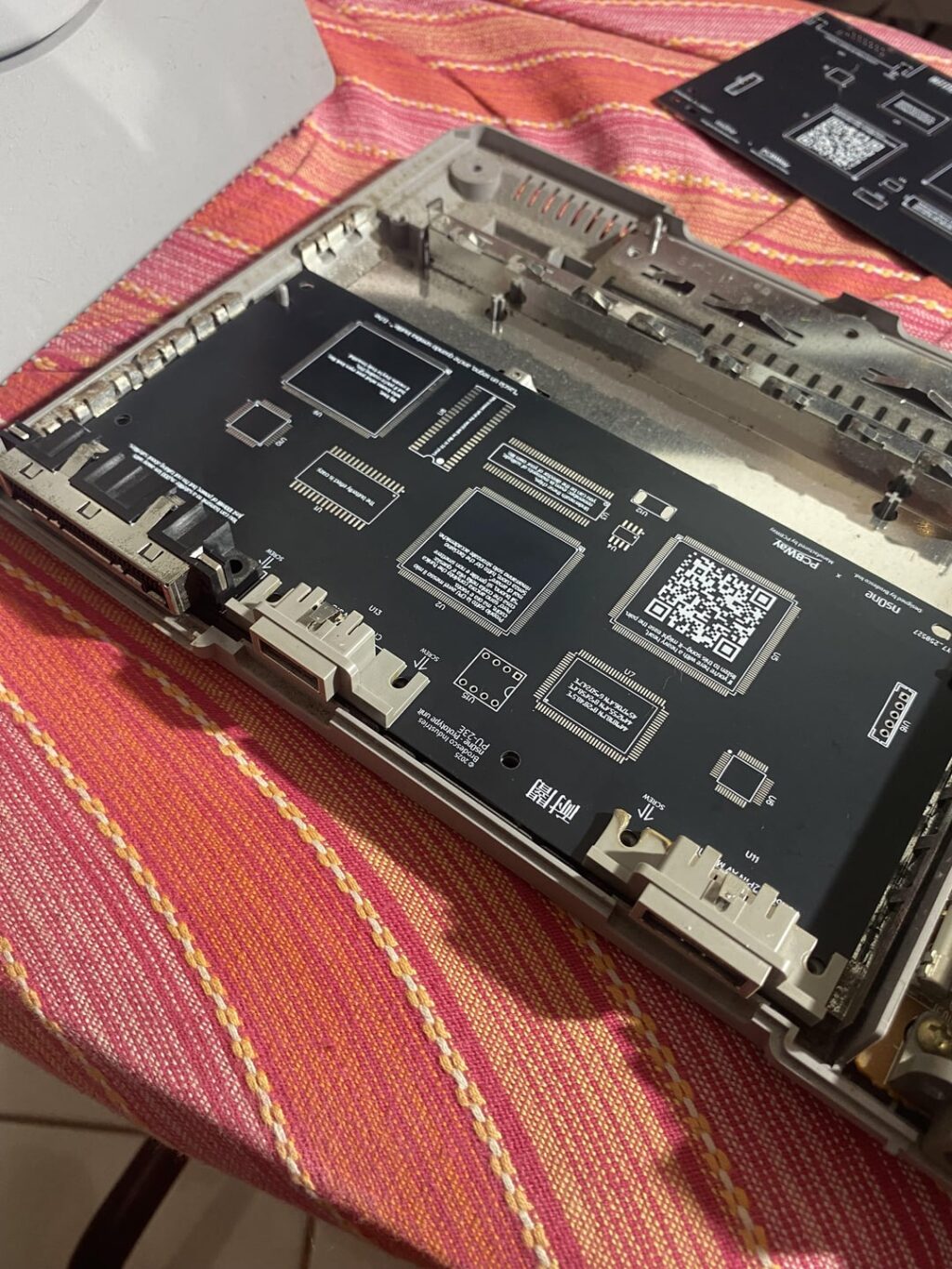

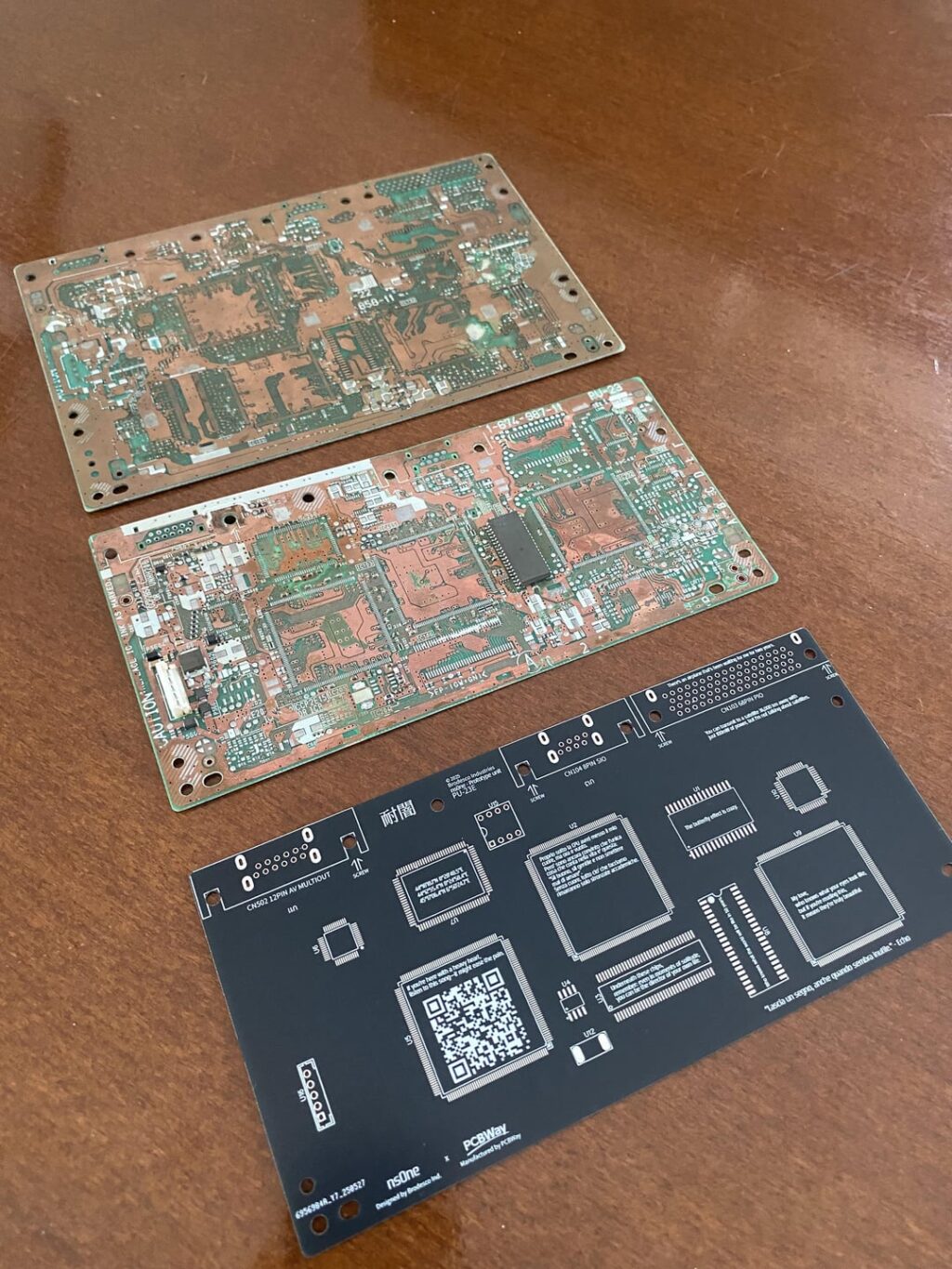

Computer Chronicles – 01×25 – Artificial Intelligence (1984)

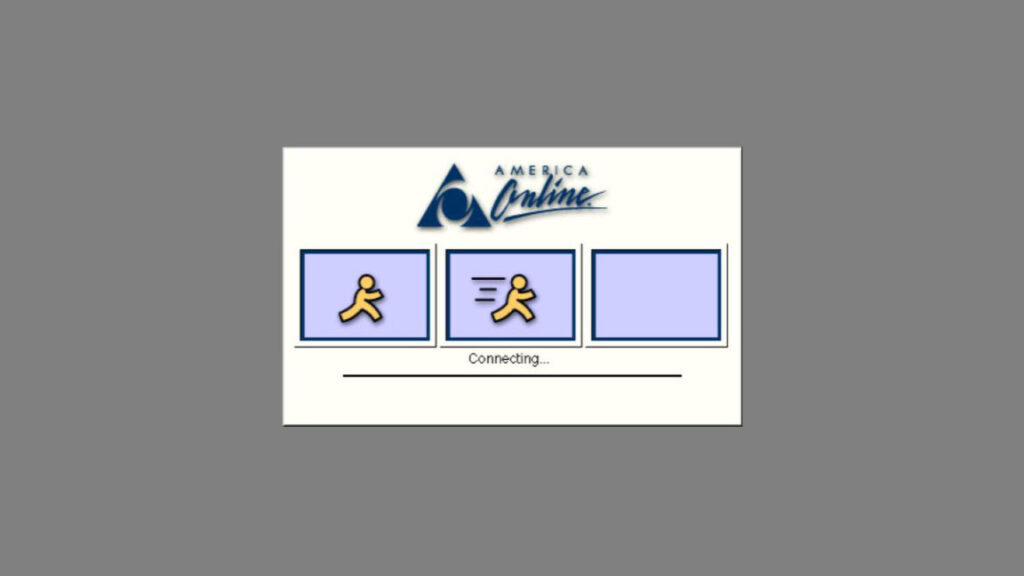

From 1996 to 2002, Cheifet also produced and hosted Net Cafe, a companion series that documented the early Internet boom and introduced viewers to then-new websites like Yahoo, Google, and eBay.

A legacy worth preserving

Computer Chronicles began as a local weekly series in 1981 when Cheifet served as station manager at KCSM-TV, the College of San Mateo’s public television station. It became a national PBS series in 1983 and ran continuously until 2002, producing 433 episodes across 19 seasons. The format remained consistent throughout: product demonstrations, guest interviews, and a closing news segment called “Random Access” that covered industry developments.

After the show’s run ended and Cheifet left television production, he worked to preserve its legacy as a consultant for the Internet Archive, helping to make episodes of Computer Chronicles and Net Cafe publicly available.
