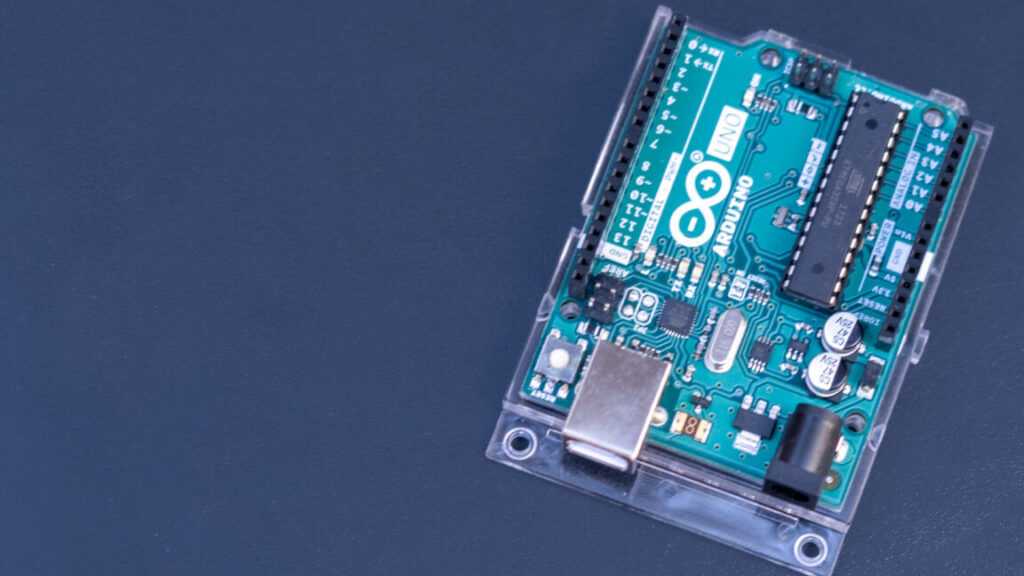

Arduino’s new terms of service worries hobbyists ahead of Qualcomm acquisition

“The Qualcomm acquisition doesn’t modify how user data is handled or how we apply our open-source principles,” the Arduino blog says.

Arduino’s blog didn’t discuss the new terms covering patents, which state:

> User will use the Site and the Platform in accordance with these Terms and for the sole purposes of correctly using the Services. Specifically, User undertakes not to: … use the Platform, Site, or Services to identify or provide evidence to support any potential patent infringement claim against Arduino, its Affiliates, or any of Arduino’s or Arduino’s Affiliates’ suppliers and/or direct or indirect customers.

“No open-source company puts language in their ToS banning users from identifying potential patent issues. Why was this added, and who requested it?” Fried and Torrone said.

Arduino’s new terms keep language about user-generated content that is similar to what its ToS has contained for years. Under the current terms, users grant Arduino a:

> non-exclusive, royalty free, transferable, sub-licensable, perpetual, irrevocable, to the maximum extent allowed by applicable law … right to use the Content published and/or updated on the Platform as well as to distribute, reproduce, modify, adapt, translate, publish and make publicly visible all material, including software, libraries, text contents, images, videos, comments, text, audio, software, libraries, or other data (collectively, “Content”) that User publishes, uploads, or otherwise makes available to Arduino throughout the world using any means and for any purpose, including the use of any username or nickname specified in relation to the Content.

“The new language is still broad enough to republish, monetize, and route user content into any future Qualcomm pipeline forever,” Torrone told Ars. He thinks Arduino’s new terms should have clarified Arduino’s intent, narrowed the term’s scope, or explained “why this must be irrevocable and transferable at a corporate level.”

In its blog, Arduino said that the new ToS “clarifies that the content you choose to publish on the Arduino platform remains yours and can be used to enable features you’ve requested, such as cloud services and collaboration tools.”

As Qualcomm works toward completing its Arduino acquisition, there appears to be more work ahead for the smartphone processor and modem vendor to convince makers that Arduino’s open source and privacy principles will be upheld. While the Arduino IDE and its source code will stay on GitHub per the AGPL-3.0 Open-Source License, some users remain worried about Arduino’s future under Qualcomm.
