DOJ subpoenas Nvidia in deepening AI antitrust probe, report says

The Department of Justice is reportedly deepening its probe into Nvidia. Officials have moved on from merely questioning competitors to subpoenaing Nvidia and other tech companies for evidence that could substantiate allegations that Nvidia is abusing its “dominant position in AI computing,” Bloomberg reported.

When news of the DOJ’s probe into the trillion-dollar company first broke in June, Fast Company reported that scrutiny was intensifying merely because Nvidia was estimated to control “as much as 90 percent of the market for chips” capable of powering AI models. Experts told Fast Company that the DOJ probe might even be good for Nvidia’s business, noting that the market barely moved when the probe was first announced.

But the market’s confidence seemed to be shaken a little more on Tuesday, when Nvidia lost a “record-setting $279 billion” in market value following Bloomberg’s report. Nvidia’s losses became “the biggest single-day market-cap decline on record,” TheStreet reported.

People close to the DOJ’s investigation told Bloomberg that the DOJ’s “legally binding requests” require competitors “to provide information” on Nvidia’s suspected anticompetitive behaviors as a “dominant provider of AI processors.”

One concern is that Nvidia may be giving “preferential supply and pricing to customers who use its technology exclusively or buy its complete systems,” sources told Bloomberg. The DOJ is also reportedly probing Nvidia’s acquisition of RunAI—suspecting the deal may lock RunAI customers into using Nvidia chips.

Bloomberg’s report builds on a report last month from The Information, which said that the DOJ had questioned Advanced Micro Devices Inc. (AMD) and other Nvidia rivals, as well as third parties who could shed light on whether Nvidia potentially abused its market dominance in AI chips to pressure customers into buying more products.

According to Bloomberg’s sources, the DOJ is worried that “Nvidia is making it harder to switch to other suppliers and penalizes buyers that don’t exclusively use its artificial intelligence chips.”

In a statement to Bloomberg, Nvidia insisted that “Nvidia wins on merit, as reflected in our benchmark results and value to customers, who can choose whatever solution is best for them.” Additionally, Bloomberg noted that following a chip shortage in 2022, Nvidia CEO Jensen Huang has said that his company strives to prevent stockpiling of Nvidia’s coveted AI chips by prioritizing customers “who can make use of his products in ready-to-go data centers.”

Potential threats to Nvidia’s dominance

Despite the slump in shares, Nvidia’s market dominance seems unlikely to wane any time soon after its stock more than doubled this year. In an SEC filing this year, Nvidia bragged that its “accelerated computing ecosystem is bringing AI to every enterprise” with an “ecosystem” spanning “nearly 5 million developers and 40,000 companies.” Nvidia specifically highlighted that “more than 1,600 generative AI companies are building on Nvidia,” and according to Bloomberg, Nvidia will close out 2024 with more profits than the total sales of its closest competitor, AMD.

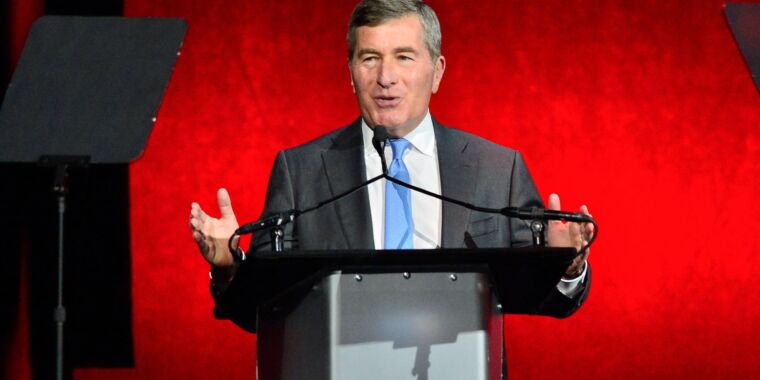

After the DOJ’s most recent big win, which successfully proved that Google has a monopoly on search, the DOJ appears intent on getting ahead of any tech companies’ ambitions to seize monopoly power and essentially become the Google of the AI industry. In June, DOJ antitrust chief Jonathan Kanter confirmed to the Financial Times that the DOJ is examining “monopoly choke points and the competitive landscape” in AI beyond just scrutinizing Nvidia.

According to Kanter, the DOJ is scrutinizing all aspects of the AI industry: “everything from computing power and the data used to train large language models, to cloud service providers, engineering talent and access to essential hardware such as graphics processing unit chips.” But in particular, the DOJ appears concerned that GPUs like Nvidia’s advanced AI chips remain a “scarce resource.” Kanter told the Financial Times that an “intervention” in “real time” to block a potential monopoly could be “the most meaningful intervention” and the least “invasive” as the AI industry grows.
