Trump admin pressured Facebook into removing ICE-tracking group

Attorney General Pam Bondi today said that Facebook removed an ICE-tracking group after “outreach” from the Department of Justice. “Today following outreach from @thejusticedept, Facebook removed a large group page that was being used to dox and target @ICEgov agents in Chicago,” Bondi wrote in an X post.

Bondi alleged that a “wave of violence against ICE has been driven by online apps and social media campaigns designed to put ICE officers at risk just for doing their jobs.” She added that the DOJ “will continue engaging tech companies to eliminate platforms where radicals can incite imminent violence against federal law enforcement.”

When contacted by Ars, Facebook owner Meta said the group “was removed for violating our policies against coordinated harm.” Meta didn’t describe any specific violation but directed us to a policy against “coordinating harm and promoting crime,” which includes a prohibition against “outing the undercover status of law enforcement, military, or security personnel.”

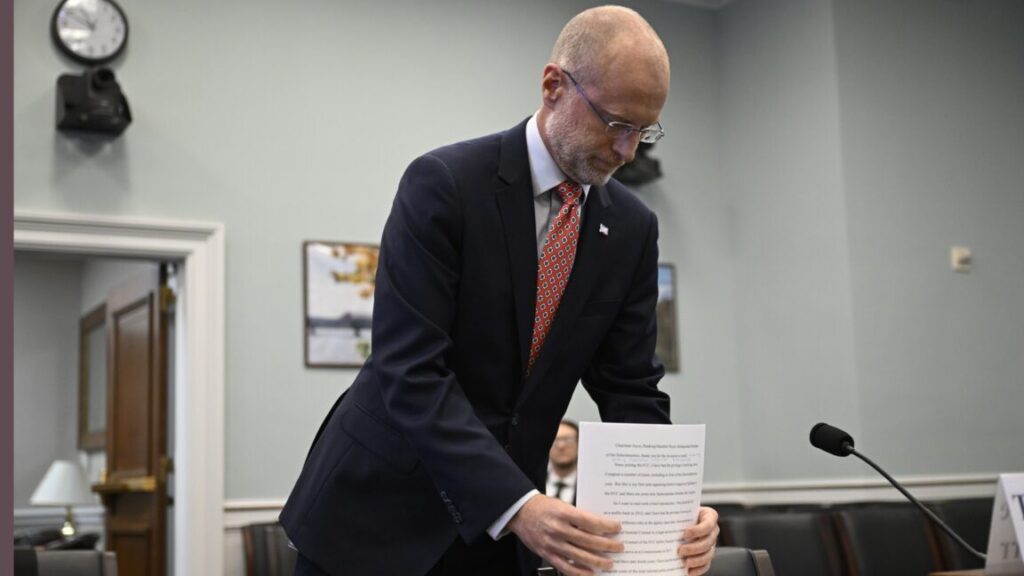

The statement was sent by Francis Brennan, a former Trump campaign advisor who was hired by Meta in January.

The White House recently claimed there has been “a more than 1,000 percent increase in attacks on U.S. Immigration and Customs Enforcement (ICE) officers since January 21, 2025, compared to the same period last year.” Government officials haven’t offered proof of this claim, according to an NPR report that said “there is no public evidence that [attacks] have spiked as dramatically as the federal government has claimed.”

The Justice Department contacted Meta after Laura Loomer sought action against the “ICE Sighting-Chicagoland” group that had over 84,000 members on Facebook. “Fantastic news. DOJ source tells me they have seen my report and they have contacted Facebook and their executives at META to tell them they need to remove these ICE tracking pages from the platform,” Loomer wrote yesterday.

The ICE Sighting-Chicagoland group “has been increasingly used over the last five weeks of ‘Operation Midway Blitz,’ President Donald Trump’s intense deportation campaign, to warn neighbors that federal agents are near schools, grocery stores and other community staples so they can take steps to protect themselves,” the Chicago Sun-Times wrote today.

Trump slammed Biden for social media “censorship”

Trump and Republicans repeatedly criticized the Biden administration for pressuring social media companies into removing content. In a day-one executive order declaring an end to “federal censorship,” Trump said “the previous administration trampled free speech rights by censoring Americans’ speech on online platforms, often by exerting substantial coercive pressure on third parties, such as social media companies, to moderate, deplatform, or otherwise suppress speech that the Federal Government did not approve.”
