Ice discs slingshot across a metal surface all on their own

VA Tech experiment was inspired by Death Valley’s mysterious “sailing stones” at Racetrack Playa.

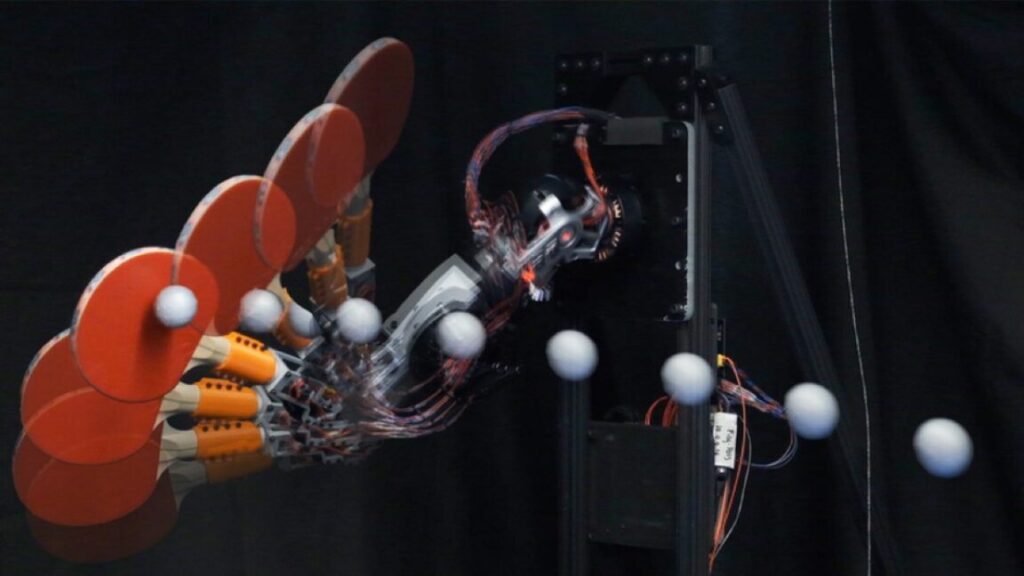

Graduate student Jack Tapocik sets up ice on an engineered surface in the VA Tech lab of Jonathan Boreyko. Credit: Alex Parrish/Virginia Tech

Scientists have figured out how to make frozen discs of ice self-propel across a patterned metal surface, according to a new paper published in the journal ACS Applied Materials and Interfaces. It’s the latest breakthrough to come out of the Virginia Tech lab of mechanical engineer Jonathan Boreyko.

A few years ago, Boreyko’s lab experimentally demonstrated a three-phase Leidenfrost effect in water vapor, liquid water, and ice. The Leidenfrost effect is what happens when you dash a few drops of water onto a very hot, sizzling skillet. The drops levitate, sliding around the pan with wild abandon. If the surface is at least 400° Fahrenheit (well above the boiling point of water), cushions of water vapor, or steam, form underneath them, keeping them levitated. The effect also works with other liquids, including oils and alcohol, but the temperature at which it manifests will be different.

Boreyko’s lab discovered that this effect can also be achieved in ice simply by placing a thin, flat disc of ice on a heated aluminum surface. When the plate was heated above 150° C (302° F), the ice did not levitate on a cushion of vapor the way liquid water does. Instead, there was a significantly higher threshold of 550° C (1,022° F) for levitation of the ice to occur. Unless that critical threshold is reached, the meltwater below the ice just keeps boiling in direct contact with the surface. Cross that critical point and you will get a three-phase Leidenfrost effect.

The key is a temperature differential in the meltwater just beneath the ice disc. The bottom of the meltwater is boiling, but the top of the meltwater sticks to the ice. It takes a lot of energy to maintain such an extreme difference in temperature, and doing so consumes most of the heat from the aluminum surface, which is why it’s harder to achieve levitation of an ice disc. Ice can suppress the Leidenfrost effect even at very high temperatures (up to 550° C), which means that using ice particles instead of liquid droplets would be better for many applications involving spray quenching, such as rapid cooling in nuclear power plants, firefighting, or rapid heat quenching when shaping metals.

This time around, Boreyko et al. have turned their attention to what the authors term “a more viscous analog” to a Leidenfrost ratchet, a form of droplet self-propulsion. “What’s different here is we’re no longer trying to levitate or even boil,” Boreyko told Ars. “Now we’re asking a more straightforward question: Is there a way to make ice move across the surface directionally as it is melting? Regular melting at room temperature. We’re not boiling, we’re not levitating, we’re not Leidenfrosting. We just want to know, can we make ice shoot across the surface if we design a surface in the right way?”

Mysterious moving boulders

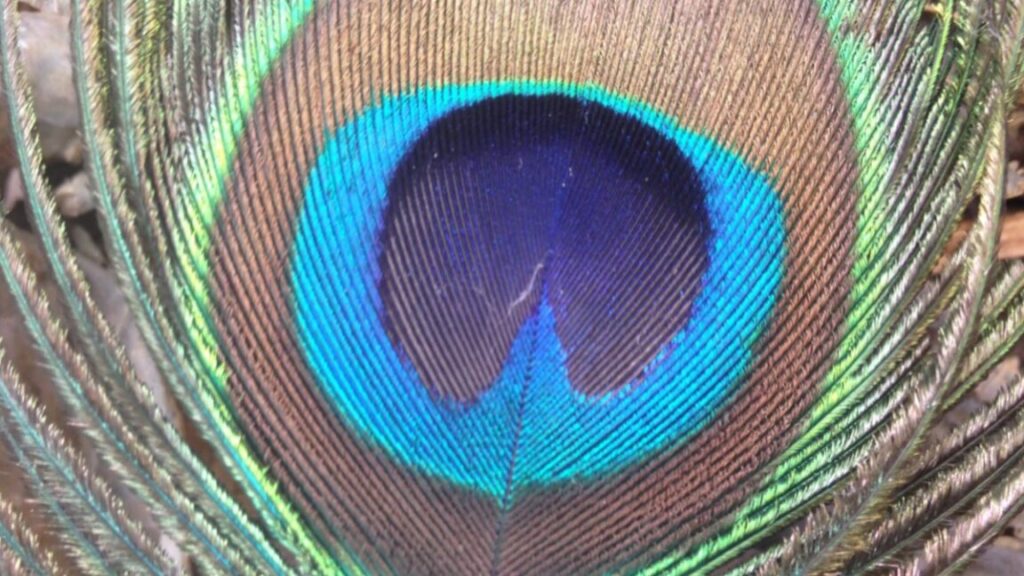

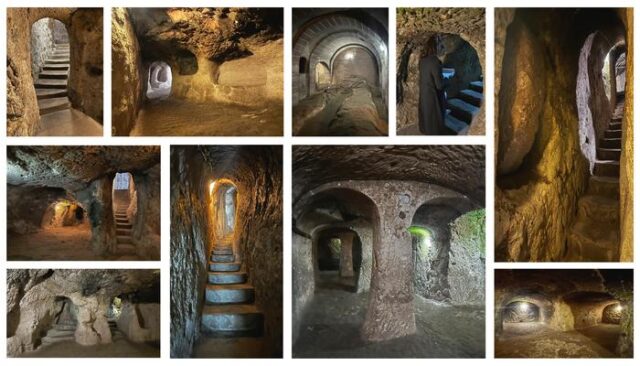

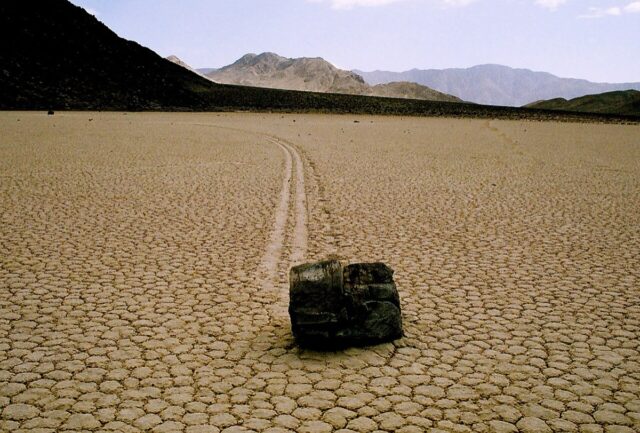

The researchers were inspired by Death Valley’s famous “sailing stones” on Racetrack Playa. Watermelon-sized boulders are strewn throughout the dry lake bed, and they leave trails in the cracked earth as they slowly migrate a couple of hundred meters each season. Scientists didn’t figure out what was happening until 2014. Although co-author Ralph Lorenz (Johns Hopkins University) admitted he thought theirs would be “the most boring experiment ever” when they first set it up in 2011, two years later, the boulders did indeed begin to move while the playa was covered with a pond of water a few inches deep.

So Lorenz and his co-authors were finally able to identify the mechanism. The ground is too hard to absorb rainfall, and that water freezes when the temperature drops. When temperatures rise above freezing again, the ice starts to melt, creating ice rafts floating on the meltwater. And when the winds are sufficiently strong, they cause the ice rafts to drift along the surface.

A sailing stone at Death Valley’s Racetrack Playa. Credit: Tahoenathan/CC BY-SA 3.0

“Nature had to have wind blowing to kind of push the boulder and the ice along the meltwater that was beneath the ice,” said Boreyko. “We thought, what if we could have a similar idea of melting ice moving directionally but use an engineered structure to make it happen spontaneously so we don’t have to have energy or wind or anything active to make it work?”

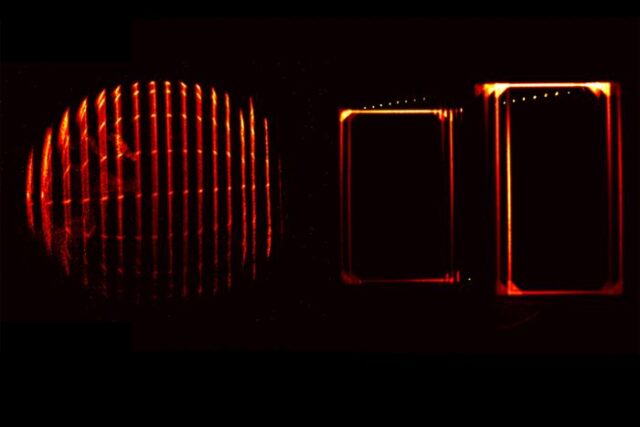

The team made their ice discs by pouring distilled water into thermally insulated polycarbonate Petri dishes. This resulted in bottom-up freezing, which minimizes air bubbles in the ice. They then milled asymmetric grooves into uncoated aluminum plates in a herringbone pattern, essentially creating arrowhead-shaped channels, and then bonded them to hot plates heated to the desired temperature. Each ice disc was placed on the plate with rubber tongs, and the experiments were filmed from various angles to fully capture the disc behavior.

The herringbone pattern is the key. “The directionality is what really pushes the water,” Jack Tapocik, a graduate student in Boreyko’s lab, told Ars. “The herringbone doesn’t allow for water to flow backward, the water has to go forward, and that basically pushes the water and the ice together forward. We don’t have a treated surface, so the water just sits on top and the ice all moves as one unit.”

Boreyko draws an analogy to tubing on a river, except it’s the directional channels rather than gravity causing the flow. “You can see [in the video below] how it just follows the meltwater,” he said. “This is your classic entrainment mechanism where if the water flows that way and you’re floating on the water, you’re going to go the same way, too. It’s basically the same idea as what makes a Leidenfrost droplet also move one way: It has a vapor flow underneath. The only difference is that was a liquid drifting on a vapor flow, whereas now we have a solid drifting on a liquid flow. The densities and viscosities are different, but the idea is the same: You have a more dense phase that is drifting on the top of a lighter phase that is flowing directionally.”

Jonathan Boreyko/Virginia Tech

Next, the team repeated the experiment, this time coating the aluminum herringbone surface with water-repellent spray, hoping to speed up the disc propulsion. Instead, they found that the disc ended up sticking to the treated surface for a while before suddenly slingshotting across the metal plate.

“It’s a totally different concept with totally different physics behind it, and it’s so much cooler,” said Tapocik. “As the ice is melting on these coated surfaces, the water just doesn’t want to sit within the channels. It wants to sit on top because of the [hydrophobic] coating we have on there. The ice is directly sticking now to the surface, unlike before when it was floating. You get this elongated puddle in front. The easiest place [for the ice] to be is in the center of this giant, long puddle. So it re-centers, and that’s what moves it forward like a slingshot.”

Essentially, the water keeps expanding asymmetrically, and that difference in shape gives rise to a mismatch in surface tension because the amount of force that surface tension exerts on a body depends on curvature. The flatter puddle shape in front has less curvature than the smaller shape in back. As the video below shows, when the mismatch in surface tension becomes sufficiently strong, “It just rips the ice off the surface and flings it along,” said Boreyko. “In the future, we could try putting little things like magnets on top of the ice. We could probably put a boulder on it if we wanted to. The Death Valley effect would work with or without a boulder because it’s the floating ice raft that moves with the wind.”
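The curvature argument can be made concrete with the Young-Laplace relation, which gives the pressure jump across a curved liquid surface as surface tension multiplied by curvature. The sketch below is only illustrative: the radii are hypothetical values chosen for the example rather than measurements from the paper, the surface tension is a textbook figure for water near 0° C, and the function name `laplace_pressure` is invented here.

```python
import math

# Surface tension of water near 0 °C, in N/m (textbook value, about 0.0756).
GAMMA_WATER = 0.0756


def laplace_pressure(r1_m, r2_m=math.inf, gamma=GAMMA_WATER):
    """Young-Laplace pressure jump (Pa) across a surface with principal radii r1, r2.

    Leaving r2 at infinity models a surface curved in one direction only.
    """
    return gamma * (1.0 / r1_m + 1.0 / r2_m)


# Hypothetical radii of curvature, for illustration only.
front_radius_m = 5e-3   # flatter, elongated puddle edge ahead of the disc
back_radius_m = 1e-3    # tighter meniscus behind the disc

front_pa = laplace_pressure(front_radius_m)
back_pa = laplace_pressure(back_radius_m)
mismatch_pa = back_pa - front_pa

print(f"front: {front_pa:.1f} Pa, back: {back_pa:.1f} Pa, mismatch: {mismatch_pa:.1f} Pa")
```

With these made-up numbers, the tighter meniscus at the back carries roughly five times the pressure jump of the flatter front. How that imbalance translates into a net force on the disc depends on the contact geometry, which this sketch does not model.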

Jonathan Boreyko/Virginia Tech

One potential application is energy harvesting. For example, one could pattern the metal surface in a circle rather than a straight line so the melting ice disc would continually rotate. Put magnets on the disc, and they would also rotate and generate power. One might even attach a turbine or gear to the rotating disc.

The effect might also provide a more energy-efficient means of defrosting, a longstanding research interest for Boreyko. “If you had a herringbone surface with a frosting problem, you could melt the frost, even partially, and use these directional flows to slingshot the ice off the surface,” he said. “That’s both faster and uses less energy than having to entirely melt the ice into pure water. We’re looking at potentially over a tenfold reduction in heating requirements if you only have to partially melt the ice.”
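The tenfold figure is easy to sanity-check with latent heat alone. The sketch below assumes the frost is already at 0° C, ignores sensible heating and heat losses, and uses the textbook latent heat of fusion of ice (about 334 kJ/kg). The frost mass and melt fraction are hypothetical inputs, not values from the paper, and `melt_energy_joules` is a name invented for this example.

```python
# Latent heat of fusion of water ice, in J/kg (textbook value).
LATENT_HEAT_FUSION = 334_000.0


def melt_energy_joules(mass_kg, melt_fraction=1.0):
    """Energy needed to melt a given fraction of ice that is already at 0 °C."""
    if not 0.0 <= melt_fraction <= 1.0:
        raise ValueError("melt_fraction must be between 0 and 1")
    return mass_kg * melt_fraction * LATENT_HEAT_FUSION


# Hypothetical example: 10 g of frost, only 10% of which melts before it slides off.
frost_mass_kg = 0.010
full_melt_j = melt_energy_joules(frost_mass_kg)
partial_melt_j = melt_energy_joules(frost_mass_kg, melt_fraction=0.10)

print(f"full melt: {full_melt_j:.0f} J, partial melt: {partial_melt_j:.0f} J")
print(f"reduction factor: {full_melt_j / partial_melt_j:.1f}x")
```

Under these assumptions, a tenfold saving corresponds to melting about a tenth of the frost, so "over tenfold" would mean melting even less than that before the ice is flung clear.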

That said, “Most practical applications don’t start from knowing the application beforehand,” said Boreyko. “It starts from ‘Oh, that’s a really cool phenomenon. What’s going on here?’ It’s only downstream from that it turns out you can use this for better defrosting of heat exchangers for heat pumps. I just think it’s fun to say that we can make a little melting disk of ice very suddenly slingshot across the table. It’s a neat way to grab your attention and think more about melting and ice and how all this stuff works.”

DOI: ACS Applied Materials and Interfaces, 2025. 10.1021/acsami.5c08993.

Jennifer is a senior writer at Ars Technica with a particular focus on where science meets culture, covering everything from physics and related interdisciplinary topics to her favorite films and TV series. Jennifer lives in Baltimore with her spouse, physicist Sean M. Carroll, and their two cats, Ariel and Caliban.
