This week, Altman offers a post called Reflections, and he has an interview in Bloomberg. There are a bunch of good and interesting answers in the interview about past events that I won’t mention or will have to condense a lot here, such as his going over his calendar and all the meetings he constantly has, so consider reading the whole thing.

- The Battle of the Board.
- Altman Lashes Out.
- Inconsistently Candid.
- On Various People Leaving OpenAI.
- The Pitch.
- Great Expectations.
- Accusations of Fake News.
- OpenAI’s Vision Would Pose an Existential Risk To Humanity.

Here is what he says about the Battle of the Board in Reflections:

Sam Altman: A little over a year ago, on one particular Friday, the main thing that had gone wrong that day was that I got fired by surprise on a video call, and then right after we hung up the board published a blog post about it. I was in a hotel room in Las Vegas. It felt, to a degree that is almost impossible to explain, like a dream gone wrong.

Getting fired in public with no warning kicked off a really crazy few hours, and a pretty crazy few days. The “fog of war” was the strangest part. None of us were able to get satisfactory answers about what had happened, or why.

The whole event was, in my opinion, a big failure of governance by well-meaning people, myself included. Looking back, I certainly wish I had done things differently, and I’d like to believe I’m a better, more thoughtful leader today than I was a year ago.

I also learned the importance of a board with diverse viewpoints and broad experience in managing a complex set of challenges. Good governance requires a lot of trust and credibility. I appreciate the way so many people worked together to build a stronger system of governance for OpenAI that enables us to pursue our mission of ensuring that AGI benefits all of humanity.

My biggest takeaway is how much I have to be thankful for and how many people I owe gratitude towards: to everyone who works at OpenAI and has chosen to spend their time and effort going after this dream, to friends who helped us get through the crisis moments, to our partners and customers who supported us and entrusted us to enable their success, and to the people in my life who showed me how much they cared.

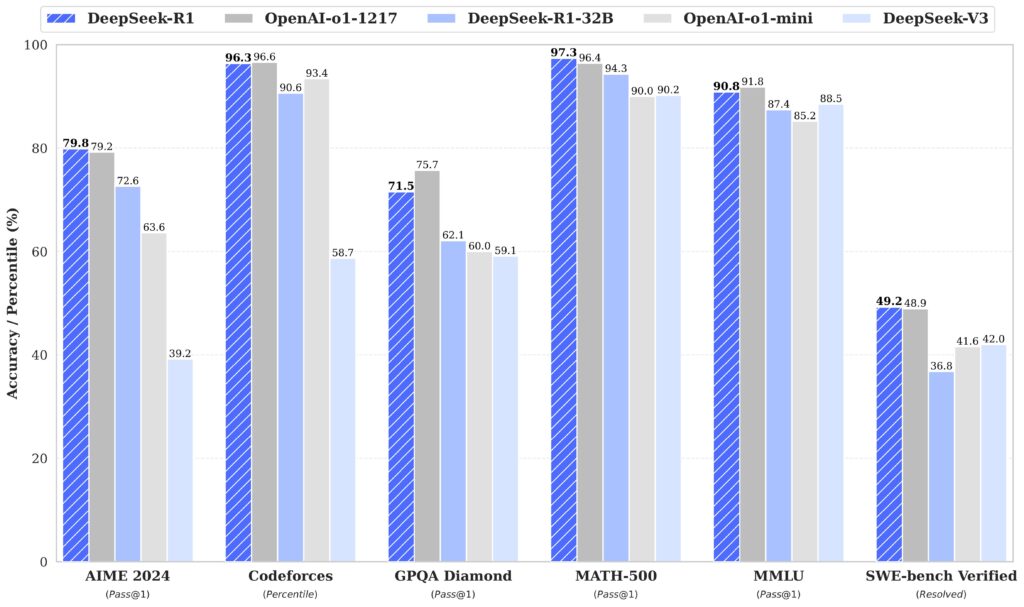

We all got back to the work in a more cohesive and positive way and I’m very proud of our focus since then. We have done what is easily some of our best research ever. We grew from about 100 million weekly active users to more than 300 million. Most of all, we have continued to put technology out into the world that people genuinely seem to love and that solves real problems.

This is about as good a statement as one could expect Altman to make. I strongly disagree that this resulted in a stronger system of governance for OpenAI. And I think he has a much better idea of what happened than he is letting on, and there are several points where ‘I see what you did there.’ But mostly I do appreciate what this statement aims to do.

From his interview, we also get this excellent statement:

Sam Altman: I think the previous board was genuine in their level of conviction and concern about AGI going wrong. There’s a thing that one of those board members said to the team here during that weekend that people kind of make fun of [Helen Toner] for, which is it could be consistent with the mission of the nonprofit board to destroy the company.

And I view that—that’s what courage of convictions actually looks like. I think she meant that genuinely.

And although I totally disagree with all specific conclusions and actions, I respect conviction like that, and I think the old board was acting out of misplaced but genuine conviction in what they believed was right.

And maybe also that, like, AGI was right around the corner and we weren’t being responsible with it. So I can hold respect for that while totally disagreeing with the details of everything else.

And this, which I can’t argue with:

Sam Altman: Usually when you have these ideas, they don’t quite work, and there were clearly some things about our original conception that didn’t work at all. Structure. All of that.

It is fair to say that ultimately, the structure as a non-profit did not work for Altman.

This also seems like the best place to highlight his excellent response about Elon Musk:

Oh, I think [Elon will] do all sorts of bad s—. I think he’ll continue to sue us and drop lawsuits and make new lawsuits and whatever else. He hasn’t challenged me to a cage match yet, but I don’t think he was that serious about it with Zuck, either, it turned out.

As you pointed out, he says a lot of things, starts them, undoes them, gets sued, sues, gets in fights with the government, gets investigated by the government.

That’s just Elon being Elon.

The question was, will he abuse his political power of being co-president, or whatever he calls himself now, to mess with a business competitor? I don’t think he’ll do that. I genuinely don’t. May turn out to be proven wrong.

So far, so good.

Then we get Altman being less polite.

Sam Altman: Saturday morning, two of the board members called and wanted to talk about me coming back. I was initially just supermad and said no. And then I was like, “OK, fine.” I really care about [OpenAI]. But I was like, “Only if the whole board quits.” I wish I had taken a different tack than that, but at the time it felt like a just thing to ask for.

Then we really disagreed over the board for a while. We were trying to negotiate a new board. They had some ideas I thought were ridiculous. I had some ideas they thought were ridiculous. But I thought we were [generally] agreeing.

And then—when I got the most mad in the whole period—it went on all day Sunday. Saturday into Sunday they kept saying, “It’s almost done. We’re just waiting for legal advice, but board consents are being drafted.” I kept saying, “I’m keeping the company together. You have all the power. Are you sure you’re telling me the truth here?” “Yeah, you’re coming back. You’re coming back.”

And then Sunday night they shock-announce that Emmett Shear was the new CEO. And I was like, “All right, now I’m f—ing really done,” because that was real deception. Monday morning rolls around, all these people threaten to quit, and then they’re like, “OK, we need to reverse course here.”

This is where his statements fail to line up with my understanding of what happened. Altman gave the board repeated in-public drop dead deadlines, including demanding that the entire board resign as he noted above, with very clear public messaging that failure to do this would destroy OpenAI.

Maybe if Altman had quickly turned around and blamed the public actions on his allies acting on their own, I would have believed that, but he isn’t even trying that line out now. He’s pretending that none of that was part of the story.

In response to those ultimatums, facing imminent collapse and unable to meet Altman’s blow-it-all-up deadlines and conditions, the board tapped Emmett Shear as a temporary CEO, who was very willing to facilitate Altman’s return and then stepped aside only days later.

That wasn’t deception, and Altman damn well knows that now, even if he was somehow blinded to what was happening at the time. The board very much still had the intention of bringing Altman back. Altman and his allies responded by threatening to blow up the company within days.

Then the interviewer asks what the board meant by ‘consistently candid.’ He talks about the ChatGPT launch which I mention a bit later on – where I do think he failed to properly inform the board but I think that was more one time of many than a particular problem – and then Altman says, bold is mine:

And I think there’s been an unfair characterization of a number of things like [how I told the board about the ChatGPT launch]. The one thing I’m more aware of is, I had had issues with various board members on what I viewed as conflicts or otherwise problematic behavior, and they were not happy with the way that I tried to get them off the board. Lesson learned on that.

There it is. They were ‘not happy’ with the way that he tried to get them off the board. I thank him for the candor that he was indeed trying to remove not only Helen Toner but various board members.

I do think this was primary. Why were they not happy, Altman? What did you do?

From what we know, it seems likely he lied to board members about each other in order to engineer a board majority.

Altman also outright says this:

I don’t think I was doing things that were sneaky. I think the most I would say is, in the spirit of moving really fast, the board did not understand the full picture.

That seems very clearly false. By all accounts, however much farther than sneaky Altman did or did not go, Altman was absolutely being sneaky.

He also later mentions the issues with the OpenAI startup fund, where his explanation seems at best rather disingenuous and, dare I say it, sneaky.

Here is how he attempts to address all the high profile departures:

Sam Altman (in Reflections): Some of the twists have been joyful; some have been hard. It’s been fun watching a steady stream of research miracles occur, and a lot of naysayers have become true believers. We’ve also seen some colleagues split off and become competitors. Teams tend to turn over as they scale, and OpenAI scales really fast.

I think some of this is unavoidable—startups usually see a lot of turnover at each new major level of scale, and at OpenAI numbers go up by orders of magnitude every few months.

The last two years have been like a decade at a normal company. When any company grows and evolves so fast, interests naturally diverge. And when any company in an important industry is in the lead, lots of people attack it for all sorts of reasons, especially when they are trying to compete with it.

I agree that some of it was unavoidable and inevitable. I do not think this addresses people’s main concern, which is that OpenAI has lost so many of its highest level people, particularly over the last year, including almost all of its high-level safety researchers all the way up to the cofounder level.

It is related to this claim, which I found a bit disingenuous:

Sam Altman: The pitch was just come build AGI. And the reason it worked—I cannot overstate how heretical it was at the time to say we’re gonna build AGI. So you filter out 99% of the world, and you only get the really talented, original thinkers. And that’s really powerful.

I agree that was a powerful pitch.

But we know from the leaked documents, and we know from many people’s reports, that this was not the entire pitch. The pitch for OpenAI was that AGI would be built safely, and that Google DeepMind could not be trusted to be the first to do so. The pitch was that they would ensure that AGI benefited the world, that it was a non-profit, that it cared deeply about safety.

Many of those who left have said that these elements were key reasons they chose to join OpenAI. Altman is now trying to rewrite history to ignore these promises, and pretend that the vision was ‘build AGI/ASI’ rather than ‘build AGI/ASI safely and ensure it benefits humanity.’

I also found his ‘I expected ChatGPT to go well right from the start’ interesting. If Altman did expect it to do well and, in his words, he ‘forced’ people to ship it when they didn’t want to because they thought it wasn’t ready, that provides different color than the traditional story.

It also plays into this from the interview:

There was this whole thing of, like, “Sam didn’t even tell the board that he was gonna launch ChatGPT.” And I have a different memory and interpretation of that. But what is true is I definitely was not like, “We’re gonna launch this thing that is gonna be a huge deal.”

It sounds like Altman is claiming he did think it was going to be a big deal, although of course no one expected the rocket to the moon that we got.

Then he says how much of a mess the Battle of the Board left in its wake:

I totally was [traumatized]. The hardest part of it was not going through it, because you can do a lot on a four-day adrenaline rush. And it was very heartwarming to see the company and kind of my broader community support me.

But then very quickly it was over, and I had a complete mess on my hands. And it got worse every day. It was like another government investigation, another old board member leaking fake news to the press.

And all those people that I feel like really f—ed me and f—ed the company were gone, and now I had to clean up their mess. It was about this time of year [December], actually, so it gets dark at like 4:45 p.m., and it’s cold and rainy, and I would be walking through my house alone at night just, like, f—ing depressed and tired.

And it felt so unfair. It was just a crazy thing to have to go through and then have no time to recover, because the house was on fire.

Some combination of Altman and his allies clearly worked hard to successfully spread fake news during the crisis, placing it in multiple major media outlets, in order to influence the narrative and the ultimate resolution. A lot of this involved publicly threatening (and bluffing) that if they did not get unconditional surrender within deadlines on the order of a day, they would end OpenAI.

Meanwhile, the Board made the fatal mistake of not telling its side of the story, out of some combination of legal and other fears and concerns, and not wanting to ultimately destroy OpenAI. Then, at Altman’s insistence, those involved left. And then Altman swept the entire ‘investigation’ under the rug permanently.

Altman then has the audacity now to turn around and complain about what little the board said and leaked afterwards, calling it ‘fake news’ without details, and saying how they ‘f—ed’ him and the company and were ‘gone’ and now he had to ‘clean up their mess.’

What does he actually say about safety and existential risk in Reflections? Only this:

We continue to believe that the best way to make an AI system safe is by iteratively and gradually releasing it into the world, giving society time to adapt and co-evolve with the technology, learning from experience, and continuing to make the technology safer.

We believe in the importance of being world leaders on safety and alignment research, and in guiding that research with feedback from real world applications.

Then in the interview, he gets asked point blank:

Q: Has your sense of what the dangers actually might be evolved?

A: I still have roughly the same short-, medium- and long-term risk profiles. I still expect that on cybersecurity and bio stuff, we’ll see serious, or potentially serious, short-term issues that need mitigation.

Long term, as you think about a system that really just has incredible capability, there’s risks that are probably hard to precisely imagine and model. But I can simultaneously think that these risks are real and also believe that the only way to appropriately address them is to ship product and learn.

I know that anyone who previously had a self-identified ‘Eliezer Yudkowsky fan fiction Twitter account’ knows better than to think all you can say about long term risks is ‘ship products and learn.’

I don’t see the actions to back up even these words. Nor would I expect, if they truly believed this, for this short generic statement to be the only mention of the subject.

How can you reflect on the past nine years, say you have a direct path to AGI (as he will say later on), get asked point blank about the risks, and say only this about the risks involved? The silence is deafening.

I also flat out do not think you can solve the problems exclusively through this approach. The iterative development strategy has its safety and adaptation advantages. It also has disadvantages: it drives the race forward, and it keeps too many people from noticing what is happening in front of them via a ‘boiling the frog’ issue. My guess is it has been net good for safety versus not doing it, at least up until this point.

That doesn’t mean you can solve the problem of alignment of superintelligent systems primarily by reacting to problems you observe in present systems. I do not believe the problems we are about to face will work that way.

And even if we are in such a fortunate world that they do work that way? We have not been given reason to trust that OpenAI is serious about it.

Getting back to the whole ‘vision thing’:

Our vision won’t change; our tactics will continue to evolve.

I suppose if ‘vision’ is simply ‘build AGI/ASI’ and everything else is tactics, then sure?

I do not think that was the entirety of the original vision, although it was part of it.

That is indeed the entire vision now. And they’re claiming they know how to do it.

We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies. We continue to believe that iteratively putting great tools in the hands of people leads to great, broadly-distributed outcomes.

We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future. With superintelligence, we can do anything else. Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity.

This sounds like science fiction right now, and somewhat crazy to even talk about it. That’s alright—we’ve been there before and we’re OK with being there again. We’re pretty confident that in the next few years, everyone will see what we see, and that the need to act with great care, while still maximizing broad benefit and empowerment, is so important. Given the possibilities of our work, OpenAI cannot be a normal company.

Those who have ears, listen. This is what they plan on doing.

They are predicting AI workers ‘joining the workforce’ in earnest this year, with full AGI not far in the future, followed shortly by ASI. They think ‘4’ is conservative.

What are the rest of us going to do, or not do, about this?

I can’t help but notice Altman is trying to turn OpenAI into a normal company.

Why should we trust that structure in the very situation Altman himself describes? If the basic thesis is that we should put our trust in Altman personally, why does he think he has earned that trust?