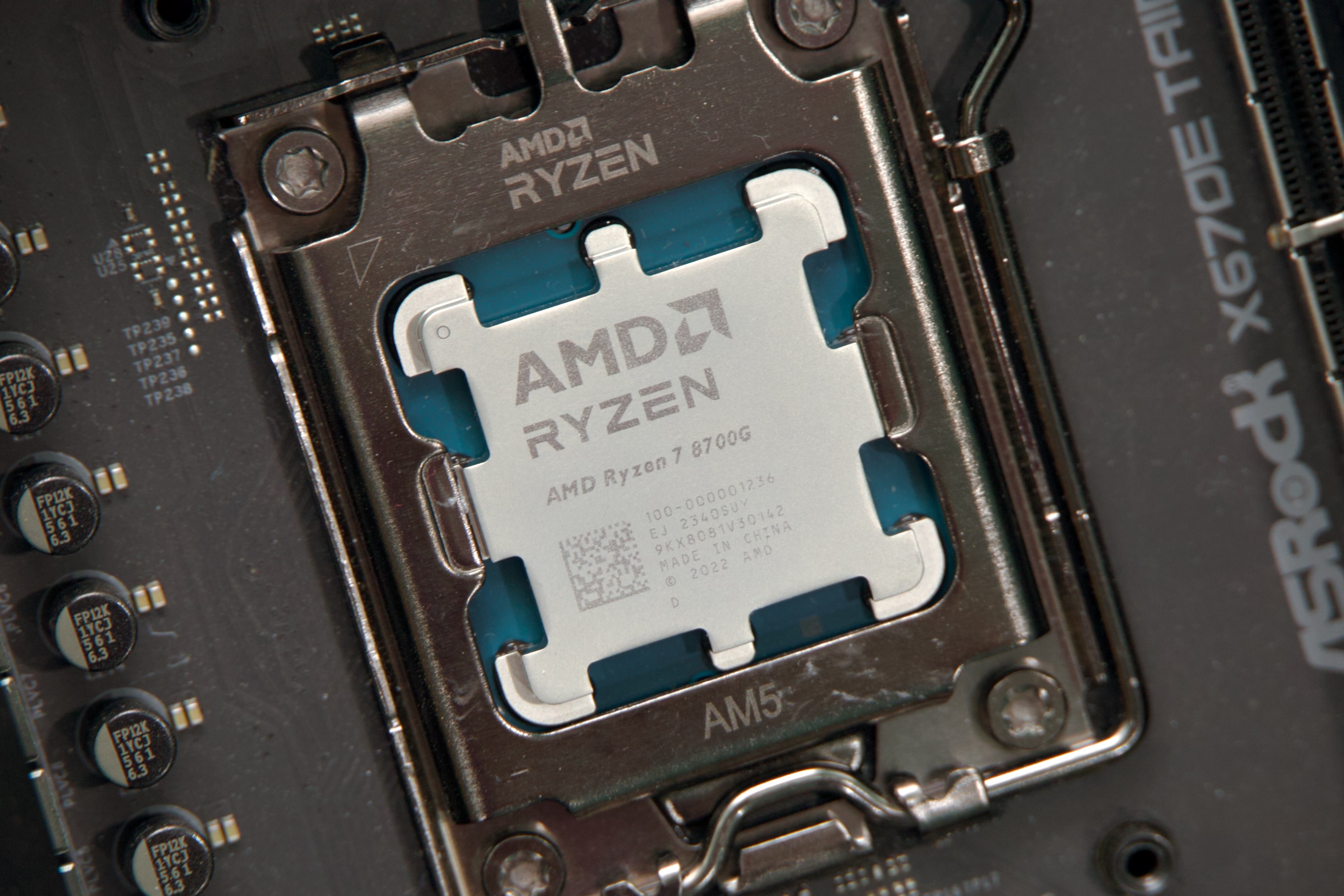

AMD wins massive AI chip deal from OpenAI with stock sweetener

As part of the arrangement, AMD will allow OpenAI to purchase up to 160 million AMD shares at 1 cent each over the course of the chip deal.

OpenAI diversifies its chip supply

With demand for AI compute growing rapidly, companies like OpenAI have been looking for secondary supply lines and sources of additional computing capacity. The AMD partnership is part of the company’s wider effort to secure sufficient computing power for its AI operations. In September, Nvidia announced an investment of up to $100 billion in OpenAI that included supplying at least 10 gigawatts of Nvidia systems. OpenAI plans to deploy a gigawatt of Nvidia’s next-generation Vera Rubin chips in late 2026.

OpenAI has worked with AMD for years, according to Reuters, providing input on the design of older generations of AI chips such as the MI300X. The new agreement calls for deploying the equivalent of 6 gigawatts of computing power using AMD chips over multiple years.

Beyond working with chip suppliers, OpenAI is widely reported to be developing its own silicon for AI applications and has partnered with Broadcom, as we reported in February. A person familiar with the matter told Reuters the AMD deal does not change OpenAI’s ongoing compute plans, including its chip development effort or its partnership with Microsoft.
